Image Processing: Stitching --- Image Stitching with OpenCV

//

#include "stdafx.h"

#include <iostream>

#include "opencv2/core/core.hpp"

#include "opencv2/features2d/features2d.hpp"

#include "opencv2/highgui/highgui.hpp"

#include "opencv2/nonfree/features2d.hpp"

using namespace std;

using namespace cv;

int main( int argc, char** argv )

{

Mat img_1 = imread( "img_opencv_1.png", 0 );

Mat img_2 = imread( "img_opencv_2.png", 0 );

if( !img_1.data || !img_2.data )

{ std::cout<< " --(!) Error reading images " << std::endl; return -1; }

//-- Step 1: Detect the keypoints using SURF Detector

int minHessian = 10000;

SurfFeatureDetector detector( minHessian );

std::vector<KeyPoint> keypoints_1, keypoints_2;

detector.detect( img_1, keypoints_1 );

detector.detect( img_2, keypoints_2 );

//-- Draw keypoints

Mat img_keypoints_1; Mat img_keypoints_2;

drawKeypoints( img_1, keypoints_1, img_keypoints_1, Scalar::all(-1), DrawMatchesFlags::DEFAULT );

drawKeypoints( img_2, keypoints_2, img_keypoints_2, Scalar::all(-1), DrawMatchesFlags::DEFAULT );

//-- Step 2: Calculate descriptors (feature vectors)

SurfDescriptorExtractor extractor;

Mat descriptors_1, descriptors_2;

extractor.compute( img_1, keypoints_1, descriptors_1 );

extractor.compute( img_2, keypoints_2, descriptors_2 );

//-- Step 3: Matching descriptor vectors with a brute force matcher

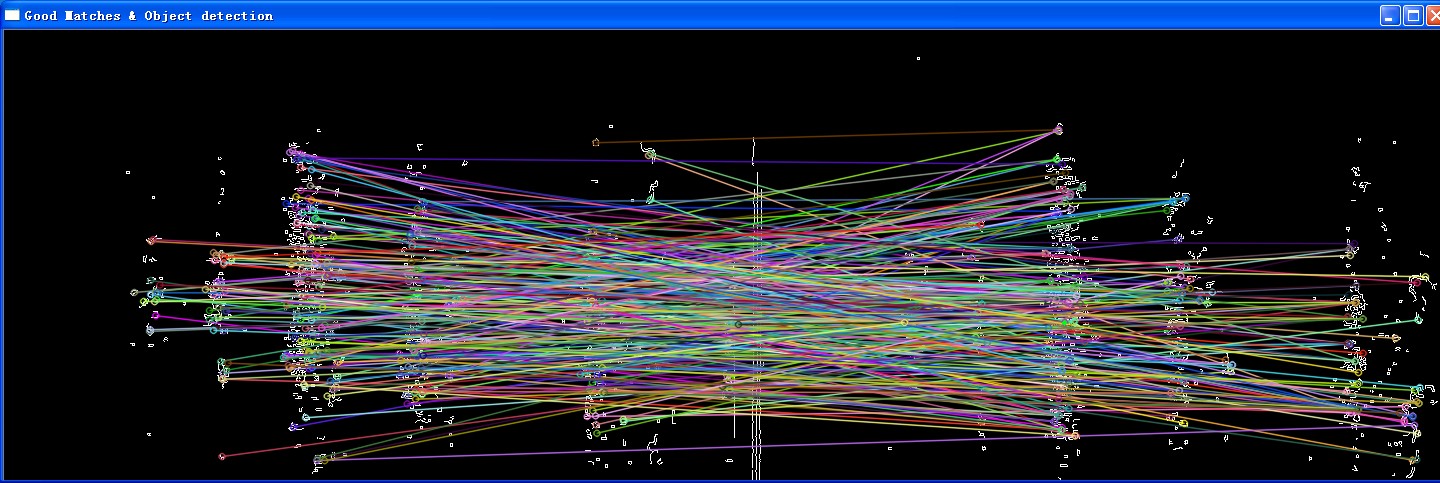

BFMatcher matcher(NORM_L2);

std::vector< DMatch > matches;

matcher.match( descriptors_1, descriptors_2, matches );

//-- Draw matches

Mat img_matches;

drawMatches( img_1, keypoints_1, img_2, keypoints_2, matches, img_matches );

//-- Show detected (drawn) keypoints

imshow("Keypoints 1", img_keypoints_1 );

imshow("Keypoints 2", img_keypoints_2 );

//-- Show detected matches

imshow("Matches", img_matches );

waitKey(0);

return 0;

}
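The matching step above relies on `BFMatcher` with `NORM_L2`. As a rough illustration of what brute-force matching means, the following stdlib-only sketch pairs every query descriptor with its nearest train descriptor by Euclidean distance. `SimpleMatch`, `l2Distance` and `bruteForceMatch` are hypothetical names introduced here for illustration; they are not part of OpenCV, and the real matcher is considerably more optimized.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <limits>
#include <vector>

// Hypothetical stand-in for cv::DMatch: indices into the two descriptor
// sets plus the L2 distance between the matched descriptors.
struct SimpleMatch {
    int queryIdx;
    int trainIdx;
    float distance;
};

// Euclidean (L2) distance between two descriptors of equal length.
float l2Distance(const std::vector<float>& a, const std::vector<float>& b) {
    assert(a.size() == b.size());
    float sum = 0.0f;
    for (std::size_t k = 0; k < a.size(); ++k) {
        const float d = a[k] - b[k];
        sum += d * d;
    }
    return std::sqrt(sum);
}

// For every query descriptor, keep the train descriptor with the smallest
// distance. Returns one match per query (none if the train set is empty).
std::vector<SimpleMatch> bruteForceMatch(
        const std::vector<std::vector<float> >& query,
        const std::vector<std::vector<float> >& train) {
    std::vector<SimpleMatch> matches;
    if (train.empty()) return matches;
    for (std::size_t q = 0; q < query.size(); ++q) {
        SimpleMatch best = { static_cast<int>(q), -1,
                             std::numeric_limits<float>::max() };
        for (std::size_t t = 0; t < train.size(); ++t) {
            const float d = l2Distance(query[q], train[t]);
            if (d < best.distance) {
                best.trainIdx = static_cast<int>(t);
                best.distance = d;
            }
        }
        matches.push_back(best);
    }
    return matches;
}
```

The cost grows with the product of the two descriptor counts, which is presumably why the later programs switch to `FlannBasedMatcher`.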

//

#include "stdafx.h"

#include <iostream>

#include "opencv2/core/core.hpp"

#include "opencv2/features2d/features2d.hpp"

#include "opencv2/highgui/highgui.hpp"

#include "opencv2/nonfree/features2d.hpp"

using namespace std;

using namespace cv;

int main( int argc, char** argv )

{

Mat img_1 = imread( "img_opencv_1.png", 0 );

Mat img_2 = imread( "img_opencv_2.png", 0 );

if( !img_1.data || !img_2.data )

{ std::cout<< " --(!) Error reading images " << std::endl; return -1; }

//-- Step 1: Detect the keypoints using SURF Detector

int minHessian = 400;

SurfFeatureDetector detector( minHessian );

std::vector<KeyPoint> keypoints_1, keypoints_2;

detector.detect( img_1, keypoints_1 );

detector.detect( img_2, keypoints_2 );

//-- Draw keypoints

Mat img_keypoints_1; Mat img_keypoints_2;

drawKeypoints( img_1, keypoints_1, img_keypoints_1, Scalar::all(-1), DrawMatchesFlags::DEFAULT );

drawKeypoints( img_2, keypoints_2, img_keypoints_2, Scalar::all(-1), DrawMatchesFlags::DEFAULT );

//-- Step 2: Calculate descriptors (feature vectors)

SurfDescriptorExtractor extractor;

Mat descriptors_1, descriptors_2;

extractor.compute( img_1, keypoints_1, descriptors_1 );

extractor.compute( img_2, keypoints_2, descriptors_2 );

//-- Step 3: Matching descriptor vectors using FLANN matcher

FlannBasedMatcher matcher;

std::vector< DMatch > matches;

matcher.match( descriptors_1, descriptors_2, matches );

double max_dist = 0; double min_dist = 100;

//-- Quick calculation of max and min distances between keypoints

for( int i = 0; i < descriptors_1.rows; i++ )

{ double dist = matches[i].distance;

if( dist < min_dist ) min_dist = dist;

if( dist > max_dist ) max_dist = dist;

}

printf("-- Max dist : %f \n", max_dist );

printf("-- Min dist : %f \n", min_dist );

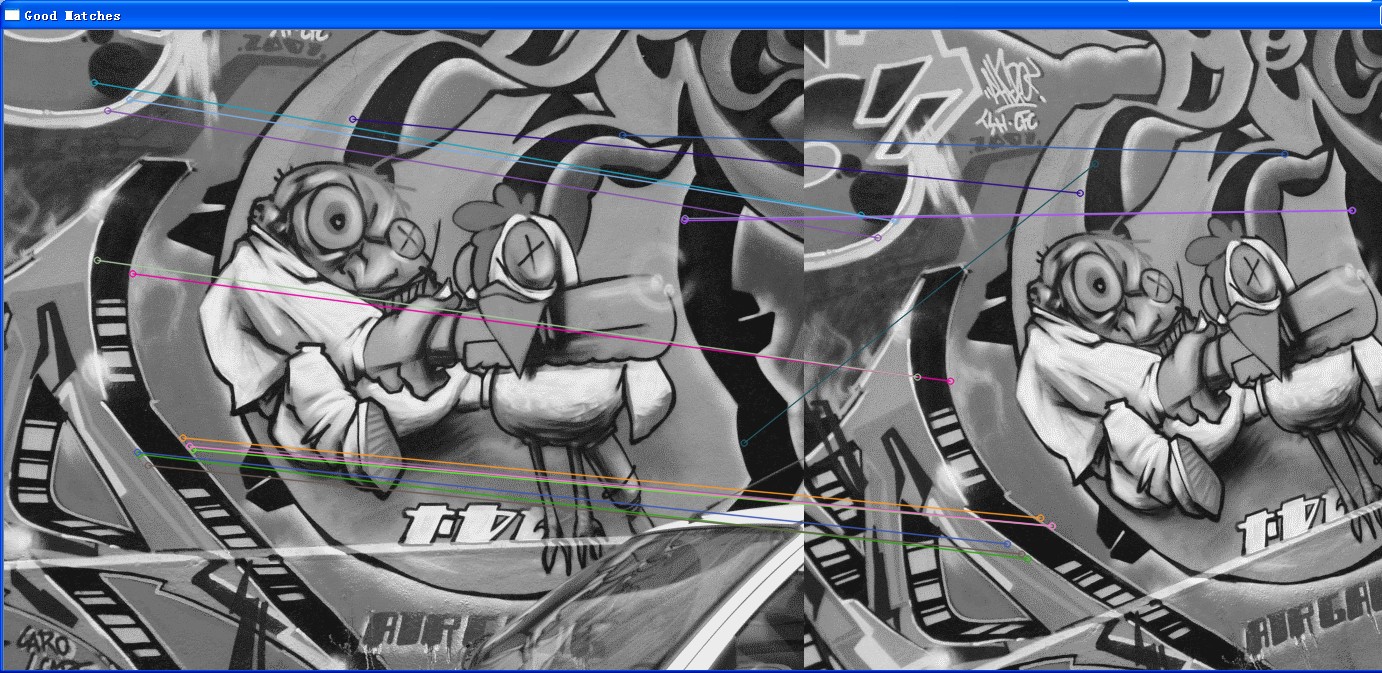

//-- Draw only "good" matches (i.e. whose distance is less than 2*min_dist,

//-- or a small arbitrary value ( 0.02 ) in the event that min_dist is very

//-- small)

//-- PS.- radiusMatch can also be used here.

std::vector< DMatch > good_matches;

for( int i = 0; i < descriptors_1.rows; i++ )

{ if( matches[i].distance <= max(2*min_dist, 0.02) )

{ good_matches.push_back( matches[i]); }

}

//-- Draw only "good" matches

Mat img_matches;

drawMatches( img_1, keypoints_1, img_2, keypoints_2,

good_matches, img_matches, Scalar::all(-1), Scalar::all(-1),

vector<char>(), DrawMatchesFlags::NOT_DRAW_SINGLE_POINTS );

//-- Show detected matches

imshow( "Good Matches", img_matches );

for( int i = 0; i < (int)good_matches.size(); i++ )

{ printf( "-- Good Match [%d] Keypoint 1: %d -- Keypoint 2: %d \n", i, good_matches[i].queryIdx, good_matches[i].trainIdx ); }

waitKey(0);

return 0;

}
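The "good match" rule used above (keep a match if its distance is at most `max(2*min_dist, 0.02)`) can be isolated from OpenCV as a small helper. The sketch below is only an illustration under stated assumptions: `selectGoodMatches` is a hypothetical name, it works on a plain list of distances, and it takes the minimum directly from the data instead of starting from the hard-coded 100 used in the program.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// Returns the indices of all matches whose distance is at most
// max(factor * min_distance, floorValue). The floor guards against a
// minimum distance that is (nearly) zero.
std::vector<std::size_t> selectGoodMatches(const std::vector<float>& distances,
                                           float factor, float floorValue) {
    assert(factor > 0.0f);
    std::vector<std::size_t> good;
    if (distances.empty()) return good;
    const float minDist =
        *std::min_element(distances.begin(), distances.end());
    const float threshold = std::max(factor * minDist, floorValue);
    for (std::size_t i = 0; i < distances.size(); ++i) {
        if (distances[i] <= threshold) good.push_back(i);
    }
    return good;
}
```

With `factor = 2` and `floorValue = 0.02f` this mirrors the filter in the program above; the later programs use a factor of 3 with no floor. Either threshold is a heuristic, so the right values likely depend on the image pair.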

//

#include "stdafx.h"

#include <iostream>

#include "opencv2/core/core.hpp"

#include "opencv2/features2d/features2d.hpp"

#include "opencv2/highgui/highgui.hpp"

#include "opencv2/nonfree/features2d.hpp"

#include "opencv2/calib3d/calib3d.hpp"

using namespace std;

using namespace cv;

int main( int argc, char** argv )

{

Mat img_1 = imread( "img_opencv_1.png", 0 );

Mat img_2 = imread( "img_opencv_2.png", 0 );

if( !img_1.data || !img_2.data )

{ std::cout<< " --(!) Error reading images " << std::endl; return -1; }

//-- Step 1: Detect the keypoints using SURF Detector

int minHessian = 400;

SurfFeatureDetector detector( minHessian );

std::vector<KeyPoint> keypoints_1, keypoints_2;

detector.detect( img_1, keypoints_1 );

detector.detect( img_2, keypoints_2 );

//-- Draw keypoints

Mat img_keypoints_1; Mat img_keypoints_2;

drawKeypoints( img_1, keypoints_1, img_keypoints_1, Scalar::all(-1), DrawMatchesFlags::DEFAULT );

drawKeypoints( img_2, keypoints_2, img_keypoints_2, Scalar::all(-1), DrawMatchesFlags::DEFAULT );

//-- Step 2: Calculate descriptors (feature vectors)

SurfDescriptorExtractor extractor;

Mat descriptors_1, descriptors_2;

extractor.compute( img_1, keypoints_1, descriptors_1 );

extractor.compute( img_2, keypoints_2, descriptors_2 );

//-- Step 3: Matching descriptor vectors using FLANN matcher

FlannBasedMatcher matcher;

std::vector< DMatch > matches;

matcher.match( descriptors_1, descriptors_2, matches );

double max_dist = 0; double min_dist = 100;

//-- Quick calculation of max and min distances between keypoints

for( int i = 0; i < descriptors_1.rows; i++ )

{ double dist = matches[i].distance;

if( dist < min_dist ) min_dist = dist;

if( dist > max_dist ) max_dist = dist;

}

printf("-- Max dist : %f \n", max_dist );

printf("-- Min dist : %f \n", min_dist );

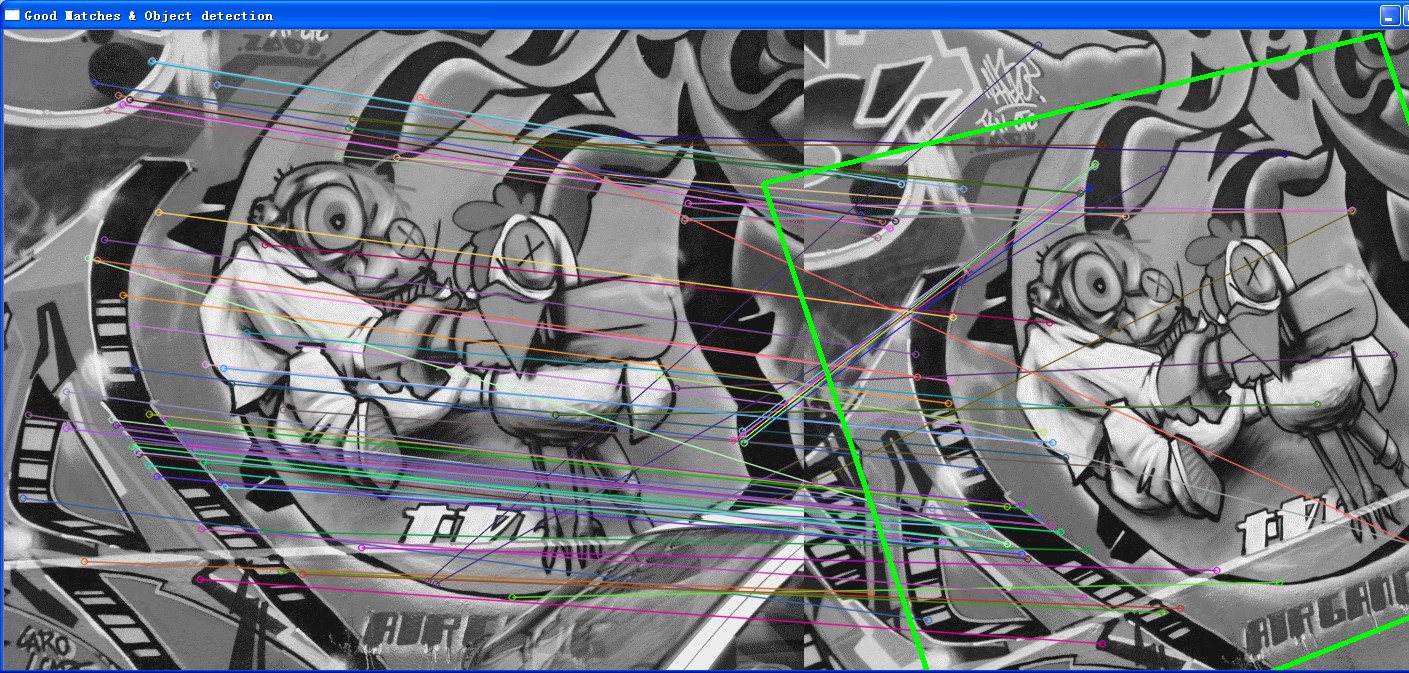

//-- Draw only "good" matches (i.e. whose distance is less than 2*min_dist,

//-- or a small arbitrary value ( 0.02 ) in the event that min_dist is very

//-- small)

//-- PS.- radiusMatch can also be used here.

std::vector< DMatch > good_matches;

for( int i = 0; i < descriptors_1.rows; i++ )

{ if( matches[i].distance <= /*max(2*min_dist, 0.02)*/3*min_dist )

{ good_matches.push_back( matches[i]); }

}

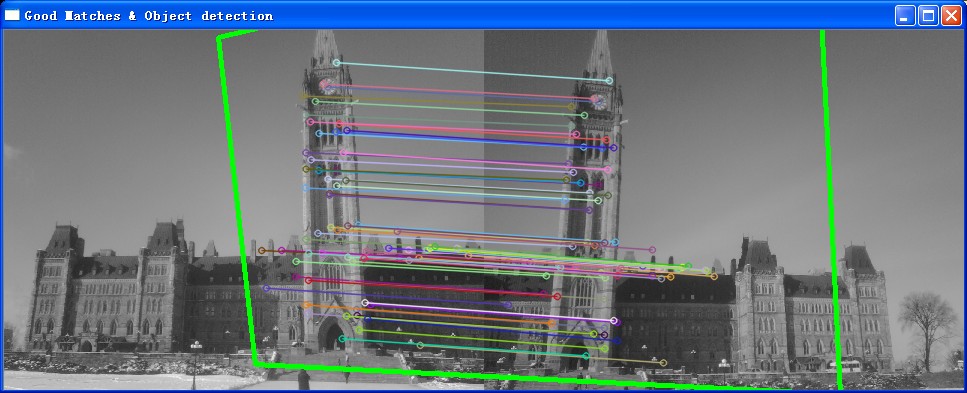

//-- Draw only "good" matches

Mat img_matches;

drawMatches( img_1, keypoints_1, img_2, keypoints_2,

good_matches, img_matches, Scalar::all(-1), Scalar::all(-1),

vector<char>(), DrawMatchesFlags::NOT_DRAW_SINGLE_POINTS );

//-- Localize the object from img_1 in img_2

std::vector<Point2f> obj;

std::vector<Point2f> scene;

for( int i = 0; i < (int)good_matches.size(); i++ )

{

obj.push_back( keypoints_1[ good_matches[i].queryIdx ].pt );

scene.push_back( keypoints_2[ good_matches[i].trainIdx ].pt );

printf( "-- Good Match [%d] Keypoint 1: %d -- Keypoint 2: %d \n", i, good_matches[i].queryIdx, good_matches[i].trainIdx );

}

//-- Call findHomography with RANSAC directly

Mat H = findHomography( obj, scene, CV_RANSAC );

//-- Get the corners from the image_1 ( the object to be "detected" )

std::vector<Point2f> obj_corners(4);

obj_corners[0] = Point(0,0); obj_corners[1] = Point( img_1.cols, 0 );

obj_corners[2] = Point( img_1.cols, img_1.rows ); obj_corners[3] = Point( 0, img_1.rows );

std::vector<Point2f> scene_corners(4);

perspectiveTransform( obj_corners, scene_corners, H);

//-- Draw lines between the corners (the mapped object in the scene - image_2 )

Point2f offset( (float)img_1.cols, 0);

line( img_matches, scene_corners[0] + offset, scene_corners[1] + offset, Scalar(0, 255, 0), 4 );

line( img_matches, scene_corners[1] + offset, scene_corners[2] + offset, Scalar( 0, 255, 0), 4 );

line( img_matches, scene_corners[2] + offset, scene_corners[3] + offset, Scalar( 0, 255, 0), 4 );

line( img_matches, scene_corners[3] + offset, scene_corners[0] + offset, Scalar( 0, 255, 0), 4 );

//-- Show detected matches

imshow( "Good Matches & Object detection", img_matches );

waitKey(0);

return 0;

}

#include "stdafx.h"

#include <iostream>

#include "opencv2/core/core.hpp"

#include "opencv2/imgproc/imgproc.hpp"

#include "opencv2/features2d/features2d.hpp"

#include "opencv2/highgui/highgui.hpp"

#include "opencv2/nonfree/features2d.hpp"

#include "opencv2/calib3d/calib3d.hpp"

using namespace std;

using namespace cv;

// Euclidean distance between two Point2f values

float fDistance(Point2f p1,Point2f p2)

{

float ftmp = (p1.x-p2.x)*(p1.x-p2.x) + (p1.y-p2.y)*(p1.y-p2.y);

ftmp = sqrt((float)ftmp);

return ftmp;

}

int main( int argc, char** argv )

{

Mat img_1 = imread( "img_opencv_1.png", 0 );

Mat img_2 = imread( "img_opencv_2.png", 0 );

//// Added for the continuous-casting images

//img_1 = img_1(Rect(20,0,img_1.cols-40,img_1.rows));

//img_2 = img_2(Rect(20,0,img_1.cols-40,img_1.rows));

// cv::Canny(img_1,img_1,100,200);

// cv::Canny(img_2,img_2,100,200);

if( !img_1.data || !img_2.data )

{ std::cout<< " --(!) Error reading images " << std::endl; return -1; }

//-- Step 1: Detect keypoints with SURF

int minHessian = 400;

SurfFeatureDetector detector( minHessian );

std::vector<KeyPoint> keypoints_1, keypoints_2;

detector.detect( img_1, keypoints_1 );

detector.detect( img_2, keypoints_2 );

//-- Step 2: Compute SURF descriptors

SurfDescriptorExtractor extractor;

Mat descriptors_1, descriptors_2;

extractor.compute( img_1, keypoints_1, descriptors_1 );

extractor.compute( img_2, keypoints_2, descriptors_2 );

//-- Step 3: Match descriptors

FlannBasedMatcher matcher; // BFMatcher would do brute-force matching instead

std::vector< DMatch > matches;

matcher.match( descriptors_1, descriptors_2, matches );

// Find the maximum and minimum match distances

double max_dist = 0; double min_dist = 100;

for( int i = 0; i < descriptors_1.rows; i++ )

{

double dist = matches[i].distance;

if( dist < min_dist ) min_dist = dist;

if( dist > max_dist ) max_dist = dist;

}

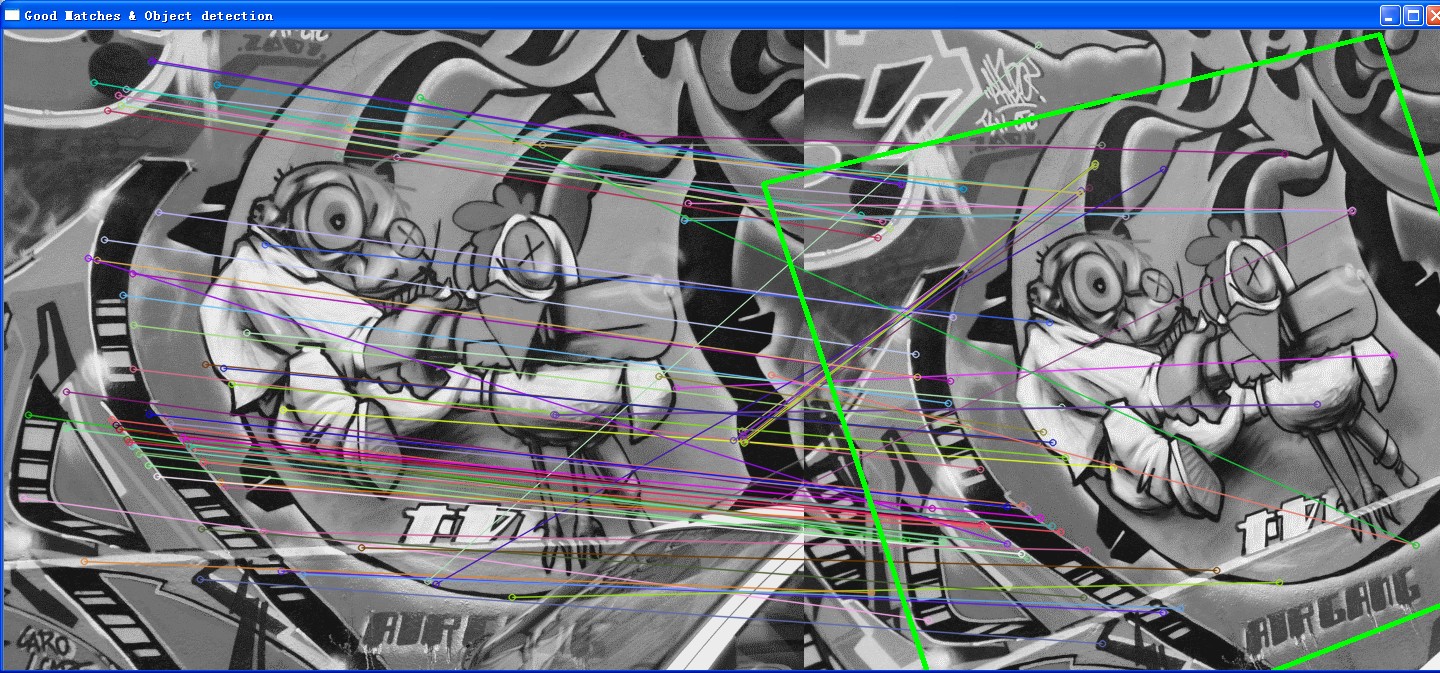

std::vector< DMatch > good_matches;

for( int i = 0; i < descriptors_1.rows; i++ )

{

if( matches[i].distance <= 3*min_dist ) // threshold chosen here: 3 * min_dist

{

good_matches.push_back( matches[i]);

}

}

// Draw the "good" matches

Mat img_matches;

drawMatches( img_1, keypoints_1, img_2, keypoints_2,

good_matches, img_matches, Scalar::all(-1), Scalar::all(-1),

vector<char>(), DrawMatchesFlags::NOT_DRAW_SINGLE_POINTS );

//-- Localize the object from img_1 in img_2

std::vector<Point2f> obj;

std::vector<Point2f> scene;

for( int i = 0; i < (int)good_matches.size(); i++ )

{

obj.push_back( keypoints_1[ good_matches[i].queryIdx ].pt );

scene.push_back( keypoints_2[ good_matches[i].trainIdx ].pt );

}

//-- Estimate the homography directly with RANSAC

Mat H = findHomography( obj, scene, CV_RANSAC );

//-- Get the corners from the image_1 ( the object to be "detected" )

std::vector<Point2f> obj_corners(4);

obj_corners[0] = Point(0,0);

obj_corners[1] = Point( img_1.cols, 0 );

obj_corners[2] = Point( img_1.cols, img_1.rows );

obj_corners[3] = Point( 0, img_1.rows );

std::vector<Point2f> scene_corners(4);

perspectiveTransform( obj_corners, scene_corners, H);

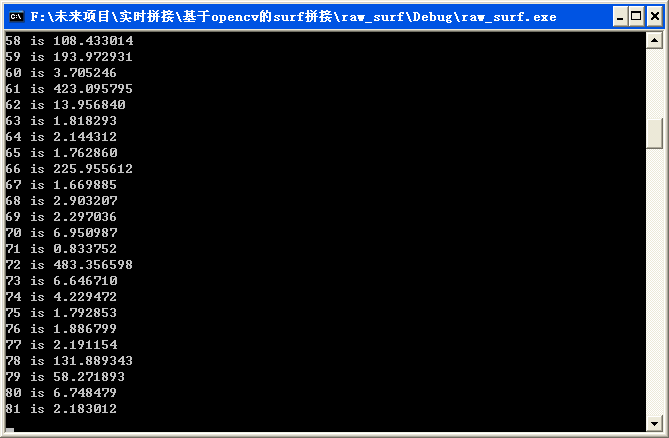

// Print each match's reprojection error (to tell inliers from outliers)

std::vector<Point2f> scene_test(obj.size());

perspectiveTransform(obj,scene_test,H);

for (int i=0;i<(int)scene_test.size();i++)

{

printf("%d is %f \n",i+1,fDistance(scene[i],scene_test[i]));

}

//-- Draw lines between the corners (the mapped object in the scene - image_2 )

Point2f offset( (float)img_1.cols, 0);

line( img_matches, scene_corners[0] + offset, scene_corners[1] + offset, Scalar(0, 255, 0), 4 );

line( img_matches, scene_corners[1] + offset, scene_corners[2] + offset, Scalar( 0, 255, 0), 4 );

line( img_matches, scene_corners[2] + offset, scene_corners[3] + offset, Scalar( 0, 255, 0), 4 );

line( img_matches, scene_corners[3] + offset, scene_corners[0] + offset, Scalar( 0, 255, 0), 4 );

//-- Show detected matches

imshow( "Good Matches & Object detection", img_matches );

waitKey(0);

return 0;

}
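The program above projects the matched points through `H` and prints the distance to their partners in the second image. The following stdlib-only sketch shows the same computation without OpenCV: applying a 3x3 homography (including the division by the homogeneous coordinate) and counting matches whose reprojection error stays under a threshold. `Pt`, `applyHomography`, `reprojectionError` and `countInliers` are hypothetical names, and the row-major array layout is an assumption of this sketch.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

struct Pt { double x; double y; };

// Apply a 3x3 homography stored row-major in H[0..8] to a point,
// dividing by the homogeneous coordinate w.
Pt applyHomography(const double H[9], Pt p) {
    const double w = H[6] * p.x + H[7] * p.y + H[8];
    assert(std::fabs(w) > 1e-12);
    Pt out = { (H[0] * p.x + H[1] * p.y + H[2]) / w,
               (H[3] * p.x + H[4] * p.y + H[5]) / w };
    return out;
}

// Distance between the projected source point and its matched destination.
double reprojectionError(const double H[9], Pt src, Pt dst) {
    const Pt m = applyHomography(H, src);
    return std::sqrt((m.x - dst.x) * (m.x - dst.x) +
                     (m.y - dst.y) * (m.y - dst.y));
}

// Count the matches whose reprojection error is at most maxError pixels.
std::size_t countInliers(const double H[9], const std::vector<Pt>& src,
                         const std::vector<Pt>& dst, double maxError) {
    assert(src.size() == dst.size());
    std::size_t n = 0;
    for (std::size_t i = 0; i < src.size(); ++i) {
        if (reprojectionError(H, src[i], dst[i]) <= maxError) ++n;
    }
    return n;
}
```

A threshold of a few pixels is a common choice; which value suits a given image pair is a judgment call rather than something the program above specifies.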

//

#include "stdafx.h"

#include <iostream>

#include "opencv2/core/core.hpp"

#include "opencv2/imgproc/imgproc.hpp"

#include "opencv2/features2d/features2d.hpp"

#include "opencv2/highgui/highgui.hpp"

#include "opencv2/nonfree/features2d.hpp"

#include "opencv2/calib3d/calib3d.hpp"

using namespace std;

using namespace cv;

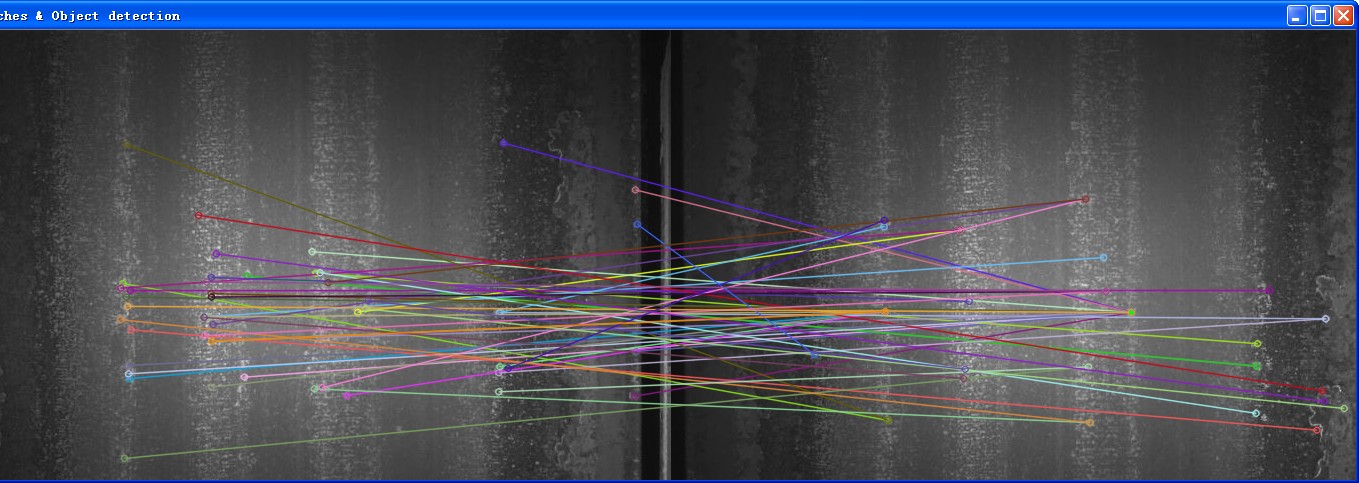

int main( int argc, char** argv )

{

Mat img_1 ;

Mat img_2 ;

Mat img_raw_1 = imread("c1.bmp");

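Mat img_raw_2 = imread("c3.bmp");

if( img_raw_1.empty() || img_raw_2.empty() )

{ std::cout<< " --(!) Error reading images " << std::endl; return -1; }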

cvtColor(img_raw_1,img_1,CV_BGR2GRAY);

cvtColor(img_raw_2,img_2,CV_BGR2GRAY);

//-- Step 1: Detect keypoints with SURF

int minHessian = 400;

SurfFeatureDetector detector( minHessian );

std::vector<KeyPoint> keypoints_1, keypoints_2;

detector.detect( img_1, keypoints_1 );

detector.detect( img_2, keypoints_2 );

//-- Step 2: Compute SURF descriptors

SurfDescriptorExtractor extractor;

Mat descriptors_1, descriptors_2;

extractor.compute( img_1, keypoints_1, descriptors_1 );

extractor.compute( img_2, keypoints_2, descriptors_2 );

//-- Step 3: Match descriptors

FlannBasedMatcher matcher; // BFMatcher would do brute-force matching instead

std::vector< DMatch > matches;

matcher.match( descriptors_1, descriptors_2, matches );

// Find the maximum and minimum match distances

double max_dist = 0; double min_dist = 100;

for( int i = 0; i < descriptors_1.rows; i++ )

{

double dist = matches[i].distance;

if( dist < min_dist ) min_dist = dist;

if( dist > max_dist ) max_dist = dist;

}

std::vector< DMatch > good_matches;

for( int i = 0; i < descriptors_1.rows; i++ )

{

if( matches[i].distance <= 3*min_dist ) // threshold chosen here: 3 * min_dist

{

good_matches.push_back( matches[i]);

}

}

//-- Localize the object from img_1 in img_2

std::vector<Point2f> obj;

std::vector<Point2f> scene;

for( int i = 0; i < (int)good_matches.size(); i++ )

{

// New frames are added onto the stitched image, so the left image is the scene and the right image is the obj

scene.push_back( keypoints_1[ good_matches[i].queryIdx ].pt );

obj.push_back( keypoints_2[ good_matches[i].trainIdx ].pt );

}

//-- Estimate the homography directly with RANSAC

Mat H = findHomography( obj, scene, CV_RANSAC );

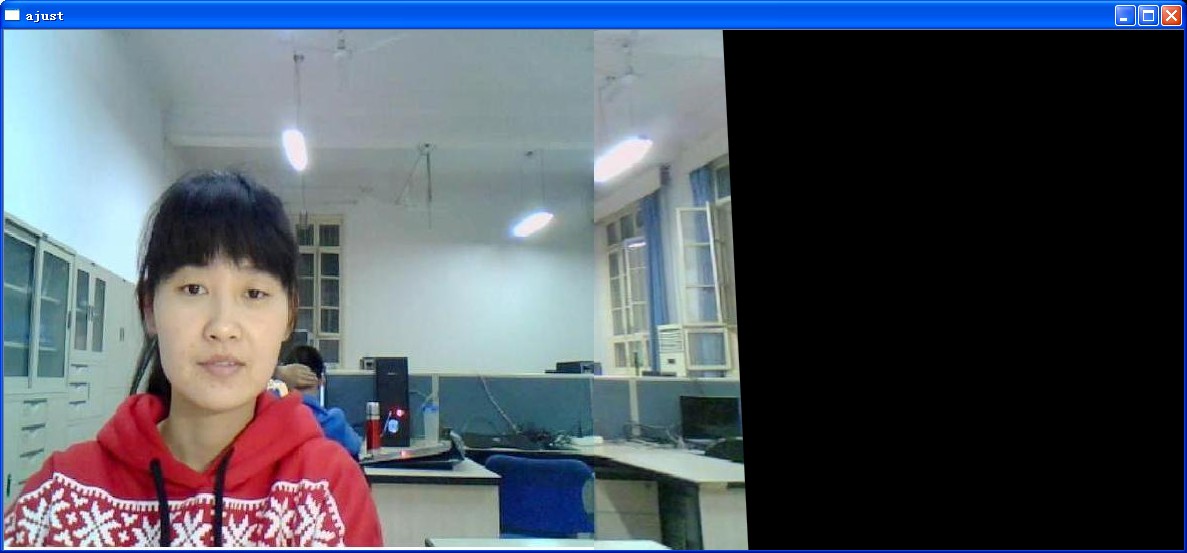

// Align the images

Mat result;

warpPerspective(img_raw_2,result,H,Size(2*img_2.cols,img_2.rows));

Mat half(result,cv::Rect(0,0,img_2.cols,img_2.rows));

img_raw_1.copyTo(half);

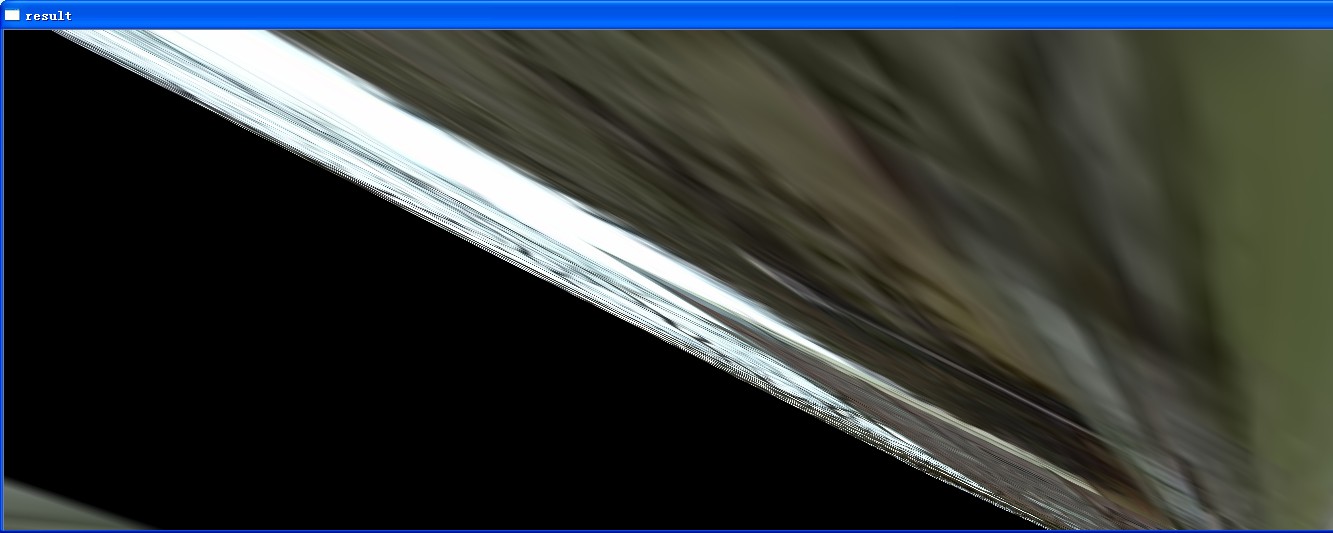

imshow("result",result);

waitKey(0);

return 0;

}

//

#include "stdafx.h"

#include <iostream>

#include "opencv2/core/core.hpp"

#include "opencv2/imgproc/imgproc.hpp"

#include "opencv2/features2d/features2d.hpp"

#include "opencv2/highgui/highgui.hpp"

#include "opencv2/nonfree/features2d.hpp"

#include "opencv2/calib3d/calib3d.hpp"

using namespace std;

using namespace cv;

int main( int argc, char** argv )

{

Mat img_1 ;

Mat img_2 ;

Mat img_raw_1 = imread("c1.bmp");

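Mat img_raw_2 = imread("c3.bmp");

if( img_raw_1.empty() || img_raw_2.empty() )

{ std::cout<< " --(!) Error reading images " << std::endl; return -1; }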

cvtColor(img_raw_1,img_1,CV_BGR2GRAY);

cvtColor(img_raw_2,img_2,CV_BGR2GRAY);

//-- Step 1: Detect keypoints with SURF

int minHessian = 400;

SurfFeatureDetector detector( minHessian );

std::vector<KeyPoint> keypoints_1, keypoints_2;

detector.detect( img_1, keypoints_1 );

detector.detect( img_2, keypoints_2 );

//-- Step 2: Compute SURF descriptors

SurfDescriptorExtractor extractor;

Mat descriptors_1, descriptors_2;

extractor.compute( img_1, keypoints_1, descriptors_1 );

extractor.compute( img_2, keypoints_2, descriptors_2 );

//-- Step 3: Match descriptors

FlannBasedMatcher matcher; // BFMatcher would do brute-force matching instead

std::vector< DMatch > matches;

matcher.match( descriptors_1, descriptors_2, matches );

// Find the maximum and minimum match distances

double max_dist = 0; double min_dist = 100;

for( int i = 0; i < descriptors_1.rows; i++ )

{

double dist = matches[i].distance;

if( dist < min_dist ) min_dist = dist;

if( dist > max_dist ) max_dist = dist;

}

std::vector< DMatch > good_matches;

for( int i = 0; i < descriptors_1.rows; i++ )

{

if( matches[i].distance <= 3*min_dist ) // threshold chosen here: 3 * min_dist

{

good_matches.push_back( matches[i]);

}

}

//-- Localize the object from img_1 in img_2

std::vector<Point2f> obj;

std::vector<Point2f> scene;

for( int i = 0; i < (int)good_matches.size(); i++ )

{

// New frames are added onto the stitched image, so the left image is the scene and the right image is the obj

scene.push_back( keypoints_1[ good_matches[i].queryIdx ].pt );

obj.push_back( keypoints_2[ good_matches[i].trainIdx ].pt );

}

//-- Estimate the homography directly with RANSAC

Mat H = findHomography( obj, scene, CV_RANSAC );

// Align the images

Mat result;

Mat resultback; // holds the new frame after warping by the homography

warpPerspective(img_raw_2,result,H,Size(2*img_2.cols,img_2.rows));

result.copyTo(resultback);

Mat half(result,cv::Rect(0,0,img_2.cols,img_2.rows));

img_raw_1.copyTo(half);

imshow("adjust",result);

// Fade-in/fade-out (linear) blending

Mat result_linerblend = result.clone();

double dblend = 0.0;

int ioffset =img_2.cols-100;

for (int i = 0;i<100;i++)

{

result_linerblend.col(ioffset+i) = result.col(ioffset+i)*(1-dblend) + resultback.col(ioffset+i)*dblend;

dblend = dblend +0.01;

}

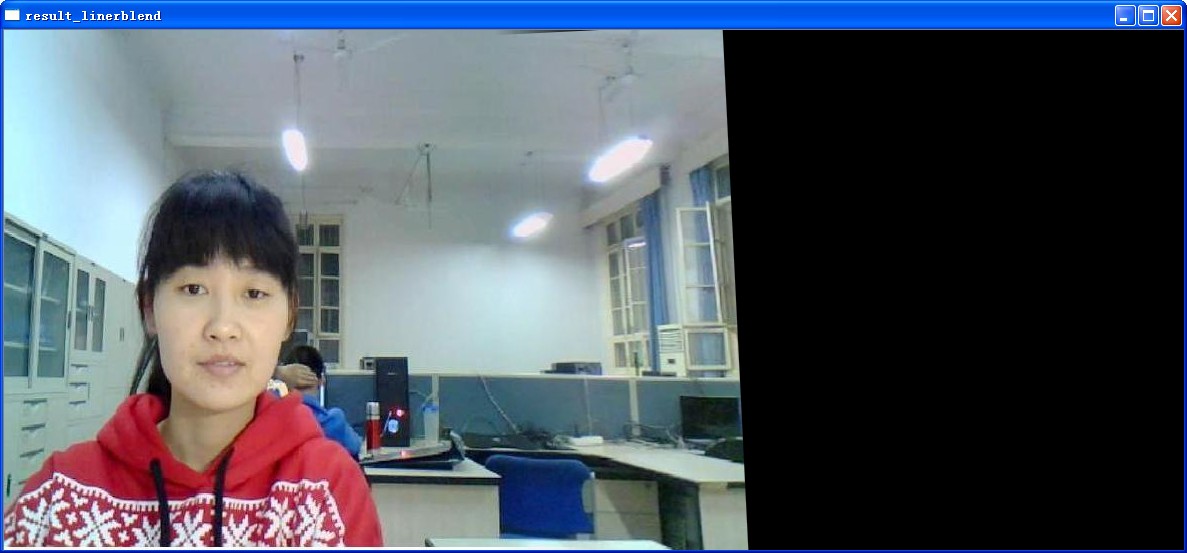

imshow("result_linerblend",result_linerblend);

// Maximum-value blending

Mat result_maxvalue = result.clone();

for (int i = 0;i<img_2.rows;i++)

{

for (int j=0;j<100;j++)

{

int iresult= result.at<Vec3b>(i,ioffset+j)[0]+ result.at<Vec3b>(i,ioffset+j)[1]+ result.at<Vec3b>(i,ioffset+j)[2];

int iresultback = resultback.at<Vec3b>(i,ioffset+j)[0]+ resultback.at<Vec3b>(i,ioffset+j)[1]+ resultback.at<Vec3b>(i,ioffset+j)[2];

if (iresultback >iresult)

{

result_maxvalue.at<Vec3b>(i,ioffset+j) = resultback.at<Vec3b>(i,ioffset+j);

}

}

}

imshow("result_maxvalue",result_maxvalue);

// Weighted smoothing with a threshold

Mat result_advance = result.clone();

for (int i = 0;i<img_2.rows;i++)

{

for (int j = 0;j<33;j++)

{

int iimg1= result.at<Vec3b>(i,ioffset+j)[0]+ result.at<Vec3b>(i,ioffset+j)[1]+ result.at<Vec3b>(i,ioffset+j)[2];

//int iimg2= resultback.at<Vec3b>(i,ioffset+j)[0]+ resultback.at<Vec3b>(i,ioffset+j)[1]+ resultback.at<Vec3b>(i,ioffset+j)[2];

int ilinerblend = result_linerblend.at<Vec3b>(i,ioffset+j)[0]+ result_linerblend.at<Vec3b>(i,ioffset+j)[1]+ result_linerblend.at<Vec3b>(i,ioffset+j)[2];

if (abs(iimg1 - ilinerblend)<3)

{

result_advance.at<Vec3b>(i,ioffset+j) = result_linerblend.at<Vec3b>(i,ioffset+j);

}

}

}

for (int i = 0;i<img_2.rows;i++)

{

for (int j = 33;j<66;j++)

{

int iimg1= result.at<Vec3b>(i,ioffset+j)[0]+ result.at<Vec3b>(i,ioffset+j)[1]+ result.at<Vec3b>(i,ioffset+j)[2];

int iimg2= resultback.at<Vec3b>(i,ioffset+j)[0]+ resultback.at<Vec3b>(i,ioffset+j)[1]+ resultback.at<Vec3b>(i,ioffset+j)[2];

int ilinerblend = result_linerblend.at<Vec3b>(i,ioffset+j)[0]+ result_linerblend.at<Vec3b>(i,ioffset+j)[1]+ result_linerblend.at<Vec3b>(i,ioffset+j)[2];

if (abs(max(iimg1,iimg2) - ilinerblend)<3)

{

result_advance.at<Vec3b>(i,ioffset+j) = result_linerblend.at<Vec3b>(i,ioffset+j);

}

else if (iimg2>iimg1)

{

result_advance.at<Vec3b>(i,ioffset+j) = resultback.at<Vec3b>(i,ioffset+j);

}

}

}

for (int i = 0;i<img_2.rows;i++)

{

for (int j = 66;j<100;j++)

{

//int iimg1= result.at<Vec3b>(i,ioffset+j)[0]+ result.at<Vec3b>(i,ioffset+j)[1]+ result.at<Vec3b>(i,ioffset+j)[2];

int iimg2= resultback.at<Vec3b>(i,ioffset+j)[0]+ resultback.at<Vec3b>(i,ioffset+j)[1]+ resultback.at<Vec3b>(i,ioffset+j)[2];

int ilinerblend = result_linerblend.at<Vec3b>(i,ioffset+j)[0]+ result_linerblend.at<Vec3b>(i,ioffset+j)[1]+ result_linerblend.at<Vec3b>(i,ioffset+j)[2];

if (abs(iimg2 - ilinerblend)<3)

{

result_advance.at<Vec3b>(i,ioffset+j) = result_linerblend.at<Vec3b>(i,ioffset+j);

}

else

{

result_advance.at<Vec3b>(i,ioffset+j) = resultback.at<Vec3b>(i,ioffset+j);

}

}

}

imshow("result_advance",result_advance);

waitKey(0);

return 0;

}
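The fade-in/fade-out step in the program above mixes the two aligned images over a 100-column band, moving the weight from the left image to the warped right image in steps of 0.01. The sketch below shows that idea on a single row of intensities using only the standard library. `blendRow` is a hypothetical helper, and it computes the weight from the column index instead of accumulating `dblend`, which is a small deliberate change that avoids floating-point drift.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Blend one image row across an overlap band.
// Columns before the band keep the left image, columns after it keep the
// right image, and columns inside fade linearly from left to right.
std::vector<double> blendRow(const std::vector<double>& left,
                             const std::vector<double>& right,
                             std::size_t bandStart, std::size_t bandWidth) {
    assert(left.size() == right.size());
    assert(bandWidth > 0 && bandStart + bandWidth <= left.size());
    std::vector<double> out(left.size());
    for (std::size_t x = 0; x < left.size(); ++x) {
        double alpha;  // weight of the right image at column x
        if (x < bandStart) {
            alpha = 0.0;
        } else if (x >= bandStart + bandWidth) {
            alpha = 1.0;
        } else {
            alpha = static_cast<double>(x - bandStart) / bandWidth;
        }
        out[x] = (1.0 - alpha) * left[x] + alpha * right[x];
    }
    return out;
}
```

In the program above the band starts at `img_2.cols-100` and is 100 columns wide; a wider band generally hides the seam better but blurs more of the overlap if the alignment is imperfect.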

uchar* outData=outImage.ptr<uchar>(0);

int itemp = outData[2]; // get the offset

line(result_linerblend,Point(result_linerblend.cols-itemp,0),Point(result_linerblend.cols-itemp,img_2.rows),Scalar(255,255,255),2);

imshow("result_linerblend",result_linerblend);

Mat matmask = result_linerblend.clone();

int idaterow0 = 0;int idaterowend = 0; // rightmost non-zero column in the top row and in the bottom row, found by scanning leftward from the image width

for(int j=matmask.cols-1;j>=0;j--)

{

if (matmask.at<Vec3b>(0,j)[0]>0)

{

idaterow0 = j;

break;

}

}

for(int j=matmask.cols-1;j>=0;j--)

{

if (matmask.at<Vec3b>(matmask.rows-1,j)[0]>0)

{

idaterowend = j;

break;

}

}

line(matmask,Point(min(idaterow0,idaterowend),0),Point(min(idaterow0,idaterowend),img_2.rows),Scalar(255,255,255),2);

imshow("result_linerblend",matmask);

//

#include "stdafx.h"

#include <iostream>

#include "opencv2/core/core.hpp"

#include "opencv2/imgproc/imgproc.hpp"

#include "opencv2/features2d/features2d.hpp"

#include "opencv2/highgui/highgui.hpp"

#include "opencv2/nonfree/features2d.hpp"

#include "opencv2/calib3d/calib3d.hpp"

using namespace std;

using namespace cv;

int main( int argc, char** argv )

{

Mat img_1 ;

Mat img_2 ;

Mat img_raw_1 = imread("Univ3.jpg");

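Mat img_raw_2 = imread("Univ2.jpg");

if( img_raw_1.empty() || img_raw_2.empty() )

{ std::cout<< " --(!) Error reading images " << std::endl; return -1; }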

cvtColor(img_raw_1,img_1,CV_BGR2GRAY);

cvtColor(img_raw_2,img_2,CV_BGR2GRAY);

//-- Step 1: Detect keypoints with SURF

int minHessian = 400;

SurfFeatureDetector detector( minHessian );

std::vector<KeyPoint> keypoints_1, keypoints_2;

detector.detect( img_1, keypoints_1 );

detector.detect( img_2, keypoints_2 );

//-- Step 2: Compute SURF descriptors

SurfDescriptorExtractor extractor;

Mat descriptors_1, descriptors_2;

extractor.compute( img_1, keypoints_1, descriptors_1 );

extractor.compute( img_2, keypoints_2, descriptors_2 );

//-- Step 3: Match descriptors

FlannBasedMatcher matcher; // BFMatcher would do brute-force matching instead

std::vector< DMatch > matches;

matcher.match( descriptors_1, descriptors_2, matches );

// Find the maximum and minimum match distances

double max_dist = 0; double min_dist = 100;

for( int i = 0; i < descriptors_1.rows; i++ )

{

double dist = matches[i].distance;

if( dist < min_dist ) min_dist = dist;

if( dist > max_dist ) max_dist = dist;

}

std::vector< DMatch > good_matches;

for( int i = 0; i < descriptors_1.rows; i++ )

{

if( matches[i].distance <= 3*min_dist ) // threshold chosen here: 3 * min_dist

{

good_matches.push_back( matches[i]);

}

}

//-- Localize the object from img_1 in img_2

std::vector<Point2f> obj;

std::vector<Point2f> scene;

for( int i = 0; i < (int)good_matches.size(); i++ )

{

// New frames are added onto the stitched image, so the left image is the scene and the right image is the obj

scene.push_back( keypoints_1[ good_matches[i].queryIdx ].pt );

obj.push_back( keypoints_2[ good_matches[i].trainIdx ].pt );

}

//-- Estimate the homography directly with RANSAC

Mat H = findHomography( obj, scene, CV_RANSAC );

// Align the images

Mat result;

Mat resultback; // holds the new frame after warping by the homography

warpPerspective(img_raw_2,result,H,Size(2*img_2.cols,img_2.rows));

result.copyTo(resultback);

Mat half(result,cv::Rect(0,0,img_2.cols,img_2.rows));

img_raw_1.copyTo(half);

//imshow("ajust",result);

// Fade-in/fade-out (linear) blending

Mat result_linerblend = result.clone();

double dblend = 0.0;

int ioffset =img_2.cols-100;

for (int i = 0;i<100;i++)

{

result_linerblend.col(ioffset+i) = result.col(ioffset+i)*(1-dblend) + resultback.col(ioffset+i)*dblend;

dblend = dblend +0.01;

}

// Find the boundary of the image processed so far

Mat matmask = result_linerblend.clone();

int idaterow0 = 0;int idaterowend = 0; // rightmost non-zero column in the top row and in the bottom row, found by scanning leftward from the image width

for(int j=matmask.cols-1;j>=0;j--)

{

if (matmask.at<Vec3b>(0,j)[0]>0)

{

idaterow0 = j;

break;

}

}

for(int j=matmask.cols-1;j>=0;j--)

{

if (matmask.at<Vec3b>(matmask.rows-1,j)[0]>0)

{

idaterowend = j;

break;

}

}

line(matmask,Point(min(idaterow0,idaterowend),0),Point(min(idaterow0,idaterowend),img_2.rows),Scalar(255,255,255),2);

imshow("result_linerblend",matmask);

//--------------- Continue processing the result image ---------------//

img_raw_1 = result_linerblend(Rect(0,0,min(idaterow0,idaterowend),img_2.rows));

img_raw_2 = imread("Univ1.jpg");

cvtColor(img_raw_1,img_1,CV_BGR2GRAY);

cvtColor(img_raw_2,img_2,CV_BGR2GRAY);

////-- Step 1: Detect keypoints with SURF

//

SurfFeatureDetector detector2( minHessian );

keypoints_1.clear();

keypoints_2.clear();

detector2.detect( img_1, keypoints_1 );

detector2.detect( img_2, keypoints_2 );

//-- Step 2: Compute SURF descriptors

SurfDescriptorExtractor extractor2;

extractor2.compute( img_1, keypoints_1, descriptors_1 );

extractor2.compute( img_2, keypoints_2, descriptors_2 );

//-- Step 3: Match descriptors

FlannBasedMatcher matcher2; // BFMatcher would do brute-force matching instead

matcher2.match( descriptors_1, descriptors_2, matches );

// Find the maximum and minimum match distances

max_dist = 0; min_dist = 100;

for( int i = 0; i < descriptors_1.rows; i++ )

{

double dist = matches[i].distance;

if( dist < min_dist ) min_dist = dist;

if( dist > max_dist ) max_dist = dist;

}

good_matches.clear();

for( int i = 0; i < descriptors_1.rows; i++ )

{

if( matches[i].distance <= 3*min_dist ) // threshold chosen here: 3 * min_dist

{

good_matches.push_back( matches[i]);

}

}

//-- Localize the object from img_1 in img_2

obj.clear();

scene.clear();

for( int i = 0; i < (int)good_matches.size(); i++ )

{

// New frames are added onto the stitched image, so the left image is the scene and the right image is the obj

scene.push_back( keypoints_1[ good_matches[i].queryIdx ].pt );

obj.push_back( keypoints_2[ good_matches[i].trainIdx ].pt );

}

//-- Estimate the homography directly with RANSAC

H = findHomography( obj, scene, CV_RANSAC );

// Align the images

warpPerspective(img_raw_2,result,H,Size(img_1.cols+img_2.cols,img_2.rows));

result.copyTo(resultback);

Mat half2(result,cv::Rect(0,0,img_1.cols,img_1.rows));

img_raw_1.copyTo(half2);

imshow("adjust",result);

// Fade-in/fade-out (linear) blending

result_linerblend = result.clone();

dblend = 0.0;

ioffset =img_1.cols-100;

for (int i = 0;i<100;i++)

{

result_linerblend.col(ioffset+i) = result.col(ioffset+i)*(1-dblend) + resultback.col(ioffset+i)*dblend;

dblend = dblend +0.01;

}

imshow("result_linerblend",result_linerblend);

waitKey(0);

return 0;

}

//

#include "stdafx.h"

#include <iostream>

#include "opencv2/core/core.hpp"

#include "opencv2/imgproc/imgproc.hpp"

#include "opencv2/features2d/features2d.hpp"

#include "opencv2/highgui/highgui.hpp"

#include "opencv2/nonfree/features2d.hpp"

#include "opencv2/calib3d/calib3d.hpp"

using namespace std;

using namespace cv;

#define PI 3.14159

int main( int argc, char** argv )

{

Mat img_1 = imread( "Univ1.jpg");

if( img_1.empty() )

{ std::cout<< " --(!) Error reading image " << std::endl; return -1; }

Mat img_result = img_1.clone();

for(int i=0;i<img_result.rows;i++)

{ for(int j=0;j<img_result.cols;j++)

{

img_result.at<Vec3b>(i,j)=0;

}

}

int W = img_1.cols;

int H = img_1.rows;

float r = W/(2*tan(PI/6));

float k = 0;

float fx=0;

float fy=0;

for(int i=0;i<img_1.rows;i++)

{ for(int j=0;j<img_1.cols;j++)

{

k = sqrt((float)(r*r+(W/2-j)*(W/2-j)));

fx = r*sin(PI/6)+r*sin(atan((j -W/2 )/r));

fy = H/2 +r*(i-H/2)/k;

int ix = (int)fx;

int iy = (int)fy;

if (ix<W&&ix>=0&&iy<H&&iy>=0)

{

img_result.at<Vec3b>(iy,ix)= img_1.at<Vec3b>(i,j);

}

}

}

imshow( "Barrel projection", img_1 );

imshow("img_result",img_result);

waitKey(0);

return 0;

}
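The projection loop above maps every source pixel `(i, j)` to a destination `(fy, fx)` using a radius derived from the image width and a half field of view of `PI/6`. The sketch below isolates that mapping as a pure function so the formulas can be checked on their own. `Mapped` and `cylinderMap` are hypothetical names, and unlike the program this version uses floating-point `W/2` and `H/2` rather than integer division, so results can differ by a fraction of a pixel for odd sizes.

```cpp
#include <cassert>
#include <cmath>

struct Mapped { float x; float y; };

// Forward-map source pixel (column j, row i) of a W x H image, following
// the formulas of the program above with a half field of view of PI/6.
Mapped cylinderMap(int j, int i, int W, int H) {
    assert(W > 0 && H > 0);
    const float halfFov = 3.14159265f / 6.0f;
    const float r  = W / (2.0f * std::tan(halfFov));  // projection radius
    const float dx = j - W / 2.0f;                    // offset from the centre column
    const float k  = std::sqrt(r * r + dx * dx);      // distance to the source column
    Mapped m;
    m.x = r * std::sin(halfFov) + r * std::sin(std::atan(dx / r));
    m.y = H / 2.0f + r * (i - H / 2.0f) / k;
    return m;
}
```

Because this is a forward mapping with truncation to integer coordinates, some destination pixels may never be written; iterating over destination pixels and sampling the source is one common way around that, though the program above does not do so.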

http://blog.csdn.net/jh19871985/article/details/8477935
