1.1 Introduction中 Topics and Logs官网剖析(博主推荐)

不多说,直接上干货!

一切来源于官网

http://kafka.apache.org/documentation/

Topics and Logs

话题和日志 (Topic和Log)

Let's first dive into the core abstraction Kafka provides for a stream of records—the topic.

让我们更深入的了解Kafka中的Topic。

A topic is a category or feed name to which records are published. Topics in Kafka are always multi-subscriber; that is, a topic can have zero, one, or many consumers that subscribe to the data written to it.

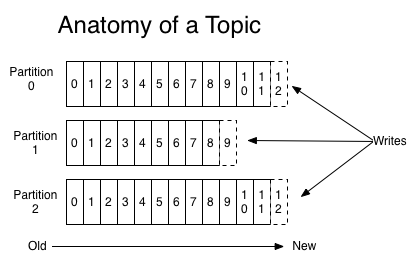

For each topic, the Kafka cluster maintains a partitioned log that looks like this:

Topic是发布的消息的类别或者种子Feed名。对于每一个Topic,Kafka集群维护这一个分区的log,就像下图中的示例:

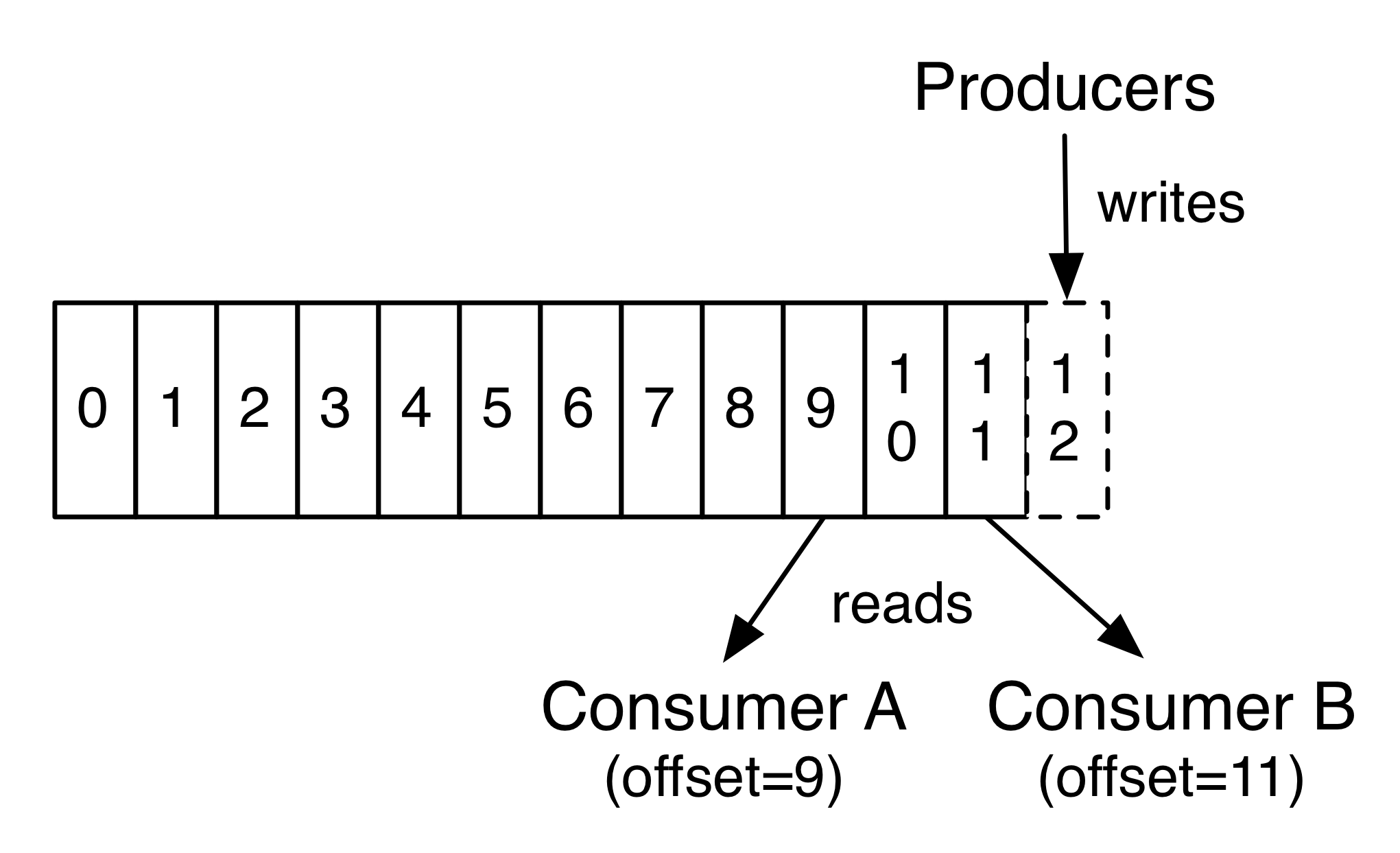

Each partition is an ordered, immutable sequence of records that is continually appended to—a structured commit log. The records in the partitions are each assigned a sequential id number called the offset that uniquely identifies each record within the partition.

每一个分区都是一个顺序的、不可变的消息队列, 并且可以持续的添加。分区中的消息都被分了一个序列号,称之为偏移量(offset),在每个分区中此偏移量都是唯一的。

The Kafka cluster retains all published records—whether or not they have been consumed—using a configurable retention period. For example, if the retention policy is set to two days, then for the two days after a record is published, it is available for consumption, after which it will be discarded to free up space. Kafka's performance is effectively constant with respect to data size so storing data for a long time is not a problem.

Kafka集群保持所有的消息,直到它们过期, 无论消息是否被消费了。 实际上消费者所持有的仅有的元数据就是这个偏移量,也就是消费者在这个log中的位置。

这个偏移量由消费者控制:正常情况当消费者消费消息的时候,偏移量也线性的的增加。

但是实际偏移量由消费者控制,消费者可以将偏移量重置为更老的一个偏移量,重新读取消息。

可以看到这种设计对消费者来说操作自如, 一个消费者的操作不会影响其它消费者对此log的处理。

再说说分区。Kafka中采用分区的设计有几个目的。一是可以处理更多的消息,不受单台服务器的限制。

Topic拥有多个分区意味着它可以不受限的处理更多的数据。第二,分区可以作为并行处理的单元,稍后会谈到这一点。

In fact, the only metadata retained on a per-consumer basis is the offset or position of that consumer in the log. This offset is controlled by the consumer: normally a consumer will advance its offset linearly as it reads records, but, in fact, since the position is controlled by the consumer it can consume records in any order it likes. For example a consumer can reset to an older offset to reprocess data from the past or skip ahead to the most recent record and start consuming from "now".

This combination of features means that Kafka consumers are very cheap—they can come and go without much impact on the cluster or on other consumers. For example, you can use our command line tools to "tail" the contents of any topic without changing what is consumed by any existing consumers.

The partitions in the log serve several purposes. First, they allow the log to scale beyond a size that will fit on a single server. Each individual partition must fit on the servers that host it, but a topic may have many partitions so it can handle an arbitrary amount of data. Second they act as the unit of parallelism—more on that in a bit.

1.1 Introduction中 Topics and Logs官网剖析(博主推荐)的更多相关文章

- Flume Channel Selectors官网剖析(博主推荐)

不多说,直接上干货! Flume Sources官网剖析(博主推荐) Flume Channels官网剖析(博主推荐) 一切来源于flume官网 http://flume.apache.org/Flu ...

- Flume Channels官网剖析(博主推荐)

不多说,直接上干货! Flume Sources官网剖析(博主推荐) 一切来源于flume官网 http://flume.apache.org/FlumeUserGuide.html Flume Ch ...

- Flume Source官网剖析(博主推荐)

不多说,直接上干货! 一切来源于flume官网 http://flume.apache.org/FlumeUserGuide.html Flume Sources Avro Source Thrift ...

- 1.2 Use Cases中 Website Activity Tracking官网剖析(博主推荐)

不多说,直接上干货! 一切来源于官网 http://kafka.apache.org/documentation/ Website Activity Tracking 网站活动追踪 The origi ...

- Flume Interceptors官网剖析(博主推荐)

不多说,直接上干货! Flume Sources官网剖析(博主推荐) Flume Channels官网剖析(博主推荐) Flume Channel Selectors官网剖析(博主推荐) Flume ...

- Event Serializers官网剖析(博主推荐)

不多说,直接上干货! Flume Sources官网剖析(博主推荐) Flume Channels官网剖析(博主推荐) Flume Channel Selectors官网剖析(博主推荐) Flume ...

- Flume Sink Processors官网剖析(博主推荐)

不多说,直接上干货! Flume Sources官网剖析(博主推荐) Flume Channels官网剖析(博主推荐) Flume Channel Selectors官网剖析(博主推荐) Flume ...

- Flume Sinks官网剖析(博主推荐)

不多说,直接上干货! Flume Sources官网剖析(博主推荐) Flume Channels官网剖析(博主推荐) Flume Channel Selectors官网剖析(博主推荐) 一切来源于f ...

- 怎样取消老毛桃软件赞助商---只需在输入框中输入老毛桃官网网址“laomaotao.org”

来源:www.laomaotao.org 时间:2015-01-29 在众多网友和赞助商的支持下,迄今为止,老毛桃u盘启动盘制作工具已经推出了多个版本.如果有用户希望取消显示老毛桃软件中的赞助商,那不 ...

随机推荐

- C#读写config配置文件--读取配置文件类

一:通过Key访问Value的方法: //判断App.config配置文件中是否有Key(非null) if (ConfigurationManager.AppSettings.HasKeys()) ...

- 使用js实现简单放大镜的效果

实现原理:使用2个div,里面分别放大图片和小图片,在小图片上应该还有一个遮罩层,通过定位遮罩层的位置来定位大图片的相对位置,而且,遮罩层的移动应该和大图片的移动方向相反 关键: 大图片和小图片大小比 ...

- scp---远程拷贝文件

scp命令用于在Linux下进行远程拷贝文件的命令,和它类似的命令有cp,不过cp只是在本机进行拷贝不能跨服务器,而且scp传输是加密的.可能会稍微影响一下速度.当你服务器硬盘变为只读read onl ...

- QQ空间说说爬虫

QQ空间说说爬虫 闲来无事,写了一个QQ空间的爬虫,主要是爬取以前的说说,然后生成词云. 这次采用的主要模块是selenium,这是一个模拟浏览器的模块,一开始我不想用这个模块写的,但是后面分析的时候 ...

- HDU 3911 Black And White

Black And White Time Limit: 3000ms Memory Limit: 32768KB This problem will be judged on HDU. Origina ...

- HDU1788 Chinese remainder theorem again【中国剩余定理】

题目链接: pid=1788">http://acm.hdu.edu.cn/showproblem.php?pid=1788 题目大意: 题眼下边的描写叙述是多余的... 一个正整N除 ...

- Android图像处理之冰冻效果

原图 效果图 代码: package com.colo ...

- 60.浅谈nodejs中的Crypto模块

转自:https://www.cnblogs.com/c-and-unity/articles/4552059.html node.js的crypto在0.8版本并没有改版多少,这个模块的主要功能是加 ...

- 最大子矩阵和 51Nod 1051 模板题

一个M*N的矩阵,找到此矩阵的一个子矩阵,并且这个子矩阵的元素的和是最大的,输出这个最大的值. 例如:3*3的矩阵: -1 3 -1 2 -1 3 -3 1 2 和最大的子矩阵是: 3 - ...

- Linux 关闭正在运行的程序---命令

Ctrl + C 终止 Ctrl + D 退出 Ctrl + S 挂起 Ctrl + Q 解挂 Ctrl + Z 强制结束