Installing Kafka and ZooKeeper

1. Introduction to Kafka

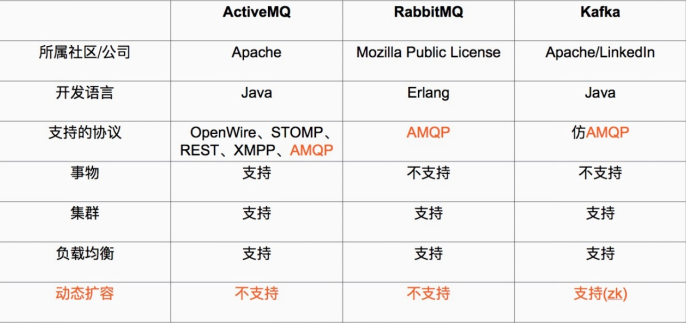

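Kafka is often described as a next-generation distributed messaging system. It is an open-source project of the Apache Software Foundation (ASF), a non-profit organization whose other open-source software includes HTTP Server, Hadoop, ActiveMQ, and Tomcat. Comparable messaging systems include RabbitMQ, ActiveMQ, and ZeroMQ. Kafka's main advantages are that it is distributed by design and that, combined with ZooKeeper, it can be scaled out dynamically.

Further reading: http://www.infoq.com/cn/articles/apache-kafka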

2. Preparing the installation environment

Configure /etc/hosts on all three servers and make sure they can ping one another. CentOS is used here.

[root@kafka70 ~]# vim /etc/hosts

[root@kafka70 ~]# cat /etc/hosts

127.0.0.1 localhost

10.0.0.200 debian

# The following lines are desirable for IPv6 capable hosts

::1 localhost ip6-localhost ip6-loopback

ff02::1 ip6-allnodes

ff02::2 ip6-allrouters

192.168.47.70 kafka70

192.168.47.71 kafka71

192.168.47.72 kafka72

[root@kafka70 ~]# ping kafka71

PING kafka70 (192.168.47.70) 56(84) bytes of data.

64 bytes from kafka70 (192.168.47.70): icmp_seq=1 ttl=64 time=0.019 ms

3. Downloading, installing, and verifying ZooKeeper

3.1 ZooKeeper download page

http://zookeeper.apache.org/releases.html

3.2 Kafka download page

http://kafka.apache.org/downloads.html

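3.3 Installing ZooKeeper

ZooKeeper ensemble behaviour: the ensemble stays available to clients only while more than half of its servers are working. For example, if two servers form an ensemble, the failure of either one makes the ensemble unavailable, because the single remaining server is not more than half of two. With three servers, losing one leaves two, which is more than half of three, so the ensemble keeps running; losing a second leaves only one, and the ensemble becomes unavailable.

With four servers, losing one is still fine, but losing two leaves two, which is not more than half of four. A three-server ensemble and a four-server ensemble therefore both become unavailable once two servers have failed, which is why ensembles usually have an odd number of servers.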
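The majority rule above can be checked with a little arithmetic. The following is a minimal sketch; the function names quorum_size and tolerated_failures are made up for this illustration and are not part of ZooKeeper.

```shell
# Majority needed for an ensemble of n servers: floor(n/2) + 1.
quorum_size() {
    echo $(( $1 / 2 + 1 ))
}

# Number of servers that may fail while a majority is still running.
tolerated_failures() {
    echo $(( $1 - ($1 / 2 + 1) ))
}

# Compare ensembles of 2, 3, 4 and 5 servers.
for n in 2 3 4 5; do
    echo "servers=$n quorum=$(quorum_size "$n") tolerated_failures=$(tolerated_failures "$n")"
done
```

Ensembles of three and four servers both tolerate exactly one failure, so the fourth server adds no fault tolerance.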

3.3.1 Download the packages (on all nodes)

The steps are essentially the same on every node; only the ZooKeeper myid differs at the end.

mkdir /opt/soft

cd /opt/soft

wget https://mirrors.yangxingzhen.com/jdk/jdk-11.0.1_linux-x64_bin.tar.g

wget http://archive.apache.org/dist/zookeeper/zookeeper-3.4.9/zookeeper-3.4.9.tar.gz

wget https://archive.apache.org/dist/kafka/1.0.0/kafka_2.11-1.0.0.tgz

3.3.2 Install and configure Java

tar xf jdk-11.0.1_linux-x64_bin.tar.gz

mv jdk-11.0.1 /usr/java

cat >>/etc/profile<<'EOF'

export JAVA_HOME=/usr/java

export CLASSPATH=$CLASSPATH:$JAVA_HOME/lib:$JAVA_HOME/jre/lib

export PATH=$JAVA_HOME/bin:$JAVA_HOME/jre/bin:$PATH

EOF

source /etc/profile

java -version

3.3.3 Install ZooKeeper

[root@kafka70 soft]# tar zxvf zookeeper-3.4.9.tar.gz -C /opt/

[root@kafka70 soft]# ln -s /opt/zookeeper-3.4.9/ /opt/zookeeper

[root@kafka70 soft]# mkdir -p /data/zookeeper

[root@kafka70 soft]# cp /opt/zookeeper/conf/zoo_sample.cfg /opt/zookeeper/conf/zoo.cfg

[root@kafka70 soft]# vim /opt/zookeeper/conf/zoo.cfg

[root@kafka70 soft]# grep "^[a-zA-Z]" /opt/zookeeper/conf/zoo.cfg

tickTime=2000

initLimit=10

syncLimit=5

dataDir=/data/zookeeper

clientPort=2181

server.1=192.168.47.70:2888:3888

server.2=192.168.47.71:2888:3888

server.3=192.168.47.72:2888:3888

[root@kafka70 soft]# echo "1" > /data/zookeeper/myid

[root@kafka70 soft]# ls -lh /data/zookeeper/

total 4.0K

-rw-r--r-- 1 root root 2 Mar 12 14:17 myid

[root@kafka70 soft]# cat /data/zookeeper/myid

1

Node 2

[root@kafka71 soft]# cat /data/zookeeper/myid

2

Node 3

[root@kafka72 soft]# cat /data/zookeeper/myid

3
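Because the myid on each node has to match the N of that node's server.N line in zoo.cfg, the value can be looked up rather than typed by hand. The sketch below assumes IPv4 addresses in the server.N=ip:port:port form used above; the helper name myid_for_ip is hypothetical.

```shell
# Print the N of the "server.N=<ip>:..." line in a zoo.cfg that matches an IP.
# Usage: myid_for_ip <zoo.cfg> <ipv4-address>
myid_for_ip() {
    awk -F'[.=:]' -v ip="$2" '
        /^server\.[0-9]+=/ {
            # Split on ".", "=" and ":": $1="server", $2=N, $3..$6 = IPv4 octets
            if (($3 "." $4 "." $5 "." $6) == ip) { print $2; exit }
        }' "$1"
}

# Example on node 2 (paths as used in this article):
# myid_for_ip /opt/zookeeper/conf/zoo.cfg 192.168.47.71 > /data/zookeeper/myid
```

If the IP is not listed in the file, the function prints nothing, which is a hint that zoo.cfg needs another look before ZooKeeper is started.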

3.3.5 Start ZooKeeper on each node

Node 1

[root@kafka70 ~]# /opt/zookeeper/bin/zkServer.sh start

ZooKeeper JMX enabled by default

Using config: /opt/zookeeper/bin/../conf/zoo.cfg

Starting zookeeper ... STARTED

Node 2

[root@kafka71 soft]# /opt/zookeeper/bin/zkServer.sh start

ZooKeeper JMX enabled by default

Using config: /opt/zookeeper/bin/../conf/zoo.cfg

Starting zookeeper ... STARTED

Node 3

[root@kafka72 ~]# /opt/zookeeper/bin/zkServer.sh start

ZooKeeper JMX enabled by default

Using config: /opt/zookeeper/bin/../conf/zoo.cfg

Starting zookeeper ... STARTED

3.3.6 Check the ZooKeeper status on each node

Node 1

[root@kafka70 ~]# /opt/zookeeper/bin/zkServer.sh status

ZooKeeper JMX enabled by default

Using config: /opt/zookeeper/bin/../conf/zoo.cfg

Mode: follower

Node 2

[root@kafka71 soft]# /opt/zookeeper/bin/zkServer.sh status

ZooKeeper JMX enabled by default

Using config: /opt/zookeeper/bin/../conf/zoo.cfg

Mode: leader

Node 3

[root@kafka72 ~]# /opt/zookeeper/bin/zkServer.sh status

ZooKeeper JMX enabled by default

Using config: /opt/zookeeper/bin/../conf/zoo.cfg

Mode: follower

3.3.7 Basic ZooKeeper commands

Connect to any node and create some data:

Here the data is created on node 1 and then verified on the other nodes.

[root@kafka70 ~]# /opt/zookeeper/bin/zkCli.sh -server 192.168.47.70:2181

Connecting to 192.168.47.70:2181

=================

WATCHER:: WatchedEvent state:SyncConnected type:None path:null

[zk: 192.168.47.70:2181(CONNECTED) 0] create /test "hello"

Created /test

[zk: 192.168.47.70:2181(CONNECTED) 1]

Verify the data on the other nodes

[root@kafka71 ~]# /opt/zookeeper/bin/zkCli.sh -server 192.168.47.71:2181

Connecting to 192.168.47.71:2181

===========================

WATCHER:: WatchedEvent state:SyncConnected type:None path:null

[zk: 192.168.47.71:2181(CONNECTED) 0] get /test

hello

cZxid = 0x100000002

ctime = Mon Mar 12 15:15:52 CST 2018

mZxid = 0x100000002

mtime = Mon Mar 12 15:15:52 CST 2018

pZxid = 0x100000002

cversion = 0

dataVersion = 0

aclVersion = 0

ephemeralOwner = 0x0

dataLength = 5

numChildren = 0

[zk: 192.168.47.71:2181(CONNECTED) 1]

Check on node 3

[root@kafka72 ~]# /opt/zookeeper/bin/zkCli.sh -server 192.168.47.72:2181

Connecting to 192.168.47.72:2181

===========================

WATCHER:: WatchedEvent state:SyncConnected type:None path:null

[zk: 192.168.47.72:2181(CONNECTED) 0] get /test

hello

cZxid = 0x100000002

ctime = Mon Mar 12 15:15:52 CST 2018

mZxid = 0x100000002

mtime = Mon Mar 12 15:15:52 CST 2018

pZxid = 0x100000002

cversion = 0

dataVersion = 0

aclVersion = 0

ephemeralOwner = 0x0

dataLength = 5

numChildren = 0

[zk: 192.168.47.72:2181(CONNECTED) 1]

3.4 Installing and testing Kafka

Node 1 configuration

[root@kafka70 ~]# cd /opt/soft/

[root@kafka70 soft]# tar zxf kafka_2.11-1.0.0.tgz -C /opt/

[root@kafka70 soft]# ln -s /opt/kafka_2.11-1.0.0/ /opt/kafka

[root@kafka70 soft]# mkdir /opt/kafka/logs

[root@kafka70 soft]# vim /opt/kafka/config/server.properties

broker.id=1

listeners=PLAINTEXT://192.168.47.70:9092

log.dirs=/opt/kafka/logs

log.retention.hours=24

zookeeper.connect=192.168.47.70:2181,192.168.47.71:2181,192.168.47.72:2181

Node 2 configuration

[root@kafka71 ~]# cd /opt/soft/

[root@kafka71 soft]# tar zxf kafka_2.11-1.0.0.tgz -C /opt/

[root@kafka71 soft]# ln -s /opt/kafka_2.11-1.0.0/ /opt/kafka

[root@kafka71 soft]# mkdir /opt/kafka/logs

[root@kafka71 soft]# vim /opt/kafka/config/server.properties

broker.id=2

listeners=PLAINTEXT://192.168.47.71:9092

log.dirs=/opt/kafka/logs

log.retention.hours=24

zookeeper.connect=192.168.47.70:2181,192.168.47.71:2181,192.168.47.72:2181

Node 3 configuration

[root@kafka72 ~]# cd /opt/soft/

[root@kafka72 soft]# tar zxf kafka_2.11-1.0.0.tgz -C /opt/

[root@kafka72 soft]# ln -s /opt/kafka_2.11-1.0.0/ /opt/kafka

[root@kafka72 soft]# mkdir /opt/kafka/logs

[root@kafka72 soft]# vim /opt/kafka/config/server.properties

broker.id=3

listeners=PLAINTEXT://192.168.47.72:9092

log.dirs=/opt/kafka/logs

log.retention.hours=24

zookeeper.connect=192.168.47.70:2181,192.168.47.71:2181,192.168.47.72:2181
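The three configurations differ only in broker.id and in the IP address in listeners. As a rough sketch, the settings for one broker can be printed from those two values; the helper name broker_settings is made up for this example, and the output is meant to be compared against server.properties, not to replace the file.

```shell
# Print the server.properties settings used in this article for one broker.
# Usage: broker_settings <broker.id> <broker-ip>
broker_settings() {
    zk="192.168.47.70:2181,192.168.47.71:2181,192.168.47.72:2181"
    printf 'broker.id=%s\n' "$1"
    printf 'listeners=PLAINTEXT://%s:9092\n' "$2"
    printf 'log.dirs=/opt/kafka/logs\n'
    printf 'log.retention.hours=24\n'
    printf 'zookeeper.connect=%s\n' "$zk"
}

# Settings for node 2
broker_settings 2 192.168.47.71
```

Every broker needs a unique broker.id, while zookeeper.connect is identical on all three nodes.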

3.4.1 Start Kafka on each node

Node 1: start in the foreground first so that any errors are easy to see

[root@kafka70 soft]# /opt/kafka/bin/kafka-server-start.sh /opt/kafka/config/server.properties

===========================

[2018-03-14 11:04:05,397] INFO Kafka version : 1.0.0 (org.apache.kafka.common.utils.AppInfoParser)

[2018-03-14 11:04:05,397] INFO Kafka commitId : aaa7af6d4a11b29d (org.apache.kafka.common.utils.AppInfoParser)

[2018-03-14 11:04:05,414] INFO [KafkaServer id=1] started (kafka.server.KafkaServer)

Once the last line shows KafkaServer id and started, the broker has started successfully and can then be run in the background.

[root@kafka70 logs]# /opt/kafka/bin/kafka-server-start.sh -daemon /opt/kafka/config/server.properties

[root@kafka70 logs]# tail -f /opt/kafka/logs/server.log

=========================

[2018-03-14 11:04:05,414] INFO [KafkaServer id=1] started (kafka.server.KafkaServer)

Node 2: this time start directly in the background and then check the log

[root@kafka71 kafka]# /opt/kafka/bin/kafka-server-start.sh -daemon /opt/kafka/config/server.properties

[root@kafka71 kafka]# tail -f /opt/kafka/logs/server.log

====================================

[2018-03-14 11:04:13,679] INFO [KafkaServer id=2] started (kafka.server.KafkaServer)

Node 3: likewise, start in the background and then check the log

[root@kafka72 kafka]# /opt/kafka/bin/kafka-server-start.sh -daemon /opt/kafka/config/server.properties

[root@kafka72 kafka]# tail -f /opt/kafka/logs/server.log

=======================================

[2018-03-14 11:06:38,274] INFO [KafkaServer id=3] started (kafka.server.KafkaServer)

3.4.2 Verify the processes

Node 1

[root@kafka70 ~]# /usr/java/bin/jps

4531 Jps

4334 Kafka

1230 QuorumPeerMain

Node 2

[root@kafka71 kafka]# /usr/java/bin/jps

2513 Kafka

2664 Jps

1163 QuorumPeerMain

Node 3

[root@kafka72 kafka]# /usr/java/bin/jps

2835 Jps

2728 Kafka

1385 QuorumPeerMain

3.4.3 Test creating a topic

Create a topic named kafkatest with 3 partitions and a replication factor of 3. This can be run on any node.

[root@kafka70 ~]# /opt/kafka/bin/kafka-topics.sh --create --zookeeper 192.168.47.70:2181,192.168.47.71:2181,192.168.47.72:2181 --partitions 3 --replication-factor 3 --topic kafkatest

Created topic "kafkatest".

3.4.4 Test describing a topic

This can be tested on any of the Kafka servers.

Node 1

[root@kafka70 ~]# /opt/kafka/bin/kafka-topics.sh --describe --zookeeper 192.168.47.70:2181,192.168.47.71:2181,192.168.47.72:2181 --topic kafkatest

Topic:kafkatest PartitionCount:3 ReplicationFactor:3 Configs:

Topic: kafkatest Partition: 0 Leader: 2 Replicas: 2,3,1 Isr: 2,3,1

Topic: kafkatest Partition: 1 Leader: 3 Replicas: 3,1,2 Isr: 3,1,2

Topic: kafkatest Partition: 2 Leader: 1 Replicas: 1,2,3 Isr: 1,2,3

Node 2

[root@kafka71 ~]# /opt/kafka/bin/kafka-topics.sh --describe --zookeeper 192.168.47.70:2181,192.168.47.71:2181,192.168.47.72:2181 --topic kafkatest

Topic:kafkatest PartitionCount:3 ReplicationFactor:3 Configs:

Topic: kafkatest Partition: 0 Leader: 2 Replicas: 2,3,1 Isr: 2,3,1

Topic: kafkatest Partition: 1 Leader: 3 Replicas: 3,1,2 Isr: 3,1,2

Topic: kafkatest Partition: 2 Leader: 1 Replicas: 1,2,3 Isr: 1,2,3

Node 3

[root@kafka72 ~]# /opt/kafka/bin/kafka-topics.sh --describe --zookeeper 192.168.47.70:2181,192.168.47.71:2181,192.168.47.72:2181 --topic kafkatest

Topic:kafkatest PartitionCount:3 ReplicationFactor:3 Configs:

Topic: kafkatest Partition: 0 Leader: 2 Replicas: 2,3,1 Isr: 2,3,1

Topic: kafkatest Partition: 1 Leader: 3 Replicas: 3,1,2 Isr: 3,1,2

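Topic: kafkatest Partition: 2 Leader: 1 Replicas: 1,2,3 Isr: 1,2,3

Status explanation: kafkatest has three partitions, numbered 0, 1, and 2. The leader of partition 0 is broker 2 (its broker.id). Partition 0 has three replicas, and all of them are listed under Isr (in-sync replicas, meaning they are eligible to be elected leader).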
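To spot a partition whose Isr list is shorter than its Replicas list, the describe output can be piped through a small filter. This is only a sketch: the function name under_replicated is invented here, and the second sample line in the demo is hypothetical data, not output from this cluster.

```shell
# Read `kafka-topics.sh --describe` output on stdin and print every partition
# whose in-sync replica list is shorter than its replica list.
under_replicated() {
    awk '
        /Partition:/ {
            part = ""; replicas = ""; isr = ""
            for (i = 1; i < NF; i++) {
                if ($i == "Partition:") part = $(i + 1)
                if ($i == "Replicas:")  replicas = $(i + 1)
                if ($i == "Isr:")       isr = $(i + 1)
            }
            # split() returns the number of comma-separated broker ids
            if (split(isr, a, ",") < split(replicas, b, ","))
                print "partition " part ": replicas=" replicas " isr=" isr
        }'
}

# Demo: the first line is from the output above, the second is a made-up
# example in which broker 2 has dropped out of the in-sync set.
printf '%s\n' \
    'Topic: kafkatest Partition: 0 Leader: 2 Replicas: 2,3,1 Isr: 2,3,1' \
    'Topic: kafkatest Partition: 1 Leader: 3 Replicas: 3,1,2 Isr: 3,1' |
    under_replicated
```

On a healthy cluster such as the one shown above, the filter prints nothing.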

3.4.5 Test deleting a topic

[root@kafka70 ~]# /opt/kafka/bin/kafka-topics.sh --delete --zookeeper 192.168.47.70:2181,192.168.47.71:2181,192.168.47.72:2181 --topic kafkatest

Topic kafkatest is marked for deletion.

Note: This will have no impact if delete.topic.enable is not set to true.

3.4.6 Verify that the topic was really deleted

[root@kafka70 ~]# /opt/kafka/bin/kafka-topics.sh --describe --zookeeper 192.168.47.70:2181,192.168.47.71:2181,192.168.47.72:2181 --topic kafkatest

3.4.7 Test listing all topics

First create two topics

[root@kafka70 ~]# /opt/kafka/bin/kafka-topics.sh --create --zookeeper 192.168.47.70:2181,192.168.47.71:2181,192.168.47.72:2181 --partitions 3 --replication-factor 3 --topic kafkatest

Created topic "kafkatest".

[root@kafka70 ~]# /opt/kafka/bin/kafka-topics.sh --create --zookeeper 192.168.47.70:2181,192.168.47.71:2181,192.168.47.72:2181 --partitions 3 --replication-factor 3 --topic kafkatest2

Created topic "kafkatest2".

Then list all topics

[root@kafka70 ~]# /opt/kafka/bin/kafka-topics.sh --list --zookeeper 192.168.47.70:2181,192.168.47.71:2181,192.168.47.72:2181

kafkatest

kafkatest2

3.4.8 Test sending messages from the command line

Create a topic named messagetest

[root@kafka70 ~]# /opt/kafka/bin/kafka-topics.sh --create --zookeeper 192.168.47.70:2181,192.168.47.71:2181,192.168.47.72:2181 --partitions 3 --replication-factor 3 --topic messagetest

Created topic "messagetest".

Send messages. Note that the port is Kafka's 9092, not ZooKeeper's 2181.

[root@kafka70 ~]# /opt/kafka/bin/kafka-console-producer.sh --broker-list 192.168.47.70:9092,192.168.47.71:9092,192.168.47.72:9092 --topic messagetest

>hello

>mymy

>Yo!

>

3.4.9 Receive the messages on the other Kafka servers

[root@kafka70 ~]# /opt/kafka/bin/kafka-console-consumer.sh --zookeeper 192.168.47.70:2181,192.168.47.71:2181,192.168.47.72:2181 --topic messagetest --from-beginning

Using the ConsoleConsumer with old consumer is deprecated and will be removed in a future major release. Consider using the new consumer by passing [bootstrap-server] instead of [zookeeper].

mymy

Yo!

hello

[root@kafka71 ~]# /opt/kafka/bin/kafka-console-consumer.sh --zookeeper 192.168.47.70:2181,192.168.47.71:2181,192.168.47.72:2181 --topic messagetest --from-beginning

Using the ConsoleConsumer with old consumer is deprecated and will be removed in a future major release. Consider using the new consumer by passing [bootstrap-server] instead of [zookeeper].

mymy

Yo!

hello

[root@kafka72 ~]# /opt/kafka/bin/kafka-console-consumer.sh --zookeeper 192.168.47.70:2181,192.168.47.71:2181,192.168.47.72:2181 --topic messagetest --from-beginning

Using the ConsoleConsumer with old consumer is deprecated and will be removed in a future major release. Consider using the new consumer by passing [bootstrap-server] instead of [zookeeper].

hello

mymy

Yo!
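The deprecation warning in the output above suggests passing --bootstrap-server instead of --zookeeper. The sketch below only prints the corresponding command so it can be reviewed before it is run; the helper name new_consumer_cmd is hypothetical, and port 9092 is taken from the listeners setting configured earlier.

```shell
# Print (do not run) the console-consumer command for the new consumer.
# Usage: new_consumer_cmd <topic> <broker-ip>...
new_consumer_cmd() {
    topic=$1
    shift
    servers=""
    for ip in "$@"; do
        # Join the brokers as ip:9092,ip:9092,...
        servers="${servers:+$servers,}$ip:9092"
    done
    echo "/opt/kafka/bin/kafka-console-consumer.sh --bootstrap-server $servers --topic $topic --from-beginning"
}

new_consumer_cmd messagetest 192.168.47.70 192.168.47.71 192.168.47.72
```

The messages arrive in a different order on different runs above because Kafka only guarantees ordering within a single partition, and messagetest has three partitions.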

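3.5 Troubleshooting

3.5.1 Wrong server entries in the ZooKeeper configuration leave ZooKeeper in standalone mode

The server entries in zoo.cfg were written incorrectly (all three use the id 1), so on startup each node only finds itself.

[root@kafka70 soft]# grep "^[a-zA-Z]" /opt/zookeeper/conf/zoo.cfg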

tickTime=2000

initLimit=10

syncLimit=5

dataDir=/data/zookeeper

clientPort=2181

server.1=192.168.47.70:2888:3888

server.1=192.168.47.71:2888:3888

server.1=192.168.47.72:2888:3888

[root@kafka70 ~]# /opt/zookeeper/bin/zkServer.sh start

ZooKeeper JMX enabled by default

Using config: /opt/zookeeper/bin/../conf/zoo.cfg

Starting zookeeper ... STARTED

[root@kafka70 ~]# /opt/zookeeper/bin/zkServer.sh status

ZooKeeper JMX enabled by default

Using config: /opt/zookeeper/bin/../conf/zoo.cfg

Mode: standalone

Fix: on every node, change the server ids to the correct numbers and then restart ZooKeeper. Note that this must be done on all nodes!

[root@kafka70 soft]# grep "^[a-zA-Z]" /opt/zookeeper/conf/zoo.cfg

tickTime=2000

initLimit=10

syncLimit=5

dataDir=/data/zookeeper

clientPort=2181

server.1=192.168.47.70:2888:3888

server.2=192.168.47.71:2888:3888

server.3=192.168.47.72:2888:3888

[root@kafka70 soft]# /opt/zookeeper/bin/zkServer.sh restart

ZooKeeper JMX enabled by default

Using config: /opt/zookeeper/bin/../conf/zoo.cfg

ZooKeeper JMX enabled by default

Using config: /opt/zookeeper/bin/../conf/zoo.cfg

Stopping zookeeper ... STOPPED

ZooKeeper JMX enabled by default

Using config: /opt/zookeeper/bin/../conf/zoo.cfg

Starting zookeeper ... STARTED

[root@kafka71 soft]# /opt/zookeeper/bin/zkServer.sh status

ZooKeeper JMX enabled by default

Using config: /opt/zookeeper/bin/../conf/zoo.cfg

Mode: follower
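A repeated server id like the one above is easy to miss by eye. A quick check, sketched here with the made-up helper name duplicate_server_ids, lists every id that occurs more than once in zoo.cfg. The likely reason for the standalone mode is that repeated keys overwrite one another when the file is read, so only one server entry survives; treat that explanation as an assumption.

```shell
# Print every server id that appears more than once in a zoo.cfg.
# Usage: duplicate_server_ids <zoo.cfg>
duplicate_server_ids() {
    # Extract the N from each "server.N=..." line, then keep repeated values.
    sed -n 's/^server\.\([0-9][0-9]*\)=.*/\1/p' "$1" | sort -n | uniq -d
}

# Example (path as used in this article):
# duplicate_server_ids /opt/zookeeper/conf/zoo.cfg
```

An empty result means every server.N line has its own id.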

3.5.2 Sending messages fails

[root@kafka70 ~]# /opt/kafka/bin/kafka-console-producer.sh --broker-list 192.168.47.70:2181,192.168.47.71:2181,192.168.47.72:2181 --topic messagetest

>hellp mymy

meme

[2018-03-14 11:47:31,269] ERROR Error when sending message to topic messagetest with key: null, value: 5 bytes with error: (org.apache.kafka.clients.producer.internals.ErrorLoggingCallback)

org.apache.kafka.common.errors.TimeoutException: Failed to update metadata after 60000 ms.

>hello

[2018-03-14 11:48:31,277] ERROR Error when sending message to topic messagetest with key: null, value: 0 bytes with error: (org.apache.kafka.clients.producer.internals.ErrorLoggingCallback)

org.apache.kafka.common.errors.TimeoutException: Failed to update metadata after 60000 ms.

>

Cause: the wrong port was used. It should be Kafka's 9092, not ZooKeeper's 2181.

Fix: use the correct port

[root@kafka70 ~]# /opt/kafka/bin/kafka-console-producer.sh --broker-list 192.168.47.70:9092,192.168.47.71:9092,192.168.47.72:9092 --topic messagetest

>hello

>mymy

>Yo!

>

3.5.3 Receiving messages fails with an error

[root@kafka71 ~]# /opt/kafka/bin/kafka-console-consumer.sh --zookeeper 192.168.47.70:2181,192.168.47.71:2181,192.168.47.72:2181 --topic messagetest --from-beginning

Using the ConsoleConsumer with old consumer is deprecated and will be removed in a future major release. Consider using the new consumer by passing [bootstrap-server] instead of [zookeeper].

[2018-03-14 12:02:01,648] ERROR Unknown error when running consumer: (kafka.tools.ConsoleConsumer$)

java.net.UnknownHostException: kafka71: kafka71: Name or service not known

at java.net.InetAddress.getLocalHost(InetAddress.java:1505)

at kafka.consumer.ZookeeperConsumerConnector.<init>(ZookeeperConsumerConnector.scala:135)

at kafka.consumer.ZookeeperConsumerConnector.<init>(ZookeeperConsumerConnector.scala:159)

at kafka.consumer.Consumer$.create(ConsumerConnector.scala:112)

at kafka.consumer.OldConsumer.<init>(BaseConsumer.scala:130)

at kafka.tools.ConsoleConsumer$.run(ConsoleConsumer.scala:72)

at kafka.tools.ConsoleConsumer$.main(ConsoleConsumer.scala:54)

at kafka.tools.ConsoleConsumer.main(ConsoleConsumer.scala)

Caused by: java.net.UnknownHostException: kafka71: Name or service not known

at java.net.Inet6AddressImpl.lookupAllHostAddr(Native Method)

at java.net.InetAddress$2.lookupAllHostAddr(InetAddress.java:928)

at java.net.InetAddress.getAddressesFromNameService(InetAddress.java:1323)

at java.net.InetAddress.getLocalHost(InetAddress.java:1500)

... 7 more

Cause: the hostname does not match the name that /etc/hosts resolves

[root@kafka71 ~]# cat /etc/hostname

kafka71

[root@kafka71 ~]# tail -3 /etc/hosts

192.168.47.70 kafka70

192.168.47.71 kafka71

192.168.47.72 kafka72

Fix: make the hostname of every host match its entry in /etc/hosts, then receive the messages again

Set the hostname on all hosts

[root@kafka70 ~]# hostname

kafka70

[root@kafka70 ~]# tail -3 /etc/hosts

192.168.47.70 kafka70

192.168.47.71 kafka71

192.168.47.72 kafka72

[root@kafka71 ~]# hostname

kafka71

[root@kafka71 ~]# tail -3 /etc/hosts

192.168.47.70 kafka70

192.168.47.71 kafka71

192.168.47.72 kafka72

[root@kafka72 ~]# hostname

kafka72

[root@kafka72 ~]# tail -3 /etc/hosts

192.168.47.70 kafka70

192.168.47.71 kafka71

192.168.47.72 kafka72

Receive the messages again

[root@kafka71 ~]# /opt/kafka/bin/kafka-console-consumer.sh --zookeeper 192.168.47.70:2181,192.168.47.71:2181,192.168.47.72:2181 --topic messagetest --from-beginning

Using the ConsoleConsumer with old consumer is deprecated and will be removed in a future major release. Consider using the new consumer by passing [bootstrap-server] instead of [zookeeper].

mymy

Yo!

hello

[root@kafka72 ~]# /opt/kafka/bin/kafka-console-consumer.sh --zookeeper 192.168.47.70:2181,192.168.47.71:2181,192.168.47.72:2181 --topic messagetest --from-beginning

Using the ConsoleConsumer with old consumer is deprecated and will be removed in a future major release. Consider using the new consumer by passing [bootstrap-server] instead of [zookeeper].

hello

mymy

Yo!
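The UnknownHostException above comes from the local hostname not being resolvable, so it can help to check each host before starting a consumer. The following is a minimal sketch; the function name host_in_hosts_file is an assumption of this example, and it only looks at a hosts file, not at DNS.

```shell
# Succeed if the given hostname appears as a name in the given hosts file.
# Usage: host_in_hosts_file <hostname> <hosts-file>
host_in_hosts_file() {
    awk -v name="$1" '
        $1 ~ /^#/ { next }    # skip comment lines
        { for (i = 2; i <= NF; i++) if ($i == name) found = 1 }
        END { exit (found ? 0 : 1) }' "$2"
}

# Check the local machine
host_in_hosts_file "$(hostname)" /etc/hosts ||
    echo "hostname $(hostname) has no entry in /etc/hosts"
```

Running this on each of the three nodes should print nothing once the hostnames and /etc/hosts agree.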
