Storm-wordcount实时统计单词次数

一、本地模式

1、WordCountSpout类

package com.demo.wc; import java.util.Map; import org.apache.storm.spout.SpoutOutputCollector;

import org.apache.storm.task.TopologyContext;

import org.apache.storm.topology.OutputFieldsDeclarer;

import org.apache.storm.topology.base.BaseRichSpout;

import org.apache.storm.tuple.Fields;

import org.apache.storm.tuple.Values; /**

* 需求:单词计数 hello world hello Beijing China

*

* 实现接口: IRichSpout IRichBolt

* 继承抽象类:BaseRichSpout BaseRichBolt 常用*/

public class WordCountSpout extends BaseRichSpout { //定义收集器

private SpoutOutputCollector collector; //发送数据

@Override

public void nextTuple() {

//1.发送数据 到bolt

collector.emit(new Values("I like China very much")); //2.设置延迟

try {

Thread.sleep(500);

} catch (InterruptedException e) {

e.printStackTrace();

}

} //创建收集器

@Override

public void open(Map arg0, TopologyContext arg1, SpoutOutputCollector collector) {

this.collector = collector;

} //声明描述

@Override

public void declareOutputFields(OutputFieldsDeclarer declarer) {

//起别名

declarer.declare(new Fields("wordcount"));

}

}

2、WordCountSplitBolt类

package com.demo.wc; import java.util.Map; import org.apache.storm.task.OutputCollector;

import org.apache.storm.task.TopologyContext;

import org.apache.storm.topology.OutputFieldsDeclarer;

import org.apache.storm.topology.base.BaseRichBolt;

import org.apache.storm.tuple.Fields;

import org.apache.storm.tuple.Tuple;

import org.apache.storm.tuple.Values; public class WordCountSplitBolt extends BaseRichBolt { //数据继续发送到下一个bolt

private OutputCollector collector; //业务逻辑

@Override

public void execute(Tuple in) {

//1.获取数据

String line = in.getStringByField("wordcount"); //2.切分数据

String[] fields = line.split(" "); //3.<单词,1> 发送出去 下一个bolt(累加求和)

for (String w : fields) {

collector.emit(new Values(w, 1));

}

} //初始化

@Override

public void prepare(Map arg0, TopologyContext arg1, OutputCollector collector) {

this.collector = collector;

} //声明描述

@Override

public void declareOutputFields(OutputFieldsDeclarer declarer) {

declarer.declare(new Fields("word", "sum"));

}

}

3、WordCountBolt类

package com.demo.wc; import java.util.HashMap;

import java.util.Map; import org.apache.storm.task.OutputCollector;

import org.apache.storm.task.TopologyContext;

import org.apache.storm.topology.OutputFieldsDeclarer;

import org.apache.storm.topology.base.BaseRichBolt;

import org.apache.storm.tuple.Tuple; public class WordCountBolt extends BaseRichBolt{ private Map<String, Integer> map = new HashMap<>(); //累加求和

@Override

public void execute(Tuple in) {

//1.获取数据

String word = in.getStringByField("word");

Integer sum = in.getIntegerByField("sum"); //2.业务处理

if (map.containsKey(word)) {

//之前出现几次

Integer count = map.get(word);

//已有的

map.put(word, count + sum);

} else {

map.put(word, sum);

} //3.打印控制台

System.out.println(Thread.currentThread().getName() + "\t 单词为:" + word + "\t 当前已出现次数为:" + map.get(word));

} @Override

public void prepare(Map arg0, TopologyContext arg1, OutputCollector arg2) {

} @Override

public void declareOutputFields(OutputFieldsDeclarer arg0) {

}

}

4、WordCountDriver类

package com.demo.wc; import org.apache.storm.Config;

import org.apache.storm.LocalCluster;

import org.apache.storm.topology.TopologyBuilder;

import org.apache.storm.tuple.Fields; public class WordCountDriver {

public static void main(String[] args) {

//1.hadoop->Job storm->topology 创建拓扑

TopologyBuilder builder = new TopologyBuilder();

//2.指定设置

builder.setSpout("WordCountSpout", new WordCountSpout(), 1);

builder.setBolt("WordCountSplitBolt", new WordCountSplitBolt(), 4).fieldsGrouping("WordCountSpout", new Fields("wordcount"));

builder.setBolt("WordCountBolt", new WordCountBolt(), 2).fieldsGrouping("WordCountSplitBolt", new Fields("word")); //3.创建配置信息

Config conf = new Config(); //4.提交任务

LocalCluster localCluster = new LocalCluster();

localCluster.submitTopology("wordcounttopology", conf, builder.createTopology());

}

}

5、直接运行(4)里面的main方法即可启动本地模式。

二、集群模式

前三个类和上面本地模式一样,第4个类WordCountDriver和本地模式有点区别

package com.demo.wc; import org.apache.storm.Config;

import org.apache.storm.StormSubmitter;

import org.apache.storm.topology.TopologyBuilder;

import org.apache.storm.tuple.Fields; public class WordCountDriver {

public static void main(String[] args) {

//1.hadoop->Job storm->topology 创建拓扑

TopologyBuilder builder = new TopologyBuilder();

//2.指定设置

builder.setSpout("WordCountSpout", new WordCountSpout(), 1);

builder.setBolt("WordCountSplitBolt", new WordCountSplitBolt(), 4).fieldsGrouping("WordCountSpout", new Fields("wordcount"));

builder.setBolt("WordCountBolt", new WordCountBolt(), 2).fieldsGrouping("WordCountSplitBolt", new Fields("word")); //3.创建配置信息

Config conf = new Config();

//conf.setNumWorkers(10); //集群模式

try {

StormSubmitter.submitTopology(args[0], conf, builder.createTopology());

} catch (Exception e) {

e.printStackTrace();

} //4.提交任务

//LocalCluster localCluster = new LocalCluster();

//localCluster.submitTopology("wordcounttopology", conf, builder.createTopology());

}

}

把程序打成jar包放在启动了Storm集群的机器里,在stormwordcount.jar所在目录下执行

storm jar stormwordcount.jar com.demo.wc.WordCountDriver wordcount01

即可启动程序。

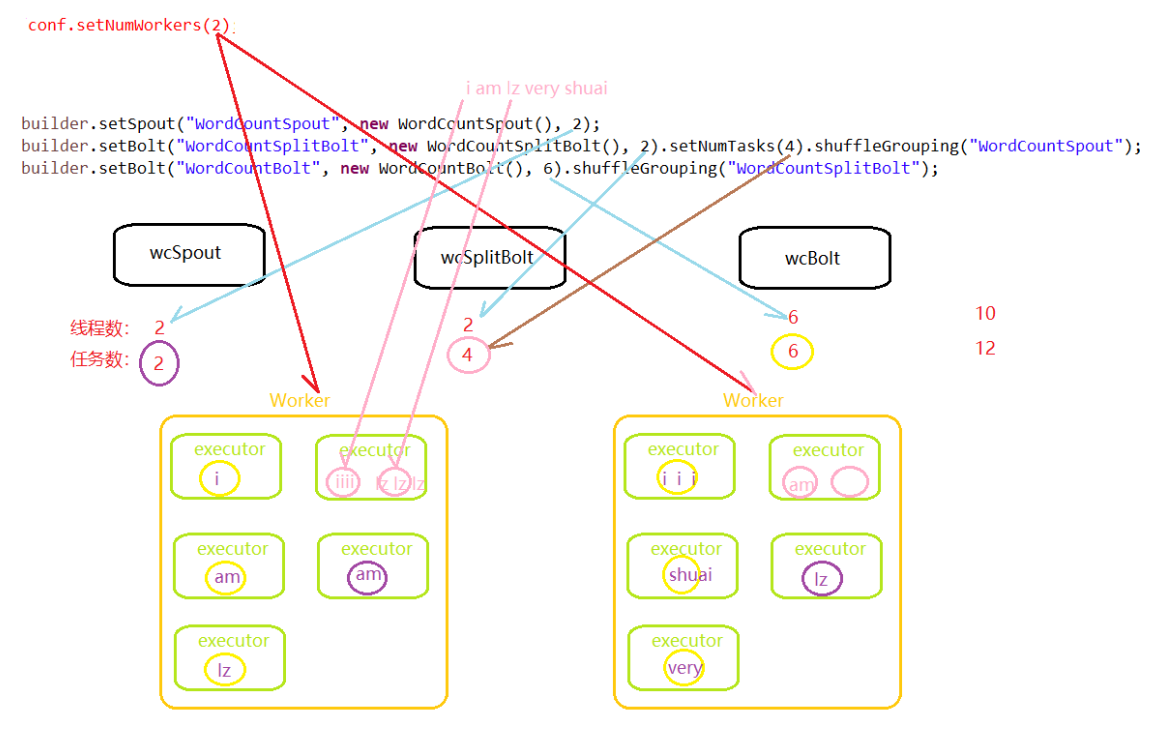

三、并发度和分组策略

1、WordCountDriver_Shuffle类

package com.demo.wc; import org.apache.storm.Config;

import org.apache.storm.LocalCluster;

import org.apache.storm.topology.TopologyBuilder; public class WordCountDriver_Shuffle {

public static void main(String[] args) {

//1.hadoop->Job storm->topology 创建拓扑

TopologyBuilder builder = new TopologyBuilder();

//2.指定设置

builder.setSpout("WordCountSpout", new WordCountSpout(), 2);

builder.setBolt("WordCountSplitBolt", new WordCountSplitBolt(), 2).setNumTasks(4).shuffleGrouping("WordCountSpout");

builder.setBolt("WordCountBolt", new WordCountBolt(), 6).shuffleGrouping("WordCountSplitBolt"); //3.创建配置信息

Config conf = new Config();

//conf.setNumWorkers(2); //集群模式

// try {

// StormSubmitter.submitTopology(args[0], conf, builder.createTopology());

// } catch (Exception e) {

// e.printStackTrace();

// } //4.提交任务

LocalCluster localCluster = new LocalCluster();

localCluster.submitTopology("wordcounttopology", conf, builder.createTopology());

}

}

2、并发度与分组策略

Storm-wordcount实时统计单词次数的更多相关文章

- lucene 统计单词次数(词频tf)并进行排序

public class WordCount { static Directory directory; // 创建分词器 static Analyzer analyzer = new IKAnaly ...

- 大数据学习day32-----spark12-----1. sparkstreaming(1.1简介,1.2 sparkstreaming入门程序(统计单词个数,updateStageByKey的用法,1.3 SparkStreaming整合Kafka,1.4 SparkStreaming获取KafkaRDD的偏移量,并将偏移量写入kafka中)

1. Spark Streaming 1.1 简介(来源:spark官网介绍) Spark Streaming是Spark Core API的扩展,其是支持可伸缩.高吞吐量.容错的实时数据流处理.Sp ...

- Storm基础概念与单词统计示例

Storm基本概念 Storm是一个分布式的.可靠地.容错的数据流处理系统.Storm分布式计算结构称为Topology(拓扑)结构,顾名思义,与拓扑图十分类似.该拓扑图主要由数据流Stream.数据 ...

- Storm+HBase实时实践

1.HBase Increment计数器 hbase counter的原理: read+count+write,正好完成,就是讲key的value读出,若存在,则完成累加,再写入,若不存在,则按&qu ...

- 3、SpringBoot 集成Storm wordcount

WordCountBolt public class WordCountBolt extends BaseBasicBolt { private Map<String,Integer> c ...

- Storm WordCount Topology学习

1,分布式单词计数的流程 首先要有数据源,在SentenceSpout中定义了一个字符串数组sentences来模拟数据源.字符串数组中的每句话作为一个tuple发射.其实,SplitBolt接收Se ...

- 使用HDFS完成wordcount词频统计

任务需求 统计HDFS上文件的wordcount,并将统计结果输出到HDFS 功能拆解 读取HDFS文件 业务处理(词频统计) 缓存处理结果 将结果输出到HDFS 数据准备 事先往HDFS上传需要进行 ...

- C++读取文件统计单词个数及频率

1.Github链接 GitHub链接地址https://github.com/Zzwenm/PersonProject-C2 2.PSP表格 PSP2.1 Personal Software Pro ...

- Hadoop基础学习(一)分析、编写并执行WordCount词频统计程序

版权声明:本文为博主原创文章,未经博主同意不得转载. https://blog.csdn.net/jiq408694711/article/details/34181439 前面已经在我的Ubuntu ...

随机推荐

- 延时NSTimer

import Foundationimport UIKit class YijfkController:UIViewController{ override func viewDidLoad() { ...

- json中把非json格式的字符串转换成json对象再转换成json字符串

JSON.toJson(str).toString()假如key和value都是整数的时候,先转换成jsonObject对象,再转换成json字符串

- mysql学习笔记1---mysql ERROR 1045 (28000): 错误解决办法(续:深入分析)

在命令行输入mysql -u root –p,输入密码,或通过工具连接数据库时,经常出现下面的错误信息,详细该错误信息很多人在使用MySQL时都遇到过. ERROR 1045 (28000): Acc ...

- No output operations registered, so nothing to execute

SparkStreaming和KafKa结合报错!报错之前代码如下: object KafkaWordCount{ val updateFunc = (iter:Iterator[(String,Se ...

- plsql programming 01 plsql概述

授权 从 oracle 8i 开始, oracle 用通过提供 authid 子句为 pl/sql 的执行授权模型, 这样我们可以选择使用 authid current_user(调用者权限)来执行这 ...

- html5shiv.js分析-读源码之javascript系列

xiaolingzi 发表于 2012-05-31 23:42:29 首先,我们先了解一下html5shiv.js是什么. html5shiv.js是一套实现让ie低版本等浏览器支持html5标签的解 ...

- 如果想要跨平台,在file类下有separtor(),返回锁出平台的文件分隔符

对于命令:File f2=new file(“d:\\abc\\789\\1.txt”) 这个命令不具备跨平台性,因为不同的OS的文件系统很不相同. 如果想要跨平台,在file类下有separtor( ...

- Linux Shell Vim 经常使用命令、使用技巧总结

前言 本文总结了自己实际开发中的经常使用命令,不定时更新,方便自己和其它人查阅. 如有其它提高效率的使用技巧.欢迎留言. 本文地址 http://blog.csdn.net/never_cxb/art ...

- centos7安装avahi

sudo yum install avahi sudo yum install avahi-tools 转自: http://unix.stackexchange.com/questions/1829 ...

- WPF 在TextBox失去焦点时检测数据,出错重新获得焦点解决办法

WPF 在TextBox失去焦点时检测数据,出错重新获得焦点解决办法 在WPF的TextBox的LostFocus事件中直接使用Focus()方法会出现死循环的问题 正确的使用方式有2中方法: 方法一 ...