Andrew Ng Machine Learning, Week 3: Logistic Regression

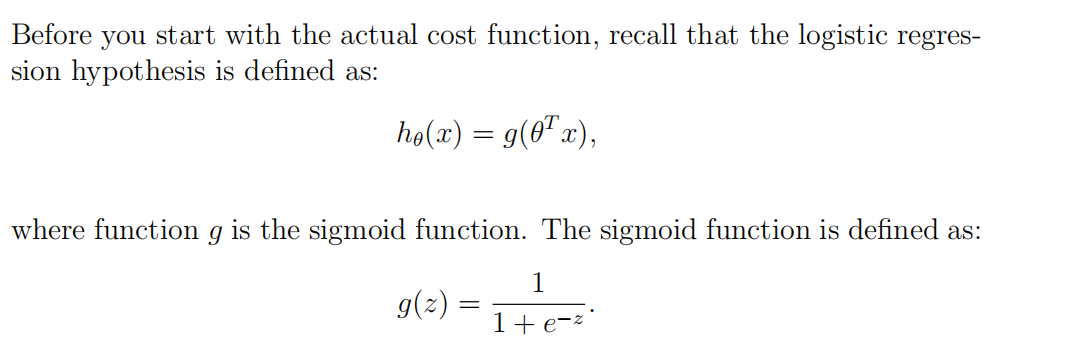

1. Sigmoid function

function g = sigmoid(z)
%SIGMOID Compute sigmoid function
%   g = SIGMOID(z) computes the sigmoid of z.

% You need to return the following variables correctly
g = zeros(size(z));

% ====================== YOUR CODE HERE ======================
% Instructions: Compute the sigmoid of each value of z (z can be a matrix,
%               vector or scalar).

% Element-wise operators (./ and exp) make this work for scalars, vectors and matrices
g = 1 ./ (1 + exp(-z));

% =============================================================

end
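A minimal sanity check for the function above, written as a standalone Octave snippet. It assumes an anonymous-function stand-in for sigmoid.m (same formula) so it runs without the assignment files: sigmoid(0) should be exactly 0.5, large positive inputs approach 1, large negative inputs approach 0, and a matrix comes back with the same shape.

```octave
% Anonymous stand-in for sigmoid.m (assumption: same formula as above)
sigmoid = @(z) 1 ./ (1 + exp(-z));

s0 = sigmoid(0)            % exactly 0.5, since exp(0) = 1
Z = [-10 0; 0 10];
G = sigmoid(Z)             % same 2x2 shape; entries near 0, 0.5, 0.5, near 1
```

Writing `1 / (1 + exp(-z))` with `/` instead of `./` is a common slip: for a vector z it performs matrix right division, which either raises an error or returns a result whose shape does not match z.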

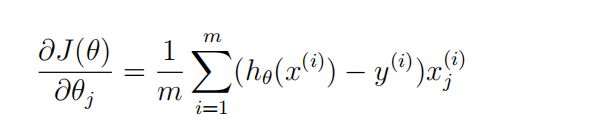

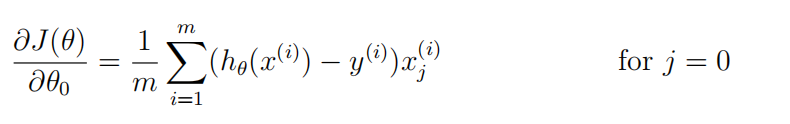

2. Logistic Regression Cost & Logistic Regression Gradient

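First, write out the hypothesis h(x): the sigmoid function applied to X*theta.

Then, for gradient(j), it helps to draw the matrices out on scratch paper first, look at how their dimensions line up, and then solve it with vectors.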
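Sketching the shapes is what turns the per-component formula into one matrix product. Assuming the usual exercise layout, X is m x (n+1) (one example per row, with a leading column of ones), theta is (n+1) x 1 and y is m x 1; the cost and gradient that costFunction computes are then:

```latex
h = g(X\theta), \qquad g(z) = \frac{1}{1 + e^{-z}}

J(\theta) = \frac{1}{m} \sum_{i=1}^{m} \left[ -y^{(i)} \log h^{(i)} - \left(1 - y^{(i)}\right) \log\left(1 - h^{(i)}\right) \right]

\frac{\partial J(\theta)}{\partial \theta_j} = \frac{1}{m} \sum_{i=1}^{m} \left( h^{(i)} - y^{(i)} \right) x_j^{(i)}
\quad \Longrightarrow \quad
\nabla_{\theta} J = \frac{1}{m} X^{\top} (h - y)
```

The sum over i in the partial derivative is column j of X dotted with (h - y), i.e. row j of X' times (h - y); stacking all j gives `X' * (h - y)`, an (n+1) x 1 vector with the same shape as theta.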

function [J, grad] = costFunction(theta, X, y)
%COSTFUNCTION Compute cost and gradient for logistic regression
%   J = COSTFUNCTION(theta, X, y) computes the cost of using theta as the
%   parameter for logistic regression and the gradient of the cost
%   w.r.t. the parameters.

% Initialize some useful values
m = length(y); % number of training examples

% You need to return the following variables correctly
J = 0;
grad = zeros(size(theta));

% ====================== YOUR CODE HERE ======================
% Instructions: Compute the cost of a particular choice of theta.
%               You should set J to the cost.
%               Compute the partial derivatives and set grad to the partial
%               derivatives of the cost w.r.t. each parameter in theta
%
% Note: grad should have the same dimensions as theta
%

h = sigmoid(X * theta);   % hypothesis for every example, m x 1

% Accumulate the cross-entropy cost one example at a time
for i = 1:m
  J = J + 1/m * (-y(i) * log(h(i)) - (1 - y(i)) * log(1 - h(i)));
endfor

% Vectorized gradient: (n+1) x m times m x 1 gives (n+1) x 1
grad = 1/m * X' * (h - y);

% =============================================================

end
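The loop over examples is correct, but the cost also collapses to two inner products. A minimal vectorized sketch, assuming a hypothetical name cost_function_vec (not part of the assignment files) and an inlined sigmoid so the snippet is self-contained; the tiny dataset is made up for illustration.

```octave
1;  % Octave: a leading statement makes this file a script that may define functions

function [J, grad] = cost_function_vec(theta, X, y)
  % Hypothetical vectorized variant of costFunction
  m = length(y);
  h = 1 ./ (1 + exp(-(X * theta)));                % sigmoid(X*theta), m x 1
  J = -(y' * log(h) + (1 - y)' * log(1 - h)) / m;  % per-example costs summed as dot products
  grad = X' * (h - y) / m;                         % (n+1) x 1, same shape as theta
end

% Made-up data: bias column of ones plus one feature
X = [1 1; 1 2; 1 3];
y = [0; 1; 1];
[J, grad] = cost_function_vec(zeros(2, 1), X, y)
```

With theta all zeros every h is 0.5, so the cost is log(2) ≈ 0.693 whatever the labels are; that makes a handy first check for either version.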

3. Predict

function p = predict(theta, X)
%PREDICT Predict whether the label is 0 or 1 using learned logistic
%regression parameters theta
%   p = PREDICT(theta, X) computes the predictions for X using a
%   threshold at 0.5 (i.e., if sigmoid(theta'*x) >= 0.5, predict 1)

m = size(X, 1); % Number of training examples

% You need to return the following variables correctly
p = zeros(m, 1);

% ====================== YOUR CODE HERE ======================
% Instructions: Complete the following code to make predictions using
%               your learned logistic regression parameters.
%               You should set p to a vector of 0's and 1's
%

p = sigmoid(X * theta);   % predicted probabilities, m x 1

% Threshold each probability at 0.5
for i = 1:m
  if (p(i) >= 0.5)
    p(i) = 1;
  else
    p(i) = 0;
  end
endfor

% =========================================================================

end
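The thresholding loop can also be replaced by one element-wise comparison. A minimal sketch, assuming an anonymous sigmoid stand-in and a hypothetical predict_vec name (neither is part of the assignment files); the data and labels are made up for illustration.

```octave
% Anonymous stand-in for sigmoid.m (assumption: same formula as section 1)
sigmoid = @(z) 1 ./ (1 + exp(-z));

% Hypothetical one-line replacement for the loop in predict.m:
% ">= 0.5" gives a logical vector, double() converts it to 0/1 values
predict_vec = @(theta, X) double(sigmoid(X * theta) >= 0.5);

X = [1 -2; 1 0; 1 3];
theta = [0; 1];                  % so X*theta = [-2; 0; 3]
p = predict_vec(theta, X)        % [0; 1; 1]: z = 0 lands exactly on 0.5 and is predicted as 1

% Training accuracy as a percentage, given (made-up) true labels y
y = [0; 1; 0];
accuracy = mean(double(p == y)) * 100
```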

4. Regularized Logistic Regression Cost & Regularized Logistic Regression Gradient

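Things to note:

First, indices in Octave start at 1, so the bias parameter θ_0 is stored in theta(1). It is not regularized, which is why theta(1) is handled separately and the regularization loops start at 2.

Second, for gradient(j), we can use X(:,j) to get all the elements of column j.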
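For reference, these are the standard regularized cost and gradient that costFunctionReg implements, with h^(i) = g(θᵀx^(i)) and parameters θ_0, ..., θ_n in the course's 0-based math notation. The penalty sum starts at j = 1, so θ_0 is never regularized:

```latex
J(\theta) = \frac{1}{m} \sum_{i=1}^{m} \left[ -y^{(i)} \log h^{(i)} - \left(1 - y^{(i)}\right) \log\left(1 - h^{(i)}\right) \right] + \frac{\lambda}{2m} \sum_{j=1}^{n} \theta_j^2

\frac{\partial J(\theta)}{\partial \theta_0} = \frac{1}{m} \sum_{i=1}^{m} \left( h^{(i)} - y^{(i)} \right) x_0^{(i)}

\frac{\partial J(\theta)}{\partial \theta_j} = \frac{1}{m} \sum_{i=1}^{m} \left( h^{(i)} - y^{(i)} \right) x_j^{(i)} + \frac{\lambda}{m} \theta_j, \qquad j = 1, \dots, n
```

Shifting indices by one for Octave (θ_j lives in theta(j+1), x_j in X(:, j+1)) gives the loops in the code that follows.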

function [J, grad] = costFunctionReg(theta, X, y, lambda)
%COSTFUNCTIONREG Compute cost and gradient for logistic regression with regularization
%   J = COSTFUNCTIONREG(theta, X, y, lambda) computes the cost of using
%   theta as the parameter for regularized logistic regression and the
%   gradient of the cost w.r.t. the parameters.

% Initialize some useful values
m = length(y); % number of training examples

% You need to return the following variables correctly
J = 0;
grad = zeros(size(theta));

% ====================== YOUR CODE HERE ======================
% Instructions: Compute the cost of a particular choice of theta.
%               You should set J to the cost.
%               Compute the partial derivatives and set grad to the partial
%               derivatives of the cost w.r.t. each parameter in theta

h = sigmoid(X * theta);

% Unregularized cross-entropy cost
for i = 1:m
  J = J + 1/m * (-y(i) * log(h(i)) - (1 - y(i)) * log(1 - h(i)));
endfor

% Regularization term: theta(1) (the bias term theta_0) is skipped
for i = 2:length(theta)
  J = J + lambda / (2*m) * theta(i)^2;
endfor

% The bias term's gradient is not regularized
grad(1) = 1/m * (h - y)' * X(:, 1);

for i = 2:length(theta)
  grad(i) = 1/m * (h - y)' * X(:, i) + lambda/m * theta(i);
endfor

% =============================================================

end
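The two regularization loops can also be folded into vector operations by zeroing the first entry of a copy of theta. A minimal sketch, assuming a hypothetical name cost_function_reg_vec (not part of the assignment files), an inlined sigmoid, and made-up data chosen so X*theta = 0 and every h is 0.5.

```octave
1;  % Octave: a leading statement makes this file a script that may define functions

function [J, grad] = cost_function_reg_vec(theta, X, y, lambda)
  % Hypothetical vectorized variant of costFunctionReg
  m = length(y);
  h = 1 ./ (1 + exp(-(X * theta)));     % sigmoid(X*theta), m x 1

  reg = theta;
  reg(1) = 0;                           % theta(1) is the bias term theta_0: not penalized

  J = -(y' * log(h) + (1 - y)' * log(1 - h)) / m + lambda / (2 * m) * sum(reg .^ 2);
  grad = X' * (h - y) / m + lambda / m * reg;
end

% Made-up data: the feature column is all zeros, so only the penalty moves J away from log(2)
X = [1 0; 1 0];
y = [0; 1];
[J, grad] = cost_function_reg_vec([0; 3], X, y, 2)   % J = log(2) + 4.5, grad = [0; 3]
```

Copying theta and zeroing reg(1) keeps the bias term out of both the penalty and its gradient, which is what the loops starting at i = 2 do in the version above.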
