Linux Operations and Architecture Path: Offline Kubernetes Cluster Deployment, Pitfall-Free

I. Deployment Environment

1. Server plan

| OS | IP address | Hostname | CPU | Memory |
|---|---|---|---|---|
| CentOS 7.5 | 192.168.56.11 | k8s-node1 | 2C | 2G |
| CentOS 7.5 | 192.168.56.12 | k8s-node2 | 2C | 2G |
| CentOS 7.5 | 192.168.56.13 | k8s-node3 | 2C | 2G |

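2. Download the Kubernetes 1.10.1 packages

Official releases: https://github.com/kubernetes/kubernetes/releases

Baidu Netdisk mirror: https://pan.baidu.com/s/1sJgRhGWhvBDqFVVJbNvk-g

Extraction code: nirh

Package versions used:

Create the directory that holds the packages:

mkdir -p /opt/kubernetes/tools

Extract the downloaded packages:

cd /opt/kubernetes/tools   # upload the packages to this directory first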

tar zxf kubernetes.tar.gz

tar zxf kubernetes-server-linux-amd64.tar.gz

tar zxf kubernetes-client-linux-amd64.tar.gz

tar zxf kubernetes-node-linux-amd64.tar.gz

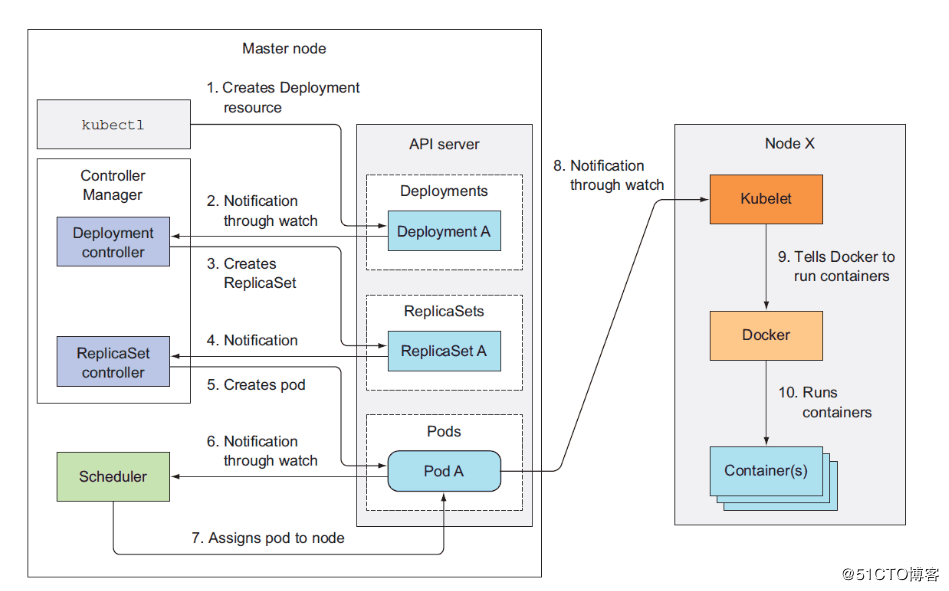

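3. Kubernetes workflow

4. Initialize the Kubernetes environment

① Set the hostname (run each command on its own node)

hostnamectl set-hostname k8s-node1

hostnamectl set-hostname k8s-node2

hostnamectl set-hostname k8s-node3

② Populate /etc/hosts so that the hostnames resolve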

cat > /etc/hosts <<EOF

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.56.11 k8s-node1 k8s-node1

192.168.56.12 k8s-node2 k8s-node2

192.168.56.13 k8s-node3 k8s-node3

EOF

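③ Disable SELinux and the firewall

systemctl disable firewalld.service

systemctl stop firewalld.service

systemctl disable NetworkManager

# Disable SELinux permanently (effective after a reboot) and switch to permissive mode right away

sed -i 's#SELINUX=enforcing#SELINUX=disabled#' /etc/selinux/config

setenforce 0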

④ Tune kernel parameters

cat > /etc/sysctl.conf <<EOF

# For more information, see sysctl.conf(5) and sysctl.d(5).

net.ipv6.conf.all.disable_ipv6 = 1

net.ipv6.conf.default.disable_ipv6 = 1

net.ipv6.conf.lo.disable_ipv6 = 1

vm.swappiness = 0

net.ipv4.neigh.default.gc_stale_time=120

net.ipv4.ip_forward = 1

# see details in https://help.aliyun.com/knowledge_detail/39428.html

net.ipv4.conf.all.rp_filter=0

net.ipv4.conf.default.rp_filter=0

net.ipv4.conf.default.arp_announce = 2

net.ipv4.conf.lo.arp_announce=2

net.ipv4.conf.all.arp_announce=2

# see details in https://help.aliyun.com/knowledge_detail/41334.html

net.ipv4.tcp_max_tw_buckets = 5000

net.ipv4.tcp_syncookies = 1

net.ipv4.tcp_max_syn_backlog = 1024

net.ipv4.tcp_synack_retries = 2

kernel.sysrq = 1

# iptables transparent bridging

# NOTE: kube-proxy requires the node OS to provide /sys/module/br_netfilter and to set bridge-nf-call-iptables=1. If this is not the case, kube-proxy only logs the failed check and keeps running, but some of the iptables rules it sets up will not work.

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.bridge.bridge-nf-call-arptables = 1

EOF

sysctl -p   # apply the kernel parameters
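The net.bridge.* keys only exist once the br_netfilter kernel module is loaded, so on a fresh host `sysctl -p` may complain about them until `modprobe br_netfilter` has been run (an assumption about your kernel setup, not something the steps above state). The sketch below checks that a sysctl-style file really carries the values you expect before you rely on it; the helper names `sysctl_file_value` and `check_sysctl_file` are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the value assigned to a key in a sysctl-style file.
# Accepts "key = value" and "key=value", skips comment lines; the last assignment wins.
sysctl_file_value() {
  local file=$1 key=$2
  awk -F= -v k="$key" '
    /^[[:space:]]*#/ { next }
    {
      name = $1; gsub(/[[:space:]]/, "", name)
      if (name == k) {
        val = $2; sub(/#.*/, "", val); gsub(/[[:space:]]/, "", val)
        found = val
      }
    }
    END { print found }' "$file"
}

# Report every key whose configured value differs from the expected one.
# Usage: check_sysctl_file FILE key=expected [key=expected ...]
check_sysctl_file() {
  local file=$1 rc=0 pair key want got
  shift
  for pair in "$@"; do
    key=${pair%%=*}; want=${pair#*=}
    got=$(sysctl_file_value "$file" "$key")
    if [ "$got" != "$want" ]; then
      echo "MISMATCH $key: want '$want', got '$got'"
      rc=1
    fi
  done
  return $rc
}
```

A typical call would be `check_sysctl_file /etc/sysctl.conf net.ipv4.ip_forward=1 net.bridge.bridge-nf-call-iptables=1`, run on each node before starting kube-proxy.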

⑤ Set up passwordless SSH from the deployment node to all other nodes

ssh-keygen -t rsa

ssh-copy-id k8s-node1

ssh-copy-id k8s-node2

ssh-copy-id k8s-node3

5. Deploy Docker (all nodes)

① Add the yum repository and install Docker

cd /etc/yum.repos.d/ && wget https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

yum install -y docker-ce

systemctl start docker

systemctl enable docker

② Create the installation directories (all nodes)

mkdir -p /opt/kubernetes/{cfg,bin,ssl,log}

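II. Install the CFSSL Certificate Tools and Create the CA Certificate

Unless stated otherwise, all operations are run on k8s-node1.

1. Download the CFSSL tools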

cd /opt/kubernetes/tools/

wget https://pkg.cfssl.org/R1.2/cfssl_linux-amd64

wget https://pkg.cfssl.org/R1.2/cfssljson_linux-amd64

wget https://pkg.cfssl.org/R1.2/cfssl-certinfo_linux-amd64

chmod +x cfssl_linux-amd64 cfssljson_linux-amd64 cfssl-certinfo_linux-amd64

mv cfssl_linux-amd64 /usr/local/bin/cfssl

mv cfssljson_linux-amd64 /usr/local/bin/cfssljson

mv cfssl-certinfo_linux-amd64 /usr/bin/cfssl-certinfo

2. Initialize cfssl

cd /opt/kubernetes/ssl

cfssl print-defaults config > config.json

cfssl print-defaults csr > csr.json

3. Create the JSON configuration file for the CA

cat > ca-config.json <<EOF

{

"signing": {

"default": {

"expiry": "8760h"

},

"profiles": {

"kubernetes": {

"usages": [

"signing",

"key encipherment",

"server auth",

"client auth"

],

"expiry": "8760h"

}

}

}

}

EOF
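The "expiry" field above is given in hours, which is easy to misread. A minimal sketch that converts such a value to days, assuming the pure-hours form used in this file (other duration units are rejected); the helper name `expiry_days` is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: convert a cfssl-style expiry given in hours (e.g. "8760h") to whole days.
expiry_days() {
  local hours=${1%h}
  case $hours in
    ''|*[!0-9]*) echo "unsupported expiry: $1" >&2; return 1 ;;
  esac
  # 10# forces base 10 so a leading zero is not treated as octal
  echo $(( 10#$hours / 24 ))
}

expiry_days 8760h   # prints 365
```

In other words, certificates signed with the "kubernetes" profile expire after one year and have to be reissued before then. `openssl x509 -in <cert>.pem -noout -enddate` prints the actual end date of an issued certificate.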

4. Create the JSON configuration file for the CA certificate signing request (CSR)

cat > ca-csr.json <<EOF

{

"CN": "kubernetes",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "BeiJing",

"L": "BeiJing",

"O": "k8s",

"OU": "System"

}

]

}

EOF

5. Generate the CA certificate ca.pem and key ca-key.pem

cfssl gencert -initca ca-csr.json | cfssljson -bare ca

[root@k8s-node1 ssl]# ll

total

-rw-r--r-- root root Oct : ca-config.json

-rw-r--r-- root root Oct : ca.csr

-rw-r--r-- root root Oct : ca-csr.json

-rw------- root root Oct : ca-key.pem

-rw-r--r-- root root Oct : ca.pem

-rw-r--r-- root root Oct : config.json

-rw-r--r-- root root Oct : csr.json

6. Distribute the CA files to k8s-node2 and k8s-node3 (run from /opt/kubernetes/ssl)

scp -rp /opt/kubernetes/ssl/ca.csr ca.pem ca-key.pem ca-config.json 192.168.56.12:/opt/kubernetes/ssl/

scp -rp /opt/kubernetes/ssl/ca.csr ca.pem ca-key.pem ca-config.json 192.168.56.13:/opt/kubernetes/ssl/
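The same pair of scp commands recurs throughout this guide, once per target node. A minimal sketch of a loop that does the same thing, assuming the two target IPs from the server plan and working passwordless SSH; the helper name `distribute` and the `DRY_RUN` switch are hypothetical conveniences, not part of the original procedure.

```shell
#!/usr/bin/env bash
# Nodes that receive files from k8s-node1 (IPs taken from the server plan).
NODES=(192.168.56.12 192.168.56.13)

# Hypothetical helper: copy one or more files to the same directory on every node.
# Usage: distribute DEST_DIR FILE...
# With DRY_RUN=1 the scp commands are only printed, not executed.
distribute() {
  local dest=$1 node
  shift
  for node in "${NODES[@]}"; do
    if [ "${DRY_RUN:-0}" = "1" ]; then
      echo "scp $* ${node}:${dest}"
    else
      scp "$@" "${node}:${dest}" || return 1
    fi
  done
}
```

For example, `DRY_RUN=1 distribute /opt/kubernetes/ssl/ ca.csr ca.pem ca-key.pem ca-config.json` prints the two commands of this step; dropping `DRY_RUN=1` runs them.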

III. etcd Cluster Deployment

1. Download and distribute the etcd binaries

cd /opt/kubernetes/tools

wget https://github.com/coreos/etcd/releases/download/v3.2.18/etcd-v3.2.18-linux-amd64.tar.gz

tar xf etcd-v3.2.18-linux-amd64.tar.gz

cd etcd-v3.2.18-linux-amd64/ && cp etcd etcdctl /opt/kubernetes/bin/

scp etcd etcdctl 192.168.56.12:/opt/kubernetes/bin/

scp etcd etcdctl 192.168.56.13:/opt/kubernetes/bin/

Configure the PATH environment variable by adding this line to ~/.bash_profile:

PATH=$PATH:$HOME/bin:/opt/kubernetes/bin

source ~/.bash_profile   # apply the change

2. Create the etcd certificate signing request

Replace the IP addresses in "hosts" with those of your own cluster.

cd /opt/kubernetes/ssl

cat > etcd-csr.json <<EOF

{

"CN": "etcd",

"hosts": [

"127.0.0.1",

"192.168.56.11",

"192.168.56.12",

"192.168.56.13"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "BeiJing",

"L": "BeiJing",

"O": "k8s",

"OU": "System"

}

]

}

EOF

3. Generate the etcd certificate and private key

cfssl gencert -ca=/opt/kubernetes/ssl/ca.pem \

-ca-key=/opt/kubernetes/ssl/ca-key.pem \

-config=/opt/kubernetes/ssl/ca-config.json \

-profile=kubernetes etcd-csr.json | cfssljson -bare etcd

The following certificate files are generated:

[root@k8s-node1 ssl]# ls -l etcd*

-rw-r--r-- root root Oct : etcd.csr

-rw-r--r-- root root Oct : etcd-csr.json

-rw------- root root Oct : etcd-key.pem

-rw-r--r-- root root Oct : etcd.pem

4. Distribute the etcd certificate files

scp /opt/kubernetes/ssl/etcd*.pem 192.168.56.12:/opt/kubernetes/ssl/

scp /opt/kubernetes/ssl/etcd*.pem 192.168.56.13:/opt/kubernetes/ssl/

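5. Create the etcd configuration file

After the file has been distributed, change ETCD_NAME and the node IP in ETCD_LISTEN_PEER_URLS, ETCD_LISTEN_CLIENT_URLS, ETCD_INITIAL_ADVERTISE_PEER_URLS and ETCD_ADVERTISE_CLIENT_URLS on each node.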

cat > /opt/kubernetes/cfg/etcd.conf <<EOF

#[member]

ETCD_NAME="etcd-node1"

ETCD_DATA_DIR="/var/lib/etcd/default.etcd"

#ETCD_SNAPSHOT_COUNTER=""

#ETCD_HEARTBEAT_INTERVAL=""

#ETCD_ELECTION_TIMEOUT=""

ETCD_LISTEN_PEER_URLS="https://192.168.56.11:2380"

ETCD_LISTEN_CLIENT_URLS="https://192.168.56.11:2379,https://127.0.0.1:2379"

#ETCD_MAX_SNAPSHOTS=""

#ETCD_MAX_WALS=""

#ETCD_CORS=""

#[cluster]

ETCD_INITIAL_ADVERTISE_PEER_URLS="https://192.168.56.11:2380"

# if you use different ETCD_NAME (e.g. test),

# set ETCD_INITIAL_CLUSTER value for this name, i.e. "test=http://..."

ETCD_INITIAL_CLUSTER="etcd-node1=https://192.168.56.11:2380,etcd-node2=https://192.168.56.12:2380,etcd-node3=https://192.168.56.13:2380"

ETCD_INITIAL_CLUSTER_STATE="new"

ETCD_INITIAL_CLUSTER_TOKEN="k8s-etcd-cluster"

ETCD_ADVERTISE_CLIENT_URLS="https://192.168.56.11:2379"

#[security]

ETCD_CLIENT_CERT_AUTH="true"

ETCD_CA_FILE="/opt/kubernetes/ssl/ca.pem"

ETCD_CERT_FILE="/opt/kubernetes/ssl/etcd.pem"

ETCD_KEY_FILE="/opt/kubernetes/ssl/etcd-key.pem"

ETCD_PEER_CLIENT_CERT_AUTH="true"

ETCD_PEER_CA_FILE="/opt/kubernetes/ssl/ca.pem"

ETCD_PEER_CERT_FILE="/opt/kubernetes/ssl/etcd.pem"

ETCD_PEER_KEY_FILE="/opt/kubernetes/ssl/etcd-key.pem"

EOF

6. Create the etcd systemd service

mkdir -p /var/lib/etcd   # create the etcd data directory on every node

cat > /etc/systemd/system/etcd.service <<'EOF'

[Unit]

Description=Etcd Server

After=network.target

[Service]

Type=notify

WorkingDirectory=/var/lib/etcd

EnvironmentFile=-/opt/kubernetes/cfg/etcd.conf

# set GOMAXPROCS to number of processors

ExecStart=/bin/bash -c "GOMAXPROCS=$(nproc) /opt/kubernetes/bin/etcd"

[Install]

WantedBy=multi-user.target

EOF

7. Distribute the etcd configuration and service files

scp /opt/kubernetes/cfg/etcd.conf 192.168.56.12:/opt/kubernetes/cfg/   # etcd configuration file

scp /opt/kubernetes/cfg/etcd.conf 192.168.56.13:/opt/kubernetes/cfg/

scp /etc/systemd/system/etcd.service 192.168.56.12:/etc/systemd/system/   # etcd service file

scp /etc/systemd/system/etcd.service 192.168.56.13:/etc/systemd/system/
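The copied etcd.conf still describes etcd-node1, so each receiving node has to adjust its member name and its own IP while leaving ETCD_INITIAL_CLUSTER untouched. A minimal sketch of that edit with sed, assuming the file layout shown in step 5; the helper name `localize_etcd_conf` is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: adapt node1's etcd.conf for another member.
# Rewrites ETCD_NAME and the node IP on every line except ETCD_INITIAL_CLUSTER,
# which must keep listing all members unchanged.
# Usage: localize_etcd_conf FILE NEW_NAME NEW_IP [OLD_NAME] [OLD_IP]
localize_etcd_conf() {
  local file=$1 name=$2 ip=$3
  local old_name=${4:-etcd-node1} old_ip=${5:-192.168.56.11}
  local old_ip_re=${old_ip//./\\.}   # escape the dots for use in the sed pattern
  sed \
    -e "s/^ETCD_NAME=\"${old_name}\"/ETCD_NAME=\"${name}\"/" \
    -e '/^ETCD_INITIAL_CLUSTER=/!'"s/${old_ip_re}/${ip}/g" \
    "$file" > "${file}.tmp" && mv "${file}.tmp" "$file"
}
```

On k8s-node2 this would be called as `localize_etcd_conf /opt/kubernetes/cfg/etcd.conf etcd-node2 192.168.56.12`, and on k8s-node3 with `etcd-node3 192.168.56.13`.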

8. Start the etcd service (on every etcd node)

systemctl daemon-reload

systemctl enable etcd

systemctl start etcd

systemctl status etcd

9. Verify the etcd cluster state

etcdctl --endpoints=https://192.168.56.11:2379 \

--ca-file=/opt/kubernetes/ssl/ca.pem \

--cert-file=/opt/kubernetes/ssl/etcd.pem \

--key-file=/opt/kubernetes/ssl/etcd-key.pem cluster-health

Cluster health output:

member 435fb0a8da627a4c is healthy: got healthy result from https://192.168.56.11:2379

member 6566e06d7343e1bb is healthy: got healthy result from https://192.168.56.11:2379

member ce7b884e428b6c8c is healthy: got healthy result from https://192.168.56.11:2379

cluster is healthy

IV. Master Node Services

Copy the kube-apiserver, kube-controller-manager and kube-scheduler binaries to /opt/kubernetes/bin/

cd /opt/kubernetes/tools/kubernetes

cp server/bin/kube-apiserver /opt/kubernetes/bin/

cp server/bin/kube-controller-manager /opt/kubernetes/bin/

cp server/bin/kube-scheduler /opt/kubernetes/bin/

1. Deploy the Kubernetes API server

① Create the JSON configuration file for the CSR

cd /opt/kubernetes/ssl

cat > kubernetes-csr.json <<EOF

{

"CN": "kubernetes",

"hosts": [

"127.0.0.1",

"192.168.56.11",

"10.1.0.1",

"kubernetes",

"kubernetes.default",

"kubernetes.default.svc",

"kubernetes.default.svc.cluster",

"kubernetes.default.svc.cluster.local"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "BeiJing",

"L": "BeiJing",

"O": "k8s",

"OU": "System"

}

]

}

EOF

② Generate the kubernetes certificate and private key

cfssl gencert -ca=/opt/kubernetes/ssl/ca.pem \

-ca-key=/opt/kubernetes/ssl/ca-key.pem \

-config=/opt/kubernetes/ssl/ca-config.json \

-profile=kubernetes kubernetes-csr.json | cfssljson -bare kubernetes

③ Distribute the certificates

cp kubernetes*.pem /opt/kubernetes/ssl/

scp kubernetes*.pem 192.168.56.12:/opt/kubernetes/ssl/

scp kubernetes*.pem 192.168.56.13:/opt/kubernetes/ssl/

④ Create the client token file used by kube-apiserver

head -c 16 /dev/urandom | od -An -t x | tr -d ' '

ad6d5bb607a186796d8861557df0d17f

cat > /opt/kubernetes/ssl/bootstrap-token.csv <<EOF

ad6d5bb607a186796d8861557df0d17f,kubelet-bootstrap,,"system:kubelet-bootstrap"

EOF
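The token in this file has to match the one passed later to `kubectl config set-credentials kubelet-bootstrap --token=...`, so it helps to keep it in a variable rather than copying it by hand. A minimal sketch, assuming the static token file layout `token,user,uid,"group"`; the helper names are hypothetical and the uid is left to the caller.

```shell
#!/usr/bin/env bash
# Generate a 32-character hex token from 16 random bytes.
# "-t x1" prints one byte at a time, so the result does not depend on byte order.
gen_bootstrap_token() {
  head -c 16 /dev/urandom | od -An -t x1 | tr -d ' \n'
}

# Hypothetical helper: print one token-file line (token,user,uid,"group").
# The uid is supplied by the caller; no particular value is assumed here.
bootstrap_csv_line() {
  local token=$1 uid=$2
  printf '%s,kubelet-bootstrap,%s,"system:kubelet-bootstrap"\n' "$token" "$uid"
}
```

Usage would look like `TOKEN=$(gen_bootstrap_token)` followed by `bootstrap_csv_line "$TOKEN" <uid> > /opt/kubernetes/ssl/bootstrap-token.csv`, with `$TOKEN` reused when the bootstrap kubeconfig is built.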

⑤ Create the basic username/password authentication file

cat > /opt/kubernetes/ssl/basic-auth.csv <<EOF

admin,admin,

readonly,readonly,

EOF

⑥ Create the Kubernetes API Server systemd service file

cat /usr/lib/systemd/system/kube-apiserver.service

[Unit]

Description=Kubernetes API Server

Documentation=https://github.com/GoogleCloudPlatform/kubernetes

After=network.target

[Service]

ExecStart=/opt/kubernetes/bin/kube-apiserver \

--admission-control=NamespaceLifecycle,LimitRanger,ServiceAccount,DefaultStorageClass,ResourceQuota,NodeRestriction \

--bind-address=192.168.56.11 \

--insecure-bind-address=127.0.0.1 \

--authorization-mode=Node,RBAC \

--runtime-config=rbac.authorization.k8s.io/v1 \

--kubelet-https=true \

--anonymous-auth=false \

--basic-auth-file=/opt/kubernetes/ssl/basic-auth.csv \

--enable-bootstrap-token-auth \

--token-auth-file=/opt/kubernetes/ssl/bootstrap-token.csv \

--service-cluster-ip-range=10.1.0.0/ \

--service-node-port-range=- \

--tls-cert-file=/opt/kubernetes/ssl/kubernetes.pem \

--tls-private-key-file=/opt/kubernetes/ssl/kubernetes-key.pem \

--client-ca-file=/opt/kubernetes/ssl/ca.pem \

--service-account-key-file=/opt/kubernetes/ssl/ca-key.pem \

--etcd-cafile=/opt/kubernetes/ssl/ca.pem \

--etcd-certfile=/opt/kubernetes/ssl/kubernetes.pem \

--etcd-keyfile=/opt/kubernetes/ssl/kubernetes-key.pem \

--etcd-servers=https://192.168.56.11:2379,https://192.168.56.12:2379,https://192.168.56.13:2379 \

--enable-swagger-ui=true \

--allow-privileged=true \

--audit-log-maxage= \

--audit-log-maxbackup= \

--audit-log-maxsize= \

--audit-log-path=/opt/kubernetes/log/api-audit.log \

--event-ttl=1h \

--v= \

--logtostderr=false \

--log-dir=/opt/kubernetes/log

Restart=on-failure

RestartSec=

Type=notify

LimitNOFILE=

[Install]

WantedBy=multi-user.target

⑦ Start the API Server service

systemctl daemon-reload

systemctl enable kube-apiserver

systemctl start kube-apiserver

2. Deploy the Controller Manager

① Create the Controller Manager systemd service file

cat /usr/lib/systemd/system/kube-controller-manager.service

[Unit]

Description=Kubernetes Controller Manager

Documentation=https://github.com/GoogleCloudPlatform/kubernetes

[Service]

ExecStart=/opt/kubernetes/bin/kube-controller-manager \

--address=127.0.0.1 \

--master=http://127.0.0.1:8080 \

--allocate-node-cidrs=true \

--service-cluster-ip-range=10.1.0.0/ \

--cluster-cidr=10.2.0.0/16 \

--cluster-name=kubernetes \

--cluster-signing-cert-file=/opt/kubernetes/ssl/ca.pem \

--cluster-signing-key-file=/opt/kubernetes/ssl/ca-key.pem \

--service-account-private-key-file=/opt/kubernetes/ssl/ca-key.pem \

--root-ca-file=/opt/kubernetes/ssl/ca.pem \

--leader-elect=true \

--v= \

--logtostderr=false \

--log-dir=/opt/kubernetes/log

Restart=on-failure

RestartSec=

[Install]

WantedBy=multi-user.target

② Start the Controller Manager

systemctl daemon-reload

systemctl enable kube-controller-manager

systemctl start kube-controller-manager

3. Deploy the Kubernetes Scheduler

① Create the Kubernetes Scheduler systemd service file

cat /usr/lib/systemd/system/kube-scheduler.service

[Unit]

Description=Kubernetes Scheduler

Documentation=https://github.com/GoogleCloudPlatform/kubernetes

[Service]

ExecStart=/opt/kubernetes/bin/kube-scheduler \

--address=127.0.0.1 \

--master=http://127.0.0.1:8080 \

--leader-elect=true \

--v= \

--logtostderr=false \

--log-dir=/opt/kubernetes/log

Restart=on-failure

RestartSec=

[Install]

WantedBy=multi-user.target

② Start the Kubernetes Scheduler service

systemctl daemon-reload

systemctl enable kube-scheduler

systemctl start kube-scheduler

systemctl status kube-scheduler

4. Deploy the kubectl command-line tool

① Copy the binary

cp /opt/kubernetes/tools/kubernetes/client/bin/kubectl /opt/kubernetes/bin/

② Create the admin certificate signing request

cd /opt/kubernetes/ssl

cat > admin-csr.json << EOF

{

"CN": "admin",

"hosts": [],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "BeiJing",

"L": "BeiJing",

"O": "system:masters",

"OU": "System"

}

]

}

EOF

③ Generate the admin certificate and private key

cfssl gencert -ca=/opt/kubernetes/ssl/ca.pem \

-ca-key=/opt/kubernetes/ssl/ca-key.pem \

-config=/opt/kubernetes/ssl/ca-config.json \

-profile=kubernetes admin-csr.json | cfssljson -bare admin

The following files are generated:

[root@k8s-node1 ssl]# ls -l admin*

-rw-r--r-- root root Oct : admin.csr

-rw-r--r-- root root Oct : admin-csr.json

-rw------- root root Oct : admin-key.pem

-rw-r--r-- root root Oct : admin.pem

④ Set the cluster parameters

kubectl config set-cluster kubernetes \

--certificate-authority=/opt/kubernetes/ssl/ca.pem \

--embed-certs=true \

--server=https://192.168.56.11:6443

⑤ Set the client authentication parameters

kubectl config set-credentials admin \

--client-certificate=/opt/kubernetes/ssl/admin.pem \

--embed-certs=true \

--client-key=/opt/kubernetes/ssl/admin-key.pem

⑥ Set the context parameters

kubectl config set-context kubernetes \

--cluster=kubernetes \

--user=admin

⑦ Select the default context

kubectl config use-context kubernetes

⑧ Check the status of the cluster components with kubectl

[root@k8s-node1 ssl]# kubectl get cs

NAME STATUS MESSAGE ERROR

scheduler Healthy ok

controller-manager Healthy ok

etcd- Healthy {"health": "true"}

etcd- Healthy {"health": "true"}

etcd- Healthy {"health": "true"}

V. Worker Node Deployment

Copy the binaries needed by the worker nodes to the cluster nodes

cd /opt/kubernetes/tools/kubernetes/server/bin

cp kubelet kube-proxy /opt/kubernetes/bin/

scp kubelet kube-proxy 192.168.56.12:/opt/kubernetes/bin/

scp kubelet kube-proxy 192.168.56.13:/opt/kubernetes/bin/

1. Deploy kubelet

① Create the role binding

kubectl create clusterrolebinding kubelet-bootstrap --clusterrole=system:node-bootstrapper --user=kubelet-bootstrap

② Create the kubelet bootstrapping kubeconfig file and set the cluster parameters

kubectl config set-cluster kubernetes \

--certificate-authority=/opt/kubernetes/ssl/ca.pem \

--embed-certs=true \

--server=https://192.168.56.11:6443 \

--kubeconfig=bootstrap.kubeconfig

③ Set the client authentication parameters (the token must match the one in bootstrap-token.csv)

kubectl config set-credentials kubelet-bootstrap \

--token=ad6d5bb607a186796d8861557df0d17f \

--kubeconfig=bootstrap.kubeconfig

④ Set the context parameters

kubectl config set-context default \

--cluster=kubernetes \

--user=kubelet-bootstrap \

--kubeconfig=bootstrap.kubeconfig

⑤ Select the default context

kubectl config use-context default --kubeconfig=bootstrap.kubeconfig

⑥ Distribute the generated bootstrap.kubeconfig file

cp bootstrap.kubeconfig /opt/kubernetes/cfg

scp bootstrap.kubeconfig 192.168.56.12:/opt/kubernetes/cfg

scp bootstrap.kubeconfig 192.168.56.13:/opt/kubernetes/cfg

⑦ Configure CNI support for kubelet (run on the worker nodes)

mkdir -p /etc/cni/net.d

cat > /etc/cni/net.d/-default.conf << EOF

{

"name": "flannel",

"type": "flannel",

"delegate": {

"bridge": "docker0",

"isDefaultGateway": true,

"mtu":

}

}

EOF

⑧ Create the kubelet systemd service file

mkdir /var/lib/kubelet   # create the kubelet working directory

cat /usr/lib/systemd/system/kubelet.service

[Unit]

Description=Kubernetes Kubelet

Documentation=https://github.com/GoogleCloudPlatform/kubernetes

After=docker.service

Requires=docker.service

[Service]

WorkingDirectory=/var/lib/kubelet

ExecStart=/opt/kubernetes/bin/kubelet \

--address=192.168.56.11 \

--hostname-override=192.168.56.11 \

--pod-infra-container-image=mirrorgooglecontainers/pause-amd64:3.0 \

--experimental-bootstrap-kubeconfig=/opt/kubernetes/cfg/bootstrap.kubeconfig \

--kubeconfig=/opt/kubernetes/cfg/kubelet.kubeconfig \

--cert-dir=/opt/kubernetes/ssl \

--network-plugin=cni \

--cni-conf-dir=/etc/cni/net.d \

--cni-bin-dir=/opt/kubernetes/bin/cni \

--cluster-dns=10.1.0.2 \

--cluster-domain=cluster.local. \

--hairpin-mode hairpin-veth \

--allow-privileged=true \

--fail-swap-on=false \

--logtostderr=true \

--v= \

--logtostderr=false \

--log-dir=/opt/kubernetes/log

Restart=on-failure

RestartSec=

Distribute kubelet.service to the worker nodes, then change the IP in --address and --hostname-override to each node's own address

scp /usr/lib/systemd/system/kubelet.service 192.168.56.12:/usr/lib/systemd/system/

scp /usr/lib/systemd/system/kubelet.service 192.168.56.13:/usr/lib/systemd/system/

⑨ Start the kubelet service

systemctl daemon-reload

systemctl enable kubelet

systemctl start kubelet

View the CSR requests on the Master

[root@k8s-node1 ~]# kubectl get csr

NAME AGE REQUESTOR CONDITION

node-csr-JYT2V-hWMlex2hsYj913TgiXxBfKFKhoFX85VBwGOCQ 1m kubelet-bootstrap Pending

node-csr-VhlW-dP_O44Avewgz2A1zvV8vp3KSpIRMGFdjpE2RQk 1m kubelet-bootstrap Pending

Approve the kubelet TLS certificate requests

kubectl get csr|grep 'Pending' | awk 'NR>0{print $1}'| xargs kubectl certificate approve
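The grep in the one-liner above matches "Pending" anywhere on the line. A slightly stricter sketch is shown below, assuming the four-column `kubectl get csr` layout printed earlier, where CONDITION is the last column; the helper name `pending_csr_names` is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `kubectl get csr` output on stdin and print the names
# of the requests whose last column (CONDITION) is exactly "Pending".
pending_csr_names() {
  awk 'NR > 1 && $NF == "Pending" { print $1 }'
}
```

It would be used as `kubectl get csr | pending_csr_names | xargs -r kubectl certificate approve`; the `-r` flag (GNU xargs) skips the approve call when nothing is pending.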

Check the node status

[root@k8s-node1 ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

192.168.56.12 Ready <none> 37m v1.10.1

192.168.56.13 Ready <none> 37m v1.10.1

2. Deploy the Kubernetes Proxy service

① Install the packages kube-proxy needs for LVS (IPVS) mode

yum install -y ipvsadm ipset conntrack

② Create the kube-proxy certificate signing request

cd /opt/kubernetes/ssl/

cat > kube-proxy-csr.json <<EOF

{

"CN": "system:kube-proxy",

"hosts": [],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "BeiJing",

"L": "BeiJing",

"O": "k8s",

"OU": "System"

}

]

}

EOF

③ Generate the certificate

cfssl gencert -ca=/opt/kubernetes/ssl/ca.pem \

-ca-key=/opt/kubernetes/ssl/ca-key.pem \

-config=/opt/kubernetes/ssl/ca-config.json \

-profile=kubernetes kube-proxy-csr.json | cfssljson -bare kube-proxy

④ Distribute the certificate to the worker nodes

scp kube-proxy*.pem 192.168.56.12:/opt/kubernetes/ssl/

scp kube-proxy*.pem 192.168.56.13:/opt/kubernetes/ssl/

⑤ Create the kube-proxy kubeconfig file

kubectl config set-cluster kubernetes \

--certificate-authority=/opt/kubernetes/ssl/ca.pem \

--embed-certs=true \

--server=https://192.168.56.11:6443 \

--kubeconfig=kube-proxy.kubeconfig

kubectl config set-credentials kube-proxy \

--client-certificate=/opt/kubernetes/ssl/kube-proxy.pem \

--client-key=/opt/kubernetes/ssl/kube-proxy-key.pem \

--embed-certs=true \

--kubeconfig=kube-proxy.kubeconfig

kubectl config set-context default \

--cluster=kubernetes \

--user=kube-proxy \

--kubeconfig=kube-proxy.kubeconfig

kubectl config use-context default --kubeconfig=kube-proxy.kubeconfig

⑥ Distribute the kubeconfig file

cp kube-proxy.kubeconfig /opt/kubernetes/cfg/

scp kube-proxy.kubeconfig 192.168.56.12:/opt/kubernetes/cfg/

scp kube-proxy.kubeconfig 192.168.56.13:/opt/kubernetes/cfg/

⑦ Create the kube-proxy systemd service file

mkdir /var/lib/kube-proxy   # create the kube-proxy working directory

cat /usr/lib/systemd/system/kube-proxy.service

[Unit]

Description=Kubernetes Kube-Proxy Server

Documentation=https://github.com/GoogleCloudPlatform/kubernetes

After=network.target

[Service]

WorkingDirectory=/var/lib/kube-proxy

ExecStart=/opt/kubernetes/bin/kube-proxy \

--bind-address=192.168.56.11 \

--hostname-override=192.168.56.11 \

--kubeconfig=/opt/kubernetes/cfg/kube-proxy.kubeconfig \

--masquerade-all \

--feature-gates=SupportIPVSProxyMode=true \

--proxy-mode=ipvs \

--ipvs-min-sync-period=5s \

--ipvs-sync-period=5s \

--ipvs-scheduler=rr \

--logtostderr=true \

--v= \

--logtostderr=false \

--log-dir=/opt/kubernetes/log

Restart=on-failure

RestartSec=

LimitNOFILE=

[Install]

WantedBy=multi-user.target

⑧ Distribute the kube-proxy service file to the worker nodes

scp /usr/lib/systemd/system/kube-proxy.service 192.168.56.12:/usr/lib/systemd/system/

scp /usr/lib/systemd/system/kube-proxy.service 192.168.56.13:/usr/lib/systemd/system/

⑨ Start the kube-proxy service

systemctl daemon-reload

systemctl enable kube-proxy

systemctl start kube-proxy

Check the LVS state on the worker nodes

[root@k8s-node2 ~]# ipvsadm -L -n

IP Virtual Server version 1.2. (size=)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 10.1.0.1: rr persistent

-> 192.168.56.11: Masq

[root@k8s-node3 ~]# ipvsadm -L -n

IP Virtual Server version 1.2. (size=)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 10.1.0.1: rr persistent

-> 192.168.56.11: Masq

VI. Flannel Network Deployment

1. Generate the Flannel certificate

cd /opt/kubernetes/ssl/

cat > flanneld-csr.json <<EOF

{

"CN": "flanneld",

"hosts": [],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "BeiJing",

"L": "BeiJing",

"O": "k8s",

"OU": "System"

}

]

}

EOF

cfssl gencert -ca=/opt/kubernetes/ssl/ca.pem \

-ca-key=/opt/kubernetes/ssl/ca-key.pem \

-config=/opt/kubernetes/ssl/ca-config.json \

-profile=kubernetes flanneld-csr.json | cfssljson -bare flanneld

2. Distribute the certificate to the worker nodes

scp flanneld*.pem 192.168.56.12:/opt/kubernetes/ssl/

scp flanneld*.pem 192.168.56.13:/opt/kubernetes/ssl/

3. Distribute the Flannel binaries to the worker nodes

① Download and extract Flannel

cd /opt/kubernetes/tools

wget https://github.com/coreos/flannel/releases/download/v0.10.0/flannel-v0.10.0-linux-amd64.tar.gz

tar xf flannel-v0.10.0-linux-amd64.tar.gz

cp flanneld mk-docker-opts.sh /opt/kubernetes/bin/

② Distribute to the nodes

scp flanneld mk-docker-opts.sh 192.168.56.12:/opt/kubernetes/bin/

scp flanneld mk-docker-opts.sh 192.168.56.13:/opt/kubernetes/bin/

③ Copy the helper script to /opt/kubernetes/bin on every node

cd /opt/kubernetes/tools/kubernetes/cluster/centos/node/bin

cp remove-docker0.sh /opt/kubernetes/bin/

scp remove-docker0.sh 192.168.56.12:/opt/kubernetes/bin/

scp remove-docker0.sh 192.168.56.13:/opt/kubernetes/bin/

4. Create the Flannel configuration file

cat > /opt/kubernetes/cfg/flannel <<EOF

FLANNEL_ETCD="-etcd-endpoints=https://192.168.56.11:2379,https://192.168.56.12:2379,https://192.168.56.13:2379"

FLANNEL_ETCD_KEY="-etcd-prefix=/kubernetes/network"

FLANNEL_ETCD_CAFILE="--etcd-cafile=/opt/kubernetes/ssl/ca.pem"

FLANNEL_ETCD_CERTFILE="--etcd-certfile=/opt/kubernetes/ssl/flanneld.pem"

FLANNEL_ETCD_KEYFILE="--etcd-keyfile=/opt/kubernetes/ssl/flanneld-key.pem"

EOF

Distribute to the worker nodes

scp /opt/kubernetes/cfg/flannel 192.168.56.12:/opt/kubernetes/cfg/

scp /opt/kubernetes/cfg/flannel 192.168.56.13:/opt/kubernetes/cfg/

5. Create the Flannel systemd service file

The heredoc delimiter is quoted so that the shell leaves the ${FLANNEL_...} references for systemd to expand.

cat > /usr/lib/systemd/system/flannel.service <<'EOF'

[Unit]

Description=Flanneld overlay address etcd agent

After=network.target

Before=docker.service

[Service]

EnvironmentFile=-/opt/kubernetes/cfg/flannel

ExecStartPre=/opt/kubernetes/bin/remove-docker0.sh

ExecStart=/opt/kubernetes/bin/flanneld ${FLANNEL_ETCD} ${FLANNEL_ETCD_KEY} ${FLANNEL_ETCD_CAFILE} ${FLANNEL_ETCD_CERTFILE} ${FLANNEL_ETCD_KEYFILE}

ExecStartPost=/opt/kubernetes/bin/mk-docker-opts.sh -d /run/flannel/docker

Type=notify

[Install]

WantedBy=multi-user.target

RequiredBy=docker.service

EOF

Distribute to the worker nodes

scp /usr/lib/systemd/system/flannel.service 192.168.56.12:/usr/lib/systemd/system/

scp /usr/lib/systemd/system/flannel.service 192.168.56.13:/usr/lib/systemd/system/

6. Integrate Flannel with CNI

① Download the CNI plugins

# Official download page:

# https://github.com/containernetworking/plugins/releases

cd /opt/kubernetes/tools/

wget https://github.com/containernetworking/plugins/releases/download/v0.7.1/cni-plugins-amd64-v0.7.1.tgz

mkdir /opt/kubernetes/bin/cni

tar xf cni-plugins-amd64-v0.7.1.tgz -C /opt/kubernetes/bin/cni

② Distribute to the worker nodes

scp -r /opt/kubernetes/bin/cni/* 192.168.56.12:/opt/kubernetes/bin/cni/

scp -r /opt/kubernetes/bin/cni/* 192.168.56.13:/opt/kubernetes/bin/cni/

③ Create the etcd key (run once, on the Master only)

/opt/kubernetes/bin/etcdctl --ca-file /opt/kubernetes/ssl/ca.pem --cert-file /opt/kubernetes/ssl/flanneld.pem --key-file /opt/kubernetes/ssl/flanneld-key.pem \

--no-sync -C https://192.168.56.11:2379,https://192.168.56.12:2379,https://192.168.56.13:2379 \

mk /kubernetes/network/config '{ "Network": "10.2.0.0/16", "Backend": { "Type": "vxlan", "VNI": 1 }}' >/dev/null 2>&1

④ Start the flannel service

systemctl daemon-reload

systemctl enable flannel

chmod +x /opt/kubernetes/bin/*

systemctl start flannel

7. Configure Docker to use Flannel

① Edit /usr/lib/systemd/system/docker.service

[Unit]

After=network-online.target firewalld.service flannel.service

Wants=network-online.target

Requires=flannel.service

Description=Docker Application Container Engine

Documentation=https://docs.docker.com

BindsTo=containerd.service

#After=network-online.target firewalld.service containerd.service

#Wants=network-online.target

#Requires=docker.socket

[Service]

EnvironmentFile=-/run/flannel/docker

ExecStart=/usr/bin/dockerd $DOCKER_OPTS

② Distribute to the worker nodes

scp /usr/lib/systemd/system/docker.service 192.168.56.12:/usr/lib/systemd/system/

scp /usr/lib/systemd/system/docker.service 192.168.56.13:/usr/lib/systemd/system

③ Restart Docker

systemctl daemon-reload

systemctl restart docker

VII. Create a Service to Test the Cluster

1. Create a test deployment

kubectl run net-test --image=alpine --replicas=2 sleep 360000

2. Check which IPs the Pods received

[root@k8s-node1 ~]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE

net-test-5767cb94df-qhznf / Running 3m 10.2.64.252 192.168.56.13

net-test-5767cb94df-rlq2c / Running 3m 10.2.26.249 192.168.56.12

3. Test connectivity

ping 10.2.64.252
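Pinging a single Pod only exercises one node's overlay path. A minimal sketch that extracts every Pod IP from `kubectl get pod -o wide` so each can be pinged in turn, assuming the single-token column layout of Kubernetes 1.10 (NAME READY STATUS RESTARTS AGE IP NODE); the helper name `pod_ips` is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `kubectl get pod -o wide` output on stdin and print the
# value of the IP column for every pod, locating the column by its header name.
pod_ips() {
  awk 'NR == 1 { for (i = 1; i <= NF; i++) if ($i == "IP") col = i; next }
       col && $col != "<none>" { print $col }'
}
```

Usage: `for ip in $(kubectl get pod -o wide | pod_ips); do ping -c 3 "$ip"; done`. Running this from more than one node confirms that the Flannel overlay works in both directions.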

VIII. Essential Kubernetes Add-ons

1. CoreDNS

① YAML file

cat /srv/addons/coredns/coredns.yaml

apiVersion: v1
kind: ServiceAccount
metadata:
  name: coredns
  namespace: kube-system
  labels:
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    kubernetes.io/bootstrapping: rbac-defaults
    addonmanager.kubernetes.io/mode: Reconcile
  name: system:coredns
rules:
- apiGroups:
  - ""
  resources:
  - endpoints
  - services
  - pods
  - namespaces
  verbs:
  - list
  - watch
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  annotations:
    rbac.authorization.kubernetes.io/autoupdate: "true"
  labels:
    kubernetes.io/bootstrapping: rbac-defaults
    addonmanager.kubernetes.io/mode: EnsureExists
  name: system:coredns
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:coredns
subjects:
- kind: ServiceAccount
  name: coredns
  namespace: kube-system
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: coredns
  namespace: kube-system
  labels:
    addonmanager.kubernetes.io/mode: EnsureExists
data:
  Corefile: |
    .: {
        errors
        health
        kubernetes cluster.local. in-addr.arpa ip6.arpa {
            pods insecure
            upstream
            fallthrough in-addr.arpa ip6.arpa
        }
        prometheus :
        proxy . /etc/resolv.conf
        cache
    }
---
apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  name: coredns
  namespace: kube-system
  labels:
    k8s-app: coredns
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
    kubernetes.io/name: "CoreDNS"
spec:
  replicas:
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxUnavailable:
  selector:
    matchLabels:
      k8s-app: coredns
  template:
    metadata:
      labels:
        k8s-app: coredns
    spec:
      serviceAccountName: coredns
      tolerations:
        - key: node-role.kubernetes.io/master
          effect: NoSchedule
        - key: "CriticalAddonsOnly"
          operator: "Exists"
      containers:
      - name: coredns
        image: coredns/coredns:1.0.
        imagePullPolicy: IfNotPresent
        resources:
          limits:
            memory: 170Mi
          requests:
            cpu: 100m
            memory: 70Mi
        args: [ "-conf", "/etc/coredns/Corefile" ]
        volumeMounts:
        - name: config-volume
          mountPath: /etc/coredns
        ports:
        - containerPort:
          name: dns
          protocol: UDP
        - containerPort:
          name: dns-tcp
          protocol: TCP
        livenessProbe:
          httpGet:
            path: /health
            port:
            scheme: HTTP
          initialDelaySeconds:
          timeoutSeconds:
          successThreshold:
          failureThreshold:
      dnsPolicy: Default
      volumes:
        - name: config-volume
          configMap:
            name: coredns
            items:
            - key: Corefile
              path: Corefile
---
apiVersion: v1
kind: Service
metadata:
  name: coredns
  namespace: kube-system
  labels:
    k8s-app: coredns
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
    kubernetes.io/name: "CoreDNS"
spec:
  selector:
    k8s-app: coredns
  clusterIP: 10.1.0.2
  ports:
  - name: dns
    port:
    protocol: UDP
  - name: dns-tcp
    port:
    protocol: TCP

② Create CoreDNS

kubectl create -f /srv/addons/coredns/coredns.yaml

③ Check the Pods

[root@k8s-node1 ~]# kubectl get pod -n kube-system

NAME READY STATUS RESTARTS AGE

coredns-77c989547b-h8x7n / Running 7m

coredns-77c989547b-xvkhz / Running 7m

2、Dashboard

①YAML文件

cat /srv/addons/dashboard/kubernetes-dashboard.yaml

# Copyright The Kubernetes Authors.

#

# Licensed under the Apache License, Version 2.0 (the "License");

# you may not use this file except in compliance with the License.

# You may obtain a copy of the License at

#

# http://www.apache.org/licenses/LICENSE-2.0

#

# Unless required by applicable law or agreed to in writing, software

# distributed under the License is distributed on an "AS IS" BASIS,

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

# See the License for the specific language governing permissions and

# limitations under the License. # Configuration to deploy release version of the Dashboard UI compatible with

# Kubernetes 1.8.

#

# Example usage: kubectl create -f <this_file> # ------------------- Dashboard Secret ------------------- # apiVersion: v1

kind: Secret

metadata:

labels:

k8s-app: kubernetes-dashboard

name: kubernetes-dashboard-certs

namespace: kube-system

type: Opaque ---

# ------------------- Dashboard Service Account ------------------- # apiVersion: v1

kind: ServiceAccount

metadata:

labels:

k8s-app: kubernetes-dashboard

name: kubernetes-dashboard

namespace: kube-system ---

# ------------------- Dashboard Role & Role Binding ------------------- # kind: Role

apiVersion: rbac.authorization.k8s.io/v1

metadata:

name: kubernetes-dashboard-minimal

namespace: kube-system

rules:

# Allow Dashboard to create 'kubernetes-dashboard-key-holder' secret.

- apiGroups: [""]

resources: ["secrets"]

verbs: ["create"]

# Allow Dashboard to create 'kubernetes-dashboard-settings' config map.

- apiGroups: [""]

resources: ["configmaps"]

verbs: ["create"]

# Allow Dashboard to get, update and delete Dashboard exclusive secrets.

- apiGroups: [""]

resources: ["secrets"]

resourceNames: ["kubernetes-dashboard-key-holder", "kubernetes-dashboard-certs"]

verbs: ["get", "update", "delete"]

# Allow Dashboard to get and update 'kubernetes-dashboard-settings' config map.

- apiGroups: [""]

resources: ["configmaps"]

resourceNames: ["kubernetes-dashboard-settings"]

verbs: ["get", "update"]

# Allow Dashboard to get metrics from heapster.

- apiGroups: [""]

resources: ["services"]

resourceNames: ["heapster"]

verbs: ["proxy"]

- apiGroups: [""]

resources: ["services/proxy"]

resourceNames: ["heapster", "http:heapster:", "https:heapster:"]

verbs: ["get"] ---

apiVersion: rbac.authorization.k8s.io/v1

kind: RoleBinding

metadata:

name: kubernetes-dashboard-minimal

namespace: kube-system

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: Role

name: kubernetes-dashboard-minimal

subjects:

- kind: ServiceAccount

name: kubernetes-dashboard

namespace: kube-system ---

# ------------------- Dashboard Deployment ------------------- # kind: Deployment

apiVersion: apps/v1

metadata:

labels:

k8s-app: kubernetes-dashboard

name: kubernetes-dashboard

namespace: kube-system

spec:

replicas:

revisionHistoryLimit:

selector:

matchLabels:

k8s-app: kubernetes-dashboard

template:

metadata:

labels:

k8s-app: kubernetes-dashboard

spec:

containers:

- name: kubernetes-dashboard

#image: k8s.gcr.io/kubernetes-dashboard-amd64:v1.8.3

image: mirrorgooglecontainers/kubernetes-dashboard-amd64:v1.8.3

ports:

- containerPort:

protocol: TCP

args:

- --auto-generate-certificates

# Uncomment the following line to manually specify Kubernetes API server Host

# If not specified, Dashboard will attempt to auto discover the API server and connect

# to it. Uncomment only if the default does not work.

# - --apiserver-host=http://my-address:port

volumeMounts:

- name: kubernetes-dashboard-certs

mountPath: /certs

# Create on-disk volume to store exec logs

- mountPath: /tmp

name: tmp-volume

livenessProbe:

httpGet:

scheme: HTTPS

path: /

port:

initialDelaySeconds:

timeoutSeconds:

volumes:

- name: kubernetes-dashboard-certs

secret:

secretName: kubernetes-dashboard-certs

- name: tmp-volume

emptyDir: {}

serviceAccountName: kubernetes-dashboard

# Comment the following tolerations if Dashboard must not be deployed on master

tolerations:

- key: node-role.kubernetes.io/master

effect: NoSchedule

---

# ------------------- Dashboard Service ------------------- #

kind: Service

apiVersion: v1

metadata:

labels:

k8s-app: kubernetes-dashboard

kubernetes.io/cluster-service: "true"

addonmanager.kubernetes.io/mode: Reconcile

name: kubernetes-dashboard

namespace: kube-system

spec:

type: NodePort

ports:

- port:

targetPort:

nodePort:

selector:

k8s-app: kubernetes-dashboard


② Create the Dashboard

kubectl create -f /srv/addons/dashboard/

③ Check the cluster info

kubectl cluster-info

Kubernetes master is running at https://192.168.56.11:6443

CoreDNS is running at https://192.168.56.11:6443/api/v1/namespaces/kube-system/services/coredns:dns/proxy

kubernetes-dashboard is running at https://192.168.56.11:6443/api/v1/namespaces/kube-system/services/https:kubernetes-dashboard:/proxy

To further debug and diagnose cluster problems, use 'kubectl cluster-info dump'.

④ Access the Dashboard

https://192.168.56.11:6443/api/v1/namespaces/kube-system/services/https:kubernetes-dashboard:/proxy

Username: admin; password: admin. Then select the Token login mode.

⑤ Get the Token
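Because the Service defined above has `type: NodePort`, the Dashboard should also be reachable directly on any node's IP, without going through the API server proxy. The sketch below reads the assigned port back from the Service instead of hard-coding it. `dashboard_nodeport_url` is a hypothetical helper name, and it assumes `kubectl` is already configured against this cluster.

```shell
#!/usr/bin/env bash
# dashboard_nodeport_url: hypothetical helper, not part of the original article.
# Prints https://<node-ip>:<nodePort> for the kubernetes-dashboard Service.
# Assumes kubectl can reach the cluster and the Service exists in kube-system.
dashboard_nodeport_url() {
  local node_ip="$1" port
  # Read the first port entry's nodePort from the Service object.
  port="$(kubectl -n kube-system get svc kubernetes-dashboard \
    -o jsonpath='{.spec.ports[0].nodePort}')" || return 1
  # An empty value means the Service has no NodePort assigned.
  [ -n "$port" ] || return 1
  echo "https://${node_ip}:${port}"
}

# Usage on a live cluster (not run here):
#   dashboard_nodeport_url 192.168.56.11
```

The certificate is auto-generated by the Dashboard (`--auto-generate-certificates`), so a browser warning is to be expected on this URL.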

kubectl -n kube-system describe secret $(kubectl -n kube-system get secret | grep admin-user | awk '{print $1}')
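The command above prints the whole secret description, and only the `token:` field has to be pasted into the login form. A minimal sketch for isolating it, assuming the `admin-user` secret exists in `kube-system` (its manifest is not shown in this section) and that `describe` keeps its usual `token:      <value>` layout. `extract_token` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# extract_token: hypothetical helper, not part of the original article.
# Reads `kubectl describe secret` output on stdin and prints only the
# value that follows the "token:" field name.
extract_token() {
  awk '$1 == "token:" { print $2 }'
}

# Usage on a live cluster (not run here):
#   kubectl -n kube-system describe secret \
#     "$(kubectl -n kube-system get secret | awk '/admin-user/ {print $1}')" \
#     | extract_token
```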

3. heapster

① YAML file

cat /srv/addons/heapster/heapster.yaml

---

apiVersion: v1

kind: ServiceAccount

metadata:

name: heapster

namespace: kube-system

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRoleBinding

metadata:

name: heapster

subjects:

- kind: ServiceAccount

name: heapster

namespace: kube-system

roleRef:

kind: ClusterRole

name: system:heapster

apiGroup: rbac.authorization.k8s.io

---

apiVersion: apps/v1beta1

kind: Deployment

metadata:

name: heapster

namespace: kube-system

spec:

replicas:

selector:

matchLabels:

k8s-app: heapster

template:

metadata:

labels:

task: monitoring

k8s-app: heapster

spec:

serviceAccountName: heapster

containers:

- name: heapster

#image: gcr.io/google_containers/heapster-amd64:v1.5.1

image: mirrorgooglecontainers/heapster-amd64:v1.5.1

imagePullPolicy: IfNotPresent

command:

- /heapster

- --source=kubernetes:https://kubernetes.default

- --sink=influxdb:http://monitoring-influxdb.kube-system.svc:8086

---

apiVersion: v1

kind: Service

metadata:

labels:

task: monitoring

# For use as a Cluster add-on (https://github.com/kubernetes/kubernetes/tree/master/cluster/addons)

# If you are NOT using this as an addon, you should comment out this line.

#kubernetes.io/cluster-service: 'true'

kubernetes.io/name: Heapster

name: heapster

namespace: kube-system

spec:

ports:

- port:

targetPort:

selector:

k8s-app: heapster

② Create heapster

kubectl create -f /srv/addons/heapster/

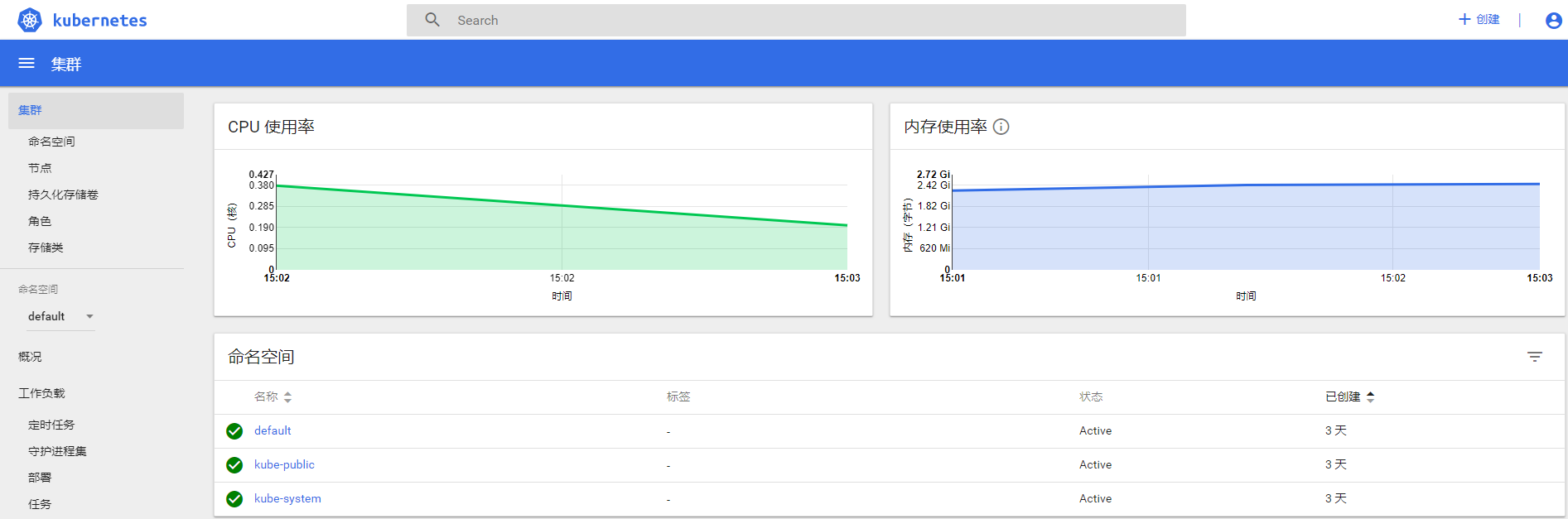

③ Log in to the Dashboard to check

4. ingress

① YAML file

cat /srv/addons/ingress/ingress-rbac.yml

apiVersion: v1

kind: ServiceAccount

metadata:

name: ingress

namespace: kube-system

---

kind: ClusterRoleBinding

apiVersion: rbac.authorization.k8s.io/v1beta1

metadata:

name: ingress

subjects:

- kind: ServiceAccount

name: ingress

namespace: kube-system

roleRef:

kind: ClusterRole

name: cluster-admin

apiGroup: rbac.authorization.k8s.io

② Deploy Traefik

kubectl create -f /srv/addons/ingress/

5. Helm

① Deploy the Helm client

cd /opt/kubernetes/tools

wget https://storage.googleapis.com/kubernetes-helm/helm-v2.9.1-linux-amd64.tar.gz

tar xf helm-v2.9.1-linux-amd64.tar.gz

mv linux-amd64/helm /usr/local/bin/

② Initialize Helm and deploy the Tiller server

helm init --upgrade -i registry.cn-hangzhou.aliyuncs.com/google_containers/tiller:v2.9.1 --stable-repo-url https://kubernetes.oss-cn-hangzhou.aliyuncs.com/charts

③ Install the socat command on all nodes

yum install -y socat
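Since the package is needed on every node, the install can be driven from one machine. The sketch below loops over the three hosts from the server plan; it assumes root SSH access with key-based login from the machine where it runs, which this article does not set up here. `install_socat_all` and the `DRY_RUN` switch are hypothetical names.

```shell
#!/usr/bin/env bash
# Node names come from the server plan at the top of this article.
NODES="k8s-node1 k8s-node2 k8s-node3"

# install_socat_all: hypothetical helper, not part of the original article.
# With DRY_RUN=1 (the default) it only prints the commands it would run;
# set DRY_RUN=0 to execute them over SSH (assumes key-based root login).
install_socat_all() {
  local node
  for node in $NODES; do
    if [ "${DRY_RUN:-1}" = "1" ]; then
      echo "ssh root@$node yum install -y socat"
    else
      ssh "root@$node" yum install -y socat
    fi
  done
}

# Usage:
#   install_socat_all              # preview the commands
#   DRY_RUN=0 install_socat_all    # actually install
```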

④ Verify that the installation succeeded

[root@k8s-node1 ~]# helm version

Client: &version.Version{SemVer:"v2.9.1", GitCommit:"20adb27c7c5868466912eebdf6664e7390ebe710", GitTreeState:"clean"}

Server: &version.Version{SemVer:"v2.9.1", GitCommit:"20adb27c7c5868466912eebdf6664e7390ebe710", GitTreeState:"clean"}
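The output above is healthy when both a `Client` and a `Server` line appear and report the same version. A small sketch that checks this from the text of `helm version`, assuming the Helm v2 output format shown above; `semver_of` and `versions_match` are hypothetical helper names.

```shell
#!/usr/bin/env bash
# semver_of: hypothetical helper, not part of the original article.
# Prints the SemVer of the given side ("Client" or "Server") from
# `helm version` output supplied on stdin.
semver_of() {
  sed -n "s/^$1: .*SemVer:\"\([^\"]*\)\".*/\1/p"
}

# versions_match: succeeds only when both sides report the same,
# non-empty SemVer. Reads `helm version` output on stdin.
versions_match() {
  local output client server
  output="$(cat)"
  client="$(printf '%s\n' "$output" | semver_of Client)"
  server="$(printf '%s\n' "$output" | semver_of Server)"
  [ -n "$client" ] && [ "$client" = "$server" ]
}

# Usage on a live cluster (not run here):
#   helm version | versions_match && echo "client and Tiller agree"
```

A missing `Server` line usually means Tiller is not reachable yet, in which case `versions_match` fails as well.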

⑤ Deploy the first application with Helm

Create the service account

kubectl create serviceaccount --namespace kube-system tiller

Create the cluster role binding

kubectl create clusterrolebinding tiller-cluster-rule --clusterrole=cluster-admin --serviceaccount=kube-system:tiller

Set the serviceAccount for the application (the tiller-deploy Deployment)

kubectl patch deploy --namespace kube-system tiller-deploy -p '{"spec":{"template":{"spec":{"serviceAccount":"tiller"}}}}'
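Before installing charts, it can be worth confirming that the patch took effect, since Tiller only gains the `cluster-admin` binding once its pods run under the `tiller` account. A minimal sketch, assuming `kubectl` is configured against this cluster; `tiller_uses_account` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# tiller_uses_account: hypothetical helper, not part of the original article.
# Succeeds when the tiller-deploy Deployment's pod template names the
# expected service account (default: tiller).
tiller_uses_account() {
  local expected="${1:-tiller}" actual
  actual="$(kubectl -n kube-system get deploy tiller-deploy \
    -o jsonpath='{.spec.template.spec.serviceAccount}')" || return 1
  [ "$actual" = "$expected" ]
}

# Usage on a live cluster (not run here):
#   tiller_uses_account && echo "tiller-deploy runs as serviceAccount tiller"
```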

Search for a Helm application

[root@k8s-node1 ~]# helm search jenkins

NAME CHART VERSION APP VERSION DESCRIPTION

stable/jenkins 0.13. 2.73 Open source continuous integration server. It s..

List the configured repos

[root@k8s-node1 ~]# helm repo list

NAME URL

stable https://kubernetes.oss-cn-hangzhou.aliyuncs.com/charts

local http://127.0.0.1:8879/charts

Install

helm install stable/jenkins
