Hadoop分布式集群部署(单namenode节点)

Hadoop分布式集群部署

系统系统环境:

OS: CentOS 6.8

内存:2G

CPU:1核

Software:jdk-8u151-linux-x64.rpm

hadoop-2.7.4.tar.gz

hadoop下载地址:

sudo wget http://mirrors.hust.edu.cn/apache/hadoop/common/hadoop-2.7.4/hadoop-2.7.4.tar.gz

主机列表信息:

|

主机名 |

IP 地址 |

安装软件 |

Hadoop role |

Node role |

|

hadoop-01 |

192.168.153.128 |

Jdk,hadoop |

NameNode |

namenode |

|

hadoop-02 |

192.168.153.129 |

Jdk,hadoop |

DataNode |

datenode |

|

hadoop-03 |

192.168.153.130 |

Jdk,hadoop |

DataNode |

datenode |

基础配置:

1.Hosts文件设置(三台主机的host文件需要保持一致)

[root@hadoop- ~]# cat /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

:: localhost localhost.localdomain localhost6 localhost6.localdomain6

#######################################

192.168.153.128 hadoop-

192.168.153.129 hadoop-

192.168.153.130 hadoop-

2.创建hadoop账户,使用该账户运行hadoop服务(三台主机都创建hadoop用户),创建hadoop用户,设置拥有sudo权限

[root@hadoop-01 ~]# useradd hadoop && echo hadoop | passwd --stdin hadoop

Changing password for user hadoop.

passwd: all authentication tokens updated successfully.

[root@hadoop-01 ~]# echo "hadoop ALL=(ALL) NOPASSWD:ALL" >> /etc/sudoers

3.生成SSH免秘钥认证文件(在三台主机都执行)

[root@hadoop-01 ~]# su - hadoop

[hadoop@hadoop-01 ~]$ ssh-keygen -t rsa

4.SSH密钥认证文件分发.

1.>将hadoop-01的公钥信息复制到另外两台机器上面

[hadoop@hadoop-01 ~]$ ssh-copy-id 192.168.153.128

[hadoop@hadoop-01 ~]$ ssh-copy-id 192.168.153.129

[hadoop@hadoop-01 ~]$ ssh-copy-id 192.168.153.130

测试ssh免密码登录

[hadoop@hadoop-01 ~]$ ssh 192.168.153.128

Last login: Wed Nov 15 22:37:10 2017 from hadoop-01

[hadoop@hadoop-02 ~]$ exit

logout

Connection to 192.168.153.128 closed.

[hadoop@hadoop-01 ~]$ ssh 192.168.153.129

Last login: Wed Nov 15 22:37:10 2017 from hadoop-01

[hadoop@hadoop-02 ~]$ exit

logout

Connection to 192.168.153.129 closed.

[hadoop@hadoop-01 ~]$ ssh 192.168.153.130

Last login: Thu Nov 16 07:46:35 2017 from hadoop-01

[hadoop@hadoop-03 ~]$ exit

logout

Connection to 192.168.153.130 closed.

2.>分发hadoop@hadoop-02的公钥到其他两台主机.

hadoop@hadoop-02 ~]$ ssh-copy-id 192.168.153.128

[hadoop@hadoop-02 ~]$ ssh-copy-id 192.168.153.129

[hadoop@hadoop-02 ~]$ ssh-copy-id 192.168.153.130

测试hadoop@hadoop-02 ssh免密码登录其他三台主机,包括自己本身的登录.

[hadoop@hadoop-02 ~]$ ssh 192.168.153.128

Last login: Sat Oct 28 19:37:16 2017 from hadoop-01

[hadoop@hadoop-01 ~]$ exit

logout

Connection to 192.168.153.128 closed.

[hadoop@hadoop-02 ~]$ ssh 192.168.153.129

Last login: Wed Nov 15 21:26:12 2017 from hadoop-01

[hadoop@hadoop-02 ~]$ exit

logout

Connection to 192.168.153.129 closed.

[hadoop@hadoop-02 ~]$ ssh 192.168.153.130

Last login: Thu Nov 16 06:35:35 2017 from hadoop-01

[hadoop@hadoop-03 ~]$

[hadoop@hadoop-03 ~]$ exit

logout

Connection to 192.168.153.130 closed.

3.>分发hadoop@hadoop-03的公钥到其他两台主机.

[hadoop@hadoop-03 ~]$ ssh-copy-id 192.168.153.128

[hadoop@hadoop-03 ~]$ ssh-copy-id 192.168.153.129

[hadoop@hadoop-03 ~]$ ssh-copy-id 192.168.153.130

测试hadoop@hadoop-03的免秘钥分发.

[hadoop@hadoop-03 ~]$ ssh 192.168.153.128

Last login: Sat Oct 28 19:43:39 2017 from hadoop-02

[hadoop@hadoop-01 ~]$ exit

logout

Connection to 192.168.153.128 closed.

[hadoop@hadoop-03 ~]$ ssh 192.168.153.129

Last login: Wed Nov 15 21:32:31 2017 from hadoop-02

[hadoop@hadoop-02 ~]$ exit

logout

Connection to 192.168.153.129 closed.

[hadoop@hadoop-03 ~]$ ssh 192.168.153.130

Last login: Thu Nov 16 06:41:53 2017 from hadoop-02

[hadoop@hadoop-03 ~]$ exit

logout

Connection to 192.168.153.130 closed.

[hadoop@hadoop-03 ~]$

这样三台机器都可以互相免密钥访问, ssh-copy-id会以追加的方式进行密钥的分发.

在root@hadoop-01上面上传下载软件,然后使用scp分发到02、03节点,速度更快.

5.安装jdk(三台主机jdk安装)

[root@hadoop-01 ~]# rpm -ivh jdk-8u151-linux-x64.rpm

Preparing... ########################################### [100%]

1:jdk1.8 ########################################### [100%]

[root@hadoop-01 ~]# export JAVA_HOME=/usr/java/jdk1.8.0_151/

[root@hadoop-01 ~]# export PATH=$JAVA_HOME/bin:$PATH

[root@hadoop-01 ~]# java -version

java version "1.8.0_151"

Java(TM) SE Runtime Environment (build 1.8.0_151-b12)

Java HotSpot(TM) 64-Bit Server VM (build 25.151-b12, mixed mode)

6、安装hadoop:

[hadoop@hadoop-01~]$ wget http://mirrors.hust.edu.cn/apache/hadoop/common/hadoop-2.7.4/hadoop-2.7.4-src.tar.gz

[hadoop@hadoop-01 ~]$ sudo tar zxvf hadoop-2.7.4.tar.gz -C /home/hadoop/ && cd /home/hadoop

[hadoop@hadoop-01 ~]$ sudo mv hadoop-2.7.4/ hadoop

[hadoop@hadoop-01 ~]$ sudo chown -R hadoop:hadoop hadoop/

#将hadoop的二进制目录添加到PATH变量,并设置HADOOP_HOME环境变量

[hadoop@hadoop-01 ~]$ export HADOOP_HOME=/home/hadoop/hadoop/

[hadoop@hadoop-01 ~]$ export PATH=$HADOOP_HOME/bin:$PATH

二.配置文件修改

修改hadoop的配置文件.

配置文件位置:/home/hadoop/hadoop/etc/hadoop

1.修改hadoop-env.sh配置文件,指定JAVA_HOME为JAVA的安装路径

export JAVA_HOME=${JAVA_HOME}

修改为:

export JAVA_HOME=/usr/java/jdk1.8.0_151/

2. 修改yarn-env.sh文件.

指定yran框架的java运行环境,该文件是yarn框架运行环境的配置文件,需要修改JAVA_HOME的位置

# export JAVA_HOME=/home/y/libexec/jdk1.6.0/

修改为:

export JAVA_HOME=/usr/java/jdk1.8.0_151/

3. 修改slaves文件.

指定DataNode数据存储服务器,将所有的DataNode的机器的主机名写入到此文件中,如下:

[hadoop@hadoop-01 hadoop]$ cat slaves

hadoop-02

hadoop-03

4.修改core-site.xml文件.在<configuration>……</configuration>中添加如下:

<configuration>

<property>

<name>fs.default.name</name>

<value>hdfs://hadoop-01:9000</value>

</property>

<property>

<name>io.file.buffer.size</name>

<value>131072</value>

</property>

<property>

<name>hadoop.tmp.dir</name>

<value>/home/hadoop/tmp</value>

<description>Abase for other temporary directories.</description>

</property>

</configuration>

5. 修改hdfs-site.xml配置文件,在<configuration>……</configuration>中添加如下:

<configuration>

<property>

<name>dfs.namenode.secondary.http-address</name>

<value>hadoop-:</value>

<description># 通过web界面来查看HDFS状态 </description>

</property>

<property>

<name>dfs.namenode.name.dir</name>

<value>/home/hadoop/dfs/name</value>

</property>

<property>

<name>dfs.datanode.data.dir</name>

<value>/home/hadoop/dfs/data</value>

</property>

<property>

<name>dfs.replication</name>

<value></value>

<description># 每个Block有2个备份</description>

</property>

<property>

<name>dfs.webhdfs.enabled</name>

<value>true</value>

</property>

</configuration>

6. 修改mapred-site.xml

指定Hadoop的MapReduce运行在YARN环境

[hadoop@hadoop- hadoop]$ cp mapred-site.xml.template mapred-site.xml

[hadoop@hadoop- hadoop]$ vim mapred-site.xml

<configuration>

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

<property>

<name>mapreduce.jobhistory.address</name>

<value>hadoop-:</value>

</property>

<property>

<name>mapreduce.jobhistory.webapp.address</name>

<value>hadoop-:</value>

</property>

</configuration>

7.> 修改yarn-site.xml

#该文件为yarn架构的相关配置

<configuration>

<!-- Site specific YARN configuration properties --> <property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

<property>

<name>yarn.nodemanager.aux-services.mapreduce.shuffle.class</name>

<value>org.apache.hadoop.mapred.ShuffleHandler</value>

</property>

<property>

<name>yarn.resourcemanager.address</name>

<value>hadoop-:</value>

</property>

<property>

<name>yarn.resourcemanager.scheduler.address</name>

<value>hadoop-:</value>

</property>

<property>

<name>yarn.resourcemanager.resource-tracker.address</name>

<value>hadoop-:</value>

</property>

<property>

<name>yarn.resourcemanager.admin.address</name>

<value>hadoop-:</value>

</property>

<property>

<name>yarn.resourcemanager.webapp.address</name>

<value>hadoop-:</value>

</property>

<property>

<name>yarn.nodemanager.resource.memory-mb</name>

<value></value>

</property>

<property>

<name>yarn.nodemanager.resource.cpu-vcores</name>

<value></value>

</property>

</configuration>

三.节点分发

复制hadoop到其他节点

[hadoop@hadoop-01 ~]$ scp -r /home/hadoop/hadoop/ 192.168.153.129:/home/hadoop/

[hadoop@hadoop-01 ~]$ scp -r /home/hadoop/hadoop/ 192.168.153.130:/home/hadoop/

四、初始化及服务启停.

1.在hadoop-01使用hadoop用户初始化NameNode.

[hadoop@hadoop-01 ~]$ /home/hadoop/hadoop/bin/hdfs namenode -format

17/10/28 22:54:33 INFO namenode.NameNode: SHUTDOWN_MSG:

/************************************************************

SHUTDOWN_MSG: Shutting down NameNode at hadoop-01/192.168.153.128

************************************************************/

[hadoop@hadoop-01 ~]$ echo $?

0

说明执行成功.

[root@localhost ~]# tree /home/hadoop/dfs

/home/hadoop/dfs

├── data

└── name

└── current

├── fsimage_0000000000000000000

├── fsimage_0000000000000000000.md5

├── seen_txid

└── VERSION

2.启停hadoop服务:

/home/hadoop/hadoop/sbin/start-dfs.sh

/home/hadoop/hadoop/sbin/stop-dfs.sh

启动服务:

[hadoop@hadoop-01 ~]$ /home/hadoop/hadoop/sbin/start-dfs.sh

Starting namenodes on [hadoop-01]

hadoop-01: starting namenode, logging to /home/hadoop/hadoop/logs/hadoop-hadoop-namenode-hadoop-01.out

hadoop-03: starting datanode, logging to /home/hadoop/hadoop/logs/hadoop-hadoop-datanode-hadoop-03.out

hadoop-02: starting datanode, logging to /home/hadoop/hadoop/logs/hadoop-hadoop-datanode-hadoop-02.out

Starting secondary namenodes [hadoop-01]

hadoop-01: starting secondarynamenode, logging to /home/hadoop/hadoop/logs/hadoop-hadoop-secondarynamenode-hadoop-01.out

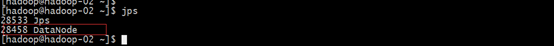

3.查看服务状态.

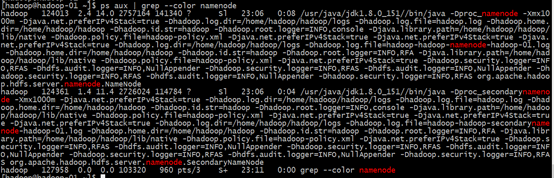

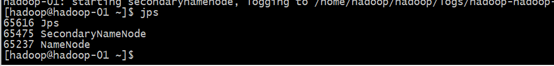

namenode节点(hadoop-01)上面查看进程

[hadoop@hadoop-01 ~]$ps aux | grep --color namenode

或者使用jps查看是否有namenode进程

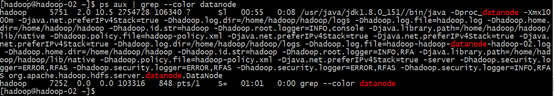

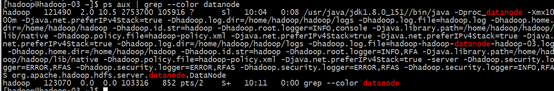

#DataNode上面查看进程(在hadoop-02、hadoop-03)上查看

#ps aux | grep --color datanode

或者jps命令查看:

4.启动yarn分布式计算框架:

在 hadoop-01上启动ResourceManager

[hadoop@hadoop-01 ~]$ /home/hadoop/hadoop/sbin/start-yarn.sh starting yarn daemons

starting yarn daemons

starting resourcemanager, logging to /home/hadoop/hadoop/logs/yarn-hadoop-resourcemanager-hadoop-01.out

hadoop-02: starting nodemanager, logging to /home/hadoop/hadoop/logs/yarn-hadoop-nodemanager-hadoop-02.out

hadoop-03: starting nodemanager, logging to /home/hadoop/hadoop/logs/yarn-hadoop-nodemanager-hadoop-03.out

[hadoop@hadoop-01 ~]$

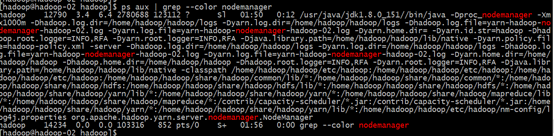

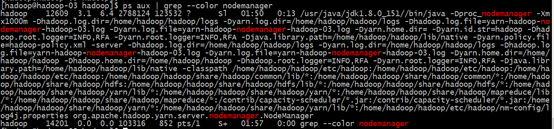

#NameNode节点上查看进程

#DataNode节点上查看进程nodemanager进程.

[hadoop@hadoop-02 ~]$ ps aux |grep --color nodemanager

5.启动jobhistory服务,查看mapreduce状态

#在NameNode节点上

[hadoop@hadoop-01 ~]$ /home/hadoop/hadoop/sbin/mr-jobhistory-daemon.sh start historyserver

starting historyserver, logging to /home/hadoop/hadoop/logs/mapred-hadoop-historyserver-hadoop-01.out

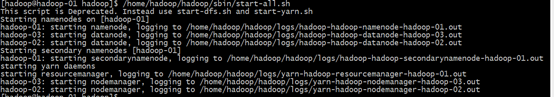

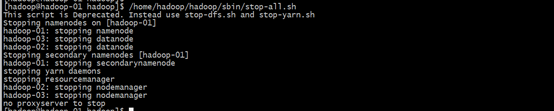

至此单节点namenode的集群环境部署完成,单节点的启停操作都是在nameserve(hadoop-01)端操作的

注:start-dfs.sh和start-yarn.sh这两个脚本可用start-all.sh代替

/home/hadoop/hadoop/sbin/stop-all.sh

/home/hadoop/hadoop/sbin/start-all.sh

启动:

[hadoop@hadoop-01 hadoop]$ /home/hadoop/hadoop/sbin/start-all.sh

[hadoop@hadoop-01 hadoop]$ /home/hadoop/hadoop/sbin/stop-all.sh

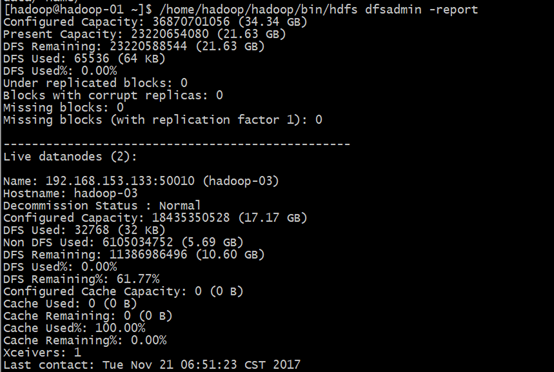

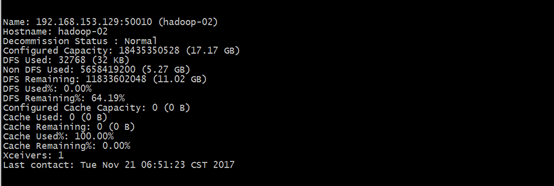

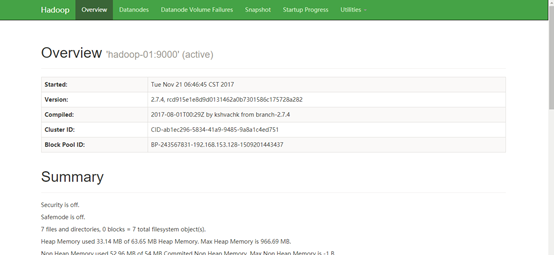

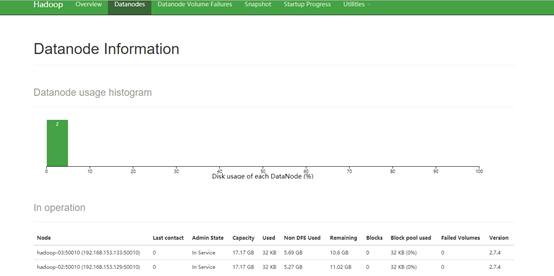

6.查看HDFS分布式文件系统状态

[hadoop@hadoop-01 ~]$ /home/hadoop/hadoop/bin/hdfs dfsadmin -report

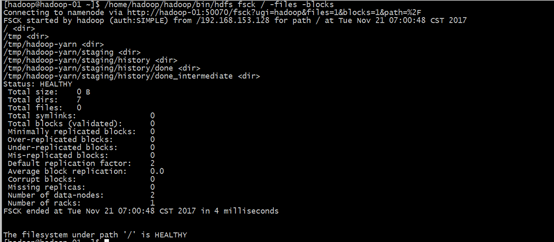

#查看文件块组成,一个文件由那些块组成

[hadoop@hadoop-01 ~]$ /home/hadoop/hadoop/bin/hdfs fsck / -files -blocks

web页面查看hadoop集群状态

查看HDFS状态:http://192.168.153.128:50070

查看Hadoop集群状态: http://192.168.153.128:8088/cluster

登录web也成功,至此环境部署完成.

Hadoop分布式集群部署(单namenode节点)的更多相关文章

- Hadoop教程(五)Hadoop分布式集群部署安装

Hadoop教程(五)Hadoop分布式集群部署安装 1 Hadoop分布式集群部署安装 在hadoop2.0中通常由两个NameNode组成,一个处于active状态,还有一个处于standby状态 ...

- hadoop分布式集群部署①

Linux系统的安装和配置.(在VM虚拟机上) 一:安装虚拟机VMware Workstation 14 Pro 以上,虚拟机软件安装完成. 二:创建虚拟机. 三:安装CentOS系统 (1)上面步 ...

- 大数据系列之Hadoop分布式集群部署

本节目的:搭建Hadoop分布式集群环境 环境准备 LZ用OS X系统 ,安装两台Linux虚拟机,Linux系统用的是CentOS6.5:Master Ip:10.211.55.3 ,Slave ...

- Hadoop实战:Hadoop分布式集群部署(一)

一.系统参数优化配置 1.1 系统内核参数优化配置 修改文件/etc/sysctl.conf,使用sysctl -p命令即时生效. 1 2 3 4 5 6 7 8 9 10 11 12 13 14 ...

- Hadoop分布式集群部署

环境准备 IP HOSTNAME SYSTEM 192.168.131.129 hadoop-master CentOS 7.6 192.168.131.135 hadoop-slave1 CentO ...

- Hadoop(HA)分布式集群部署

Hadoop(HA)分布式集群部署和单节点namenode部署其实一样,只是配置文件的不同罢了. 这篇就讲解hadoop双namenode的部署,实现高可用. 系统环境: OS: CentOS 6.8 ...

- 一脸懵逼学习Hadoop分布式集群HA模式部署(七台机器跑集群)

1)集群规划:主机名 IP 安装的软件 运行的进程master 192.168.199.130 jdk.hadoop ...

- 基于Hadoop分布式集群YARN模式下的TensorFlowOnSpark平台搭建

1. 介绍 在过去几年中,神经网络已经有了很壮观的进展,现在他们几乎已经是图像识别和自动翻译领域中最强者[1].为了从海量数据中获得洞察力,需要部署分布式深度学习.现有的DL框架通常需要为深度学习设置 ...

- 使用Docker在本地搭建Hadoop分布式集群

学习Hadoop集群环境搭建是Hadoop入门必经之路.搭建分布式集群通常有两个办法: 要么找多台机器来部署(常常找不到机器) 或者在本地开多个虚拟机(开销很大,对宿主机器性能要求高,光是安装多个虚拟 ...

随机推荐

- 使用HttpClient配置代理服务器模拟浏览器发送请求调用接口测试

在调用公司的某个接口时,直接通过浏览器配置代理服务器可以请求到如下数据: 请求url地址:http://wwwnei.xuebusi.com/rd-interface/getsales.jsp?cid ...

- s3c2440——按键中断

s3c2440的异常向量表: IRQ中断地址是0x18.所以,根据之前的异常处理方式,我们编写启动文件: 为什么需要lr减4,可以参考这篇文章:http://blog.csdn.net/zzsfqiu ...

- myeclipse 10 +Axis2 1.62 开发WebService手记

由于临时需求,不得不用java来开发一个webservice,之前对java webservice一片空白.临时查资料,耗费近一天,终于搞定,效率是慢了点.呵呵. 首先 配置Tomcat 中WebSe ...

- Go语言学习(四)经常使用类型介绍

1.布尔类型 var v1 bool v1 = true; v2 := (1==2) // v2也会被推导为bool类型 2.整型 类 型 长度(字节) 值 范 围 int8 1 128 ~ 12 ...

- facebook工具xhprof的安装与使用-分析php执行性能

下载源码包的网址 http://pecl.php.net/package/xhprof

- GitHub限制上传单个大于100M的大文件

工作中遇到这个问题,一些美术资源..unitypackage文件大于100M,Push到GitHub时被拒绝.意思是Push到GitHub的每个文件的大小都要求小于100M. 搜了一下,很多解决办法只 ...

- git diff 的用法

git diff 对比两个文件修改的记录 不带参数的调用 git diff filename 这种是比较 工作区和暂存区 比较暂存区与最新本地版本库 git diff --cached filenam ...

- 【整理】fiddler不能监听 localhost和 127.0.0.1的问题

localhost/127.0.0.1的请求不会通过任何代理发送,fiddler也就无法截获. 解决方案 1,用 http://localhost. (locahost紧跟一个点号)2,用 http: ...

- UK 更新惊魂记

本文前提是.由于更easy安装各种webserver.数据库,redis缓存.mq等软件,笔者使用Ubuntu Kylin作为开发系统已经好长时间了. 而今天(2015-07-23)下午2时许,系统提 ...

- nginx 4层tcp代理获取真实ip

举个例子,Nginx 中的代理配置假如是这样配置的: location / { proxy_http_version 1.1; proxy_set_header X-Real-IP $remote_a ...