Why Apache Beam? A data Artisans perspective

https://cloud.google.com/dataflow/blog/dataflow-beam-and-spark-comparison

https://github.com/apache/incubator-beam

https://www.oreilly.com/ideas/the-world-beyond-batch-streaming-101

https://www.oreilly.com/ideas/the-world-beyond-batch-streaming-102

http://data-artisans.com/why-apache-beam/

As the Dataflow SDK and the Runners were moving to Apache Incubator as Apache Beam, we were asked by Google to bring the Flink runner into the codebase of Beam, and become committers and PMC members in the new project. We decided to go full st(r)eam ahead with this opportunity as we believe that

(1) the Beam model is the future reference programming model for writing data applications in both stream and batch, and

(2) Flink is the definitive platform to execute these data applications. As Beam is now taking shape, Flink is currently the only practical execution engine for Beam programs outside Google’s Cloud.

Beam包括,SDK部分和runner部分,而在Google外,当前Flink是一个可以作为runner的比较好的选择

Flink and Beam are completely aligned in their concepts, which makes the translation of Beam programs to Flink jobs both straightforward and very efficient.

With full support for concepts such as event time, watermarks, and triggers, and with the new features we are contributing to Flink, we believe that the superiority of the Flink runner will stick for the foreseeable future.

Flink和beam的设计和概念都很相似,所以从Beam programs翻译到Flink jobs 是非常直接的。

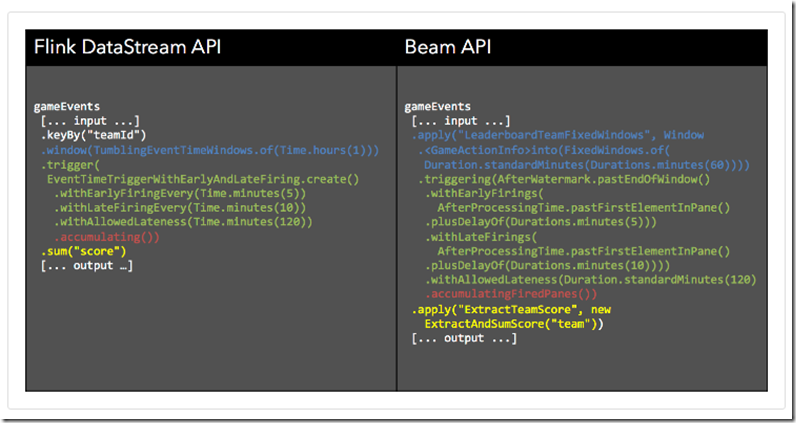

One question that remains is what is the relationship between Flink’s own native API (DataStream), and the Beam API?

Will both of these continue to be supported, and is it confusing for developers to have two different APIs that in the end generate Flink jobs?

Our committers at data Artisans will continue to fully support both the Flink DataStream API (which is, as of Flink 1.0, stable and backwards compatible), as well as the Beam API as it evolves for Beam programs that run on Flink.

The differences between the two APIs are largely syntactical (and a matter of taste), and, we are working together with Google towards unifying the APIs, with the end goal of making the Beam and Flink APIs source compatible.

We believe that the two communities can learn from each other, and we encourage users to use either of the two APIs to implement their Flink jobs for stream data processing.

With the native Flink DataStream API you get an already mature and backwards-compatible API, built-in libraries (e.g., CEP and upcoming SQL), mature tooling and connectors, key-value state (with the ability to query that state in the future), and an API which fully utilizes all the features of Flink’s powerful engine.

With the Beam API, you get the option of portability down the line as more Beam runners mature.

Flink API和Beam API的区别是什么?

区别主要是语法上的,并且会致力于unify两种接口,但两种接口会都有其存在的价值

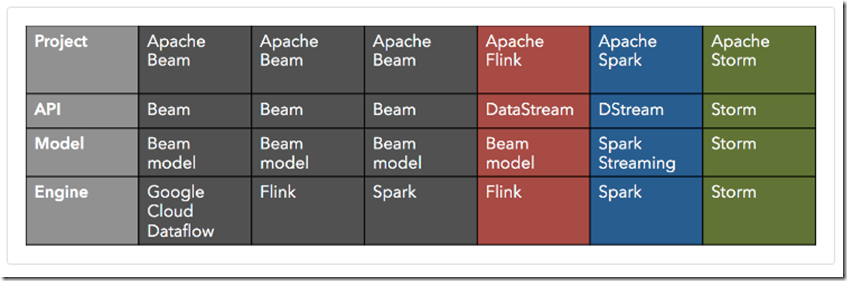

API, model, and engine

To clarify our points above, we would like to explain what we mean by choice of API, choice of programming model, and choice of execution engine.

Currently, Beam has three available runners: the Google Cloud Dataflow proprietary runner by Google, as well as the Flink and Spark runners, included in the open source Apache Beam project. Let us look at this ecosystem, and add Flink and Spark themselves with their native APIs, as well as Storm:

可以看出Beam的好处,关键在于,API和Model的统一,虽然Engine可以是不一样的

Even more, Google and data Artisans are working together to make the two APIs semantically equivalent, ironing out any minor inconsistencies.

This means that users of either API can switch with relatively low effort.

Our long term goal is to make the Beam and Flink DataStream APIs source-compatible, so that programs written in one can natively run on the other with no code changes.

If you choose to invest in the Beam programming model now, you have two options:

- Use the Flink DataStream API in Java and Scala

- Use the Beam API directly in Java (and soon Python) with the Flink runner

长期看, Beam和Flink的API会兼容,所以如果想用Beam编程模型,可以有两种选择,直接用Flink DataStream API或用Beam API加上Flink Runner

We recommend option 1 to users that want to get started immediately, using an already mature and backwards-compatible API, access to libraries (e.g., the existing CEP library and the upcoming SQL functionality), mature tooling and connectors (e.g., to Kafka), as well as an API that fully and natively utilizes all the existing and upcoming features of the Flink engine. In addition, we recommend the Flink native API for use cases that use Flink’s key-value state abstraction, and in the future Flink’s facilities for querying that state.

We recommend option 2 to users that want to keep the option of engine portability (as other Beam runners progress).

选择BeamAPI的唯一好处是,可以做到各个runner的兼容;而显然,Flink的API更丰富和成熟

In a recent blog post, Google compared Beam and Spark Streaming from a programming model perspective. They took a mobile gaming scenario, and implemented several use cases in Beam and Spark Streaming, focusing their analysis on how well are the following concerns separated in the code:

- What results are calculated? Sums, joins, histograms, machine learning models?

- Where in event time are results calculated? Does the time each event originally occurred affect results? Are results aggregated in fixed windows, sessions, or a single global window?

- When in processing time are results materialized? Does the time each event is observed within the system affect results? When are results emitted? Speculatively, as data evolve? When data arrive late and results must be revised? Some combination of these?

- How do refinements of results relate? If additional data arrive and results change, are they independent and distinct, do they build upon one another, etc.?

用一个实际例子比较一下,Flink和Beam API的不同,不同颜色部分解决不同的问题,一共4个问题

Conclusion

We firmly believe that the Beam model is the correct programming model for streaming and batch data processing.

We encourage users to adopt this model for their future data applications, embodied in either the Beam API itself or the Flink DataStream API.

Further, we believe that Flink, with its current features and roadmap, is currently the most advanced open source stream processor, and at the same time the only practical solution for deploying Beam programs in production on on-premise or non-GCP clusters. We are looking forward to continue pushing the envelope in stream processing and enabling enterprises to use stream processing technology for their data applications.

Why Apache Beam? A data Artisans perspective的更多相关文章

- beam 的异常处理 Error Handling Elements in Apache Beam Pipelines

Error Handling Elements in Apache Beam Pipelines Vallery LanceyFollow Mar 15 I have noticed a defici ...

- Apache Beam编程指南

术语 Apache Beam:谷歌开源的统一批处理和流处理的编程模型和SDK. Beam: Apache Beam开源工程的简写 Beam SDK: Beam开发工具包 **Beam Java SDK ...

- Apache Beam是什么?

Apache Beam 的前世今生 1月10日,Apache软件基金会宣布,Apache Beam成功孵化,成为该基金会的一个新的顶级项目,基于Apache V2许可证开源. 2003年,谷歌发布了著 ...

- Beam编程系列之Apache Beam WordCount Examples(MinimalWordCount example、WordCount example、Debugging WordCount example、WindowedWordCount example)(官网的推荐步骤)

不多说,直接上干货! https://beam.apache.org/get-started/wordcount-example/ 来自官网的: The WordCount examples demo ...

- Apache Beam的目标

不多说,直接上干货! Apache Beam的目标 统一(UNIFIED) 基于单一的编程模型,能够实现批处理(Batch processing).流处理(Streaming Processing), ...

- Apache Beam实战指南 | 大数据管道(pipeline)设计及实践

Apache Beam实战指南 | 大数据管道(pipeline)设计及实践 mp.weixin.qq.com 策划 & 审校 | Natalie作者 | 张海涛编辑 | LindaAI 前 ...

- Apache Beam 剖析

1.概述 在大数据的浪潮之下,技术的更新迭代十分频繁.受技术开源的影响,大数据开发者提供了十分丰富的工具.但也因为如此,增加了开发者选择合适工具的难度.在大数据处理一些问题的时候,往往使用的技术是多样 ...

- Apache Beam—透视Google统一流式计算的野心

Google是最早实践大数据的公司,目前大数据繁荣的生态很大一部分都要归功于Google最早的几篇论文,这几篇论文早就了以Hadoop为开端的整个开源大数据生态,但是很可惜的是Google内部的这些系 ...

- 初探Apache Beam

文章作者:luxianghao 文章来源:http://www.cnblogs.com/luxianghao/p/9010748.html 转载请注明,谢谢合作. 免责声明:文章内容仅代表个人观点, ...

随机推荐

- 2-SAT问题及其算法

原文地址:http://www.cppblog.com/MatoNo1/archive/2011/07/13/150766.aspx [2-SAT问题]现有一个由N个布尔值组成的序列A,给出一些限制关 ...

- SQL Server--用户自定义函数

除了使用系统提供的函数外,用户还可以根据需要自定义函数.用户自定义函数是 SQL Server 2000 新增的数据库对象,是 SQL Server 的一大改进.与编程语言中的函数类似,Microso ...

- ajax请求node.js接口时出现 No 'Access-Control-Allow-Origin' header is present on the requested resource错误

ajax请求node.js接口出现了如下的错误: XMLHttpRequest cannot load http://xxx.xxx.xx.xx:8888/getTem?cityId=110105&a ...

- sql按天分组

sql按天分组,这都不会 晕!!!!!!! ) ;

- 枚举GC Roots的实现

枚举根节点 从可达性分析中从GC Roots节点找引用链这个操作为例,可作为GC Roots的节点主要在全局性的引用(例如常量或类静态属性)与执行上下文(例如栈帧中的本地变量表)中,现在很多应用仅仅方 ...

- 简单几何(点与线段的位置) POJ 2318 TOYS && POJ 2398 Toy Storage

题目传送门 题意:POJ 2318 有一个长方形,用线段划分若干区域,给若干个点,问每个区域点的分布情况 分析:点和线段的位置判断可以用叉积判断.给的线段是排好序的,但是点是无序的,所以可以用二分优化 ...

- SCU3109 Space flight(最大权闭合子图)

嗯,裸的最大权闭合子图. #include<cstdio> #include<cstring> #include<queue> #include<algori ...

- SplendidCRM 中文语言包改正版

由于官方的中文语言包太多地方词不达意,可能是文化差异吧,如“删除”却写成“德尔”.本人修改了几十个地方,还修改了不能清除已有数据的Bug.相关文件在下载包中. http://files.cnblogs ...

- emacs auto-complete

安装的是autocomplete http://cx4a.org/software/auto-complete/ 是bz2格式压缩的 下载后 在终端输入命令 tar -xjvf auto-compl ...

- [leetCode][016] Add Two Numbers

[题目]: You are given two linked lists representing two non-negative numbers. The digits are stored in ...