Kubernetes Binary Deployment (Part 1): Single-Node Deployment (Master and Node on the Same Machine)

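0. Preface

- Because of the COVID-19 outbreak, my start date at a new job has been postponed for now, so I am working and studying from home

- I tried building the binaries from source and deploying a k8s environment on a single node (Master and Node on the same machine); the steps and scripts are collected below

- Reference article: Kubernetes Binary Deployment (Part 1): Single-Node Deployment

1. Concepts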

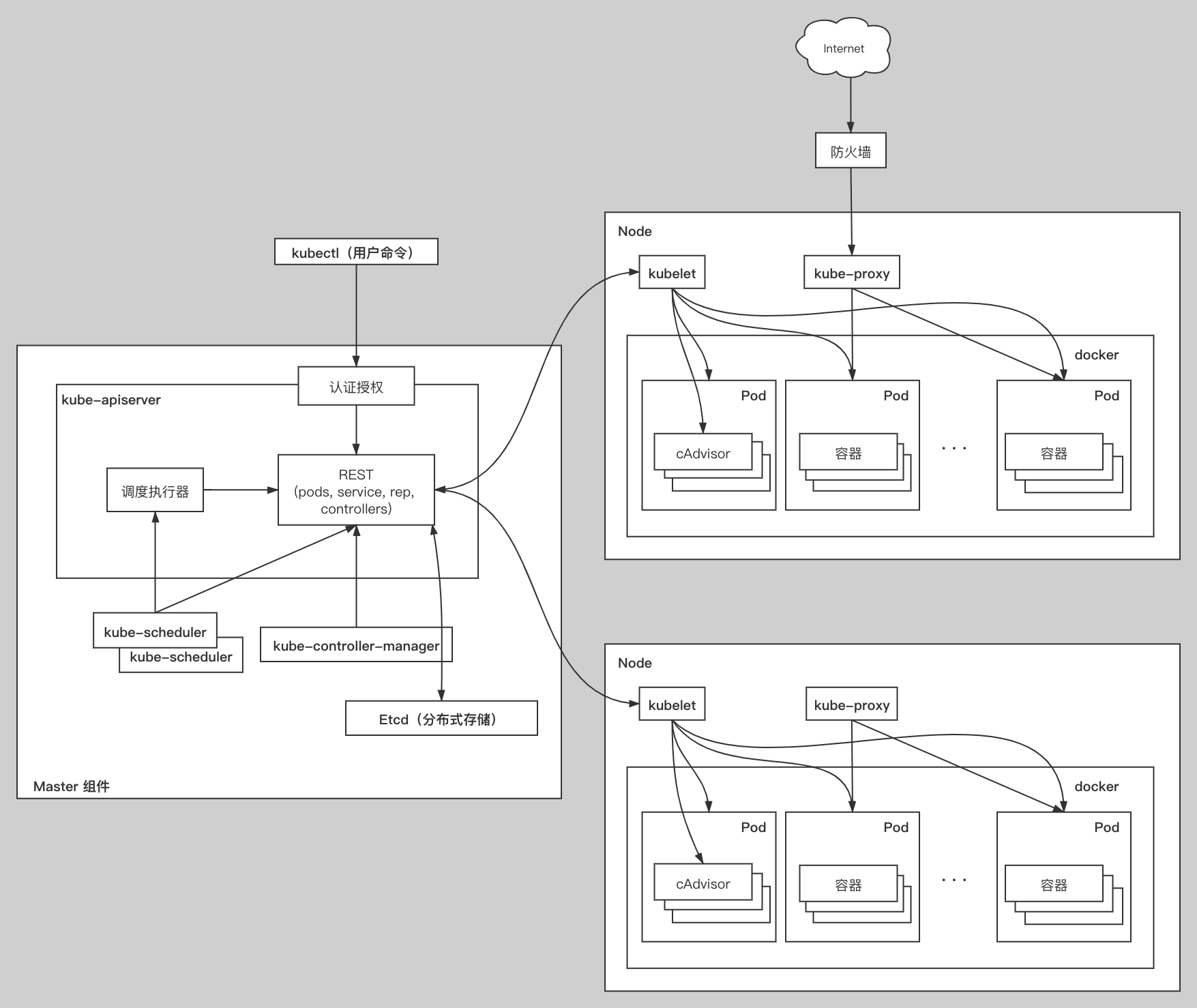

1.1 Basic architecture

1.2 Core components

1.2.1 Master

1.2.1.1 kube-apiserver

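- The single entry point to the cluster and the coordinator of all components

- Exposes its services as a RESTful API

- All create, read, update, delete, and watch operations on resource objects go through kube-apiserver

- It then persists the resulting state to etcd, the distributed store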

1.2.1.2 kube-controller-manager

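- Handles routine background tasks in the cluster

- Each resource has a corresponding controller, and kube-controller-manager is responsible for managing these controllers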

1.2.1.3 kube-scheduler

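- Selects a Node for each newly created Pod according to the scheduling algorithm

- Can be deployed anywhere: on the same node as the other components or on a different node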

1.2.1.4 etcd

- A distributed key-value store

- Stores cluster state data, such as Pod and Service objects

1.2.2 Node

1.2.2.1 kubelet

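- kubelet is the Master's agent on each Node

- Manages the lifecycle of containers running on the local machine: creating containers, mounting volumes for Pods, downloading Secrets, reporting container and node status, and so on

- kubelet turns each Pod into a set of containers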

1.2.2.2 kube-proxy

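- Implements the Pod network proxy on each Node, maintaining network rules and layer-4 load balancing

- For traffic leaving the host, it can discover the remote server based on the request address

- It then routes the traffic correctly, in some cases using round-robin scheduling to spread requests across multiple instances in the cluster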

1.2.2.3 docker

- The container engine

2. Deployment steps

2.1 Building from source

- Install the Go toolchain

- Kubernetes v1.18 requires Go 1.13

$ wget https://dl.google.com/go/go1.13.8.linux-amd64.tar.gz

$ tar -zxvf go1.13.8.linux-amd64.tar.gz -C /usr/local/

- Add the following environment variables to ~/.bashrc or ~/.zshrc

# GOROOT

export GOROOT=/usr/local/go

# GOPATH

export GOPATH=$HOME/go

# GOROOT bin

export PATH=$PATH:$GOROOT/bin

# GOPATH bin

export PATH=$PATH:$GOPATH/bin

- Reload the environment variables

$ source ~/.bashrc
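Because the build depends on a specific Go release, it can help to confirm what is on PATH before compiling. The following is only a sketch: the helper name is_go_1_13 is my own and is not part of the deployment scripts. It inspects the text printed by go version.

```shell
# Hypothetical helper, not part of the original scripts.
# Succeeds when the given `go version` output reports Go 1.13 or 1.13.x.
is_go_1_13() {
  case "$1" in
    *" go1.13 "*|*" go1.13."*) return 0 ;;
    *) return 1 ;;
  esac
}

# Usage (assumes go is already on PATH):
#   is_go_1_13 "$(go version)" || echo "expected Go 1.13" >&2
```

The check works on the version string rather than calling go directly, so it can also be run against output captured on another machine.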

- Download the latest Kubernetes source from GitHub

$ git clone https://github.com/kubernetes/kubernetes.git

- Build the binaries (run make from the root of the cloned repository)

$ make KUBE_BUILD_PLATFORMS=linux/amd64

+++ [ ::] Building go targets for linux/amd64:

./vendor/k8s.io/code-generator/cmd/deepcopy-gen

+++ [ ::] Building go targets for linux/amd64:

./vendor/k8s.io/code-generator/cmd/defaulter-gen

+++ [ ::] Building go targets for linux/amd64:

./vendor/k8s.io/code-generator/cmd/conversion-gen

+++ [ ::] Building go targets for linux/amd64:

./vendor/k8s.io/kube-openapi/cmd/openapi-gen

+++ [ ::] Building go targets for linux/amd64:

./vendor/github.com/go-bindata/go-bindata/go-bindata

+++ [ ::] Building go targets for linux/amd64:

cmd/kube-proxy

cmd/kube-apiserver

cmd/kube-controller-manager

cmd/kubelet

cmd/kubeadm

cmd/kube-scheduler

vendor/k8s.io/apiextensions-apiserver

cluster/gce/gci/mounter

cmd/kubectl

cmd/gendocs

cmd/genkubedocs

cmd/genman

cmd/genyaml

cmd/genswaggertypedocs

cmd/linkcheck

vendor/github.com/onsi/ginkgo/ginkgo

test/e2e/e2e.test

cluster/images/conformance/go-runner

cmd/kubemark

vendor/github.com/onsi/ginkgo/ginkgo

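- KUBE_BUILD_PLATFORMS sets the target platform of the generated binaries, for example darwin/amd64, linux/amd64, or windows/amd64

- Running make cross builds binaries for all platforms

- Cloud servers tend to have limited resources, so it is advisable to build locally and upload the result to the server

- The build output goes to the _output directory; the core binaries are in _output/local/bin/linux/amd64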

$ pwd

/root/Coding/kubernetes/_output/local/bin/linux/amd64

$ ls

apiextensions-apiserver genman go-runner kube-scheduler kubemark

e2e.test genswaggertypedocs kube-apiserver kubeadm linkcheck

gendocs genyaml kube-controller-manager kubectl mounter

genkubedocs ginkgo kube-proxy kubelet

- Of these, kube-apiserver, kube-scheduler, kube-controller-manager, kubectl, kube-proxy, and kubelet are the binaries needed for the installation
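Before uploading the build output to the server, a quick check that all six binaries are present can save a failed step later. This is a minimal sketch; the function name missing_binaries is an assumption of mine, and the directory is whatever path holds the build output.

```shell
# Hypothetical helper, not part of the original scripts.
# Prints the name of every required binary that is missing (or not
# executable) in the directory given as $1, one name per line.
missing_binaries() {
  dir="$1"
  for name in kube-apiserver kube-scheduler kube-controller-manager \
              kubectl kube-proxy kubelet; do
    [ -x "$dir/$name" ] || echo "$name"
  done
}

# Usage:
#   missing_binaries _output/local/bin/linux/amd64
```

An empty result means all six binaries are in place.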

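2.2 Installing docker

- docker was already installed on the cloud server, so it did not need to be installed for this deployment

- For installation details, see the official documentation

2.3 Downloading the installation scripts

- All scripts used in the following steps have been uploaded to a GitHub repository; feel free to download them

- Create the working directory k8s and the script directory k8s/scripts, then copy all scripts from the repository into the script directory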

$ git clone https://github.com/wangao1236/k8s_single_deploy.git

$ chmod +x k8s_single_deploy/scripts/*.sh

$ mkdir -p k8s/scripts

$ cp k8s_single_deploy/scripts/* k8s/scripts

2.4 Installing cfssl

- Install cfssl by running the k8s/scripts/cfssl.sh script, or by running the following commands:

$ curl -L https://pkg.cfssl.org/R1.2/cfssl_linux-amd64 -o /usr/local/bin/cfssl

$ curl -L https://pkg.cfssl.org/R1.2/cfssljson_linux-amd64 -o /usr/local/bin/cfssljson

$ curl -L https://pkg.cfssl.org/R1.2/cfssl-certinfo_linux-amd64 -o /usr/local/bin/cfssl-certinfo

$ chmod +x /usr/local/bin/cfssl /usr/local/bin/cfssljson /usr/local/bin/cfssl-certinfo

- The k8s/scripts/cfssl.sh script reads as follows:

$ cat k8s_single_deploy/scripts/cfssl.sh

curl -L https://pkg.cfssl.org/R1.2/cfssl_linux-amd64 -o /usr/local/bin/cfssl

curl -L https://pkg.cfssl.org/R1.2/cfssljson_linux-amd64 -o /usr/local/bin/cfssljson

curl -L https://pkg.cfssl.org/R1.2/cfssl-certinfo_linux-amd64 -o /usr/local/bin/cfssl-certinfo

chmod +x /usr/local/bin/cfssl /usr/local/bin/cfssljson /usr/local/bin/cfssl-certinfo

2.5 Installing etcd

- Create the target directories

$ mkdir -p /opt/etcd/{cfg,bin,ssl}

- Download the latest etcd release package

$ wget https://github.com/etcd-io/etcd/releases/download/v3.3.18/etcd-v3.3.18-linux-amd64.tar.gz

$ tar -zxvf etcd-v3.3.18-linux-amd64.tar.gz

$ cp etcd-v3.3.18-linux-amd64/etcdctl etcd-v3.3.18-linux-amd64/etcd /opt/etcd/bin

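- Create the directory k8s/etcd-cert. k8s is the root directory for deployment files and scripts; etcd-cert temporarily holds the certificates for etcd HTTPS

$ mkdir -p k8s/etcd-cert

- Copy the etcd-cert.sh script into the etcd-cert directory and run it there

$ cp k8s/scripts/etcd-cert.sh k8s/etcd-cert

- The script reads as follows: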

$ cat k8s/scripts/etcd-cert.sh

cat > ca-config.json <<EOF

{

"signing": {

"default": {

"expiry": "87600h"

},

"profiles": {

"www": {

"expiry": "87600h",

"usages": [

"signing",

"key encipherment",

"server auth",

"client auth"

]

}

}

}

}

EOF

cat > ca-csr.json <<EOF

{

"CN": "etcd CA",

"key": {

"algo": "rsa",

"size":

},

"names": [

{

"C": "CN",

"L": "Beijing",

"ST": "Beijing"

}

]

}

EOF

cfssl gencert -initca ca-csr.json | cfssljson -bare ca -

#-----------------------

cat > server-csr.json <<EOF

{

"CN": "etcd",

"hosts": [

"10.206.240.188",

"10.206.240.189",

"10.206.240.111"

],

"key": {

"algo": "rsa",

"size":

},

"names": [

{

"C": "CN",

"L": "BeiJing",

"ST": "BeiJing"

}

]

}

EOF

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=www server-csr.json | cfssljson -bare server

- Be sure to change the hosts entries in the server-csr.json section to 127.0.0.1 and the server's IP address

- Run the script

$ ./etcd-cert.sh

// :: [INFO] generating a new CA key and certificate from CSR

// :: [INFO] generate received request

// :: [INFO] received CSR

// :: [INFO] generating key: rsa-

// :: [INFO] encoded CSR

// :: [INFO] signed certificate with serial number

// :: [INFO] generate received request

// :: [INFO] received CSR

// :: [INFO] generating key: rsa-

// :: [INFO] encoded CSR

// :: [INFO] signed certificate with serial number

// :: [WARNING] This certificate lacks a "hosts" field. This makes it unsuitable for

websites. For more information see the Baseline Requirements for the Issuance and Management

of Publicly-Trusted Certificates, v.1.1., from the CA/Browser Forum (https://cabforum.org);

specifically, section 10.2. ("Information Requirements").

- Copy the certificates

$ cp *.pem /opt/etcd/ssl

- Run the k8s/scripts/etcd.sh script

$ ./k8s/scripts/etcd.sh etcd01 127.0.0.1

# or

$ ./k8s/scripts/etcd.sh etcd01 ${SERVER_IP}

- The k8s/scripts/etcd.sh script reads as follows:

$ cat k8s/scripts/etcd.sh

#!/bin/bash

# example: ./etcd.sh etcd01 192.168.1.10

ETCD_NAME=$1

ETCD_IP=$2

WORK_DIR=/opt/etcd

cat <<EOF >$WORK_DIR/cfg/etcd

#[Member]

ETCD_NAME="${ETCD_NAME}"

ETCD_DATA_DIR="/var/lib/etcd/default.etcd"

ETCD_LISTEN_PEER_URLS="https://${ETCD_IP}:2380"

ETCD_LISTEN_CLIENT_URLS="https://${ETCD_IP}:2379"

#[Clustering]

ETCD_INITIAL_ADVERTISE_PEER_URLS="https://${ETCD_IP}:2380"

ETCD_ADVERTISE_CLIENT_URLS="https://${ETCD_IP}:2379"

ETCD_INITIAL_CLUSTER="${ETCD_NAME}=https://${ETCD_IP}:2380"

ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"

ETCD_INITIAL_CLUSTER_STATE="new"

EOF

cat <<EOF >/usr/lib/systemd/system/etcd.service

[Unit]

Description=Etcd Server

After=network.target

After=network-online.target

Wants=network-online.target

[Service]

Type=notify

EnvironmentFile=${WORK_DIR}/cfg/etcd

ExecStart=${WORK_DIR}/bin/etcd \

--name=\${ETCD_NAME} \

--data-dir=\${ETCD_DATA_DIR} \

--listen-peer-urls=\${ETCD_LISTEN_PEER_URLS} \

--listen-client-urls=\${ETCD_LISTEN_CLIENT_URLS},http://127.0.0.1:2379 \

--advertise-client-urls=\${ETCD_ADVERTISE_CLIENT_URLS} \

--initial-advertise-peer-urls=\${ETCD_INITIAL_ADVERTISE_PEER_URLS} \

--initial-cluster=\${ETCD_INITIAL_CLUSTER} \

--initial-cluster-token=\${ETCD_INITIAL_CLUSTER_TOKEN} \

--initial-cluster-state=new \

--cert-file=${WORK_DIR}/ssl/server.pem \

--key-file=${WORK_DIR}/ssl/server-key.pem \

--peer-cert-file=${WORK_DIR}/ssl/server.pem \

--peer-key-file=${WORK_DIR}/ssl/server-key.pem \

--trusted-ca-file=${WORK_DIR}/ssl/ca.pem \

--peer-trusted-ca-file=${WORK_DIR}/ssl/ca.pem

Restart=on-failure

LimitNOFILE=

[Install]

WantedBy=multi-user.target

EOF

systemctl daemon-reload

systemctl enable etcd

systemctl restart etcd

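- Because the certificate already includes both 127.0.0.1 and the server's IP address, the second argument of the script can be either 127.0.0.1 or the server IP

- For privacy, this article hides the server IP and uses 127.0.0.1 as the node address wherever possible

- To check that the installation succeeded, run the following command: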

$ /opt/etcd/bin/etcdctl --ca-file=/opt/etcd/ssl/ca.pem --cert-file=/opt/etcd/ssl/server.pem --key-file=/opt/etcd/ssl/server-key.pem --endpoints="https://127.0.0.1:2379" cluster-health

member f6947f26c76d8a6b is healthy: got healthy result from https://127.0.0.1:2379

cluster is healthy

# or

$ /opt/etcd/bin/etcdctl --ca-file=/opt/etcd/ssl/ca.pem --cert-file=/opt/etcd/ssl/server.pem --key-file=/opt/etcd/ssl/server-key.pem --endpoints="https://${SERVER_IP}:2379" cluster-health

member f6947f26c76d8a6b is healthy: got healthy result from https://${SERVER_IP}:2379

cluster is healthy

- Because this is a single-node cluster, only one endpoint is specified. The message "member ... is healthy: got healthy result from ..." means that etcd started correctly

- The script generates the following configuration file

$ cat /opt/etcd/cfg/etcd

#[Member]

ETCD_NAME="etcd01"

ETCD_DATA_DIR="/var/lib/etcd/default.etcd"

ETCD_LISTEN_PEER_URLS="https://127.0.0.1:2380"

ETCD_LISTEN_CLIENT_URLS="https://127.0.0.1:2379"

#[Clustering]

ETCD_INITIAL_ADVERTISE_PEER_URLS="https://127.0.0.1:2380"

ETCD_ADVERTISE_CLIENT_URLS="https://127.0.0.1:2379"

ETCD_INITIAL_CLUSTER="etcd01=https://127.0.0.1:2380"

ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"

ETCD_INITIAL_CLUSTER_STATE="new"
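The generated file is plain KEY="value" text, so a shell can source it directly. As a rough sketch (both function names are my own and are not in etcd.sh), the advertised client URL can be read back from the file, and the cluster-health output shown earlier can be checked for the final "cluster is healthy" line.

```shell
# Hypothetical helpers, not part of the original etcd.sh.

# Print the advertised client URL from an etcd environment file.
# Pass a path containing a slash so that "." does not search PATH.
etcd_client_url() {
  ( . "$1" && printf '%s\n' "$ETCD_ADVERTISE_CLIENT_URLS" )
}

# Succeed when the cluster-health output read from stdin
# ends with the "cluster is healthy" summary line.
etcd_output_healthy() {
  grep -q '^cluster is healthy$'
}

# Usage:
#   url=$(etcd_client_url /opt/etcd/cfg/etcd)
#   /opt/etcd/bin/etcdctl --ca-file=/opt/etcd/ssl/ca.pem \
#     --cert-file=/opt/etcd/ssl/server.pem \
#     --key-file=/opt/etcd/ssl/server-key.pem \
#     --endpoints="$url" cluster-health | etcd_output_healthy
```

Sourcing happens in a subshell, so the ETCD_* variables do not leak into the calling shell.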

2.6 Deploying flannel

- Write the allocated subnet range into etcd for flannel to use:

$ /opt/etcd/bin/etcdctl --ca-file=/opt/etcd/ssl/ca.pem --cert-file=/opt/etcd/ssl/server.pem --key-file=/opt/etcd/ssl/server-key.pem --endpoints="https://127.0.0.1:2379" set /coreos.com/network/config '{ "Network": "172.17.0.0/16", "Backend": {"Type": "vxlan"}}'

{ "Network": "172.17.0.0/16", "Backend": {"Type": "vxlan"}}

- Check what was written

$ /opt/etcd/bin/etcdctl --ca-file=/opt/etcd/ssl/ca.pem --cert-file=/opt/etcd/ssl/server.pem --key-file=/opt/etcd/ssl/server-key.pem --endpoints="https://127.0.0.1:2379" get /coreos.com/network/config

{ "Network": "172.17.0.0/16", "Backend": {"Type": "vxlan"}}
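The value stored under /coreos.com/network/config is a small JSON document. If the network range or backend needs to change, building the string from parameters avoids quoting mistakes on the command line. A minimal sketch follows; the function name flannel_network_config is an assumption of mine.

```shell
# Hypothetical helper, not part of the original scripts.
# $1 = network CIDR, $2 = backend type (defaults to vxlan, as used above).
flannel_network_config() {
  printf '{ "Network": "%s", "Backend": {"Type": "%s"}}\n' "$1" "${2:-vxlan}"
}

# Usage: pass the result as the value of the etcdctl set command above:
#   ... set /coreos.com/network/config "$(flannel_network_config 172.17.0.0/16)"
```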

- Download the latest flannel release package

$ wget https://github.com/coreos/flannel/releases/download/v0.11.0/flannel-v0.11.0-linux-amd64.tar.gz

$ tar -zxvf flannel-v0.11.0-linux-amd64.tar.gz

$ mkdir -p /opt/kubernetes/{cfg,bin,ssl}

$ mv mk-docker-opts.sh flanneld /opt/kubernetes/bin/

- Run the k8s/scripts/flannel.sh script; the first argument is the etcd address

$ ./k8s/scripts/flannel.sh https://127.0.0.1:2379

- The script reads as follows:

$ cat k8s/scripts/flannel.sh

#!/bin/bash

ETCD_ENDPOINTS=${1:-"http://127.0.0.1:2379"}

cat <<EOF >/opt/kubernetes/cfg/flanneld

FLANNEL_OPTIONS="--etcd-endpoints=${ETCD_ENDPOINTS} \

-etcd-cafile=/opt/etcd/ssl/ca.pem \

-etcd-certfile=/opt/etcd/ssl/server.pem \

-etcd-keyfile=/opt/etcd/ssl/server-key.pem"

EOF

cat <<EOF >/usr/lib/systemd/system/flanneld.service

[Unit]

Description=Flanneld overlay address etcd agent

After=network-online.target network.target

Before=docker.service

[Service]

Type=notify

EnvironmentFile=/opt/kubernetes/cfg/flanneld

ExecStart=/opt/kubernetes/bin/flanneld --ip-masq \$FLANNEL_OPTIONS

ExecStartPost=/opt/kubernetes/bin/mk-docker-opts.sh -k DOCKER_NETWORK_OPTIONS -d /run/flannel/subnet.env

Restart=on-failure

[Install]

WantedBy=multi-user.target

EOF

systemctl daemon-reload

systemctl enable flanneld

systemctl restart flanneld

- Check the subnet assigned at startup

$ cat /run/flannel/subnet.env

DOCKER_OPT_BIP="--bip=172.17.23.1/24"

DOCKER_OPT_IPMASQ="--ip-masq=false"

DOCKER_OPT_MTU="--mtu=1450"

DOCKER_NETWORK_OPTIONS=" --bip=172.17.23.1/24 --ip-masq=false --mtu=1450"
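Since /run/flannel/subnet.env is also shell-sourceable, the bridge address that docker will receive can be read from it before touching the docker unit file. The helper below is only an illustration; the name docker_bip is my own.

```shell
# Hypothetical helper, not part of the original scripts.
# Prints the address/prefix that follows --bip= in the given subnet.env.
# Pass a path containing a slash so that "." does not search PATH.
docker_bip() {
  ( . "$1" && printf '%s\n' "${DOCKER_OPT_BIP#--bip=}" )
}

# Usage:
#   docker_bip /run/flannel/subnet.env
```

With the file shown above this should print the 172.17.23.1/24 address, which is the one docker0 is expected to carry after docker is restarted.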

- Run vim /usr/lib/systemd/system/docker.service to edit the docker configuration

[Unit]

Description=Docker Application Container Engine

Documentation=https://docs.docker.com

BindsTo=containerd.service

After=network-online.target firewalld.service containerd.service

Wants=network-online.target

Requires=docker.socket

[Service]

Type=notify

# the default is not to use systemd for cgroups because the delegate issues still

# exists and systemd currently does not support the cgroup feature set required

# for containers run by docker

EnvironmentFile=/run/flannel/subnet.env

ExecStart=/usr/bin/dockerd $DOCKER_NETWORK_OPTIONS -H unix:///var/run/docker.sock

#ExecStart=/usr/bin/dockerd -H fd:// --containerd=/run/containerd/containerd.soc

ExecReload=/bin/kill -s HUP $MAINPID

TimeoutSec=

RestartSec=

Restart=always

......

- Restart the docker service

$ systemctl daemon-reload

$ systemctl restart docker

- Inspect the flannel network; docker0 is inside the subnet assigned by flannel

$ ifconfig

docker0: flags=<UP,BROADCAST,MULTICAST> mtu 1500

inet 172.17.23.1 netmask 255.255.255.0 broadcast 172.17.23.

ether 02::c4::b7:e3 txqueuelen 0 (Ethernet)

RX packets 0 bytes 0 (.0 B)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 0 bytes 0 (.0 B)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

eth1: ......

flannel.: flags=<UP,BROADCAST,RUNNING,MULTICAST> mtu 1450

inet 172.17.23.0 netmask 255.255.255.255 broadcast 0.0.0.

ether 1e:7a:e8:a0:4d:a5 txqueuelen 0 (Ethernet)

RX packets 0 bytes 0 (.0 B)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 0 bytes 0 (.0 B)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

lo: flags=<UP,LOOPBACK,RUNNING> mtu 65536

inet 127.0.0.1 netmask 255.0.0.

loop txqueuelen 0 (Local Loopback)

RX packets 2807 bytes 220030 (.8 KiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 2807 bytes 220030 (.8 KiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

- Create a container and inspect its network

$ docker run -it centos: /bin/bash

[root@f04f38dfa5ec /]# yum install -y net-tools

[root@f04f38dfa5ec /]# ifconfig

eth0: flags=<UP,BROADCAST,RUNNING,MULTICAST> mtu

inet 172.17.23.2 netmask 255.255.255.0 broadcast 172.17.23.255

ether ::ac::: txqueuelen (Ethernet)

RX packets bytes (14.2 MiB)

RX errors dropped overruns frame

TX packets bytes (435.0 KiB)

TX errors dropped overruns carrier collisions

lo: flags=<UP,LOOPBACK,RUNNING> mtu

inet 127.0.0.1 netmask 255.0.0.0

loop txqueuelen (Local Loopback)

RX packets bytes (0.0 B)

RX errors dropped overruns frame

TX packets bytes (0.0 B)

TX errors dropped overruns carrier collisions

[root@f04f38dfa5ec /]# ping 172.17.23.1

PING 172.17.23.1 (172.17.23.1) () bytes of data.

bytes from 172.17.23.1: icmp_seq= ttl= time=0.056 ms

bytes from 172.17.23.1: icmp_seq= ttl= time=0.056 ms

bytes from 172.17.23.1: icmp_seq= ttl= time=0.046 ms

bytes from 172.17.23.1: icmp_seq= ttl= time=0.048 ms

bytes from 172.17.23.1: icmp_seq= ttl= time=0.049 ms

bytes from 172.17.23.1: icmp_seq= ttl= time=0.046 ms

bytes from 172.17.23.1: icmp_seq= ttl= time=0.055 ms

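- The container can ping the docker0 interface, which indicates that flannel is handling the routing

2.7 Installing kube-apiserver

- In k8s/scripts/k8s-cert.sh, change the hosts field of the server-csr.json section to 127.0.0.1 and the server's IP address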

cat > server-csr.json <<EOF

{

"CN": "kubernetes",

"hosts": [

"10.0.0.1",

"127.0.0.1",

"${SERVER_IP}",

"kubernetes",

"kubernetes.default",

"kubernetes.default.svc",

"kubernetes.default.svc.cluster",

"kubernetes.default.svc.cluster.local"

],

"key": {

"algo": "rsa",

"size":

},

"names": [

{

"C": "CN",

"L": "BeiJing",

"ST": "BeiJing",

"O": "k8s",

"OU": "System"

}

]

}

EOF
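Editing the hosts array by hand is easy to get wrong (a missing comma makes cfssl reject the file). As a sketch of one way to generate it, the helper below turns a list of addresses and names into a JSON array; the name hosts_json is hypothetical and nothing like it exists in k8s-cert.sh.

```shell
# Hypothetical helper, not part of the original k8s-cert.sh.
# Prints its arguments as a JSON array of strings on one line.
# Entries must not contain double quotes or backslashes.
hosts_json() {
  out=""
  for h in "$@"; do
    out="${out:+$out, }\"$h\""
  done
  printf '[%s]\n' "$out"
}

# Usage:
#   hosts_json 10.0.0.1 127.0.0.1 "$SERVER_IP" kubernetes kubernetes.default
```

The output can be pasted after "hosts": in the CSR file. The same approach would apply to the etcd server-csr.json from section 2.5.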

- Generate the certificates with the k8s/scripts/k8s-cert.sh script:

$ mkdir -p k8s/k8s-cert

$ cp k8s/scripts/k8s-cert.sh k8s/k8s-cert

$ cd k8s/k8s-cert

$ ./k8s-cert.sh

$ ls

admin.csr admin.pem ca-csr.json k8s-cert.sh kube-proxy-key.pem server-csr.json

admin-csr.json ca-config.json ca-key.pem kube-proxy.csr kube-proxy.pem server-key.pem

admin-key.pem ca.csr ca.pem kube-proxy-csr.json server.csr server.pem

$ cp ca*pem server*pem /opt/kubernetes/ssl/

- The script reads as follows:

$ cat k8s-cert.sh

cat > ca-config.json <<EOF

{

"signing": {

"default": {

"expiry": "87600h"

},

"profiles": {

"kubernetes": {

"expiry": "87600h",

"usages": [

"signing",

"key encipherment",

"server auth",

"client auth"

]

}

}

}

}

EOF

cat > ca-csr.json <<EOF

{

"CN": "kubernetes",

"key": {

"algo": "rsa",

"size":

},

"names": [

{

"C": "CN",

"L": "Beijing",

"ST": "Beijing",

"O": "k8s",

"OU": "System"

}

]

}

EOF

cfssl gencert -initca ca-csr.json | cfssljson -bare ca -

#-----------------------

cat > server-csr.json <<EOF

{

"CN": "kubernetes",

"hosts": [

"10.0.0.1",

"127.0.0.1",

"10.206.176.19",

"10.206.240.188",

"10.206.240.189",

"kubernetes",

"kubernetes.default",

"kubernetes.default.svc",

"kubernetes.default.svc.cluster",

"kubernetes.default.svc.cluster.local"

],

"key": {

"algo": "rsa",

"size":

},

"names": [

{

"C": "CN",

"L": "BeiJing",

"ST": "BeiJing",

"O": "k8s",

"OU": "System"

}

]

}

EOF

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes server-csr.json | cfssljson -bare server

#-----------------------

cat > admin-csr.json <<EOF

{

"CN": "admin",

"hosts": [],

"key": {

"algo": "rsa",

"size":

},

"names": [

{

"C": "CN",

"L": "BeiJing",

"ST": "BeiJing",

"O": "system:masters",

"OU": "System"

}

]

}

EOF

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes admin-csr.json | cfssljson -bare admin

#-----------------------

cat > kube-proxy-csr.json <<EOF

{

"CN": "system:kube-proxy",

"hosts": [],

"key": {

"algo": "rsa",

"size":

},

"names": [

{

"C": "CN",

"L": "BeiJing",

"ST": "BeiJing",

"O": "k8s",

"OU": "System"

}

]

}

EOF

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes kube-proxy-csr.json | cfssljson -bare kube-proxy

- Copy the kube-apiserver, kubectl, kube-controller-manager, kube-scheduler, kubelet, and kube-proxy binaries mentioned above into /opt/kubernetes/bin/

$ cp kube-apiserver kubectl kube-controller-manager kube-scheduler kubelet kube-proxy /opt/kubernetes/bin/

- Generate a random token; it becomes the first field of /opt/kubernetes/cfg/token.csv

$ head -c 16 /dev/urandom | od -An -t x | tr -d ' '

20cd735bd334f4334118f8be496df49d

$ cat /opt/kubernetes/cfg/token.csv

20cd735bd334f4334118f8be496df49d,kubelet-bootstrap,,"system:kubelet-bootstrap"
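The token above is 32 hexadecimal characters, which corresponds to 16 random bytes. As a small sketch of the same idea wrapped in a function (gen_token is my own name, not something from the scripts), using od -t x1 so that each byte is printed separately and the result does not depend on od's default word size:

```shell
# Hypothetical helper, not part of the original scripts.
# Prints 16 random bytes as 32 lowercase hexadecimal characters.
gen_token() {
  head -c 16 /dev/urandom | od -An -t x1 | tr -d ' \n'
  echo
}

# Usage:
#   token=$(gen_token)
```

The value would then be placed in the first field of token.csv, in front of the kubelet-bootstrap user name.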

- Run the k8s/scripts/apiserver.sh script to start kube-apiserver.service; the first argument is the Master node address and the second is the etcd cluster address

$ ./k8s/scripts/apiserver.sh ${SERVER_IP} https://127.0.0.1:2379

Created symlink from /etc/systemd/system/multi-user.target.wants/kube-apiserver.service to /usr/lib/systemd/system/kube-apiserver.service.

- The script reads as follows:

$ cat k8s/scripts/apiserver.sh

#!/bin/bash

MASTER_ADDRESS=$1

ETCD_SERVERS=$2

cat <<EOF >/opt/kubernetes/cfg/kube-apiserver

KUBE_APISERVER_OPTS="--logtostderr=true \\

--v= \\

--etcd-servers=${ETCD_SERVERS} \\

--bind-address=${MASTER_ADDRESS} \\

--secure-port= \\

--advertise-address=${MASTER_ADDRESS} \\

--allow-privileged=true \\

--service-cluster-ip-range=10.0.0.0/ \\

--enable-admission-plugins=NamespaceLifecycle,LimitRanger,ServiceAccount,ResourceQuota,NodeRestriction \\

--authorization-mode=RBAC,Node \\

--kubelet-https=true \\

--enable-bootstrap-token-auth \\

--token-auth-file=/opt/kubernetes/cfg/token.csv \\

--service-node-port-range=- \\

--tls-cert-file=/opt/kubernetes/ssl/server.pem \\

--tls-private-key-file=/opt/kubernetes/ssl/server-key.pem \\

--client-ca-file=/opt/kubernetes/ssl/ca.pem \\

--service-account-key-file=/opt/kubernetes/ssl/ca-key.pem \\

--etcd-cafile=/opt/etcd/ssl/ca.pem \\

--etcd-certfile=/opt/etcd/ssl/server.pem \\

--etcd-keyfile=/opt/etcd/ssl/server-key.pem"

EOF

cat <<EOF >/usr/lib/systemd/system/kube-apiserver.service

[Unit]

Description=Kubernetes API Server

Documentation=https://github.com/kubernetes/kubernetes

[Service]

EnvironmentFile=-/opt/kubernetes/cfg/kube-apiserver

ExecStart=/opt/kubernetes/bin/kube-apiserver \$KUBE_APISERVER_OPTS

Restart=on-failure

[Install]

WantedBy=multi-user.target

EOF

systemctl daemon-reload

systemctl enable kube-apiserver

systemctl restart kube-apiserver

- The script creates the kube-apiserver.service unit; check the service status

$ systemctl status kube-apiserver.service

* kube-apiserver.service - Kubernetes API Server

Loaded: loaded (/usr/lib/systemd/system/kube-apiserver.service; enabled; vendor preset: disabled)

Active: active (running) since Sun -- :: CST; 3min 10s ago

Docs: https://github.com/kubernetes/kubernetes

Main PID: (kube-apiserver)

Tasks:

Memory: 244.5M

CGroup: /system.slice/kube-apiserver.service

`- /opt/kubernetes/bin/kube-apiserver --logtostderr=true --v= --etcd-servers=https://127.0.0.1:...

Feb :: VM_121_198_centos kube-apiserver[]: I0216 ::10.491629 available_controller.g...ons

Feb :: VM_121_198_centos kube-apiserver[]: I0216 ::10.514914 httplog.go:] verb="G…668":

Feb :: VM_121_198_centos kube-apiserver[]: I0216 ::10.516879 httplog.go:] verb="G...8":

Feb :: VM_121_198_centos kube-apiserver[]: I0216 ::10.525747 httplog.go:] verb="G...8":

Feb :: VM_121_198_centos kube-apiserver[]: I0216 ::10.527263 httplog.go:] verb="G...8":

Feb :: VM_121_198_centos kube-apiserver[]: I0216 ::10.528568 httplog.go:] verb="G…668":

Feb :: VM_121_198_centos kube-apiserver[]: I0216 ::11.609546 httplog.go:] verb="G...8":

Feb :: VM_121_198_centos kube-apiserver[]: I0216 ::11.611355 httplog.go:] verb="G...8":

Feb :: VM_121_198_centos kube-apiserver[]: I0216 ::11.619297 httplog.go:] verb="G...8":

Feb :: VM_121_198_centos kube-apiserver[]: I0216 ::11.624253 httplog.go:] verb="G…668":

Hint: Some lines were ellipsized, use -l to show in full.

- View the configuration file of kube-apiserver.service

$ cat /opt/kubernetes/cfg/kube-apiserver

KUBE_APISERVER_OPTS="--logtostderr=true \

--v= \

--etcd-servers=https://127.0.0.1:2379 \

--bind-address=${SERVER_IP} \

--secure-port= \

--advertise-address=${SERVER_IP} \

--allow-privileged=true \

--service-cluster-ip-range=10.0.0.0/ \

--enable-admission-plugins=NamespaceLifecycle,LimitRanger,ServiceAccount,ResourceQuota,NodeRestriction \

--authorization-mode=RBAC,Node \

--kubelet-https=true \

--enable-bootstrap-token-auth \

--token-auth-file=/opt/kubernetes/cfg/token.csv \

--service-node-port-range=- \

--tls-cert-file=/opt/kubernetes/ssl/server.pem \

--tls-private-key-file=/opt/kubernetes/ssl/server-key.pem \

--client-ca-file=/opt/kubernetes/ssl/ca.pem \

--service-account-key-file=/opt/kubernetes/ssl/ca-key.pem \

--etcd-cafile=/opt/etcd/ssl/ca.pem \

--etcd-certfile=/opt/etcd/ssl/server.pem \

--etcd-keyfile=/opt/etcd/ssl/server-key.pem"
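When a service refuses to start, the first thing I usually want to know is which value a particular flag ended up with in the generated options file. The sketch below pulls one flag value out of such a file with sed; flag_value is a hypothetical name, and the approach assumes the one-flag-per-line layout shown above.

```shell
# Hypothetical helper, not part of the original scripts.
# $1 = options file, $2 = flag name without the leading dashes.
# Prints the value of the first matching --flag=value entry.
flag_value() {
  sed -n 's/.*--'"$2"'=\([^ "\\]*\).*/\1/p' "$1" | head -n 1
}

# Usage:
#   flag_value /opt/kubernetes/cfg/kube-apiserver etcd-servers
```

The value ends at the first space, double quote, or backslash, so the trailing line continuation is not included.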

2.8 Installing kube-scheduler

- Run the k8s/scripts/scheduler.sh script to create and start kube-scheduler.service; the first argument is the Master node address

$ ./k8s/scripts/scheduler.sh 127.0.0.1

Created symlink from /etc/systemd/system/multi-user.target.wants/kube-scheduler.service to /usr/lib/systemd/system/kube-scheduler.service.

- The script reads as follows:

$ cat k8s/scripts/scheduler.sh

#!/bin/bash

MASTER_ADDRESS=$1

cat <<EOF >/opt/kubernetes/cfg/kube-scheduler

KUBE_SCHEDULER_OPTS="--logtostderr=true \\

--v= \\

--master=${MASTER_ADDRESS}: \\

--leader-elect"

EOF

cat <<EOF >/usr/lib/systemd/system/kube-scheduler.service

[Unit]

Description=Kubernetes Scheduler

Documentation=https://github.com/kubernetes/kubernetes

[Service]

EnvironmentFile=-/opt/kubernetes/cfg/kube-scheduler

ExecStart=/opt/kubernetes/bin/kube-scheduler \$KUBE_SCHEDULER_OPTS

Restart=on-failure

[Install]

WantedBy=multi-user.target

EOF

systemctl daemon-reload

systemctl enable kube-scheduler

systemctl restart kube-scheduler

- The script creates the kube-scheduler.service unit; check the service status

$ systemctl status kube-scheduler.service

* kube-scheduler.service - Kubernetes Scheduler

Loaded: loaded (/usr/lib/systemd/system/kube-scheduler.service; enabled; vendor preset: disabled)

Active: active (running) since Sun -- :: CST; 5h 35min ago

Docs: https://github.com/kubernetes/kubernetes

Main PID: (kube-scheduler)

Tasks:

Memory: 12.4M

CGroup: /system.slice/kube-scheduler.service

`- /opt/kubernetes/bin/kube-scheduler --logtostderr=true --v= --master=127.0.0.1: --leader-...

Feb :: VM_121_198_centos kube-scheduler[]: I0216 ::36.873191 reflector.go:] k8s....ved

Feb :: VM_121_198_centos kube-scheduler[]: I0216 ::33.873911 reflector.go:] k8s....ved

Feb :: VM_121_198_centos kube-scheduler[]: I0216 ::34.876413 reflector.go:] k8s....ved

Feb :: VM_121_198_centos kube-scheduler[]: I0216 ::05.874120 reflector.go:] k8s....ved

Feb :: VM_121_198_centos kube-scheduler[]: I0216 ::38.873990 reflector.go:] k8s....ved

Feb :: VM_121_198_centos kube-scheduler[]: I0216 ::47.869403 reflector.go:] k8s....ved

Feb :: VM_121_198_centos kube-scheduler[]: I0216 ::16.876848 reflector.go:] k8s....ved

Feb :: VM_121_198_centos kube-scheduler[]: I0216 ::24.873540 reflector.go:] k8s....ved

Feb :: VM_121_198_centos kube-scheduler[]: I0216 ::52.876115 reflector.go:] k8s....ved

Feb :: VM_121_198_centos kube-scheduler[]: I0216 ::42.874884 reflector.go:] k8s....ved

Hint: Some lines were ellipsized, use -l to show in full.

2.9 Installing kube-controller-manager

- Run the k8s/scripts/controller-manager.sh script to create and start kube-controller-manager.service; the first argument is the Master node address

$ ./k8s/scripts/controller-manager.sh 127.0.0.1

Created symlink from /etc/systemd/system/multi-user.target.wants/kube-controller-manager.service to /usr/lib/systemd/system/kube-controller-manager.service.

- The script reads as follows:

$ cat ./k8s/scripts/controller-manager.sh

#!/bin/bash

MASTER_ADDRESS=$1

cat <<EOF >/opt/kubernetes/cfg/kube-controller-manager

KUBE_CONTROLLER_MANAGER_OPTS="--logtostderr=true \\

--v= \\

--master=${MASTER_ADDRESS}: \\

--leader-elect=true \\

--address=127.0.0.1 \\

--service-cluster-ip-range=10.0.0.0/ \\

--cluster-name=kubernetes \\

--cluster-signing-cert-file=/opt/kubernetes/ssl/ca.pem \\

--cluster-signing-key-file=/opt/kubernetes/ssl/ca-key.pem \\

--root-ca-file=/opt/kubernetes/ssl/ca.pem \\

--service-account-private-key-file=/opt/kubernetes/ssl/ca-key.pem \\

--experimental-cluster-signing-duration=87600h0m0s"

EOF

cat <<EOF >/usr/lib/systemd/system/kube-controller-manager.service

[Unit]

Description=Kubernetes Controller Manager

Documentation=https://github.com/kubernetes/kubernetes

[Service]

EnvironmentFile=-/opt/kubernetes/cfg/kube-controller-manager

ExecStart=/opt/kubernetes/bin/kube-controller-manager \$KUBE_CONTROLLER_MANAGER_OPTS

Restart=on-failure

[Install]

WantedBy=multi-user.target

EOF

systemctl daemon-reload

systemctl enable kube-controller-manager

systemctl restart kube-controller-manager

- The script creates the kube-controller-manager.service unit; check the service status

$ systemctl status kube-controller-manager.service

* kube-controller-manager.service - Kubernetes Controller Manager

Loaded: loaded (/usr/lib/systemd/system/kube-controller-manager.service; enabled; vendor preset: disabled)

Active: active (running) since Sun -- :: CST; 54s ago

Docs: https://github.com/kubernetes/kubernetes

Main PID: (kube-controller)

Tasks:

Memory: 24.9M

CGroup: /system.slice/kube-controller-manager.service

`- /opt/kubernetes/bin/kube-controller-manager --logtostderr=true --v= --master=127.0.0.1: ...

Feb :: VM_121_198_centos kube-controller-manager[]: I0216 ::57.577189 pv_controller_...er

Feb :: VM_121_198_centos kube-controller-manager[]: I0216 ::00.128738 request.go:...2s

Feb :: VM_121_198_centos kube-controller-manager[]: I0216 ::00.178743 request.go:...2s

Feb :: VM_121_198_centos kube-controller-manager[]: I0216 ::00.228729 request.go:...2s

Feb :: VM_121_198_centos kube-controller-manager[]: I0216 ::00.278737 request.go:...2s

Feb :: VM_121_198_centos kube-controller-manager[]: I0216 ::00.635791 request.go:...2s

Feb :: VM_121_198_centos kube-controller-manager[]: I0216 ::00.685804 request.go:...2s

Feb :: VM_121_198_centos kube-controller-manager[]: I0216 ::00.735807 request.go:...2s

Feb :: VM_121_198_centos kube-controller-manager[]: I0216 ::00.785776 request.go:...2s

Feb :: VM_121_198_centos kube-controller-manager[]: I0216 ::00.786828 resource_quota...nc

Hint: Some lines were ellipsized, use -l to show in full.

- At this point, all Master components are installed

- Add the binary directory to PATH: export PATH=$PATH:/opt/kubernetes/bin/

$ vim ~/.zshrc

......

export PATH=$PATH:/opt/kubernetes/bin/

$ source ~/.zshrc

- Check the status of the Master components

$ kubectl get cs

NAME STATUS MESSAGE ERROR

controller-manager Healthy ok

scheduler Healthy ok

etcd- Healthy {"health":"true"}
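For scripted checks, the same table can be reduced to a single exit status. This is a rough sketch that reads the kubectl get cs output from stdin and fails if any row has a status other than Healthy; the function name all_components_healthy is my own.

```shell
# Hypothetical helper, not part of the original scripts.
# Reads `kubectl get cs` output on stdin. Skips the header row and
# fails if the STATUS column of any component is not "Healthy".
all_components_healthy() {
  awk 'NR > 1 && $2 != "Healthy" { bad = 1 } END { exit bad }'
}

# Usage (assumes kubectl is on PATH and the Master components are running):
#   kubectl get cs | all_components_healthy && echo "master is healthy"
```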

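2.10 Installing kubelet

- From this section on, the components being installed belong to the Node. Because this is a single-node setup, they are installed on the same machine

- Create a working directory and copy the k8s/scripts/kubeconfig.sh script into it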

$ mkdir -p k8s/kubeconfig

$ cp k8s/scripts/kubeconfig.sh k8s/kubeconfig

$ cd k8s/kubeconfig

- Look up the kube-apiserver token

$ cat /opt/kubernetes/cfg/token.csv

20cd735bd334f4334118f8be496df49d,kubelet-bootstrap,,"system:kubelet-bootstrap"

- In the script, find the section that sets the client credentials, which looks like this:

......

# Set the client credentials

kubectl config set-credentials kubelet-bootstrap \

--token=${BOOTSTRAP_TOKEN} \

--kubeconfig=bootstrap.kubeconfig

......

- Replace the token with the one from /opt/kubernetes/cfg/token.csv

# Set the client credentials

kubectl config set-credentials kubelet-bootstrap \

--token=20cd735bd334f4334118f8be496df49d \

--kubeconfig=bootstrap.kubeconfig
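Instead of copying the token by hand, it can be read from the first field of token.csv. A minimal sketch, assuming the comma-separated layout shown above; token_from_csv is a hypothetical name and not part of kubeconfig.sh.

```shell
# Hypothetical helper, not part of the original kubeconfig.sh.
# Prints the first comma-separated field of the first line of $1.
token_from_csv() {
  head -n 1 "$1" | cut -d, -f1
}

# Usage:
#   kubectl config set-credentials kubelet-bootstrap \
#     --token="$(token_from_csv /opt/kubernetes/cfg/token.csv)" \
#     --kubeconfig=bootstrap.kubeconfig
```

This keeps the bootstrap kubeconfig and the apiserver token file in sync, which is the point of the manual replacement step.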

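- Run the kubeconfig.sh script. The first argument is the IP address kube-apiserver listens on and the second is the k8s-cert directory created earlier. Then copy the generated files into the configuration directory

$ ./kubeconfig.sh ${SERVER_IP} ../k8s-cert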

f920d3cad77834c418494860695ea887

Cluster "kubernetes" set.

User "kubelet-bootstrap" set.

Context "default" modified.

Switched to context "default".

Cluster "kubernetes" set.

User "kube-proxy" set.

Context "default" modified.

Switched to context "default".

$ cp bootstrap.kubeconfig kube-proxy.kubeconfig /opt/kubernetes/cfg/

- The script reads as follows:

$ cat kubeconfig.sh

# Create the TLS bootstrapping token

BOOTSTRAP_TOKEN=$(head -c 16 /dev/urandom | od -An -t x | tr -d ' ')

echo ${BOOTSTRAP_TOKEN}

cat > token.csv <<EOF

${BOOTSTRAP_TOKEN},kubelet-bootstrap,,"system:kubelet-bootstrap"

EOF

#----------------------

APISERVER=$1

SSL_DIR=$2

# Create the kubelet bootstrapping kubeconfig

export KUBE_APISERVER="https://$APISERVER:6443"

# Set the cluster parameters

kubectl config set-cluster kubernetes \

--certificate-authority=$SSL_DIR/ca.pem \

--embed-certs=true \

--server=${KUBE_APISERVER} \

--kubeconfig=bootstrap.kubeconfig

# Set the client credentials

kubectl config set-credentials kubelet-bootstrap \

--token=20cd735bd334f4334118f8be496df49d \

--kubeconfig=bootstrap.kubeconfig

# Set the context parameters

kubectl config set-context default \

--cluster=kubernetes \

--user=kubelet-bootstrap \

--kubeconfig=bootstrap.kubeconfig

# Set the default context

kubectl config use-context default --kubeconfig=bootstrap.kubeconfig

#----------------------

# Create the kube-proxy kubeconfig file

kubectl config set-cluster kubernetes \

--certificate-authority=$SSL_DIR/ca.pem \

--embed-certs=true \

--server=${KUBE_APISERVER} \

--kubeconfig=kube-proxy.kubeconfig

kubectl config set-credentials kube-proxy \

--client-certificate=$SSL_DIR/kube-proxy.pem \

--client-key=$SSL_DIR/kube-proxy-key.pem \

--embed-certs=true \

--kubeconfig=kube-proxy.kubeconfig

kubectl config set-context default \

--cluster=kubernetes \

--user=kube-proxy \

--kubeconfig=kube-proxy.kubeconfig

kubectl config use-context default --kubeconfig=kube-proxy.kubeconfig

- Run the k8s/scripts/kubelet.sh script to create and start kubelet.service; the first argument is the Node address (127.0.0.1 or the server IP)

$ ./k8s/scripts/kubelet.sh 127.0.0.1

Created symlink from /etc/systemd/system/multi-user.target.wants/kubelet.service to /usr/lib/systemd/system/kubelet.service.

- The script reads as follows:

$ cat ./k8s/scripts/kubelet.sh

#!/bin/bash

NODE_ADDRESS=$1

DNS_SERVER_IP=${2:-"10.0.0.2"}

cat <<EOF >/opt/kubernetes/cfg/kubelet

KUBELET_OPTS="--logtostderr=true \\

--v= \\

--hostname-override=${NODE_ADDRESS} \\

--node-labels=node.kubernetes.io/k8s-master=true \\

--kubeconfig=/opt/kubernetes/cfg/kubelet.kubeconfig \\

--bootstrap-kubeconfig=/opt/kubernetes/cfg/bootstrap.kubeconfig \\

--config=/opt/kubernetes/cfg/kubelet.config \\

--cert-dir=/opt/kubernetes/ssl \\

--pod-infra-container-image=registry.cn-hangzhou.aliyuncs.com/google-containers/pause-amd64:3.0"

EOF

cat <<EOF >/opt/kubernetes/cfg/kubelet.config

kind: KubeletConfiguration

apiVersion: kubelet.config.k8s.io/v1beta1

address: ${NODE_ADDRESS}

port:

readOnlyPort:

cgroupDriver: cgroupfs

clusterDNS:

- ${DNS_SERVER_IP}

clusterDomain: cluster.local.

failSwapOn: false

authentication:

anonymous:

enabled: true

EOF

cat <<EOF >/usr/lib/systemd/system/kubelet.service

[Unit]

Description=Kubernetes Kubelet

After=docker.service

Requires=docker.service

[Service]

EnvironmentFile=/opt/kubernetes/cfg/kubelet

ExecStart=/opt/kubernetes/bin/kubelet \$KUBELET_OPTS

Restart=on-failure

KillMode=process [Install]

WantedBy=multi-user.target

EOF systemctl daemon-reload

systemctl enable kubelet

systemctl restart kubelet
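
The script depends on how bash treats an unquoted here-document (<<EOF): ${NODE_ADDRESS} is expanded at the moment the file is written, \$KUBELET_OPTS is written out as a literal $KUBELET_OPTS so that systemd can resolve it later from the EnvironmentFile, and \\ becomes a single trailing backslash. A minimal sketch of that behaviour follows; it writes to a scratch file (demo_file is a hypothetical name) instead of /opt/kubernetes, and the NODE_ADDRESS value is only an example.

```shell
#!/bin/bash
# Sketch: how an unquoted here-document treats ${VAR}, \$VAR and \\.
# demo_file is a hypothetical scratch file; nothing under /opt is touched.

NODE_ADDRESS="127.0.0.1"      # example value, normally taken from $1
demo_file="$(mktemp)"

cat <<EOF >"$demo_file"
KUBELET_OPTS="--hostname-override=${NODE_ADDRESS} \\
--kubeconfig=/opt/kubernetes/cfg/kubelet.kubeconfig"
ExecStart=/opt/kubernetes/bin/kubelet \$KUBELET_OPTS
EOF

# Show what actually landed in the file:
#   ${NODE_ADDRESS} -> 127.0.0.1      (expanded while writing)
#   \\              -> \              (one literal backslash)
#   \$KUBELET_OPTS  -> $KUBELET_OPTS  (left for systemd to expand)
cat "$demo_file"
```

Quoting the delimiter (<<'EOF') would disable all three substitutions, which is why the script leaves it unquoted and escapes only the parts that must survive verbatim.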

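- Create a cluster role binding that grants the kubelet-bootstrap user the system:node-bootstrapper role, so that the kubelet can connect to kube-apiserver and request certificate signing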

$ kubectl create clusterrolebinding kubelet-bootstrap --clusterrole=system:node-bootstrapper --user=kubelet-bootstrap

clusterrolebinding.rbac.authorization.k8s.io/kubelet-bootstrap created

- Check the certificate signing request

$ kubectl get csr

NAME AGE REQUESTOR CONDITION

node-csr-080q0dk5uSm0JxED14c6UB7q4jeUSCUHUxqosnqtnpA 16s kubelet-bootstrap Pending

- Approve the request so that the certificate is issued

$ kubectl certificate approve node-csr-080q0dk5uSm0JxED14c6UB7q4jeUSCUHUxqosnqtnpA

certificatesigningrequest.certificates.k8s.io/node-csr-080q0dk5uSm0JxED14c6UB7q4jeUSCUHUxqosnqtnpA approved

$ kubectl get csr

NAME AGE REQUESTOR CONDITION

node-csr-080q0dk5uSm0JxED14c6UB7q4jeUSCUHUxqosnqtnpA 2m51s kubelet-bootstrap Approved,Issued
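
Copying the CSR name by hand becomes tedious once several nodes are bootstrapping. As a rough sketch, the pending names can be filtered out of the kubectl get csr listing. The helper name pending_csrs is hypothetical, and the filter assumes that CONDITION is the last column, as in the listing above.

```shell
#!/bin/bash
# Sketch: list the names of CSRs whose CONDITION column is "Pending".
# pending_csrs is a hypothetical helper; it reads "kubectl get csr" output
# on stdin and assumes CONDITION is the last column, as in the listing above.

pending_csrs() {
  # Skip the header line, keep rows whose last field is exactly "Pending".
  awk 'NR > 1 && $NF == "Pending" { print $1 }'
}

# Example using the listing shown above as input.
pending_csrs <<'EOF'
NAME AGE REQUESTOR CONDITION
node-csr-080q0dk5uSm0JxED14c6UB7q4jeUSCUHUxqosnqtnpA 16s kubelet-bootstrap Pending
EOF
```

On a live cluster the helper could be chained as kubectl get csr | pending_csrs | xargs -r kubectl certificate approve, where -r is a GNU xargs option that skips the call when nothing is pending. Approving in bulk is only reasonable when every requesting node is trusted.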

- List the cluster nodes

$ kubectl get node

NAME STATUS ROLES AGE VERSION

127.0.0.1 Ready <none> 77s v1.18.0-alpha.5.158+1c60045db0bd6e

- The node is already in the Ready state, which means it has joined the cluster successfully

- Because this Node also serves as the Master, label it with the master role

$ kubectl label node 127.0.0.1 node-role.kubernetes.io/master=true

node/127.0.0.1 labeled

$ kubectl get node

NAME STATUS ROLES AGE VERSION

127.0.0.1 Ready master 4m21s v1.18.0-alpha.5.158+1c60045db0bd6e
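
The ROLES column changes from <none> to master because kubectl derives it from node labels whose key starts with node-role.kubernetes.io/; the part after the slash is displayed as the role. The label set by the kubelet script (node.kubernetes.io/k8s-master=true) has a different prefix, so it does not show up there. The sketch below mimics that mapping for a list of key=value labels; roles_from_labels is a hypothetical helper, not a kubectl feature.

```shell
#!/bin/bash
# Sketch: derive the ROLES value from node labels, similar in spirit to what
# "kubectl get node" displays. roles_from_labels is a hypothetical helper.

roles_from_labels() {
  local prefix="node-role.kubernetes.io/"
  local label key roles=""
  for label in "$@"; do
    key="${label%%=*}"                 # drop the "=value" part
    case "$key" in
      "$prefix"?*)                     # key has the role prefix and a name
        roles="${roles:+$roles,}${key#"$prefix"}"
        ;;
    esac
  done
  printf '%s\n' "${roles:-<none>}"
}

# Before labelling: only the label set by the kubelet script is present.
roles_from_labels "node.kubernetes.io/k8s-master=true"

# After "kubectl label node 127.0.0.1 node-role.kubernetes.io/master=true".
roles_from_labels "node.kubernetes.io/k8s-master=true" \
                  "node-role.kubernetes.io/master=true"
```

The first call prints <none> and the second prints master, matching the two kubectl get node listings above.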

2.11 Installing kube-proxy

- Run the k8s/scripts/proxy.sh script to create and start the kube-proxy.service service; the first argument is the Node address

$ ./k8s/scripts/proxy.sh 127.0.0.1

Created symlink from /etc/systemd/system/multi-user.target.wants/kube-proxy.service to /usr/lib/systemd/system/kube-proxy.service.

- The script contents are as follows:

$ cat ./k8s/scripts/proxy.sh

#!/bin/bash

NODE_ADDRESS=$1

cat <<EOF >/opt/kubernetes/cfg/kube-proxy
KUBE_PROXY_OPTS="--logtostderr=true \\
--v= \\
--hostname-override=${NODE_ADDRESS} \\
--cluster-cidr=10.0.0.0/ \\
--proxy-mode=ipvs \\
--kubeconfig=/opt/kubernetes/cfg/kube-proxy.kubeconfig"
EOF

cat <<EOF >/usr/lib/systemd/system/kube-proxy.service
[Unit]
Description=Kubernetes Proxy
After=network.target

[Service]
EnvironmentFile=-/opt/kubernetes/cfg/kube-proxy
ExecStart=/opt/kubernetes/bin/kube-proxy \$KUBE_PROXY_OPTS
Restart=on-failure

[Install]
WantedBy=multi-user.target
EOF

systemctl daemon-reload
systemctl enable kube-proxy
systemctl restart kube-proxy
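
Because the script sets --proxy-mode=ipvs, the IPVS kernel modules need to be loaded on the node; when they are missing, kube-proxy typically falls back to iptables mode. The sketch below reports which of the commonly required modules are absent from lsmod-style output. The helper name missing_ipvs_modules is hypothetical, and the module list is an assumption (older kernels use nf_conntrack_ipv4 instead of nf_conntrack).

```shell
#!/bin/bash
# Sketch: report IPVS-related kernel modules missing from lsmod-style input.
# missing_ipvs_modules is a hypothetical helper; the module list below is an
# assumption and may need adjusting for the kernel in use.

missing_ipvs_modules() {
  local loaded module missing=""
  # The first column of lsmod output is the module name; skip the header.
  loaded="$(awk 'NR > 1 { print $1 }')"
  for module in ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh nf_conntrack; do
    if ! printf '%s\n' "$loaded" | grep -qxF -- "$module"; then
      missing="${missing:+$missing }$module"
    fi
  done
  printf '%s\n' "$missing"
}

# Example with made-up lsmod output (sizes and use counts are illustrative).
missing_ipvs_modules <<'EOF'
Module                  Size  Used by
ip_vs_rr               12600  0
ip_vs                 145497  2 ip_vs_rr
nf_conntrack          133095  1 ip_vs
EOF
```

On a real node the check would be run as lsmod | missing_ipvs_modules, and each reported module could then be loaded with modprobe before kube-proxy is restarted.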

- The script creates the kube-proxy.service service; check its status

$ systemctl status kube-proxy.service

* kube-proxy.service - Kubernetes Proxy

Loaded: loaded (/usr/lib/systemd/system/kube-proxy.service; enabled; vendor preset: disabled)

Active: active (running) since Sun -- :: CST; 4min 13s ago

Main PID: (kube-proxy)

Tasks:

Memory: 7.6M

CGroup: /system.slice/kube-proxy.service

`- /opt/kubernetes/bin/kube-proxy --logtostderr=true --v= --hostname-override=127.0.0.1 --cluste...

Feb :: VM_121_198_centos kube-proxy[]: I0216 ::20.716190 config.go:] Calling han...date

Feb :: VM_121_198_centos kube-proxy[]: I0216 ::20.800724 config.go:] Calling han...date

Feb :: VM_121_198_centos kube-proxy[]: I0216 ::22.724946 config.go:] Calling han...date

Feb :: VM_121_198_centos kube-proxy[]: I0216 ::22.809768 config.go:] Calling han...date

Feb :: VM_121_198_centos kube-proxy[]: I0216 ::24.733676 config.go:] Calling han...date

Feb :: VM_121_198_centos kube-proxy[]: I0216 ::24.818662 config.go:] Calling han...date

Feb :: VM_121_198_centos kube-proxy[]: I0216 ::26.743754 config.go:] Calling han...date

Feb :: VM_121_198_centos kube-proxy[]: I0216 ::26.830673 config.go:] Calling han...date

Feb :: VM_121_198_centos kube-proxy[]: I0216 ::28.755816 config.go:] Calling han...date

Feb :: VM_121_198_centos kube-proxy[]: I0216 ::28.838915 config.go:] Calling han...date

Hint: Some lines were ellipsized, use -l to show in full.

2.12 Verifying the installation

- Create the YAML file

$ mkdir -p k8s/yamls

$ cd k8s/yamls

$ vim nginx-deployment.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
spec:
  selector:
    matchLabels:
      app: nginx
  replicas: 2
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: nginx:1.7.9
        ports:
        - containerPort: 80

- Create the Deployment object and list the Pods it generates; once they reach the Running state, the Deployment has been created successfully

$ kubectl apply -f nginx-deployment.yaml

$ kubectl get pods

NAME READY STATUS RESTARTS AGE

nginx-deployment-54f57cf6bf-c24p4 / Running 12s

nginx-deployment-54f57cf6bf-w2pqp / Running 12s

- Inspect the details of a Pod

$ kubectl describe pod nginx-deployment-54f57cf6bf-c24p4

Name: nginx-deployment-54f57cf6bf-c24p4

Namespace: default

Priority:

Node: 127.0.0.1/127.0.0.1

Start Time: Sun, Feb :: +

Labels: app=nginx

pod-template-hash=54f57cf6bf

Annotations: <none>

Status: Running

IP: 172.17.23.2

IPs:

IP: 172.17.23.2

Controlled By: ReplicaSet/nginx-deployment-54f57cf6bf

Containers:

nginx:

Container ID: docker://ffdfdefa8a743e5911634ca4b4d5c00b3e98955799c7c52c8040e6a8161706f9

Image: nginx:1.7.

Image ID: docker-pullable://nginx@sha256:e3456c851a152494c3e4ff5fcc26f240206abac0c9d794affb40e0714846c451

Port: /TCP

Host Port: /TCP

State: Running

Started: Sun, Feb :: +

Ready: True

Restart Count:

Environment: <none>

Mounts:

/var/run/secrets/kubernetes.io/serviceaccount from default-token-x7gjm (ro)

Conditions:

Type Status

Initialized True

Ready True

ContainersReady True

PodScheduled True

Volumes:

default-token-x7gjm:

Type: Secret (a volume populated by a Secret)

SecretName: default-token-x7gjm

Optional: false

QoS Class: BestEffort

Node-Selectors: <none>

Tolerations: node.kubernetes.io/not-ready:NoExecute for 300s

node.kubernetes.io/unreachable:NoExecute for 300s

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Scheduled <unknown> default-scheduler Successfully assigned default/nginx-deployment-54f57cf6bf-c24p4 to 127.0.0.1

Normal Pulled 2m11s kubelet, 127.0.0.1 Container image "nginx:1.7.9" already present on machine

Normal Created 2m11s kubelet, 127.0.0.1 Created container nginx

Normal Started 2m11s kubelet, 127.0.0.1 Started container nginx

- If the following error is reported while inspecting the Pod:

Error from server (Forbidden): Forbidden (user=system:anonymous, verb=get, resource=nodes, subresource=proxy)

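- then bind the cluster-admin role to the system:anonymous user (this gives unauthenticated requests full control of the cluster, so it is only suitable for a disposable test environment):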

$ kubectl create clusterrolebinding system:anonymous --clusterrole=cluster-admin --user=system:anonymous

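3. Summary

- Compared with kubeadm, a binary installation is considerably more involved

- However, development and testing against the Kubernetes source code require deploying from binaries

- Knowing how to deploy a k8s cluster from binaries is therefore very important

- All of the scripts above have been uploaded to a GitHub repository

- Questions and criticism are welcome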
