[Repost] Getting Started with the Beautiful Soup Library (bs4)

```python
from bs4 import BeautifulSoup
import requests

r = requests.get('http://www.23us.so/')
html = r.text
soup = BeautifulSoup(html, 'html.parser')
print(soup.prettify())  # Python 3: print is a function
```


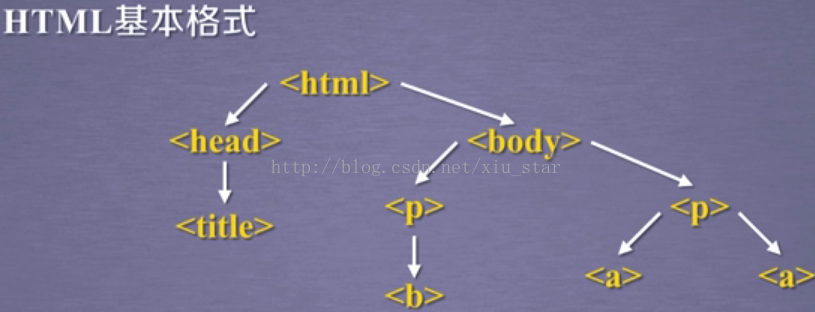

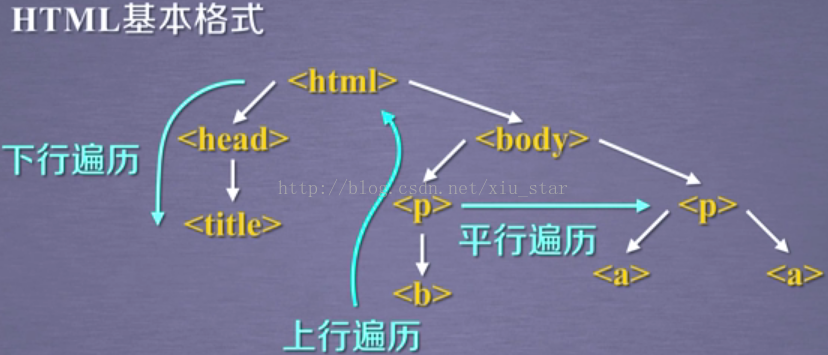

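Downward traversal of the tag tree:

- `.contents` attribute: a list of child nodes; stores all direct children of a `<tag>` in a list

- `.children` attribute: an iterator over child nodes; similar to `.contents`, used to loop over the direct children

- `.descendants` attribute: an iterator over descendant nodes; includes every node nested below the tag, used to loop over all of them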
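
To see how the three attributes differ without depending on the demo page, here is a minimal sketch that parses a small HTML string. The markup is made up for illustration and is not the content of demo.html. Text nodes appear among the descendants as `NavigableString` objects, whose `.name` is `None`.

```python
from bs4 import BeautifulSoup

# Hypothetical inline markup so the sketch runs without network access
html = ("<html><body>"
        "<p class='title'><b>The title</b></p>"
        "<p>Intro <a href='#'>link</a></p>"
        "</body></html>")
soup = BeautifulSoup(html, "html.parser")

# .contents: a list holding only the direct children of <body>
contents = soup.body.contents
print([c.name for c in contents])  # ['p', 'p']

# .children: an iterator over the same direct children
child_names = [c.name for c in soup.body.children]

# .descendants: an iterator over every node below <body>, in document order,
# including text nodes (NavigableString objects, whose .name is None)
descendant_names = [d.name for d in soup.body.descendants]
texts = [str(d) for d in soup.body.descendants if d.name is None]

print(child_names)       # ['p', 'p']
print(descendant_names)  # ['p', 'b', None, 'p', None, 'a', None]
print(texts)             # ['The title', 'Intro ', 'link']
```

`.contents` and `.children` stop at the two `<p>` tags, while `.descendants` also reaches `<b>`, `<a>`, and the strings inside them.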

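```python
from bs4 import BeautifulSoup  # the beautifulsoup4 package is imported under the short name bs4
import requests

r = requests.get('http://python123.io/ws/demo.html')
demo = r.text
soup = BeautifulSoup(demo, 'html.parser')  # parser: html.parser

# .contents: list of the direct children of <body>
children = soup.body.contents
print(children)

# .descendants: loop over every node nested below <body>
for child in soup.body.descendants:
    print(child)
```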

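Upward traversal of the tag tree:

- `.parent` attribute: the parent tag of a node

- `.parents` attribute: an iterator over a node's ancestor tags, used to loop over its ancestors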
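
A minimal sketch of upward traversal on a small, made-up HTML string (an assumption for illustration, not the demo page). One detail worth knowing when looping over `.parents`: the last ancestor is the `BeautifulSoup` object itself, named `"[document]"`, and its own `.parent` is `None`.

```python
from bs4 import BeautifulSoup

# Hypothetical inline markup so the sketch runs without network access
html = "<html><body><p><a href='#'>link</a></p></body></html>"
soup = BeautifulSoup(html, "html.parser")
link = soup.a  # the first <a> tag in the document

# .parent: the tag that directly contains <a>
print(link.parent.name)  # p

# .parents: walks up from the nearest ancestor to the root; the last item is
# the BeautifulSoup object itself, whose name is "[document]"
ancestor_names = [p.name for p in link.parents]
print(ancestor_names)  # ['p', 'body', 'html', '[document]']

# The BeautifulSoup object sits at the top, so its .parent is None
print(soup.parent)  # None
```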

```python
from bs4 import BeautifulSoup  # the beautifulsoup4 package is imported under the short name bs4
import requests

r = requests.get('http://python123.io/ws/demo.html')
demo = r.text
soup = BeautifulSoup(demo, 'html.parser')  # parser: html.parser
print(soup.prettify())  # print the parsed document with one node per line, indented
```
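
Because the demo page requires network access, here is an offline sketch of the same `prettify()` call on a small HTML string. The markup is a hypothetical example chosen for illustration.

```python
from bs4 import BeautifulSoup

# Hypothetical inline markup so the sketch runs without network access
html = "<html><body><p>hi</p></body></html>"
soup = BeautifulSoup(html, "html.parser")

# prettify() returns a str with each tag and text node on its own line,
# indented one step deeper for every level of nesting
pretty = soup.prettify()
print(pretty)

# Stripping the indentation shows the line-by-line structure
stripped = [line.strip() for line in pretty.splitlines()]
print(stripped)
```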

