AlphaZero Parallel Gomoku AI

AlphaZero-Gomoku-MPI

Link

GitHub: AlphaZero-Gomoku-MPI

Overview

This repo is based on junxiaosong/AlphaZero_Gomoku, to which I am sincerely grateful.

What I did:

- Implemented an asynchronous, parallel self-play training pipeline in the style of AlphaGo Zero

- Wrote a root-parallel MCTS (independent searches vote on a move, ensemble-style)

- Used a ResNet structure for the model and added a transfer learning API so that a larger-board model can be trained starting from a small-board model (a form of pre-training, to save time)
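As a rough illustration of the root-parallel vote, the sketch below sums the root visit counts produced by several independent searches and picks the most visited move. The exact voting rule used in the repo is not stated here, so summing visit counts is an assumption, and `vote_move` and the sample counts are hypothetical. In an MPI setting the per-worker counts would presumably be collected on one rank (for example with mpi4py's `comm.gather`) before voting.

```python
import numpy as np

def vote_move(visit_counts_per_worker):
    """Combine root visit counts from independent searches and pick a move.

    visit_counts_per_worker: list of 1-D arrays, one per worker, each of
    length board_width * board_height (0 for illegal or unvisited moves).
    Assumption: the ensemble vote is a plain sum of visit counts.
    """
    total = np.sum(np.asarray(visit_counts_per_worker, dtype=np.int64), axis=0)
    return int(np.argmax(total)), total

# Three hypothetical workers searching a 3x3 board (9 possible moves).
workers = [
    np.array([0, 10, 5, 0, 40, 0, 3, 2, 0]),
    np.array([0, 12, 30, 0, 15, 0, 1, 2, 0]),
    np.array([0, 8, 6, 0, 42, 0, 2, 2, 0]),
]
move, total = vote_move(workers)
print(move, total[move])  # 4 97
```

Summing counts lets a worker that explored a move deeply outweigh workers that barely touched it; a one-vote-per-worker majority rule would be an equally plausible reading of "vote".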

Strength

- The current model is trained on an 11x11 board and uses 400 playouts per move at test time

- Play with this model, can always win regardless of black or white

- Against Gomocup AIs, it ranks around 20th-30th in some rough tests

- When I play white, I can't beat the AI. When I play black, I end up with a draw or a loss most of the time

References

- Mastering the game of Go without human knowledge

- A general reinforcement learning algorithm that masters chess, shogi, and Go through self-play

- Parallel Monte-Carlo Tree Search

Blog

Installation Dependencies

- Python3

- tensorflow>=1.8.0

- tensorlayer>=1.8.5

- mpi4py (parallel train and play)

- pygame (GUI)

How to Install

Install tensorflow, tensorlayer and pygame:

conda install tensorflow

conda install tensorlayer

conda install pygame

mpi4py install click here

mpi4py on windows click here

How to Run

- Play with AI

python human_play.py

- Play with parallel AI (-np sets the number of processes; watch out for OOM!)

mpiexec -np 3 python -u human_play_mpi.py

- Train from scratch

python train.py

- Train in parallel

mpiexec -np 43 python -u train_mpi.py

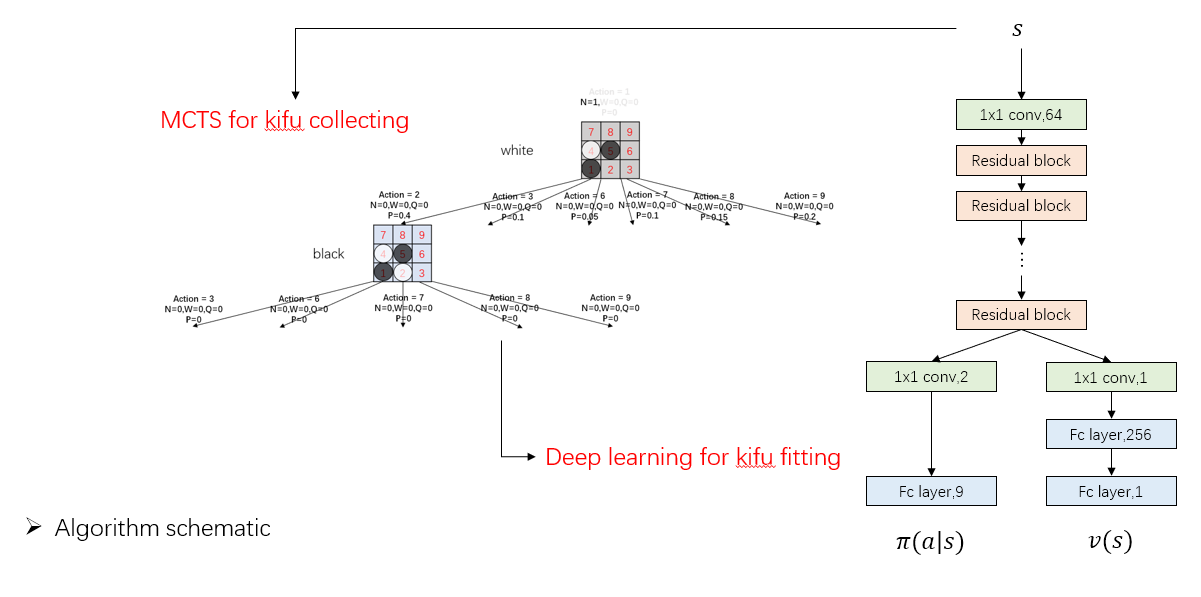

Algorithm

The algorithm is almost identical to AlphaGo Zero, except that APV-MCTS is not implemented.

Slides (PPT) can be found in the demo/slides directory

Details

Most settings are the same as in AlphaGo Zero; details are as follows:

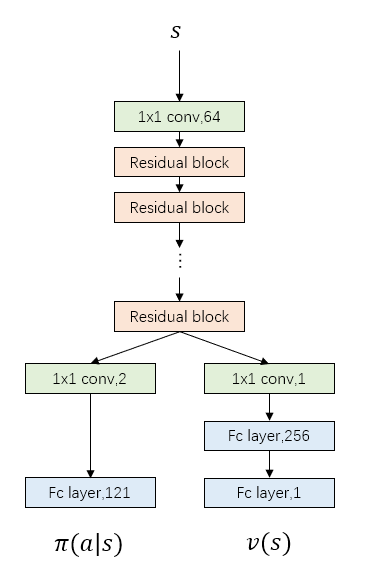

Network Structure

- The current model uses 19 residual blocks; more blocks mean more accurate predictions but slower speed

- The number of filters in each convolutional layer is shown in the accompanying picture

Feature Planes

- In the AlphaGo Zero paper there are 17 feature planes: 8 for the current player's stones, 8 for the opponent's stones, and a final plane representing the colour to play

- Here I use only 4 planes for each player; this can easily be changed in game_board.py
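To make the plane layout concrete, here is a minimal sketch of how such an input could be assembled from a move history. It assumes 4 history planes per player plus one colour plane (9 planes in total), following the paper's layout scaled down. The function `build_planes` and its arguments are hypothetical and are not taken from game_board.py.

```python
import numpy as np

def build_planes(history, size, n_history=4):
    """Build input planes from the point of view of the player to move.

    history: list of (row, col) moves, black first, colours alternating.
    Returns an array of shape (2 * n_history + 1, size, size):
      planes [0, n_history)             current player's stones, newest first
      planes [n_history, 2 * n_history) opponent's stones, newest first
      last plane                        all ones if black is to move, else zeros
    """
    planes = np.zeros((2 * n_history + 1, size, size), dtype=np.float32)
    to_move = len(history) % 2  # 0 = black to move, 1 = white to move
    for t in range(n_history):
        # Position as it was t plies ago: drop the last t moves.
        past = history[:max(0, len(history) - t)]
        for i, (r, c) in enumerate(past):
            owner = i % 2  # 0 = black stone, 1 = white stone
            if owner == to_move:
                planes[t, r, c] = 1.0
            else:
                planes[n_history + t, r, c] = 1.0
    if to_move == 0:
        planes[-1, :, :] = 1.0
    return planes

history = [(1, 1), (0, 0), (1, 2)]  # black, white, black; white to move
planes = build_planes(history, size=3)
print(planes.shape)  # (9, 3, 3)
```

Changing `n_history` to 8 would give the 17-plane layout described in the paper.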

Dirichlet Noise

- I add Dirichlet noise at every node, unlike the paper, which adds noise only at the root node. My guess is that AlphaGo Zero discards the whole tree after each move and builds a new one, whereas here I keep the nodes under the chosen action, so the two are slightly different

- The weights of the prior probabilities and the noise are unchanged here (0.75/0.25), though I think 0.8/0.2 or even 0.9/0.1 may be better because noise is added at every node
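The mixing of priors and noise described above can be sketched as follows. The defaults use the values quoted in this README (Dirichlet parameter 0.3, noise weight 0.25); the function name `mix_dirichlet` is hypothetical, and where exactly the repo applies this during tree expansion is not shown here.

```python
import numpy as np

def mix_dirichlet(priors, alpha=0.3, noise_weight=0.25, rng=None):
    """Return (1 - w) * priors + w * noise, with noise ~ Dirichlet(alpha).

    priors: prior probabilities over the legal moves of one node.
    """
    rng = np.random.default_rng() if rng is None else rng
    priors = np.asarray(priors, dtype=np.float64)
    noise = rng.dirichlet(alpha * np.ones(len(priors)))
    return (1.0 - noise_weight) * priors + noise_weight * noise

priors = np.array([0.7, 0.2, 0.1])
noisy = mix_dirichlet(priors, rng=np.random.default_rng(0))
print(noisy.sum())  # still a probability distribution (sums to 1 up to rounding)
```

Passing `noise_weight=0.2` or `0.1` gives the 0.8/0.2 and 0.9/0.1 splits suggested above.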

Parameters in Detail

I try to keep the original parameters from the AlphaGo Zero paper in order to test its generalization. I also take training time and computer configuration into consideration.

| Parameters Setting | Gomoku | AlphaGo Zero |
|---|---|---|
| MPI num | 43 | - |
| c_puct | 5 | 5 |
| n_playout | 400 | 1600 |
| blocks | 19 | 19/39 |
| buffer size | 500,000 (data) | 500,000 (games) |
| batch_size | 512 | 2048 |
| lr | 0.001 | annealed |
| optimizer | Adam | SGD with momentum |
| dirichlet noise | 0.3 | 0.03 |
| weight of noise | 0.25 | 0.25 |
| first n move | 12 | 30 |
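The `first n move` setting is the least self-explanatory of these parameters. In the AlphaGo Zero paper, the first 30 moves of each self-play game are sampled in proportion to the root visit counts (temperature 1), and later moves pick the most visited action. Assuming `first n move` plays the same role here with a value of 12, move selection could look like the sketch below; `select_move` is a hypothetical name, not the repo's API.

```python
import numpy as np

def select_move(visit_counts, move_number, first_n_moves=12, rng=None):
    """Pick a move index from root visit counts.

    For the first `first_n_moves` moves, sample proportionally to the visit
    counts (temperature 1) to diversify openings; afterwards play greedily.
    """
    rng = np.random.default_rng() if rng is None else rng
    counts = np.asarray(visit_counts, dtype=np.float64)
    if move_number < first_n_moves:
        probs = counts / counts.sum()
        return int(rng.choice(len(counts), p=probs))
    return int(np.argmax(counts))

late = select_move([1, 5, 2], move_number=20)   # greedy: index 1
early = select_move([0, 5, 0], move_number=0)   # only index 1 has visits
print(late, early)  # 1 1
```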

Training Details

- I trained the model for about 100,000 games, which took around 800 hours

- Computer configuration: 2 CPUs and 2 1080 Ti GPUs

- The computation gap with DeepMind is obvious; people with more resources can take on the future work

Some Tips

- Network

  - ZeroPadding with Input: Sometimes when playing against the AI, it is unaware of the danger at the edge of the board even when I have three or four in a row. Zero-padding the input data can mitigate the problem

  - Put the network on GPU: If the network is shallow, it doesn't matter whether the CPU or GPU is used; otherwise the GPU is faster during self-play

- Dirichlet Noise

  - Add Noise in Node: In junxiaosong/AlphaZero_Gomoku, noise is added outside the tree, somewhat like DQN's \(\epsilon\)-greedy approach. That works in my tests on 6x6 and 8x8 boards, but problems appear on 11x11. After long training on 11x11, the black player always plays the first stone in the centre, with policy probability equal to 1. This is a very rational move for black, but it means the white player never sees kifu (game records) in which the first stone is played anywhere else. So when I play black against the AI and open somewhere other than the centre, the AI plays very poorly because it has never seen such positions. Adding noise at the nodes mitigates the problem

  - Smaller Weight with Noise: As I said before, I think 0.8/0.2 or even 0.9/0.1 may be a better split between the weights of the prior probabilities and the noise, because noise is added at every node

- Randomness

  - Dihedral Reflection or Rotation: When using the network to output probabilities and a value, it is better to do as the paper says: the leaf node \(s_L\) is added to a queue for neural network evaluation, \((d_i(p),v)=f_{\theta}(d_i(s_L))\), where \(d_i\) is a dihedral reflection or rotation selected uniformly at random from \(i \in [1..8]\)

  - Add Randomness when Testing: I also apply the dihedral reflection or rotation when playing against the AI, so that it does not play the same game every time

- Tradeoffs

  - Network Depth: If the network is too shallow, the loss will be higher. If it is too deep, training and testing are slow. (My network is still a little slow to play against; I think 9 blocks may be enough)

  - Buffer Size: If the buffer is small, the network fits it easily, but performance cannot be guaranteed when learning from so little data. If it is too large, much longer training time and a deeper network are needed

  - Playout Number: With few playouts, a self-play game finishes quickly but the quality of the kifu cannot be guaranteed. With more playouts, the kifu are better but take longer to generate
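The random dihedral transform from the Randomness tip can be sketched with NumPy as below. The eight transforms are the four rotations, each optionally followed by a left-right flip. The network is stood in for by any callable `net(planes)` returning a board-shaped policy and a scalar value; that interface, and the function names, are assumptions made for illustration only.

```python
import numpy as np

def dihedral(x, i):
    """Apply the i-th (0..7) dihedral transform to the last two axes of x."""
    x = np.rot90(x, k=i % 4, axes=(-2, -1))
    return np.flip(x, axis=-1) if i >= 4 else x

def inverse_dihedral(x, i):
    """Undo dihedral(x, i): unflip first, then rotate back."""
    x = np.flip(x, axis=-1) if i >= 4 else x
    return np.rot90(x, k=-(i % 4), axes=(-2, -1))

def evaluate_with_random_symmetry(net, planes, rng=None):
    """Evaluate net on a randomly transformed state and map the policy back."""
    rng = np.random.default_rng() if rng is None else rng
    i = int(rng.integers(8))
    policy, value = net(dihedral(planes, i))
    return inverse_dihedral(policy, i), value

# Dummy stand-in network: "policy" is just the first input plane.
dummy_net = lambda p: (p[0], 0.5)
planes = np.arange(18, dtype=np.float32).reshape(2, 3, 3)
policy, value = evaluate_with_random_symmetry(dummy_net, planes)
print(np.array_equal(policy, planes[0]))  # True
```

Because the policy is mapped back with the inverse transform, the caller always receives move probabilities in the original board orientation, whichever symmetry was drawn.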

Future Work to Try

- Continue to train (a larger board) and increase the playout number

- Try some other parameters for better performance

- Alter network structure

- Alter feature planes

- Implement APV-MCTS

- Train under standard/renju rules
