Error: The specified datastore driver ("com.mysql.jdbc.Driver") was not found in the CLASSPATH. Please check your CLASSPATH specification, and the name of the driver.

Background:

After integrating the Hive component into CDH, the Hive Metastore fails on startup with the error below.

Symptom:

[main]: Metastore Thrift Server threw an exception...

javax.jdo.JDOFatalInternalException: Error creating transactional connection factory

at org.datanucleus.api.jdo.NucleusJDOHelper.getJDOExceptionForNucleusException(NucleusJDOHelper.java:)

at org.datanucleus.api.jdo.JDOPersistenceManagerFactory.freezeConfiguration(JDOPersistenceManagerFactory.java:)

at org.datanucleus.api.jdo.JDOPersistenceManagerFactory.createPersistenceManagerFactory(JDOPersistenceManagerFactory.java:)

at org.datanucleus.api.jdo.JDOPersistenceManagerFactory.getPersistenceManagerFactory(JDOPersistenceManagerFactory.java:)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:)

at java.lang.reflect.Method.invoke(Method.java:)

at javax.jdo.JDOHelper$.run(JDOHelper.java:)

at java.security.AccessController.doPrivileged(Native Method)

at javax.jdo.JDOHelper.invoke(JDOHelper.java:)

at javax.jdo.JDOHelper.invokeGetPersistenceManagerFactoryOnImplementation(JDOHelper.java:)

at javax.jdo.JDOHelper.getPersistenceManagerFactory(JDOHelper.java:)

at javax.jdo.JDOHelper.getPersistenceManagerFactory(JDOHelper.java:)

at org.apache.hadoop.hive.metastore.ObjectStore.getPMF(ObjectStore.java:)

at org.apache.hadoop.hive.metastore.ObjectStore.getPersistenceManager(ObjectStore.java:)

at org.apache.hadoop.hive.metastore.ObjectStore.initialize(ObjectStore.java:)

at org.apache.hadoop.hive.metastore.ObjectStore.setConf(ObjectStore.java:)

at org.apache.hadoop.util.ReflectionUtils.setConf(ReflectionUtils.java:)

at org.apache.hadoop.util.ReflectionUtils.newInstance(ReflectionUtils.java:)

at org.apache.hadoop.hive.metastore.RawStoreProxy.<init>(RawStoreProxy.java:)

at org.apache.hadoop.hive.metastore.RawStoreProxy.getProxy(RawStoreProxy.java:)

at org.apache.hadoop.hive.metastore.HiveMetaStore$HMSHandler.newRawStore(HiveMetaStore.java:)

at org.apache.hadoop.hive.metastore.HiveMetaStore$HMSHandler.getMS(HiveMetaStore.java:)

at org.apache.hadoop.hive.metastore.HiveMetaStore$HMSHandler.createDefaultDB(HiveMetaStore.java:)

at org.apache.hadoop.hive.metastore.HiveMetaStore$HMSHandler.init(HiveMetaStore.java:)

at org.apache.hadoop.hive.metastore.RetryingHMSHandler.<init>(RetryingHMSHandler.java:)

at org.apache.hadoop.hive.metastore.RetryingHMSHandler.getProxy(RetryingHMSHandler.java:)

at org.apache.hadoop.hive.metastore.HiveMetaStore.newRetryingHMSHandler(HiveMetaStore.java:)

at org.apache.hadoop.hive.metastore.HiveMetaStore.newRetryingHMSHandler(HiveMetaStore.java:)

at org.apache.hadoop.hive.metastore.HiveMetaStore.startMetaStore(HiveMetaStore.java:)

at org.apache.hadoop.hive.metastore.HiveMetaStore.main(HiveMetaStore.java:)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:)

at java.lang.reflect.Method.invoke(Method.java:)

at org.apache.hadoop.util.RunJar.run(RunJar.java:)

at org.apache.hadoop.util.RunJar.main(RunJar.java:)

NestedThrowablesStackTrace:

java.lang.reflect.InvocationTargetException

at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)

at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:)

at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:)

at java.lang.reflect.Constructor.newInstance(Constructor.java:)

at org.datanucleus.plugin.NonManagedPluginRegistry.createExecutableExtension(NonManagedPluginRegistry.java:)

at org.datanucleus.plugin.PluginManager.createExecutableExtension(PluginManager.java:)

at org.datanucleus.store.AbstractStoreManager.registerConnectionFactory(AbstractStoreManager.java:)

at org.datanucleus.store.AbstractStoreManager.<init>(AbstractStoreManager.java:)

at org.datanucleus.store.rdbms.RDBMSStoreManager.<init>(RDBMSStoreManager.java:)

at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)

at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:)

at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:)

at java.lang.reflect.Constructor.newInstance(Constructor.java:)

at org.datanucleus.plugin.NonManagedPluginRegistry.createExecutableExtension(NonManagedPluginRegistry.java:)

at org.datanucleus.plugin.PluginManager.createExecutableExtension(PluginManager.java:)

at org.datanucleus.NucleusContext.createStoreManagerForProperties(NucleusContext.java:)

at org.datanucleus.NucleusContext.initialise(NucleusContext.java:)

at org.datanucleus.api.jdo.JDOPersistenceManagerFactory.freezeConfiguration(JDOPersistenceManagerFactory.java:)

at org.datanucleus.api.jdo.JDOPersistenceManagerFactory.createPersistenceManagerFactory(JDOPersistenceManagerFactory.java:)

at org.datanucleus.api.jdo.JDOPersistenceManagerFactory.getPersistenceManagerFactory(JDOPersistenceManagerFactory.java:)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:)

at java.lang.reflect.Method.invoke(Method.java:)

at javax.jdo.JDOHelper$.run(JDOHelper.java:)

at java.security.AccessController.doPrivileged(Native Method)

at javax.jdo.JDOHelper.invoke(JDOHelper.java:)

at javax.jdo.JDOHelper.invokeGetPersistenceManagerFactoryOnImplementation(JDOHelper.java:)

at javax.jdo.JDOHelper.getPersistenceManagerFactory(JDOHelper.java:)

at javax.jdo.JDOHelper.getPersistenceManagerFactory(JDOHelper.java:)

at org.apache.hadoop.hive.metastore.ObjectStore.getPMF(ObjectStore.java:)

at org.apache.hadoop.hive.metastore.ObjectStore.getPersistenceManager(ObjectStore.java:)

at org.apache.hadoop.hive.metastore.ObjectStore.initialize(ObjectStore.java:)

at org.apache.hadoop.hive.metastore.ObjectStore.setConf(ObjectStore.java:)

at org.apache.hadoop.util.ReflectionUtils.setConf(ReflectionUtils.java:)

at org.apache.hadoop.util.ReflectionUtils.newInstance(ReflectionUtils.java:)

at org.apache.hadoop.hive.metastore.RawStoreProxy.<init>(RawStoreProxy.java:)

at org.apache.hadoop.hive.metastore.RawStoreProxy.getProxy(RawStoreProxy.java:)

at org.apache.hadoop.hive.metastore.HiveMetaStore$HMSHandler.newRawStore(HiveMetaStore.java:)

at org.apache.hadoop.hive.metastore.HiveMetaStore$HMSHandler.getMS(HiveMetaStore.java:)

at org.apache.hadoop.hive.metastore.HiveMetaStore$HMSHandler.createDefaultDB(HiveMetaStore.java:)

at org.apache.hadoop.hive.metastore.HiveMetaStore$HMSHandler.init(HiveMetaStore.java:)

at org.apache.hadoop.hive.metastore.RetryingHMSHandler.<init>(RetryingHMSHandler.java:)

at org.apache.hadoop.hive.metastore.RetryingHMSHandler.getProxy(RetryingHMSHandler.java:)

at org.apache.hadoop.hive.metastore.HiveMetaStore.newRetryingHMSHandler(HiveMetaStore.java:)

at org.apache.hadoop.hive.metastore.HiveMetaStore.newRetryingHMSHandler(HiveMetaStore.java:)

at org.apache.hadoop.hive.metastore.HiveMetaStore.startMetaStore(HiveMetaStore.java:)

at org.apache.hadoop.hive.metastore.HiveMetaStore.main(HiveMetaStore.java:)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:)

at java.lang.reflect.Method.invoke(Method.java:)

at org.apache.hadoop.util.RunJar.run(RunJar.java:)

at org.apache.hadoop.util.RunJar.main(RunJar.java:)

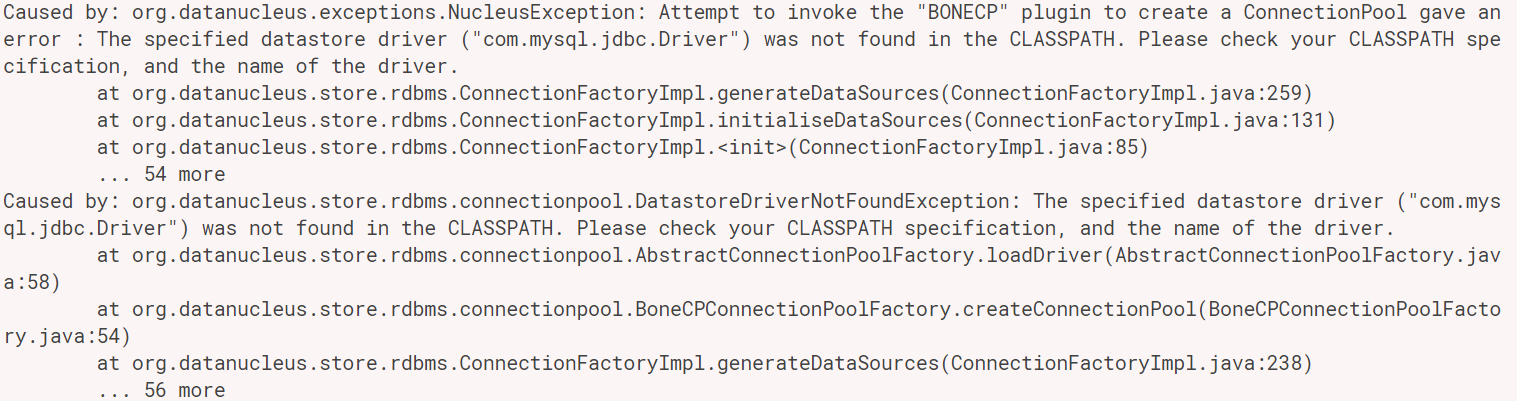

Caused by: org.datanucleus.exceptions.NucleusException: Attempt to invoke the "BONECP" plugin to create a ConnectionPool gave an error : The specified datastore driver ("com.mysql.jdbc.Driver") was not found in the CLASSPATH. Please check your CLASSPATH specification, and the name of the driver.

at org.datanucleus.store.rdbms.ConnectionFactoryImpl.generateDataSources(ConnectionFactoryImpl.java:)

at org.datanucleus.store.rdbms.ConnectionFactoryImpl.initialiseDataSources(ConnectionFactoryImpl.java:)

at org.datanucleus.store.rdbms.ConnectionFactoryImpl.<init>(ConnectionFactoryImpl.java:)

... more

Caused by: org.datanucleus.store.rdbms.connectionpool.DatastoreDriverNotFoundException: The specified datastore driver ("com.mysql.jdbc.Driver") was not found in the CLASSPATH. Please check your CLASSPATH specification, and the name of the driver.

at org.datanucleus.store.rdbms.connectionpool.AbstractConnectionPoolFactory.loadDriver(AbstractConnectionPoolFactory.java:)

at org.datanucleus.store.rdbms.connectionpool.BoneCPConnectionPoolFactory.createConnectionPool(BoneCPConnectionPoolFactory.java:)

at org.datanucleus.store.rdbms.ConnectionFactoryImpl.generateDataSources(ConnectionFactoryImpl.java:)

... more

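Cause:

driver ("com.mysql.jdbc.Driver") was not found in the CLASSPATH

The problem is the driver: the MySQL JDBC driver jar is not on the Hive Metastore's classpath, so the class com.mysql.jdbc.Driver cannot be loaded when the connection pool is created.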
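To confirm this diagnosis before changing anything, one can check whether a MySQL connector jar is present in Hive's lib directory. The following is a minimal sketch: the function name `find_mysql_driver_jars` is hypothetical, and it assumes the jar keeps the conventional `mysql-connector-java-<version>.jar` file name (it matches by name only and does not inspect the jar contents).

```shell
#!/usr/bin/env bash
# Hypothetical helper: list MySQL connector jars directly inside a directory.
# Assumption: the jar uses the conventional mysql-connector-java*.jar name.
find_mysql_driver_jars() {
  local lib_dir="$1"
  find "$lib_dir" -maxdepth 1 -type f -name 'mysql-connector-java*.jar' 2>/dev/null | sort
}

# Hive lib directory used in this post; adjust for other parcel versions.
HIVE_LIB=/opt/cloudera/parcels/CDH-5.15.1-1.cdh5.15.1.p0.4/lib/hive/lib
if [ -z "$(find_mysql_driver_jars "$HIVE_LIB")" ]; then
  echo "no MySQL JDBC driver jar found in $HIVE_LIB"
fi
```

If the check prints the message, the jar is missing from that directory, which is consistent with the DatastoreDriverNotFoundException in the trace.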

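Solution:

Copy the JDBC driver jar into the /opt/cloudera/parcels/CDH-5.15.1-1.cdh5.15.1.p0.4/lib/hive/lib directory (the command below is run from inside that directory), then start the Hive Metastore again: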

cp /bigdata/sources/mysql-connector-java-5.1.47.jar ./
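The `cp` command above only works if the current directory is Hive's lib directory. A slightly more defensive variant with explicit paths might look like the sketch below. The function name `install_jdbc_driver` is hypothetical; the paths in the commented example are the ones from this post and would need adjusting for another parcel version or driver location.

```shell
#!/usr/bin/env bash
# Hypothetical helper: copy a JDBC driver jar into a target lib directory
# and list the copied file so the result can be checked by eye.
install_jdbc_driver() {
  local src_jar="$1" dest_dir="$2"
  [ -f "$src_jar" ] || { echo "source jar not found: $src_jar" >&2; return 1; }
  [ -d "$dest_dir" ] || { echo "target directory not found: $dest_dir" >&2; return 1; }
  cp "$src_jar" "$dest_dir/" || return 1
  ls -l "$dest_dir/$(basename "$src_jar")"
}

# Example with the paths from this post (uncomment to run on the Hive host):
# install_jdbc_driver /bigdata/sources/mysql-connector-java-5.1.47.jar \
#   /opt/cloudera/parcels/CDH-5.15.1-1.cdh5.15.1.p0.4/lib/hive/lib
```

Passing both paths explicitly avoids copying the jar into the wrong place when the shell happens to be in a different directory.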
