HBase编程 API入门系列之put(客户端而言)(1)

心得,写在前面的话,也许,中间会要多次执行,连接超时,多试试就好了。

[hadoop@HadoopSlave1 conf]$ cat regionservers

HadoopMaster

HadoopSlave1

HadoopSlave2

<configuration>

<property>

<name>hbase.zookeeper.quorum</name>

<value>HadoopMaster,HadoopSlave1,HadoopSlave2</value>

</property>

<property>

<name>hbase.rootdir</name>

<value>hdfs://HadoopMaster:9000/hbase</value>

</property>

<property>

<name>hbase.cluster.distributed</name>

<value>true</value>

</property>

<property>

<name>hbase.tmp.dir</name>

<value>/home/hadoop/data/hbase-1.2.3/tmp</value>

</property>

<property>

<name>zookeeper.znode.parent</name>

<value>/hbase</value>

</property>

</configuration>

export JAVA_HOME=/home/hadoop/app/jdk1.7.0_79

export HBASE_MANAGES_ZK=false (外装的Zookeeper集群)

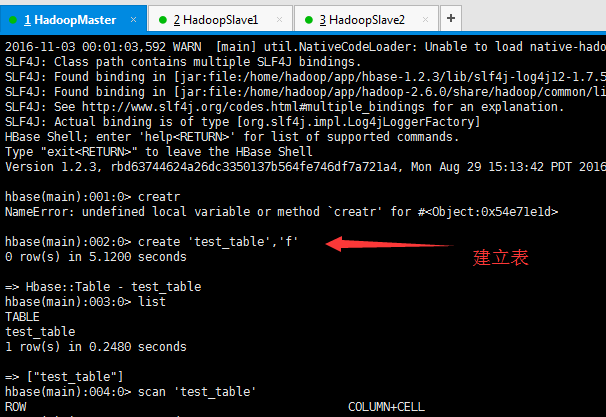

hbase(main):002:0> create 'test_table','f'

语法: create table name , column family

test_table 是HBase的数据表名,f是列簇

为了后续的编程,我这里直接先手动创建最简单的hbase数据表。在代码里可以修改。

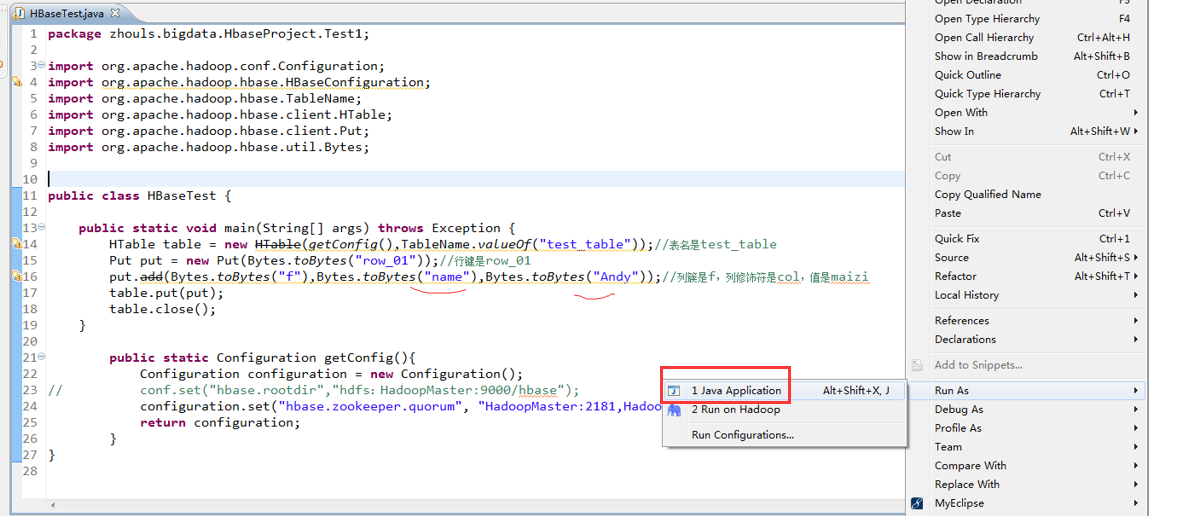

package zhouls.bigdata.HbaseProject.Test1; import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.hbase.HBaseConfiguration;

import org.apache.hadoop.hbase.TableName;

import org.apache.hadoop.hbase.client.HTable;

import org.apache.hadoop.hbase.client.Put;

import org.apache.hadoop.hbase.util.Bytes; public class HBaseTest {

public static void main(String[] args) throws Exception {

HTable table = new HTable(getConfig(),TableName.valueOf("test_table"));//表名是test_table

Put put = new Put(Bytes.toBytes("row_01"));//行键是row_01

put.add(Bytes.toBytes("f"),Bytes.toBytes("col"),Bytes.toBytes("maizi"));//列簇是f,列修饰符是col,值是maizi

table.put(put);

table.close();

} public static Configuration getConfig(){

Configuration configuration = new Configuration();

// conf.set("hbase.rootdir","hdfs:HadoopMaster:9000/hbase");

configuration.set("hbase.zookeeper.quorum", "HadoopMaster:2181,HadoopSlave1:2181,HadoopSlave2:2181");

return configuration;

}

}

2016-12-10 11:05:45,077 INFO [org.apache.hadoop.hbase.zookeeper.RecoverableZooKeeper] - Process identifier=hconnection-0x5fc2fc59 connecting to ZooKeeper ensemble=HadoopMaster:2181,HadoopSlave1:2181,HadoopSlave2:2181

2016-12-10 11:05:45,115 INFO [org.apache.zookeeper.ZooKeeper] - Client environment:zookeeper.version=3.4.6-1569965, built on 02/20/2014 09:09 GMT

2016-12-10 11:05:45,115 INFO [org.apache.zookeeper.ZooKeeper] - Client environment:host.name=WIN-BQOBV63OBNM

2016-12-10 11:05:45,115 INFO [org.apache.zookeeper.ZooKeeper] - Client environment:java.version=1.7.0_51

2016-12-10 11:05:45,115 INFO [org.apache.zookeeper.ZooKeeper] - Client environment:java.vendor=Oracle Corporation

2016-12-10 11:05:45,116 INFO [org.apache.zookeeper.ZooKeeper] - Client environment:java.home=C:\Program Files\Java\jdk1.7.0_51\jre

2016-12-10 11:05:45,116 INFO [org.apache.zookeeper.ZooKeeper] - Client environment:java.class.path=D:\Code\MyEclipseJavaCode\HbaseProject\bin;D:\SoftWare\hbase-1.2.3\lib\activation-1.1.jar;D:\SoftWare\hbase-1.2.3\lib\aopalliance-1.0.jar;D:\SoftWare\hbase-1.2.3\lib\apacheds-i18n-2.0.0-M15.jar;D:\SoftWare\hbase-1.2.3\lib\apacheds-kerberos-codec-2.0.0-M15.jar;D:\SoftWare\hbase-1.2.3\lib\api-asn1-api-1.0.0-M20.jar;D:\SoftWare\hbase-1.2.3\lib\api-util-1.0.0-M20.jar;D:\SoftWare\hbase-1.2.3\lib\asm-3.1.jar;D:\SoftWare\hbase-1.2.3\lib\avro-1.7.4.jar;D:\SoftWare\hbase-1.2.3\lib\commons-beanutils-1.7.0.jar;D:\SoftWare\hbase-1.2.3\lib\commons-beanutils-core-1.8.0.jar;D:\SoftWare\hbase-1.2.3\lib\commons-cli-1.2.jar;D:\SoftWare\hbase-1.2.3\lib\commons-codec-1.9.jar;D:\SoftWare\hbase-1.2.3\lib\commons-collections-3.2.2.jar;D:\SoftWare\hbase-1.2.3\lib\commons-compress-1.4.1.jar;D:\SoftWare\hbase-1.2.3\lib\commons-configuration-1.6.jar;D:\SoftWare\hbase-1.2.3\lib\commons-daemon-1.0.13.jar;D:\SoftWare\hbase-1.2.3\lib\commons-digester-1.8.jar;D:\SoftWare\hbase-1.2.3\lib\commons-el-1.0.jar;D:\SoftWare\hbase-1.2.3\lib\commons-httpclient-3.1.jar;D:\SoftWare\hbase-1.2.3\lib\commons-io-2.4.jar;D:\SoftWare\hbase-1.2.3\lib\commons-lang-2.6.jar;D:\SoftWare\hbase-1.2.3\lib\commons-logging-1.2.jar;D:\SoftWare\hbase-1.2.3\lib\commons-math-2.2.jar;D:\SoftWare\hbase-1.2.3\lib\commons-math3-3.1.1.jar;D:\SoftWare\hbase-1.2.3\lib\commons-net-3.1.jar;D:\SoftWare\hbase-1.2.3\lib\disruptor-3.3.0.jar;D:\SoftWare\hbase-1.2.3\lib\findbugs-annotations-1.3.9-1.jar;D:\SoftWare\hbase-1.2.3\lib\guava-12.0.1.jar;D:\SoftWare\hbase-1.2.3\lib\guice-3.0.jar;D:\SoftWare\hbase-1.2.3\lib\guice-servlet-3.0.jar;D:\SoftWare\hbase-1.2.3\lib\hadoop-annotations-2.5.1.jar;D:\SoftWare\hbase-1.2.3\lib\hadoop-auth-2.5.1.jar;D:\SoftWare\hbase-1.2.3\lib\hadoop-client-2.5.1.jar;D:\SoftWare\hbase-1.2.3\lib\hadoop-common-2.5.1.jar;D:\SoftWare\hbase-1.2.3\lib\hadoop-hdfs-2.5.1.jar;D:\SoftWare\hbase-1.2.3\lib\hadoop-mapreduce-client-app-2.5.1.jar;D:\SoftWare\hbase-1.2.3\lib\hadoop-mapreduce-client-common-2.5.1.jar;D:\SoftWare\hbase-1.2.3\lib\hadoop-mapreduce-client-core-2.5.1.jar;D:\SoftWare\hbase-1.2.3\lib\hadoop-mapreduce-client-jobclient-2.5.1.jar;D:\SoftWare\hbase-1.2.3\lib\hadoop-mapreduce-client-shuffle-2.5.1.jar;D:\SoftWare\hbase-1.2.3\lib\hadoop-yarn-api-2.5.1.jar;D:\SoftWare\hbase-1.2.3\lib\hadoop-yarn-client-2.5.1.jar;D:\SoftWare\hbase-1.2.3\lib\hadoop-yarn-common-2.5.1.jar;D:\SoftWare\hbase-1.2.3\lib\hadoop-yarn-server-common-2.5.1.jar;D:\SoftWare\hbase-1.2.3\lib\hbase-annotations-1.2.3.jar;D:\SoftWare\hbase-1.2.3\lib\hbase-annotations-1.2.3-tests.jar;D:\SoftWare\hbase-1.2.3\lib\hbase-client-1.2.3.jar;D:\SoftWare\hbase-1.2.3\lib\hbase-common-1.2.3.jar;D:\SoftWare\hbase-1.2.3\lib\hbase-common-1.2.3-tests.jar;D:\SoftWare\hbase-1.2.3\lib\hbase-examples-1.2.3.jar;D:\SoftWare\hbase-1.2.3\lib\hbase-external-blockcache-1.2.3.jar;D:\SoftWare\hbase-1.2.3\lib\hbase-hadoop2-compat-1.2.3.jar;D:\SoftWare\hbase-1.2.3\lib\hbase-hadoop-compat-1.2.3.jar;D:\SoftWare\hbase-1.2.3\lib\hbase-it-1.2.3.jar;D:\SoftWare\hbase-1.2.3\lib\hbase-it-1.2.3-tests.jar;D:\SoftWare\hbase-1.2.3\lib\hbase-prefix-tree-1.2.3.jar;D:\SoftWare\hbase-1.2.3\lib\hbase-procedure-1.2.3.jar;D:\SoftWare\hbase-1.2.3\lib\hbase-protocol-1.2.3.jar;D:\SoftWare\hbase-1.2.3\lib\hbase-resource-bundle-1.2.3.jar;D:\SoftWare\hbase-1.2.3\lib\hbase-rest-1.2.3.jar;D:\SoftWare\hbase-1.2.3\lib\hbase-server-1.2.3.jar;D:\SoftWare\hbase-1.2.3\lib\hbase-server-1.2.3-tests.jar;D:\SoftWare\hbase-1.2.3\lib\hbase-shell-1.2.3.jar;D:\SoftWare\hbase-1.2.3\lib\hbase-thrift-1.2.3.jar;D:\SoftWare\hbase-1.2.3\lib\htrace-core-3.1.0-incubating.jar;D:\SoftWare\hbase-1.2.3\lib\httpclient-4.2.5.jar;D:\SoftWare\hbase-1.2.3\lib\httpcore-4.4.1.jar;D:\SoftWare\hbase-1.2.3\lib\jackson-core-asl-1.9.13.jar;D:\SoftWare\hbase-1.2.3\lib\jackson-jaxrs-1.9.13.jar;D:\SoftWare\hbase-1.2.3\lib\jackson-mapper-asl-1.9.13.jar;D:\SoftWare\hbase-1.2.3\lib\jackson-xc-1.9.13.jar;D:\SoftWare\hbase-1.2.3\lib\jamon-runtime-2.4.1.jar;D:\SoftWare\hbase-1.2.3\lib\jasper-compiler-5.5.23.jar;D:\SoftWare\hbase-1.2.3\lib\jasper-runtime-5.5.23.jar;D:\SoftWare\hbase-1.2.3\lib\javax.inject-1.jar;D:\SoftWare\hbase-1.2.3\lib\java-xmlbuilder-0.4.jar;D:\SoftWare\hbase-1.2.3\lib\jaxb-api-2.2.2.jar;D:\SoftWare\hbase-1.2.3\lib\jaxb-impl-2.2.3-1.jar;D:\SoftWare\hbase-1.2.3\lib\jcodings-1.0.8.jar;D:\SoftWare\hbase-1.2.3\lib\jersey-client-1.9.jar;D:\SoftWare\hbase-1.2.3\lib\jersey-core-1.9.jar;D:\SoftWare\hbase-1.2.3\lib\jersey-guice-1.9.jar;D:\SoftWare\hbase-1.2.3\lib\jersey-json-1.9.jar;D:\SoftWare\hbase-1.2.3\lib\jersey-server-1.9.jar;D:\SoftWare\hbase-1.2.3\lib\jets3t-0.9.0.jar;D:\SoftWare\hbase-1.2.3\lib\jettison-1.3.3.jar;D:\SoftWare\hbase-1.2.3\lib\jetty-6.1.26.jar;D:\SoftWare\hbase-1.2.3\lib\jetty-sslengine-6.1.26.jar;D:\SoftWare\hbase-1.2.3\lib\jetty-util-6.1.26.jar;D:\SoftWare\hbase-1.2.3\lib\joni-2.1.2.jar;D:\SoftWare\hbase-1.2.3\lib\jruby-complete-1.6.8.jar;D:\SoftWare\hbase-1.2.3\lib\jsch-0.1.42.jar;D:\SoftWare\hbase-1.2.3\lib\jsp-2.1-6.1.14.jar;D:\SoftWare\hbase-1.2.3\lib\jsp-api-2.1-6.1.14.jar;D:\SoftWare\hbase-1.2.3\lib\junit-4.12.jar;D:\SoftWare\hbase-1.2.3\lib\leveldbjni-all-1.8.jar;D:\SoftWare\hbase-1.2.3\lib\libthrift-0.9.3.jar;D:\SoftWare\hbase-1.2.3\lib\log4j-1.2.17.jar;D:\SoftWare\hbase-1.2.3\lib\metrics-core-2.2.0.jar;D:\SoftWare\hbase-1.2.3\lib\netty-all-4.0.23.Final.jar;D:\SoftWare\hbase-1.2.3\lib\paranamer-2.3.jar;D:\SoftWare\hbase-1.2.3\lib\protobuf-java-2.5.0.jar;D:\SoftWare\hbase-1.2.3\lib\servlet-api-2.5.jar;D:\SoftWare\hbase-1.2.3\lib\servlet-api-2.5-6.1.14.jar;D:\SoftWare\hbase-1.2.3\lib\slf4j-api-1.7.7.jar;D:\SoftWare\hbase-1.2.3\lib\slf4j-log4j12-1.7.5.jar;D:\SoftWare\hbase-1.2.3\lib\snappy-java-1.0.4.1.jar;D:\SoftWare\hbase-1.2.3\lib\spymemcached-2.11.6.jar;D:\SoftWare\hbase-1.2.3\lib\xmlenc-0.52.jar;D:\SoftWare\hbase-1.2.3\lib\xz-1.0.jar;D:\SoftWare\hbase-1.2.3\lib\zookeeper-3.4.6.jar

2016-12-10 11:05:45,118 INFO [org.apache.zookeeper.ZooKeeper] - Client environment:java.library.path=C:\Program Files\Java\jdk1.7.0_51\bin;C:\Windows\Sun\Java\bin;C:\Windows\system32;C:\Windows;C:\ProgramData\Oracle\Java\javapath;C:\Python27\;C:\Python27\Scripts;C:\Windows\system32;C:\Windows;C:\Windows\System32\Wbem;C:\Windows\System32\WindowsPowerShell\v1.0\;D:\SoftWare\MATLAB R2013a\runtime\win64;D:\SoftWare\MATLAB R2013a\bin;C:\Program Files (x86)\IDM Computer Solutions\UltraCompare;C:\Program Files\Java\jdk1.7.0_51\bin;C:\Program Files\Java\jdk1.7.0_51\jre\bin;D:\SoftWare\apache-ant-1.9.0\bin;HADOOP_HOME\bin;D:\SoftWare\apache-maven-3.3.9\bin;D:\SoftWare\Scala\bin;D:\SoftWare\Scala\jre\bin;%MYSQL_HOME\bin;D:\SoftWare\MySQL Server\MySQL Server 5.0\bin;D:\SoftWare\apache-tomcat-7.0.69\bin;%C:\Windows\System32;%C:\Windows\SysWOW64;D:\SoftWare\SSH Secure Shell;.

2016-12-10 11:05:45,119 INFO [org.apache.zookeeper.ZooKeeper] - Client environment:java.io.tmpdir=C:\Users\ADMINI~1\AppData\Local\Temp\

2016-12-10 11:05:45,120 INFO [org.apache.zookeeper.ZooKeeper] - Client environment:java.compiler=<NA>

2016-12-10 11:05:45,120 INFO [org.apache.zookeeper.ZooKeeper] - Client environment:os.name=Windows 7

2016-12-10 11:05:45,121 INFO [org.apache.zookeeper.ZooKeeper] - Client environment:os.arch=amd64

2016-12-10 11:05:45,121 INFO [org.apache.zookeeper.ZooKeeper] - Client environment:os.version=6.1

2016-12-10 11:05:45,131 INFO [org.apache.zookeeper.ZooKeeper] - Client environment:user.name=Administrator

2016-12-10 11:05:45,131 INFO [org.apache.zookeeper.ZooKeeper] - Client environment:user.home=C:\Users\Administrator

2016-12-10 11:05:45,132 INFO [org.apache.zookeeper.ZooKeeper] - Client environment:user.dir=D:\Code\MyEclipseJavaCode\HbaseProject

2016-12-10 11:05:45,136 INFO [org.apache.zookeeper.ZooKeeper] - Initiating client connection, connectString=HadoopMaster:2181,HadoopSlave1:2181,HadoopSlave2:2181 sessionTimeout=180000 watcher=hconnection-0x5fc2fc590x0, quorum=HadoopMaster:2181,HadoopSlave1:2181,HadoopSlave2:2181, baseZNode=/hbase

2016-12-10 11:05:45,329 INFO [org.apache.zookeeper.ClientCnxn] - Opening socket connection to server HadoopMaster/192.168.80.10:2181. Will not attempt to authenticate using SASL (unknown error)

2016-12-10 11:05:45,365 INFO [org.apache.zookeeper.ClientCnxn] - Socket connection established to HadoopMaster/192.168.80.10:2181, initiating session

2016-12-10 11:05:45,421 INFO [org.apache.zookeeper.ClientCnxn] - Session establishment complete on server HadoopMaster/192.168.80.10:2181, sessionid = 0x1582587a9550008, negotiated timeout = 40000

2016-12-10 11:05:47,266 INFO [org.apache.hadoop.hbase.client.ConnectionManager$HConnectionImplementation] - Closing zookeeper sessionid=0x1582587a9550008

2016-12-10 11:05:47,275 INFO [org.apache.zookeeper.ZooKeeper] - Session: 0x1582587a9550008 closed

2016-12-10 11:05:47,275 INFO [org.apache.zookeeper.ClientCnxn] - EventThread shut down

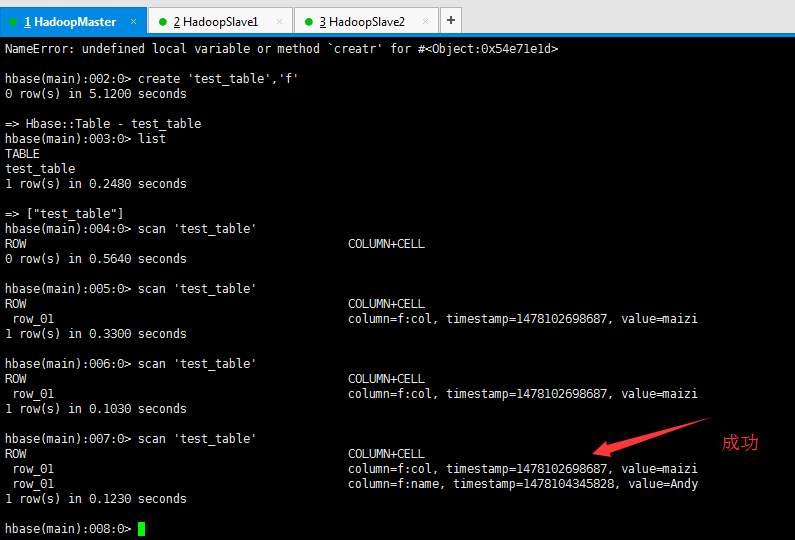

scan 'test_table'

全盘扫描该Hbase数据表test_table

package zhouls.bigdata.HbaseProject.Test1; import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.hbase.HBaseConfiguration;

import org.apache.hadoop.hbase.TableName;

import org.apache.hadoop.hbase.client.HTable;

import org.apache.hadoop.hbase.client.Put;

import org.apache.hadoop.hbase.util.Bytes; public class HBaseTest {

public static void main(String[] args) throws Exception {

HTable table = new HTable(getConfig(),TableName.valueOf("test_table"));//表名是test_table

Put put = new Put(Bytes.toBytes("row_01"));//行键是row_01

put.add(Bytes.toBytes("f"),Bytes.toBytes("name"),Bytes.toBytes("Andy"));//列簇是f,列修饰符是col,值是maizi

table.put(put);

table.close();

} public static Configuration getConfig(){

Configuration configuration = new Configuration();

// conf.set("hbase.rootdir","hdfs:HadoopMaster:9000/hbase");

configuration.set("hbase.zookeeper.quorum", "HadoopMaster:2181,HadoopSlave1:2181,HadoopSlave2:2181");

return configuration;

}

}

scan 'test_table'

全盘扫描该Hbase数据表test_table

package zhouls.bigdata.HbaseProject.Test1; import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.hbase.HBaseConfiguration;

import org.apache.hadoop.hbase.TableName;

import org.apache.hadoop.hbase.client.HTable;

import org.apache.hadoop.hbase.client.Put;

import org.apache.hadoop.hbase.util.Bytes; public class HBaseTest {

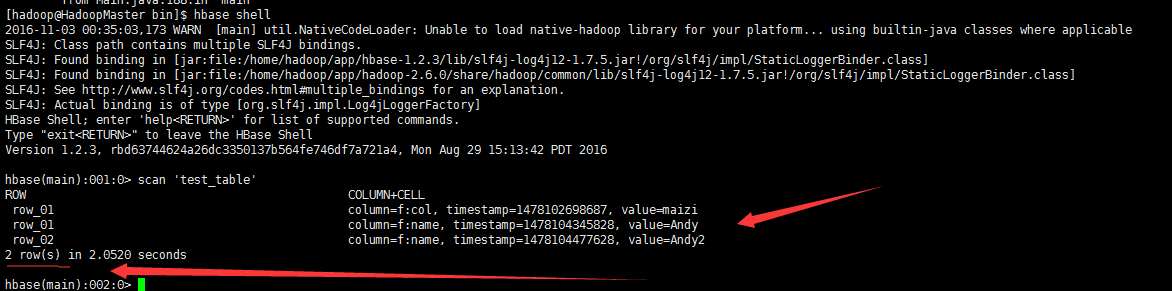

public static void main(String[] args) throws Exception {

HTable table = new HTable(getConfig(),TableName.valueOf("test_table"));//表名是test_table

Put put = new Put(Bytes.toBytes("row_02"));//行键是row_01

put.add(Bytes.toBytes("f"),Bytes.toBytes("name"),Bytes.toBytes("Andy2"));//列簇是f,列修饰符是col,值是maizi

table.put(put);

table.close();

} public static Configuration getConfig(){

Configuration configuration = new Configuration();

// conf.set("hbase.rootdir","hdfs:HadoopMaster:9000/hbase");

configuration.set("hbase.zookeeper.quorum", "HadoopMaster:2181,HadoopSlave1:2181,HadoopSlave2:2181");

return configuration;

}

}

scan 'test_table'

全盘扫描该Hbase数据表test_table

package zhouls.bigdata.HbaseProject.Test1; import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.hbase.HBaseConfiguration;

import org.apache.hadoop.hbase.TableName;

import org.apache.hadoop.hbase.client.HTable;

import org.apache.hadoop.hbase.client.Put;

import org.apache.hadoop.hbase.util.Bytes; public class HBaseTest {

public static void main(String[] args) throws Exception {

HTable table = new HTable(getConfig(),TableName.valueOf("test_table"));//表名是test_table

Put put = new Put(Bytes.toBytes("row_03"));//行键是row_01

put.add(Bytes.toBytes("f"),Bytes.toBytes("name"),Bytes.toBytes("Andy3"));//列簇是f,列修饰符是col,值是maizi

table.put(put);

table.close();

} public static Configuration getConfig(){

Configuration configuration = new Configuration();

// conf.set("hbase.rootdir","hdfs:HadoopMaster:9000/hbase");

configuration.set("hbase.zookeeper.quorum", "HadoopMaster:2181,HadoopSlave1:2181,HadoopSlave2:2181");

return configuration;

}

}

scan 'test_table'

全盘扫描该Hbase数据表test_table

HBase编程 API入门系列之put(客户端而言)(1)的更多相关文章

- HBase编程 API入门系列之delete(客户端而言)(3)

心得,写在前面的话,也许,中间会要多次执行,连接超时,多试试就好了. 前面的基础,如下 HBase编程 API入门系列之put(客户端而言)(1) HBase编程 API入门系列之get(客户端而言) ...

- HBase编程 API入门系列之get(客户端而言)(2)

心得,写在前面的话,也许,中间会要多次执行,连接超时,多试试就好了. 前面是基础,如下 HBase编程 API入门系列之put(客户端而言)(1) package zhouls.bigdata.Hba ...

- HBase编程 API入门系列之create(管理端而言)(8)

大家,若是看过我前期的这篇博客的话,则 HBase编程 API入门系列之put(客户端而言)(1) 就知道,在这篇博文里,我是在HBase Shell里创建HBase表的. 这里,我带领大家,学习更高 ...

- HBase编程 API入门系列之HTable pool(6)

HTable是一个比较重的对此,比如加载配置文件,连接ZK,查询meta表等等,高并发的时候影响系统的性能,因此引入了“池”的概念. 引入“HBase里的连接池”的目的是: 为了更高的,提高程序的并发 ...

- HBase编程 API入门系列之delete(管理端而言)(9)

大家,若是看过我前期的这篇博客的话,则 HBase编程 API入门之delete(客户端而言) 就知道,在这篇博文里,我是在客户端里删除HBase表的. 这里,我带领大家,学习更高级的,因为,在开发中 ...

- HBase编程 API入门系列之工具Bytes类(7)

这是从程度开发层面来说,为了方便和提高开发人员. 这个工具Bytes类,有很多很多方法,帮助我们HBase编程开发人员,提高开发. 这里,我只赘述,很常用的! package zhouls.bigda ...

- HBase编程 API入门系列之scan(客户端而言)(5)

心得,写在前面的话,也许,中间会要多次执行,连接超时,多试试就好了. package zhouls.bigdata.HbaseProject.Test1; import javax.xml.trans ...

- HBase编程 API入门系列之delete.deleteColumn和delete.deleteColumns区别(客户端而言)(4)

心得,写在前面的话,也许,中间会要多次执行,连接超时,多试试就好了. delete.deleteColumn和delete.deleteColumns区别是: deleteColumn是删除某一个列簇 ...

- HBase编程 API入门系列之modify(管理端而言)(10)

这里,我带领大家,学习更高级的,因为,在开发中,尽量不能去服务器上修改表. 所以,在管理端来修改HBase表.采用线程池的方式(也是生产开发里首推的) package zhouls.bigdata.H ...

随机推荐

- JAVA学习2:Eclipse集成Maven

我的环境: Eclipse:eclipse-jee-juno-SR2-win32 Maven:Maven3.0.5 1.Help->Eclipse Marketplace 2.选中要安装的插件, ...

- linux下对应mysql数据库的常用操作

ssh管理工具连接mysql数据库. 一.连接mysql数据库: 通过shh管理工具,登录linux的用户名,密码,进入ssh的命令行界面后,执行如下命令: mysql -u 数据库用户名 -p 然后 ...

- ugui的优化

参考文章 https://www.jianshu.com/p/061e67308e5f https://www.jianshu.com/p/8a9ccf34860e http://blog.jobbo ...

- 算法学习笔记之——priority queue、heapsort、symbol table、binary search trees

Priority Queue 类似一个Queue,但是按照priority的大小顺序来出队 一般存在两种方式来实施 排序法(ordered),在元素入队时即进行排序,这样插入操作为O(N),但出队为O ...

- CF520B——Two Buttons——————【广搜或找规律】

J - Two Buttons Time Limit:2000MS Memory Limit:262144KB 64bit IO Format:%I64d & %I64u Su ...

- ie6的display:inline-block实现

摘抄自原文链接 简单来说display:inline-block,就是可以让行内元素或块元素变成行内块元素,可以不float就能像块级元素一样设置宽高,又能像行内元素一样轻松居中. 在ie6中给div ...

- Python基础学习总结(四)

6.高阶特性 6.1迭代 如果给定一个list或tuple,我们可以通过for循环来遍历这个list或tuple,这种遍历我们称为迭代(Iteration).在Python中,迭代是通过for ... ...

- Hive Join

最近被朋友问到有关于Hive Join的问题,保守回答过后,来补充补充知识: Hive是基于Hadoop的一个数据仓库工具,可以将结构化的数据文件映射为一张数据库表,并提供类SQL查询功能. 一.Hi ...

- Java温故而知新(5)设计模式详解(23种)

一.设计模式的理解 刚开始“不懂”为什么要把很简单的东西搞得那么复杂.后来随着软件开发经验的增加才开始明白我所看到的“复杂”恰恰就是设计模式的精髓所在,我所理解的“简单”就是一把钥匙开一把锁的模式,目 ...

- golang 使用rrd的相关资料

一.简介 RRDtool是指Round Robin Database工具,即环状数据库.从功能上说,RRDtool可用于数据存储+数据展示.著名的网络流量绘图软件MRTG和集群监控系统Gan ...