Deep Neural Networks: A Getting Started Tutorial

Deep Neural Networks are the more computationally powerful cousins to regular neural networks. Learn exactly what DNNs are and why they are the hottest topic in machine learning research.

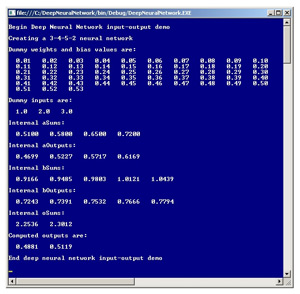

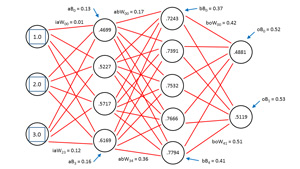

The term deep neural network can have several meanings, but one of the most common is to describe a neural network that has two or more layers of hidden processing neurons. This article explains how to create a deep neural network using C#. The best way to get a feel for what a deep neural network is, and to see where this article is headed, is to take a look at the demo program in Figure 1 and the associated diagram in Figure 2.

Both figures illustrate the input-output mechanism for a neural network that has three inputs, a first hidden layer ("A") with four neurons, a second hidden layer ("B") with five neurons and two outputs. A 3-4-5-2 neural network requires a total of (3 * 4) + 4 + (4 * 5) + 5 + (5 * 2) + 2 = 53 weights and bias values. In the demo, the weights and biases are set to dummy values of 0.01, 0.02, . . . , 0.53. The three inputs are arbitrarily set to 1.0, 2.0 and 3.0. Behind the scenes, the neural network uses the hyperbolic tangent activation function when computing the outputs of the two hidden layers, and the softmax activation function when computing the final output values. The two output values are 0.4881 and 0.5119.
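The count of 53 can be checked with a few lines of code. The following is a minimal sketch rather than part of the demo; the class name WeightCountSketch and method name CountWeightsAndBiases are hypothetical names chosen for illustration. Each pair of adjacent layers contributes one weight per connection plus one bias per node in the receiving layer.

```csharp
using System;

public static class WeightCountSketch
{
    // Number of weights plus biases in a fully connected feed-forward
    // network whose layer sizes are listed from input to output.
    // (Hypothetical helper, not part of the demo program.)
    public static int CountWeightsAndBiases(int[] layerSizes)
    {
        int total = 0;
        for (int i = 1; i < layerSizes.Length; ++i)
        {
            total += layerSizes[i - 1] * layerSizes[i]; // weights between two layers
            total += layerSizes[i];                     // one bias per receiving node
        }
        return total;
    }

    static void Main()
    {
        int count = CountWeightsAndBiases(new int[] { 3, 4, 5, 2 });
        Console.WriteLine(count); // 53
    }
}
```

Because the method takes an array of layer sizes, the same check works for networks with a different number of hidden layers.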

Research in the field of deep neural networks is relatively new compared to classical statistical techniques. The so-called Cybenko theorem states, somewhat loosely, that a fully connected feed-forward neural network with a single hidden layer can approximate any continuous function. The point of using a neural network with two layers of hidden neurons rather than a single hidden layer is that a two-hidden-layer neural network can, in theory, solve certain problems that a single-hidden-layer network cannot. Additionally, a two-hidden-layer neural network can sometimes solve problems that would require a huge number of nodes in a single-hidden-layer network.

This article assumes you have a basic grasp of neural network concepts and terminology and at least intermediate-level programming skills. The demo is coded using C#, but you should be able to refactor the code to other languages such as JavaScript or Visual Basic .NET without too much difficulty. Most normal error checking has been omitted from the demo to keep the size of the code small and the main ideas as clear as possible.

The Input-Output Mechanism

The input-output mechanism for a deep neural network with two hidden layers is best explained by example. Take a look at Figure 2. Because of the complexity of the diagram, most of the weights and bias value labels have been omitted, but because the values are sequential -- from 0.01 through 0.53 -- you should be able to infer exactly what the unlabeled values are. Nodes, weights and biases are indexed (zero-based) from top to bottom. The first hidden layer is called layer A in the demo code and the second hidden layer is called layer B. For example, the top-most input node has index [0] and the bottom-most node in the second hidden layer has index [4].

In the diagram, label iaW00 means, "input to layer A weight from input node 0 to A node 0." Label aB0 means, "A layer bias value for A node 0." The output for layer-A node [0] is 0.4699 and is computed as follows (first, the sum of the node's inputs times their associated weights is computed):

(1.0)(0.01) + (2.0)(0.05) + (3.0)(0.09) = 0.38

Next, the associated bias is added:

0.38 + 0.13 = 0.51

Then, the hyperbolic tangent function is applied to the sum to give the node's local output value:

tanh(0.51) = 0.4699

The three other values for the layer-A hidden nodes are computed in the same way, and are 0.5227, 0.5717 and 0.6169, as you can see in both Figure 1 and Figure 2. Notice that the demo treats bias values as separate constants, rather than the somewhat confusing and common alternative of treating bias values as special weights associated with dummy constant 1.0-value inputs.
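The layer-A arithmetic above can be sketched in code. This is an illustration under stated assumptions, not the demo's actual implementation: the class name LayerASketch and method name ComputeLayer are hypothetical, and the weight matrix is filled in by hand using the layout implied by the worked example (row index is the input node, column index is the layer-A node).

```csharp
using System;

public static class LayerASketch
{
    // For each node j: tanh( sum over i of inputs[i] * weights[i][j], plus biases[j] ).
    // (Hypothetical helper, not part of the demo program.)
    public static double[] ComputeLayer(double[] inputs, double[][] weights, double[] biases)
    {
        double[] outputs = new double[biases.Length];
        for (int j = 0; j < biases.Length; ++j)
        {
            double sum = 0.0;
            for (int i = 0; i < inputs.Length; ++i)
                sum += inputs[i] * weights[i][j]; // inputs times associated weights
            sum += biases[j];                     // add the node's bias
            outputs[j] = Math.Tanh(sum);          // hyperbolic tangent activation
        }
        return outputs;
    }

    static void Main()
    {
        double[] inputs = { 1.0, 2.0, 3.0 };
        double[][] iaWeights =
        {
            new double[] { 0.01, 0.02, 0.03, 0.04 },
            new double[] { 0.05, 0.06, 0.07, 0.08 },
            new double[] { 0.09, 0.10, 0.11, 0.12 }
        };
        double[] aBiases = { 0.13, 0.14, 0.15, 0.16 };

        double[] aOutputs = ComputeLayer(inputs, iaWeights, aBiases);
        foreach (double v in aOutputs)
            Console.Write(v.ToString("F4") + " ");
        Console.WriteLine("");
    }
}
```

Rounded to four decimals, the results should agree with the layer-A values quoted above: 0.4699, 0.5227, 0.5717 and 0.6169.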

The output for layer-B node [0] is 0.7243. The node's intermediate sum is:

(0.4699)(0.17) + (0.5227)(0.22) + (0.5717)(0.27) + (0.6169)(0.32) = 0.5466

The bias is added:

0.5466 + 0.37 = 0.9166

And the hyperbolic tangent is applied:

tanh(0.9166) = 0.7243

The same pattern is followed to compute the other layer-B hidden node values: 0.7391, 0.7532, 0.7666 and 0.7794. The values for final output nodes [0] and [1] are computed in a slightly different way because softmax activation is used to coerce the sum of the outputs to 1.0. Preliminary (before activation) output [0] is:

(0.7243)(0.42) + (0.7391)(0.44) + (0.7532)(0.46) + (0.7666)(0.48) + (0.7794)(0.50) + 0.52 = 2.2536

Similarly, preliminary output [1] is:

(0.7243)(0.43) + (0.7391)(0.45) + (0.7532)(0.47) + (0.7666)(0.49) + (0.7794)(0.51) + 0.53 = 2.3012

Applying softmax, final output [0] is:

exp(2.2536) / (exp(2.2536) + exp(2.3012)) = 0.4881

And final output [1] is:

exp(2.3012) / (exp(2.2536) + exp(2.3012)) = 0.5119

The two final output computations are illustrated using the math definition of softmax activation. The demo program uses a derivation of the definition to avoid arithmetic overflow.
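The overflow problem arises because exp(x) exceeds the range of type double for large x. One common way around it, sketched below, is to subtract the largest sum from every sum before exponentiating. The result is mathematically identical because the common factor cancels in the ratio. This is an assumption about the general technique, not a copy of the demo's Softmax method, and the class name SoftmaxSketch is hypothetical.

```csharp
using System;

public static class SoftmaxSketch
{
    // Softmax with the maximum subtracted first, so every exponent is <= 0
    // and Math.Exp cannot overflow. (Illustrative sketch, not the demo's code.)
    public static double[] Softmax(double[] sums)
    {
        double max = sums[0];
        for (int i = 1; i < sums.Length; ++i)
            if (sums[i] > max) max = sums[i];

        double scale = 0.0;
        double[] result = new double[sums.Length];
        for (int i = 0; i < sums.Length; ++i)
        {
            result[i] = Math.Exp(sums[i] - max);
            scale += result[i];
        }

        for (int i = 0; i < result.Length; ++i)
            result[i] /= scale; // outputs now sum to 1.0

        return result;
    }

    static void Main()
    {
        // The two preliminary outputs from the worked example.
        double[] y = Softmax(new double[] { 2.2536, 2.3012 });
        Console.WriteLine(y[0].ToString("F4") + " " + y[1].ToString("F4"));

        // Math.Exp(1000.0) by itself is Infinity; the shifted form stays finite.
        double[] big = Softmax(new double[] { 1000.0, 1001.0 });
        Console.WriteLine(big[0].ToString("F4") + " " + big[1].ToString("F4"));
    }
}
```

For the example sums of 2.2536 and 2.3012, this gives the same 0.4881 and 0.5119 as the direct definition.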

Overall Program Structure

The overall structure of the demo program, with a few minor edits to save space, is presented in Listing 1. To create the demo, I launched Visual Studio and created a new project named DeepNeuralNetwork. The demo has no significant Microsoft .NET Framework version dependencies, so any relatively recent version of Visual Studio should work. After the template-generated code loaded into the editor, I removed all using statements except the one that references the top-level System namespace. In the Solution Explorer window I renamed the file Program.cs to the slightly more descriptive DeepNetProgram.cs, and Visual Studio automatically renamed class Program for me.

Listing 1: Overall Demo Program Structure

    using System;

    namespace DeepNeuralNetwork
    {
        class DeepNetProgram
        {
            static void Main(string[] args)
            {
                Console.WriteLine("Begin Deep Neural Network demo");

                Console.WriteLine("Creating a 3-4-5-2 network");
                int numInput = 3;
                int numHiddenA = 4;
                int numHiddenB = 5;
                int numOutput = 2;

                DeepNeuralNetwork dnn =
                    new DeepNeuralNetwork(numInput,
                        numHiddenA, numHiddenB, numOutput);

                double[] weights = new double[] {
                    0.01, 0.02, 0.03, 0.04, 0.05, 0.06, 0.07, 0.08, 0.09, 0.10,
                    0.11, 0.12, 0.13, 0.14, 0.15, 0.16, 0.17, 0.18, 0.19, 0.20,
                    0.21, 0.22, 0.23, 0.24, 0.25, 0.26, 0.27, 0.28, 0.29, 0.30,
                    0.31, 0.32, 0.33, 0.34, 0.35, 0.36, 0.37, 0.38, 0.39, 0.40,
                    0.41, 0.42, 0.43, 0.44, 0.45, 0.46, 0.47, 0.48, 0.49, 0.50,
                    0.51, 0.52, 0.53 };

                dnn.SetWeights(weights);

                double[] xValues = new double[] { 1.0, 2.0, 3.0 };

                Console.WriteLine("Dummy weights and bias values are:");
                ShowVector(weights, 10, 2, true);

                Console.WriteLine("Dummy inputs are:");
                ShowVector(xValues, 3, 1, true);

                double[] yValues = dnn.ComputeOutputs(xValues);

                Console.WriteLine("Computed outputs are:");
                ShowVector(yValues, 2, 4, true);

                Console.WriteLine("End deep neural network demo");
                Console.ReadLine();
            }

            public static void ShowVector(double[] vector, int valsPerRow,
                int decimals, bool newLine)
            {
                for (int i = 0; i < vector.Length; ++i)
                {
                    if (i % valsPerRow == 0) Console.WriteLine("");
                    Console.Write(vector[i].ToString("F" + decimals) + " ");
                }
                if (newLine) Console.WriteLine("");
            }
        } // Program

        public class DeepNeuralNetwork { . . }
    }

The program class consists of the Main entry point method and a ShowVector helper method. The deep neural network is encapsulated in a program-defined class named DeepNeuralNetwork. The Main method instantiates a 3-4-5-2 fully connected feed-forward neural network and assigns 53 dummy values for the network's weights and bias values using method SetWeights. After dummy inputs of 1.0, 2.0 and 3.0 are set up in array xValues, those inputs are fed to the network via method ComputeOutputs, which returns the outputs into array yValues. Notice that the demo illustrates only the deep neural network feed-forward mechanism, and doesn't perform any training.

The Deep Neural Network Class

The structure of the deep neural network class is presented in Listing 2. The network is hard-coded for two hidden layers. Neural networks with three or more hidden layers are rare, but can be easily created using the design pattern in this article. A challenge when working with deep neural networks is keeping the names of the many weights, biases, inputs and outputs straight. The input-to-layer-A weights are stored in matrix iaWeights, the layer-A-to-layer-B weights are stored in matrix abWeights, and the layer-B-to-output weights are stored in matrix boWeights.
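To make the naming scheme concrete, here is a minimal sketch of how a flat array of 53 values might be unpacked into these matrices and bias arrays, and then pushed through the network. It is not the demo's code. The class FeedForwardSketch and helpers TakeMatrix, TakeVector and Layer are hypothetical, and the ordering (input-to-A weights, A biases, A-to-B weights, B biases, B-to-output weights, output biases, each matrix filled row by row) is an assumption inferred from the worked example, where iaW00 = 0.01 and aB0 = 0.13.

```csharp
using System;

public static class FeedForwardSketch
{
    // Copies the next rows * cols values of flat into a jagged matrix, row by row.
    static double[][] TakeMatrix(double[] flat, ref int k, int rows, int cols)
    {
        double[][] m = new double[rows][];
        for (int i = 0; i < rows; ++i)
        {
            m[i] = new double[cols];
            for (int j = 0; j < cols; ++j) m[i][j] = flat[k++];
        }
        return m;
    }

    // Copies the next n values of flat into a vector.
    static double[] TakeVector(double[] flat, ref int k, int n)
    {
        double[] v = new double[n];
        for (int i = 0; i < n; ++i) v[i] = flat[k++];
        return v;
    }

    // Weighted sums plus biases for one layer; applies tanh when requested.
    static double[] Layer(double[] x, double[][] w, double[] b, bool applyTanh)
    {
        double[] result = new double[b.Length];
        for (int j = 0; j < b.Length; ++j)
        {
            double sum = b[j];
            for (int i = 0; i < x.Length; ++i) sum += x[i] * w[i][j];
            result[j] = applyTanh ? Math.Tanh(sum) : sum;
        }
        return result;
    }

    public static double[] ComputeOutputs(double[] flat, double[] xValues,
        int numInput, int numHiddenA, int numHiddenB, int numOutput)
    {
        int expected = (numInput * numHiddenA) + numHiddenA +
            (numHiddenA * numHiddenB) + numHiddenB +
            (numHiddenB * numOutput) + numOutput;
        if (flat.Length != expected)
            throw new ArgumentException("Bad weights array length");

        // Assumed ordering, inferred from the worked example.
        int k = 0;
        double[][] iaWeights = TakeMatrix(flat, ref k, numInput, numHiddenA);
        double[] aBiases = TakeVector(flat, ref k, numHiddenA);
        double[][] abWeights = TakeMatrix(flat, ref k, numHiddenA, numHiddenB);
        double[] bBiases = TakeVector(flat, ref k, numHiddenB);
        double[][] boWeights = TakeMatrix(flat, ref k, numHiddenB, numOutput);
        double[] oBiases = TakeVector(flat, ref k, numOutput);

        double[] aOutputs = Layer(xValues, iaWeights, aBiases, true);
        double[] bOutputs = Layer(aOutputs, abWeights, bBiases, true);
        double[] oSums = Layer(bOutputs, boWeights, oBiases, false);

        // Softmax, with the largest sum subtracted first to avoid overflow.
        double max = oSums[0];
        foreach (double s in oSums) if (s > max) max = s;
        double scale = 0.0;
        double[] outputs = new double[oSums.Length];
        for (int i = 0; i < oSums.Length; ++i)
        {
            outputs[i] = Math.Exp(oSums[i] - max);
            scale += outputs[i];
        }
        for (int i = 0; i < outputs.Length; ++i) outputs[i] /= scale;
        return outputs;
    }

    static void Main()
    {
        // Dummy values 0.01, 0.02, . . . , 0.53, as in the demo.
        double[] weights = new double[53];
        for (int i = 0; i < weights.Length; ++i) weights[i] = 0.01 * (i + 1);

        double[] y = ComputeOutputs(weights, new double[] { 1.0, 2.0, 3.0 }, 3, 4, 5, 2);
        Console.WriteLine(y[0].ToString("F4") + " " + y[1].ToString("F4"));
    }
}
```

With the dummy weights and inputs of 1.0, 2.0 and 3.0, the two outputs should round to the 0.4881 and 0.5119 shown in the worked example, which is a quick way to confirm that the assumed ordering matches the diagram.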

Listing 2: Deep Neural Network Class Structure

    public class DeepNeuralNetwork
    {
        private int numInput;
        private int numHiddenA;
        private int numHiddenB;
        private int numOutput;

        private double[] inputs;

        private double[][] iaWeights;
        private double[][] abWeights;
        private double[][] boWeights;

        private double[] aBiases;
        private double[] bBiases;
        private double[] oBiases;

        private double[] aOutputs;
        private double[] bOutputs;
        private double[] outputs;

        private static Random rnd;

        public DeepNeuralNetwork(int numInput, int numHiddenA,
            int numHiddenB, int numOutput) { . . }
        private static double[][] MakeMatrix(int rows, int cols) { . . }
        private void InitializeWeights() { . . }
        public void SetWeights(double[] weights) { . . }
        public double[] ComputeOutputs(double[] xValues) { . . }
        private static double HyperTanFunction(double x) { . . }
        private static double[] Softmax(double[] oSums) { . . }
    }

Source: https://visualstudiomagazine.com/articles/2014/06/01/deep-neural-networks.aspx
