[Linear Algebra] Basic Matrix Algebra

Overview: Matrix algebra

Matrix algebra covers the rules that allow matrices to be manipulated algebraically via addition, subtraction, multiplication and (in place of division) inversion. Although the manipulations illustrated below may look like those of ordinary algebra, bear in mind that everything we do in matrix algebra manipulates LINEAR COMBINATIONS, not individual elements of matrices. This difference helps rationalize many of the properties below, so please keep it in mind.

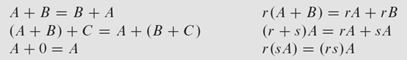

Matrix addition

Matrix addition requires all matrices in the sum to be of the same size. The reason is easiest to see column by column: matrices of the same size have columns of the same length, so the j-th column of the sum is $\mathbf{a}_j + \mathbf{b}_j + \mathbf{c}_j + \dots + \mathbf{n}_j = \mathbf{y}_j$ for every column in the addition chain. Nothing special, everything simple enough.
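A minimal sketch in plain Python, assuming matrices are stored as lists of rows; the helper name `mat_add` is hypothetical. It checks the same-size requirement and then forms each column of the sum as the sum of the corresponding columns.

```python
def mat_add(*matrices):
    """Add any number of matrices (lists of rows) that all share one size."""
    rows, cols = len(matrices[0]), len(matrices[0][0])
    for m in matrices:
        if len(m) != rows or any(len(r) != cols for r in m):
            raise ValueError("all matrices in a sum must have the same size")
    # Entry (i, j) of the sum adds entry (i, j) of every matrix,
    # so column j of the result is a_j + b_j + ... + n_j.
    return [[sum(m[i][j] for m in matrices) for j in range(cols)]
            for i in range(rows)]

A = [[1, 2], [3, 4]]
B = [[5, 6], [7, 8]]
C = [[1, 0], [0, 1]]
print(mat_add(A, B, C))  # [[7, 8], [10, 13]]
```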

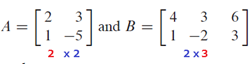

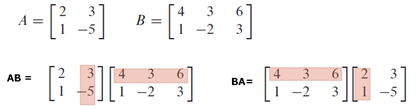

Matrix multiplication

While matrix multiplication does not impose the rather restrictive 'same size' requirement of matrix addition and subtraction, it still requires the number of columns of the preceding matrix to equal the number of rows of the matrix that follows: if A is m×n and B is n×p, then AB is m×p.

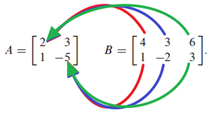

Note that matrix multiplication is NOT COMMUTATIVE, meaning that $AB \neq BA$ in general. A sketch of proof follows:
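A quick numerical counterexample, sketched in plain Python with a hypothetical `mat_mul` helper (not a library function): two 2×2 matrices whose products in the two orders differ. One counterexample is enough to show that AB = BA cannot hold in general.

```python
def mat_mul(A, B):
    """Multiply A (m x n) by B (n x p) using the row-column rule."""
    if len(A[0]) != len(B):
        raise ValueError("columns of A must equal rows of B")
    return [[sum(A[i][k] * B[k][j] for k in range(len(B)))
             for j in range(len(B[0]))] for i in range(len(A))]

A = [[1, 2], [0, 1]]
B = [[1, 0], [3, 1]]
print(mat_mul(A, B))  # [[7, 2], [3, 1]]
print(mat_mul(B, A))  # [[1, 2], [3, 7]]
```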

In fact, matrix multiplication is a batch of matrix-vector multiplications: $AB = [A\mathbf{b}_1\ A\mathbf{b}_2\ \cdots\ A\mathbf{b}_p]$. In each $A\mathbf{b}_i$, the column $\mathbf{b}_i$ of the right-hand matrix supplies the weights for the matrix that comes before it: each entry of $\mathbf{b}_i$ acts as the coefficient of the corresponding column of A.
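The column view above can be sketched directly in plain Python (helper names `mat_vec` and `mat_mul_by_columns` are hypothetical): each product $A\mathbf{b}$ is built as a weighted sum of A's columns, and the columns of AB are assembled one by one.

```python
def mat_vec(A, b):
    """A b as a linear combination of A's columns weighted by b's entries."""
    result = [0] * len(A)
    for k, weight in enumerate(b):          # weight = k-th entry of b
        for i in range(len(A)):
            result[i] += weight * A[i][k]   # add weight * (column k of A)
    return result

def mat_mul_by_columns(A, B):
    """Column j of AB is A times column j of B."""
    p = len(B[0])
    cols = [mat_vec(A, [row[j] for row in B]) for j in range(p)]
    # Reassemble the computed columns into a list of rows.
    return [[cols[j][i] for j in range(p)] for i in range(len(A))]

A = [[1, 2], [3, 4], [5, 6]]   # 3 x 2
B = [[1, 0, 2], [0, 1, 1]]     # 2 x 3
print(mat_mul_by_columns(A, B))  # [[1, 2, 4], [3, 4, 10], [5, 6, 16]]
```

For instance, the third column of B is (2, 1), so the third column of AB is 2·(first column of A) + 1·(second column of A) = (4, 10, 16).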

Sometimes in matrix multiplication, it's hard to recall which entry of the resulting matrix the current operation contributes to. Fortunately, we have the row-column rule: the (i, j) entry of AB combines row i of A with column j of B, $(AB)_{ij} = \sum_k a_{ik}b_{kj}$. Equivalently, row i of AB is a linear combination of the rows of B, weighted by the entries of row i of A.
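Both readings of the row-column rule, sketched in plain Python on the same 3×2 and 2×3 matrices; `entry` and `row_of_product` are hypothetical helper names, and indices are 0-based.

```python
def entry(A, B, i, j):
    """(AB)_ij = sum over k of a_ik * b_kj: row i of A against column j of B."""
    return sum(A[i][k] * B[k][j] for k in range(len(B)))

def row_of_product(A, B, i):
    """Row i of AB: rows of B combined with weights from row i of A."""
    result = [0] * len(B[0])
    for k, weight in enumerate(A[i]):
        for j in range(len(B[0])):
            result[j] += weight * B[k][j]
    return result

A = [[1, 2], [3, 4], [5, 6]]
B = [[1, 0, 2], [0, 1, 1]]
print(entry(A, B, 2, 2))        # 16  (= 5*2 + 6*1)
print(row_of_product(A, B, 1))  # [3, 4, 10]  (= 3*row0 + 4*row1 of B)
```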

Another mnemonic to help us remember the order :D

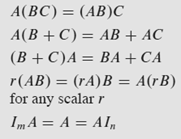

So we have the following 'seemingly trivial' matrix multiplication rules, but they are all reasonable. Note that although matrix multiplication is both left- and right-distributive, it is not commutative! Order must be strictly observed so that the requirement 'number of columns of the left factor = number of rows of the right factor' is upheld. Moreover, this non-commutativity is why the identity matrix changes dimension depending on the side it multiplies: for an m×n matrix A, $I_mA = A = AI_n$.
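A small numerical check of these rules in plain Python (the helper functions and sample matrices are illustrative assumptions, not from the original): both distributive laws hold, and multiplying a 2×3 matrix by the identity needs $I_2$ on the left but $I_3$ on the right.

```python
def mat_mul(A, B):
    if len(A[0]) != len(B):
        raise ValueError("columns of A must equal rows of B")
    return [[sum(A[i][k] * B[k][j] for k in range(len(B)))
             for j in range(len(B[0]))] for i in range(len(A))]

def mat_add(A, B):
    return [[a + b for a, b in zip(ra, rb)] for ra, rb in zip(A, B)]

def identity(n):
    return [[1 if i == j else 0 for j in range(n)] for i in range(n)]

A = [[1, 2, 3], [4, 5, 6]]      # 2 x 3
B = [[1, 0], [2, 1], [0, 3]]    # 3 x 2
C = [[2, 2], [1, 0], [1, 1]]    # 3 x 2
D = [[1, 1, 0], [0, 2, 1]]      # 2 x 3

# Left distributive: A(B + C) = AB + AC
assert mat_mul(A, mat_add(B, C)) == mat_add(mat_mul(A, B), mat_mul(A, C))
# Right distributive: (B + C)D = BD + CD
assert mat_mul(mat_add(B, C), D) == mat_add(mat_mul(B, D), mat_mul(C, D))
# The identity's size depends on the side: I_2 A = A = A I_3
assert mat_mul(identity(2), A) == A == mat_mul(A, identity(3))
print("all checks passed")
```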

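Some sketches of proofs in terms of columns follow. Note that B + C is an entrywise operation, so column j of A(B + C) is $A(\mathbf{b}_j + \mathbf{c}_j) = A\mathbf{b}_j + A\mathbf{c}_j$; adding before multiplying does not disturb the linear combination.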

Finally, there are a few warnings about matrix multiplication. As mentioned, always bear in mind that matrix multiplication is completely different from real-number multiplication: each column of AB is a linear combination of the columns of A, weighted by the entries of the corresponding column of B. This yields the following reminders about matrix multiplication:

Another aspect of matrix multiplication is the matrix power, where a matrix is multiplied by itself: $A^k = A \cdots A$ (k factors). Because of this chaining, A must be an n×n square matrix; otherwise the product A·A is not even defined.
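A minimal sketch of matrix powers by repeated multiplication in plain Python; `mat_pow` is a hypothetical helper, and it uses the common convention $A^0 = I$. The 2×2 example is chosen because its powers are easy to recognize (Fibonacci numbers).

```python
def mat_mul(A, B):
    return [[sum(A[i][k] * B[k][j] for k in range(len(B)))
             for j in range(len(B[0]))] for i in range(len(A))]

def mat_pow(A, k):
    """A^k = A A ... A (k factors); A^0 is taken to be the identity."""
    n = len(A)
    if any(len(row) != n for row in A):
        raise ValueError("matrix power needs a square n x n matrix")
    result = [[1 if i == j else 0 for j in range(n)] for i in range(n)]
    for _ in range(k):
        result = mat_mul(result, A)   # chain one more factor of A
    return result

F = [[1, 1], [1, 0]]
print(mat_pow(F, 5))  # [[8, 5], [5, 3]]
```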

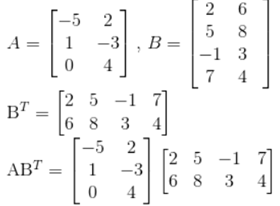

Next, I'm going to introduce the matrix transpose, whose applications are not yet obvious at this point. Transposing a matrix interchanges its rows and columns, and hence its dimensions: the (i, j) entry of $A^T$ is the (j, i) entry of A. For a 3×2 matrix B, $B^T$ is 2×3. This sometimes comes in handy in matrix multiplication, like:
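A short sketch of the transpose in plain Python (`transpose` and `mat_mul` are hypothetical helpers). It also checks the standard identity $(AB)^T = B^TA^T$, one common place where the transpose shows up in multiplication; note the reversed order of the factors.

```python
def transpose(A):
    """Entry (i, j) of A^T is entry (j, i) of A."""
    return [list(col) for col in zip(*A)]

def mat_mul(A, B):
    return [[sum(A[i][k] * B[k][j] for k in range(len(B)))
             for j in range(len(B[0]))] for i in range(len(A))]

B = [[1, 2], [3, 4], [5, 6]]   # 3 x 2
print(transpose(B))            # [[1, 3, 5], [2, 4, 6]]  (2 x 3)

A = [[1, 0, 2], [0, 1, 1]]     # 2 x 3
# (AB)^T = B^T A^T: the factors swap places under transposition.
assert transpose(mat_mul(A, B)) == mat_mul(transpose(B), transpose(A))
```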

Matrix inverse

Warning: the matrix inverse applies to any invertible matrix, not only 2×2 ones! It's just that computing the inverse of a larger matrix is a bit more involved. Interested readers may refer to here. Thus, the properties below also apply to larger matrices.

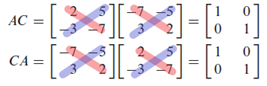

An invertible (non-singular) matrix must be n×n, and its inverse is uniquely determined. Note here that A is invertible when there is an n×n matrix C with $CA = I$ and $AC = I$; both equations must be satisfied simultaneously. Otherwise, A is a singular matrix. This highlights an important property of matrix multiplication: left-multiplying and right-multiplying do make a difference. It also justifies the n×n requirement for a matrix to be invertible.

We can conclude from below that indeed $A^{-1} = C$. Moreover, by looking closely at the relation between the colored stripes above, we can tell whether a matrix is invertible by simple inspection. In general, an invertible matrix satisfies:

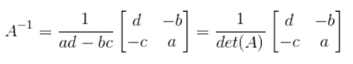

So, from above, for $A = \begin{bmatrix} a & b \\ c & d \end{bmatrix}$, if $ad - bc \neq 0$, A is invertible. Still, this tells us nothing about what its inverse is, so the following formula comes to the rescue:

$$A^{-1} = \frac{1}{ad - bc}\begin{bmatrix} d & -b \\ -c & a \end{bmatrix}$$
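A minimal sketch of the 2×2 test and formula in plain Python; `inverse_2x2` is a hypothetical helper, and `fractions.Fraction` keeps the entries exact so the check $AA^{-1} = I$ holds without rounding.

```python
from fractions import Fraction

def inverse_2x2(M):
    """Inverse of [[a, b], [c, d]] as 1/(ad - bc) * [[d, -b], [-c, a]]."""
    (a, b), (c, d) = M
    det = a * d - b * c
    if det == 0:
        return None                  # ad - bc = 0: M is singular
    k = Fraction(1, det)
    return [[k * d, -k * b], [-k * c, k * a]]

def mat_mul(A, B):
    return [[sum(A[i][k] * B[k][j] for k in range(len(B)))
             for j in range(len(B[0]))] for i in range(len(A))]

A = [[3, 4], [5, 6]]                 # ad - bc = 18 - 20 = -2
A_inv = inverse_2x2(A)               # entries -3, 2, 5/2, -3/2
assert mat_mul(A, A_inv) == [[1, 0], [0, 1]]
assert mat_mul(A_inv, A) == [[1, 0], [0, 1]]
print(inverse_2x2([[1, 2], [2, 4]]))  # None
```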

With the uniqueness of the matrix inverse, we can settle the uniqueness question for a matrix equation without going through the pain of the row-reduction algorithm: for any invertible n×n matrix A, $A\mathbf{x} = \mathbf{b}$ has a unique solution, $\mathbf{x} = A^{-1}\mathbf{b}$, for every b. A sketch of proof: $A^{-1}A = I$, and the identity matrix has no free variables and has a pivot position in every column. This gives a unique solution.

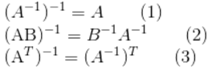

Below are some properties of invertible matrices:

(2) states that if A and B are both invertible n×n matrices, then AB is also invertible and $(AB)^{-1} = B^{-1}A^{-1}$.

Proof of (2):

Proof of (3): $(A^{-1})^T A^T = (AA^{-1})^T = I^T = I$ and $A^T (A^{-1})^T = (A^{-1}A)^T = I^T = I$, so $(A^T)^{-1} = (A^{-1})^T$. And hence, if A is invertible, so is $A^T$.
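Properties (2) and (3) can be checked numerically with exact arithmetic. This is a sketch in plain Python that reuses the 2×2 inverse formula; the helpers and the sample matrices are illustrative assumptions.

```python
from fractions import Fraction

def inverse_2x2(M):
    (a, b), (c, d) = M
    k = Fraction(1, a * d - b * c)   # assumes ad - bc != 0
    return [[k * d, -k * b], [-k * c, k * a]]

def mat_mul(A, B):
    return [[sum(A[i][k] * B[k][j] for k in range(len(B)))
             for j in range(len(B[0]))] for i in range(len(A))]

def transpose(A):
    return [list(col) for col in zip(*A)]

A = [[2, 1], [1, 1]]   # ad - bc = 1
B = [[1, 2], [3, 5]]   # ad - bc = -1

# Property (2): (AB)^{-1} = B^{-1} A^{-1}  (reversed order)
assert inverse_2x2(mat_mul(A, B)) == mat_mul(inverse_2x2(B), inverse_2x2(A))
# Property (3): (A^T)^{-1} = (A^{-1})^T
assert inverse_2x2(transpose(A)) == transpose(inverse_2x2(A))
```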

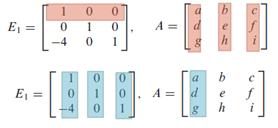

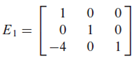

Elementary Matrix

An elementary matrix is an identity matrix $I_n$ to which a SINGLE row operation has been applied. Recall the painful row operations we perform when row-reducing a matrix. These operations can be carried out by multiplication instead: rather than applying a row operation directly to A, we apply it to the identity matrix I, one at a time, and left-multiply A by the resulting elementary matrix E; the product EA is exactly A with that row operation performed. As we know, getting the reduced row echelon form of A takes a series of row operations. This means we apply row operations to a series of identity matrices before left-multiplying them onto A, as follows: $E_k \cdots E_2 E_1 A$.

Since row operations do not change the solution set of a matrix equation, $E_k \cdots E_2 E_1 A$ is row equivalent to A!
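A sketch in plain Python showing that left-multiplying by an elementary matrix performs the row operation; `replacement` is a hypothetical helper building "row target += factor × row source" from the identity. Rows R1, R2, R3 are indices 0, 1, 2 in the code.

```python
def identity(n):
    return [[1 if i == j else 0 for j in range(n)] for i in range(n)]

def mat_mul(A, B):
    return [[sum(A[i][k] * B[k][j] for k in range(len(B)))
             for j in range(len(B[0]))] for i in range(len(A))]

def replacement(n, target, source, factor):
    """I_n after the single row operation: row target += factor * row source."""
    E = identity(n)
    E[target][source] += factor
    return E

A = [[1, 2, 3], [4, 5, 6], [7, 8, 10]]
E1 = replacement(3, 1, 0, -4)   # R2 <- R2 - 4 R1
E2 = replacement(3, 2, 0, -7)   # R3 <- R3 - 7 R1

# Doing R2 <- R2 - 4 R1 directly on A ...
direct = [row[:] for row in A]
direct[1] = [x - 4 * y for x, y in zip(A[1], A[0])]
# ... gives the same matrix as computing E1 A.
assert mat_mul(E1, A) == direct
print(mat_mul(E2, mat_mul(E1, A)))  # [[1, 2, 3], [0, -3, -6], [0, -6, -11]]
```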

Recall that row operations are reversible. Letting E' denote the elementary matrix of the reverse row operation, we have $E'E = I$, so $E'(EA) = IA = A$.

This sounds familiar… doesn't it mean that elementary matrices are invertible? Indeed, finding E' is equivalent to finding $E^{-1}$. Unfortunately, our discussion of invertibility above was limited to 2×2 matrices. How, then, can we invert an n×n matrix in general?

Although inverting a general matrix can be as computationally involved as illustrated in the link above, inverting an elementary matrix E is trivial: simply tell which row operation was applied to I to generate E (such as $E_1$ above), then apply the reverse operation to I. Thus, we have the following:
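A sketch in plain Python of inverting the three kinds of elementary matrices by reversing their row operation (the specific operations are illustrative choices). Rows R1, R2, R3 are indices 0, 1, 2 in the code.

```python
from fractions import Fraction

def identity(n):
    return [[1 if i == j else 0 for j in range(n)] for i in range(n)]

def mat_mul(A, B):
    return [[sum(A[i][k] * B[k][j] for k in range(len(B)))
             for j in range(len(B[0]))] for i in range(len(A))]

I = identity(3)

# Replacement R2 <- R2 + 5 R1 is undone by R2 <- R2 - 5 R1.
E = identity(3)
E[1][0] = 5
E_inv = identity(3)
E_inv[1][0] = -5

# Interchange R1 <-> R3 is its own inverse.
P = identity(3)
P[0], P[2] = P[2], P[0]

# Scaling R2 <- 4 R2 is undone by R2 <- (1/4) R2.
S = identity(3)
S[1][1] = 4
S_inv = identity(3)
S_inv[1][1] = Fraction(1, 4)

assert mat_mul(E_inv, E) == I and mat_mul(E, E_inv) == I
assert mat_mul(P, P) == I
assert mat_mul(S_inv, S) == I and mat_mul(S, S_inv) == I
```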

An n×n matrix A is invertible ($AA^{-1} = A^{-1}A = I_n$) if and only if it can be row-reduced to $I_n$. Moreover, we claim that any sequence of elementary row operations that reduces A to $I_n$ also transforms $I_n$ into $A^{-1}$. Assuming A is invertible, a sketch of proof follows:

Thus, we have:

This has an important implication. As we know, in general:

But now we can apply the elementary matrices IN THE SAME SEQUENCE to transform:

- A to In

- In to A-1

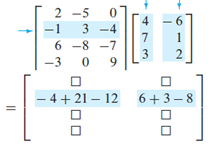

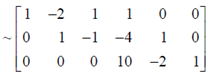

If you are just given a matrix A, how can you test whether its inverse exists? And how do you find it? Recall from above that invertibility means:

We also use determinants to test for and find the inverse of 2×2 matrices, and we have that complicated procedure for matrices beyond 2×2. But the discussion immediately above suggests another way of handling n×n invertibility without going through the pain of that complex algorithm.

Why not put them all together into [A I] and apply a sequence of elementary row operations to yield $[I\ A^{-1}]$? If the n×n matrix A does not have an inverse, [A I] cannot be row reduced to $[I\ A^{-1}]$. When only a certain column of $A^{-1}$ is needed, we can even row reduce $[A\ \mathbf{e}_i]$ instead of [A I], since $[A\ I] = [A\ \mathbf{e}_1\ \mathbf{e}_2 \cdots \mathbf{e}_n]$ and column i of $A^{-1}$ is the solution of $A\mathbf{x} = \mathbf{e}_i$.
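The [A I] procedure can be sketched in plain Python with exact `fractions.Fraction` arithmetic. The function name is hypothetical, and choosing the first nonzero entry as the pivot is a simplifying choice (numerical libraries pick pivots differently to control rounding).

```python
from fractions import Fraction

def inverse_gauss_jordan(A):
    """Row reduce [A I]; return A^{-1} if the left block becomes I, else None."""
    n = len(A)
    # Build the augmented matrix [A I] with exact rational entries.
    M = [[Fraction(x) for x in row] + [Fraction(1 if i == j else 0) for j in range(n)]
         for i, row in enumerate(A)]
    for col in range(n):
        # Find a row at or below `col` with a nonzero entry in this column.
        pivot = next((r for r in range(col, n) if M[r][col] != 0), None)
        if pivot is None:
            return None                      # no pivot here: A is singular
        M[col], M[pivot] = M[pivot], M[col]  # interchange
        p = M[col][col]
        M[col] = [x / p for x in M[col]]     # scaling: make the pivot 1
        for r in range(n):                   # replacement: clear the column
            if r != col and M[r][col] != 0:
                f = M[r][col]
                M[r] = [x - f * y for x, y in zip(M[r], M[col])]
    return [row[n:] for row in M]            # right block is now A^{-1}

A = [[2, 1, 1], [1, 3, 2], [1, 0, 0]]
A_inv = inverse_gauss_jordan(A)
product = [[sum(A[i][k] * A_inv[k][j] for k in range(3)) for j in range(3)]
           for i in range(3)]
assert product == [[1, 0, 0], [0, 1, 0], [0, 0, 1]]
print(inverse_gauss_jordan([[1, 2], [2, 4]]))  # None: [A I] never reaches [I A^-1]
```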

Characteristics of invertible matrices

Most of you may have encountered the lengthy list of properties of invertible matrices. However, everything can be boiled down to the following:

invertible == row equivalent to $I_n$ == n pivot positions == $A\mathbf{x} = \mathbf{0}$ has only the trivial solution == columns all linearly independent == $\mathbf{x} \mapsto A\mathbf{x}$ is one-to-one == $A\mathbf{x} = \mathbf{b}$ has at least one solution for every b in $\mathbb{R}^n$ == columns span $\mathbb{R}^n$

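However, there is still one tricky property that requires some derivation:

The linear transformation $\mathbf{x} \mapsto A\mathbf{x}$ maps $\mathbb{R}^n$ onto $\mathbb{R}^n$

Let's consider the proof as follows: as A is invertible, A must be n×n and its columns span $\mathbb{R}^n$. So every b in $\mathbb{R}^n$ is the image of some x (namely $\mathbf{x} = A^{-1}\mathbf{b}$), and the above holds.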

With these properties under our belt, we can quickly determine whether an n×n matrix is invertible: if it row reduces to the identity matrix (equivalently, $A\mathbf{x} = \mathbf{b}$ has a unique solution for every b), it must be invertible. After all, invertibility is guaranteed exactly for matrices that are row equivalent to the identity matrix.
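A sketch of this quick test in plain Python: count pivot positions by forward elimination and compare with n. The helpers `pivot_count` and `is_invertible` are hypothetical names, and exact `Fraction` arithmetic avoids rounding.

```python
from fractions import Fraction

def pivot_count(A):
    """Number of pivot positions found by forward elimination."""
    M = [[Fraction(x) for x in row] for row in A]
    rows, cols = len(M), len(M[0])
    r = 0
    for c in range(cols):
        pivot = next((i for i in range(r, rows) if M[i][c] != 0), None)
        if pivot is None:
            continue                    # no pivot: a free-variable column
        M[r], M[pivot] = M[pivot], M[r]
        for i in range(r + 1, rows):    # zero out entries below the pivot
            f = M[i][c] / M[r][c]
            M[i] = [x - f * y for x, y in zip(M[i], M[r])]
        r += 1
        if r == rows:
            break
    return r

def is_invertible(A):
    """For a square n x n matrix: invertible iff there are n pivot positions."""
    n = len(A)
    return all(len(row) == n for row in A) and pivot_count(A) == n

print(is_invertible([[2, 1], [1, 1]]))                    # True
print(is_invertible([[1, 2, 3], [2, 4, 6], [1, 0, 1]]))   # False (row 2 = 2 x row 1)
```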

Despite the universality of these characteristics, which link linear independence to solution uniqueness, one limitation is that they all apply only to square matrices. For non-square matrices, we still need to painfully row-reduce the matrix to decide the basic and free variables.

Invertible linear transformation

Definition first. A linear transformation $T: \mathbb{R}^n \to \mathbb{R}^n$ is invertible

- iff the standard matrix of T, namely A, is invertible (⇒ this explains why T needs to be $\mathbb{R}^n \to \mathbb{R}^n$)

- iff there exists a function $S: \mathbb{R}^n \to \mathbb{R}^n$ such that the following hold FOR ALL x IN $\mathbb{R}^n$:

S(T(x)) = x

T(S(x)) = x

Note that here we are talking about the INVERSE of a linear transformation: applying the inverse to the output of the original transformation (or the other way round) recovers the input vector x. This becomes useful in solving matrix equations later.

If the linear transformation T is invertible, $S(\mathbf{x}) = A^{-1}\mathbf{x}$ is the unique function satisfying $S(T(\mathbf{x})) = \mathbf{x}$ and $T(S(\mathbf{x})) = \mathbf{x}$, namely:

Thus, it mirrors what we've seen for matrices, and the uniqueness of S follows from the uniqueness of the inverse.
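A small plain-Python sketch of T and its inverse S as functions; the standard matrix A is an illustrative choice with ad − bc = 1, and its inverse is written out from the 2×2 formula. Composing them in either order returns the input vector.

```python
A = [[2, 1], [5, 3]]        # standard matrix of T (ad - bc = 1)
A_inv = [[3, -1], [-5, 2]]  # its inverse, from the 2x2 formula

def mat_vec(M, x):
    return [sum(m * v for m, v in zip(row, x)) for row in M]

def T(x):
    return mat_vec(A, x)      # T(x) = A x

def S(x):
    return mat_vec(A_inv, x)  # S(x) = A^{-1} x

for x in ([1, 0], [0, 1], [4, -7]):
    assert S(T(x)) == x and T(S(x)) == x
print(T([4, -7]), S(T([4, -7])))  # [1, -1] [4, -7]
```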

To summarize: for a linear transformation $T: \mathbb{R}^n \to \mathbb{R}^n$ whose standard matrix A has columns that are linearly independent and span $\mathbb{R}^n$, the mapping is both one-to-one and onto. Hence T is an invertible linear transformation, and there exists a unique inverse, $S(\mathbf{x}) = A^{-1}\mathbf{x}$, which recovers the input vector x from T(x).

Some Examples

Follow-up: more examples will be added from time to time, along with an advanced matrix algebra post.

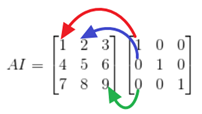

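Ex 1. Proof that AI = A: column j of AI is $A\mathbf{e}_j$, and in this linear combination every column $\mathbf{a}_i$ whose coefficient is not one (coefficient 0, for i ≠ j) is zeroed out, leaving $\mathbf{a}_j$.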

Ex 2. Prove that when an n×n matrix A is invertible, $A\mathbf{x} = \mathbf{b}$ has a unique solution for every b in $\mathbb{R}^n$. From the following, we see that the uniqueness of the solution relies on the uniqueness of the inverse, $A^{-1}$.

Ex 3. We mentioned that by applying a series of elementary row operations $E_1, \dots, E_k$ to [A I], we get $[I\ A^{-1}]$. If we want to row-reduce $A^{-1}$ back into I, we must apply the inverse operations in reverse order: $E_1^{-1}E_2^{-1}\cdots E_k^{-1}A^{-1} = I$. In other words, the same sequence can only be used to reduce A to I and I to $A^{-1}$, but not the reverse.
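A 2×2 sketch of Ex 3 in plain Python (the matrix and its two row operations are illustrative choices): the sequence that reduces A to I turns I into $A^{-1}$, undoing $A^{-1}$ requires the inverse operations with the last one undone first, and re-applying the original sequence to $A^{-1}$ does not give I here.

```python
def mat_mul(A, B):
    return [[sum(A[i][k] * B[k][j] for k in range(len(B)))
             for j in range(len(B[0]))] for i in range(len(A))]

I = [[1, 0], [0, 1]]
A = [[1, 2], [3, 7]]
E1 = [[1, 0], [-3, 1]]   # R2 <- R2 - 3 R1
E2 = [[1, -2], [0, 1]]   # R1 <- R1 - 2 R2

assert mat_mul(E2, mat_mul(E1, A)) == I   # E2 E1 A = I
A_inv = mat_mul(E2, E1)                   # E2 E1 I = A^{-1}

E1_inv = [[1, 0], [3, 1]]   # R2 <- R2 + 3 R1
E2_inv = [[1, 2], [0, 1]]   # R1 <- R1 + 2 R2
# Undo E2 first, then E1: E1^{-1} E2^{-1} A^{-1} = I
assert mat_mul(E1_inv, mat_mul(E2_inv, A_inv)) == I
# Re-using the original sequence on A^{-1} gives (A^{-1})^2, not I.
assert mat_mul(E2, mat_mul(E1, A_inv)) != I
print(A_inv)  # [[7, -2], [-3, 1]]
```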

Ex 4. When asked whether B = C given AB = AC, we must know whether A is invertible. If A is invertible, left-multiplying both sides by $A^{-1}$ gives B = C. Otherwise, B = C need not hold.
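A quick counterexample for the non-invertible case, sketched in plain Python with a hypothetical `mat_mul` helper: the singular matrix A below keeps the first row and wipes out the second, so B and C that differ only in their second row give AB = AC.

```python
def mat_mul(A, B):
    return [[sum(A[i][k] * B[k][j] for k in range(len(B)))
             for j in range(len(B[0]))] for i in range(len(A))]

A = [[1, 0], [0, 0]]   # singular: ad - bc = 0
B = [[1, 2], [3, 4]]
C = [[1, 2], [5, 6]]

print(mat_mul(A, B))   # [[1, 2], [0, 0]]
print(mat_mul(A, C))   # [[1, 2], [0, 0]]
assert mat_mul(A, B) == mat_mul(A, C) and B != C
```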

Ex 5. If AB and B are invertible, then A must be invertible. We first let C = AB, which is invertible by assumption. Then $A = ABB^{-1} = CB^{-1}$, so in the final step we get A equal to a product of invertible matrices. Hence A is invertible. ■

Ex 6. Recall that for an invertible n×n matrix A and any b in $\mathbb{R}^n$, there exists a unique solution to $A\mathbf{x} = \mathbf{b}$, namely $\mathbf{x} = A^{-1}\mathbf{b}$. Also recall that a sequence {E_i} transforming A to I transforms I to $A^{-1}$, and $A^{-1}$ is unique. This justifies the uniqueness of x. Since a solution exists for every b, the columns of A span $\mathbb{R}^n$; since it is unique, the columns are linearly independent. So we can conclude that for an invertible matrix, the columns of A span $\mathbb{R}^n$ and are linearly independent.

Ex 7. Be careful when row reducing [A I]: sometimes A turns out to be singular, in which case the left block cannot be reduced to I.

Ex 8. General concepts for an n×n matrix A

- If $A\mathbf{x} = \mathbf{0}$ has only the trivial solution, there are only basic variables, so all columns are pivot columns. Thus, the columns are linearly independent and A is row equivalent to the identity matrix.

- In other words, if an n×n matrix has n pivot positions, it is definitely invertible and $A\mathbf{x} = \mathbf{b}$ has exactly one solution for every b.

- Recall that if an n×n matrix A has another n×n matrix D such that AD = I, then there must also exist an n×n matrix C with CA = I. Specifically, C = D by the uniqueness of the inverse: C = CI = C(AD) = (CA)D = ID = D.

- The linear transformation $T: \mathbf{x} \mapsto A\mathbf{x}$ maps $\mathbb{R}^n$ onto $\mathbb{R}^n$, i.e. there is no change of dimension.

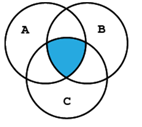

- When the columns of A are linearly independent, they span $\mathbb{R}^n$. Recall the definition of the linear span of a set of vectors in a vector space: the span is the intersection of ALL linear subspaces that contain every vector in the set. Or, schematically, where A, B and C are all vector spaces.

Thus, the n linearly independent columns of an n×n matrix form a basis of $\mathbb{R}^n$: all possible linear combinations of these vectors reconstruct the whole vector space $\mathbb{R}^n$, so they 'span' $\mathbb{R}^n$.

- By the theorem, if $A\mathbf{x} = \mathbf{b}$ has at least one solution for every b in $\mathbb{R}^n$, then the linear transformation is one-to-one. Note that for a general matrix, the former part of this statement alone only makes the mapping onto; it may still fail to be one-to-one if there are free variables. But for an n×n matrix with at least one solution to $A\mathbf{x} = \mathbf{b}$ for every b, there must be n pivot positions, according to the theorem. Thus, the mapping is one-to-one, as there can be no free variables anymore.
