Introducing Deep Reinforcement

The manuscript of Deep Reinforcement Learning is now available! It significantly improves on Deep Reinforcement Learning: An Overview, which has received 100+ citations, by extending the latest version, released more than a year ago, from 70 pages to 150 pages.

It draws a big picture of deep reinforcement learning (RL) with many details. It covers contemporary work in historical contexts. It endeavours to answer the following questions: 1) Why deep? 2) What is the state of the art? and 3) What are the issues and potential solutions? It attempts to help those who want to become more familiar with deep RL, and to serve as a reference for people interested in this fascinating area, such as professors, researchers, students, engineers, managers, and investors. Shortcomings and mistakes are inevitable; comments and criticisms are welcome.

The manuscript briefly introduces AI, machine learning, and deep learning, and provides a mini tutorial for reinforcement learning. The following figure illustrates the relationships among these concepts, with the major contents of machine learning and AI. Deep reinforcement learning is reinforcement learning integrated with deep learning, or deep artificial neural networks. A blog is dedicated to Resources for Deep Reinforcement Learning.

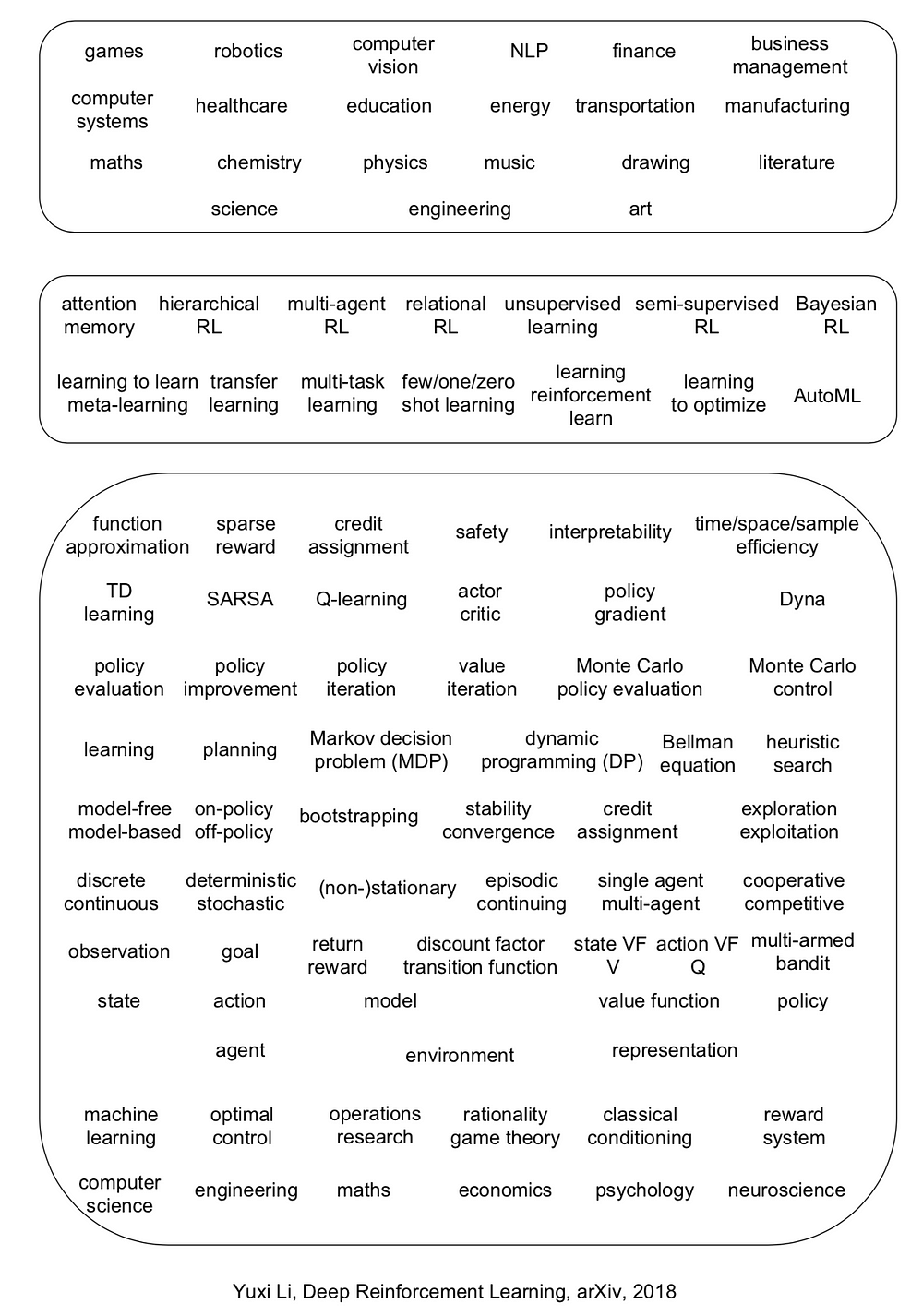

The manuscript covers six core elements: value function, policy, reward, model, exploration vs. exploitation, and representation; six important mechanisms: attention and memory, unsupervised learning, hierarchical RL, multi-agent RL, relational RL, and learning to learn; and twelve applications: games, robotics, natural language processing (NLP), computer vision, finance, business management, healthcare, education, energy, transportation, computer systems, and science, engineering, and art.

Deep reinforcement learning has made exceptional achievements, e.g., DQN applied to Atari games ignited this wave of deep RL, and AlphaGo (Zero) and DeepStack set landmarks for AI. Deep RL has many newly invented algorithms and architectures, e.g., DQN, A3C, TRPO, PPO, DDPG, Trust-PCL, GPS, UNREAL, and DNC. Moreover, deep RL enjoys abundant and diverse applications, e.g., Capture the Flag, Dota 2, StarCraft II, robotics, character animation, conversational AI, neural architecture design (AutoML), data center cooling, recommender systems, data augmentation, model compression, combinatorial optimization, program synthesis, theorem proving, medical imaging, music, and chemical retrosynthesis. A blog is dedicated to Reinforcement Learning applications.

In general, RL is probably helpful if a problem can be regarded as, or transformed into, a sequential decision making problem, and states, actions, and possibly rewards can be constructed; sometimes the problem may not appear to be an RL problem on the surface. Roughly speaking, if a task involves some manually designed “strategy”, then there is a chance for reinforcement learning to help. Creativity would push the frontiers of deep RL further with respect to core elements, important mechanisms, and applications.
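To make "states, actions, and rewards" concrete, here is a minimal sketch of casting a toy task as a sequential decision making problem and solving it with tabular Q-learning. Everything in it is an assumption made for illustration and does not come from the manuscript: the five-cell corridor, the names `step` and `q_learning`, and the hyperparameter values are all hypothetical. It uses a plain table rather than a deep network, so it shows the RL part only.

```python
import random

# Hypothetical toy task: a corridor of 5 cells. The agent starts in cell 0
# and receives reward 1.0 only when it reaches the last cell.
N_STATES = 5
ACTIONS = (-1, +1)  # move left, move right


def step(state, action):
    """Apply one move and return (next_state, reward, done)."""
    next_state = min(max(state + action, 0), N_STATES - 1)
    done = next_state == N_STATES - 1
    return next_state, (1.0 if done else 0.0), done


def q_learning(episodes=500, alpha=0.1, gamma=0.9, epsilon=0.2, seed=0):
    """Tabular Q-learning with epsilon-greedy exploration."""
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]  # q[state][action_index]
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            if rng.random() < epsilon:
                a = rng.randrange(len(ACTIONS))  # explore
            else:
                # exploit, breaking ties at random
                best = max(q[state])
                a = rng.choice([i for i, v in enumerate(q[state]) if v == best])
            next_state, reward, done = step(state, ACTIONS[a])
            # Bootstrapped target: no future value once the episode has ended
            target = reward + (0.0 if done else gamma * max(q[next_state]))
            q[state][a] += alpha * (target - q[state][a])
            state = next_state
    return q


q_table = q_learning()
# Greedy action for every non-terminal cell
greedy_policy = [ACTIONS[row.index(max(row))] for row in q_table[:-1]]
print(greedy_policy)
```

After training, the greedy policy should prefer moving right in every non-terminal cell. The point of the sketch is the framing: once a task is written as a `step` function over states, actions, and rewards, a generic learning rule can replace a hand-written strategy.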

Despite its success, deep RL encounters many issues, such as credit assignment, sparse reward, sample efficiency, instability, divergence, interpretability, and safety; even reproducibility is an issue.

Six research directions are proposed as both challenges and opportunities. There has already been some progress in these directions, e.g., Dopamine, TStarBots, MOREL, GQN, visual reasoning, neural-symbolic learning, UPN, causal InfoGAN, and meta-gradient RL, along with the many applications mentioned above.

- systematic, comparative study of deep RL algorithms

- “solve” multi-agent problems

- learn from entities, but not just raw inputs

- design an optimal representation for RL

- AutoRL

- develop killer applications for (deep) RL

It is desirable to integrate RL more deeply with AI: to bring more intelligence into the end-to-end mapping from raw inputs to decisions, to incorporate knowledge, to have common sense, to be more efficient, to be more interpretable, and to avoid obvious mistakes, rather than working as a black box.

Deep learning and reinforcement learning, selected as MIT Technology Review 10 Breakthrough Technologies in 2013 and 2017, respectively, will play crucial roles in achieving artificial general intelligence. David Silver proposed a conjecture: artificial intelligence = reinforcement learning + deep learning (AI = RL + DL). We will see both deep learning and reinforcement learning prosper in the coming years and beyond. Deep learning is exploding. It is the right time to nurture, educate, and lead the market for reinforcement learning.

Deep learning, in this third wave of AI, will have deeper influences, as we have already seen from its many achievements. Reinforcement learning, as a more general learning and decision making paradigm, will deeply influence deep learning, machine learning, and artificial intelligence in general.
