(Repost) The Evolved Transformer - Enhancing Transformer with Neural Architecture Search

2019-03-26 19:14:33

A new paper by Google Brain presents the first NAS to improve the Transformer, one of the leading architectures for many Natural Language Processing tasks. The paper uses an evolution-based algorithm, with a novel approach to speed up the search process, to mutate the Transformer architecture and discover a better one: the Evolved Transformer (ET). The new architecture performs better than the original Transformer, especially when comparing small mobile-friendly models, and requires less training time. The concepts presented in the paper, such as the use of NAS to evolve human-designed models, have the potential to help researchers improve their architectures in many other areas.

Background

Transformers, first suggested in 2017, introduced an attention mechanism that processes the entire text input simultaneously to learn contextual relations between words. A Transformer has two parts: an encoder that reads the text input and generates a latent representation of it (e.g. a vector for each word), and a decoder that produces the translated text from that representation. The design has proven to be very effective, and many of today’s state-of-the-art models (e.g. BERT, GPT-2) are based on Transformers. An in-depth review of Transformers can be found here.

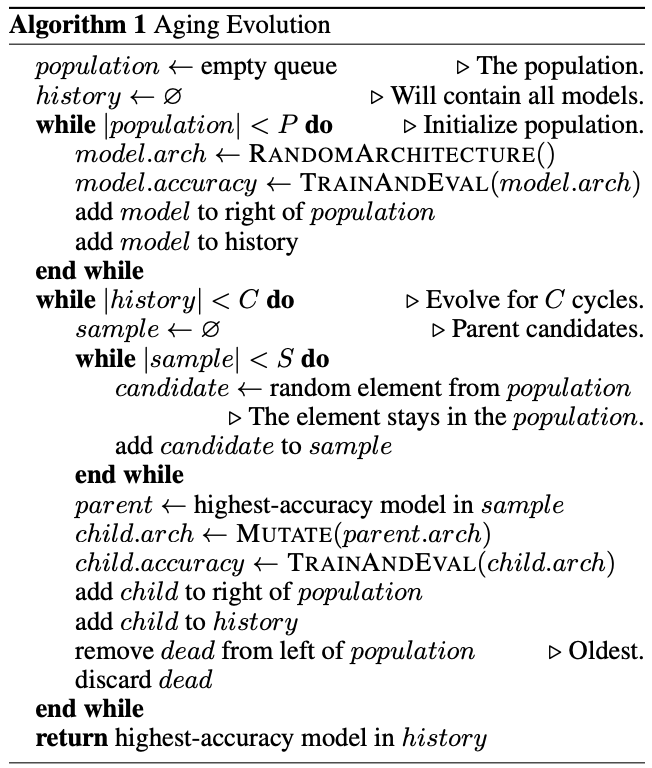

While the Transformer’s architecture was hand-crafted by talented researchers, an alternative is to use search algorithms. Their goal is to find the best architecture in a given search space, a space that defines the constraints of any model in it, such as the number of layers, the maximum number of parameters, etc. A well-known search algorithm is the evolution-based Tournament Selection, in which the fittest architectures survive and mutate while the weakest die. The advantage of this algorithm is that it is simple yet still efficient. The paper relies on a version presented in Real et al. (see pseudo-code in Appendix A):

- The first pool of models is initialized by randomly sampling the search space or by using a known model as a seed.

- These models are trained for the given task and randomly sampled to create a subpopulation.

- The best models are mutated by randomly changing a small part of their architecture, such as replacing a layer or changing the connection between two layers.

- The mutated models (child models) are added to the pool while the weakest model from the subpopulation is removed from the pool.
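The four steps above can be sketched in a few lines of Python. This is a minimal illustration and not the paper's code: the "architecture" is just a list of integers, `toy_fitness` stands in for training and evaluating a model, and `toy_mutate`, `TARGET`, the pool size and the sample size are all hypothetical choices made for this example.

```python
import random


def tournament_selection(seed, fitness, mutate, pool_size=20,
                         sample_size=5, rounds=300, rng=None):
    """Evolve `seed` and return (best_model, best_fitness)."""
    rng = rng or random.Random(0)
    # Step 1: initialize the pool from a known seed model plus mutated copies.
    pool = [seed] + [mutate(seed, rng) for _ in range(pool_size - 1)]
    # Step 2: "train" every model; fitness() stands in for real training.
    scores = [fitness(model) for model in pool]
    for _ in range(rounds):
        # Randomly sample a subpopulation (by index) from the pool.
        sample = rng.sample(range(len(pool)), sample_size)
        best = max(sample, key=lambda i: scores[i])
        worst = min(sample, key=lambda i: scores[i])
        # Step 3: mutate the best model of the subpopulation.
        child = mutate(pool[best], rng)
        child_score = fitness(child)
        # Step 4: the child joins the pool, the weakest sampled model leaves.
        pool[worst] = child
        scores[worst] = child_score
    winner = max(range(len(pool)), key=lambda i: scores[i])
    return pool[winner], scores[winner]


# Toy problem (hypothetical): find layer widths that match TARGET.
TARGET = [4, 8, 8, 4]


def toy_fitness(arch):
    # Higher is better; 0 means the architecture equals TARGET.
    return -sum(abs(a - t) for a, t in zip(arch, TARGET))


def toy_mutate(arch, rng):
    # Change one randomly chosen width by +1 or -1 (never below 1).
    child = list(arch)
    i = rng.randrange(len(child))
    child[i] = max(1, child[i] + rng.choice([-1, 1]))
    return child


seed_arch = [1, 1, 1, 1]
best_arch, best_score = tournament_selection(seed_arch, toy_fitness, toy_mutate)
print(best_arch, best_score)
```

Because only the weakest member of each sampled subpopulation is replaced, the best fitness in the pool never decreases from one round to the next in this sketch.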

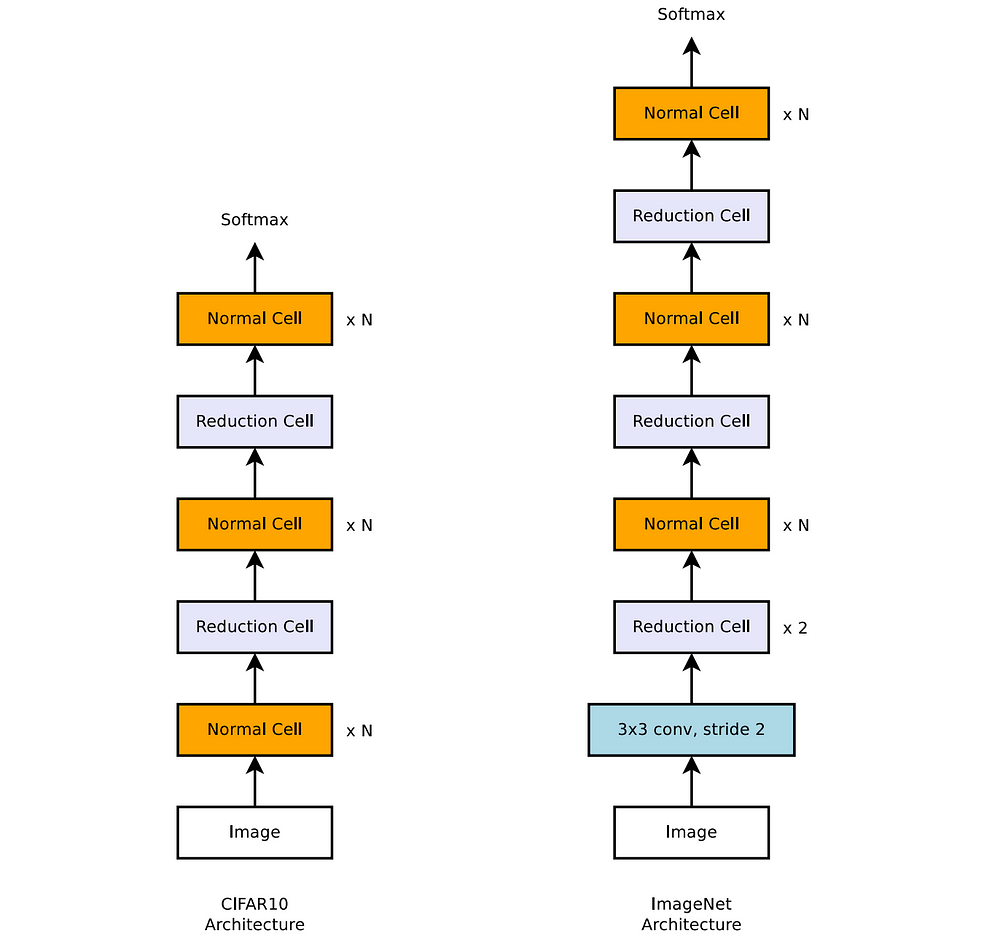

Defining the search space is an additional challenge when solving a search problem. If the space is too broad and undefined, the algorithm might not converge and find a better model in a reasonable amount of time. On the other hand, a space that is too narrow reduces the probability of finding an innovative model that outperforms the hand-crafted ones. The NASNet search architecture approaches this challenge by defining “stackable cells”. A cell can contain a set of operations on its input (e.g. convolution) from a predefined vocabulary and the model is built by stacking the same cell architecture several times. The goal of the search algorithm is only to find the best architecture of a cell.

An example of the NASNet search architecture for an image classification task, containing two types of stackable cells (Normal Cell and Reduction Cell). Source: Zoph et al.

How Evolved Transformer (ET) works

As the Transformer architecture has proven itself numerous times, the goal of the authors was to use a search algorithm to evolve it into an even better model. As a result, the model frame and the search space were designed to fit the original Transformer architecture in the following way:

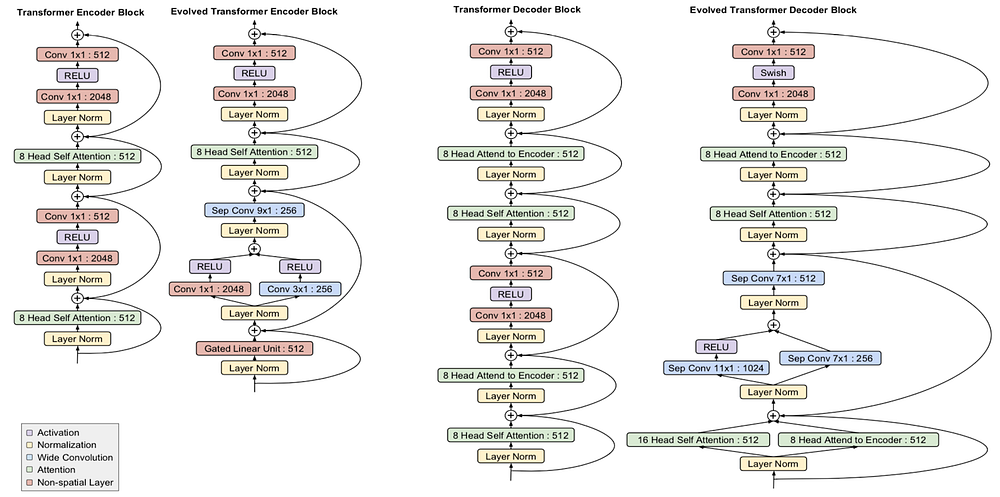

- The algorithm searches for two types of cells: one for the encoder, made up of six blocks, and another for the decoder, made up of eight blocks.

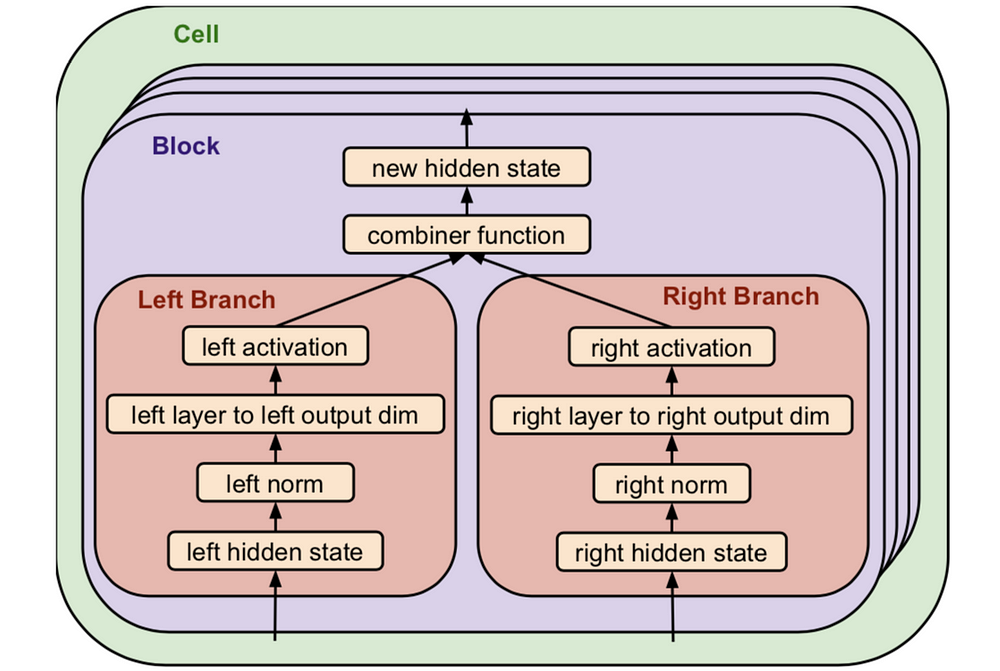

- Each block includes two branches of operations, as shown in the following chart. For example, the inputs can be any two outputs of the previous layers (blocks), a layer can be a standard convolution, an attention head (see Transformer), etc., and an activation can be ReLU or Leaky ReLU. Some elements can also be an identity operation or a dead end.

- Each cell can be repeated up to six times.

In total, the search space adds up to ~7.3 × 10^115 possible models. A detailed description of the space can be found in the appendix of the paper.
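To get a feeling for how a block-based search space grows so quickly, here is a small sketch of a cell built from two-branch blocks. It is only an illustration under stated assumptions: the vocabularies `LAYERS` and `ACTIVATIONS` are tiny hypothetical stand-ins for the paper's much larger ones, each branch has only three fields, and a block is assumed to read either the cell input or the output of an earlier block. The count it prints describes this toy space, not the real one.

```python
import random
from dataclasses import dataclass

# Illustrative vocabularies only (assumption); the real ones are far larger.
LAYERS = ["standard_conv", "attention_head", "identity", "dead_end"]
ACTIVATIONS = ["relu", "leaky_relu", "none"]


@dataclass(frozen=True)
class Branch:
    input_index: int  # 0 = cell input, k = output of block k-1
    layer: str
    activation: str


@dataclass(frozen=True)
class Block:
    left: Branch
    right: Branch


def sample_branch(num_inputs, rng):
    # Pick an input, a layer and an activation uniformly at random.
    return Branch(rng.randrange(num_inputs),
                  rng.choice(LAYERS),
                  rng.choice(ACTIVATIONS))


def sample_cell(num_blocks, rng):
    # Assumption: block b may read the cell input or any earlier block,
    # so it has b + 1 possible inputs per branch.
    return [Block(sample_branch(b + 1, rng), sample_branch(b + 1, rng))
            for b in range(num_blocks)]


def toy_space_size(num_blocks):
    # Number of distinct cells in this toy space: every branch is chosen
    # independently, and every block has two branches.
    size = 1
    for b in range(num_blocks):
        per_branch = (b + 1) * len(LAYERS) * len(ACTIVATIONS)
        size *= per_branch ** 2
    return size


rng = random.Random(0)
encoder_cell = sample_cell(6, rng)
decoder_cell = sample_cell(8, rng)
print(encoder_cell[0])
print("toy encoder x decoder combinations:",
      toy_space_size(6) * toy_space_size(8))
```

Even with four layer types and three activations the number of combinations is already huge, which is why the size of the real space makes exhaustive search impossible.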

ET Stackable Cell format. Source: ET

Progressive Dynamic Hurdles (PDH)

Searching the entire space might take too long if the training and evaluation of each model are prolonged. In the field of image classification it’s possible to overcome this problem by performing the search on a proxy task, such as training on a smaller dataset (e.g. CIFAR-10) before testing on a bigger dataset such as ImageNet. However, the authors couldn’t find an equivalent solution for translation models and therefore introduced an upgraded version of the tournament selection algorithm.

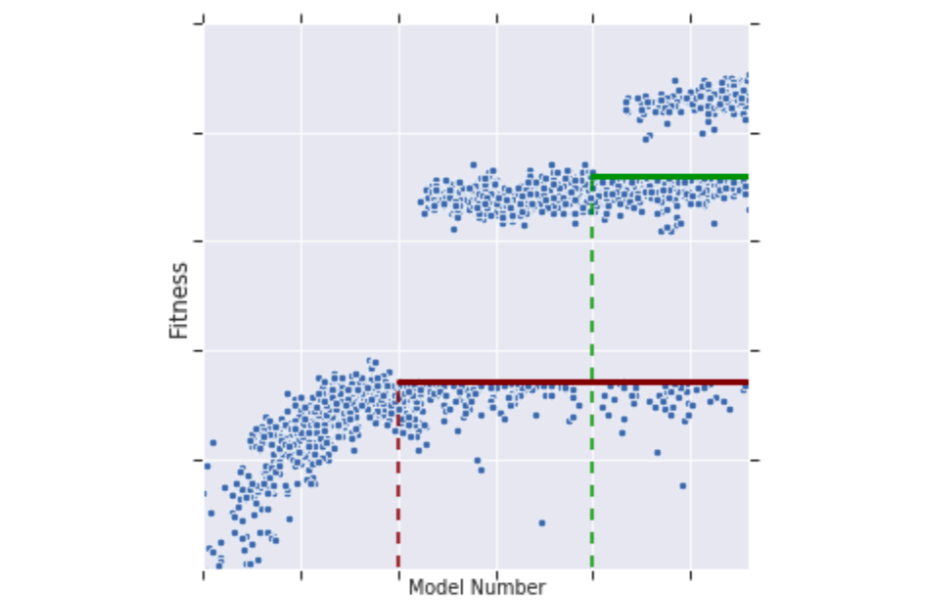

Instead of training each model in the pool on the entire dataset, a process that takes ~10 hours on a single TPU, the training is done gradually and only for the best models in the pool. The models in the pool are trained on a given number of samples and more models are created according to the original tournament selection algorithm. Once there are enough models in the pool, a “fitness” threshold is calculated and only the models with better results (fitness) continue to the next step. These models are trained on another batch of samples, and the next models are created and mutated based on them. As a result, PDH significantly reduces the training time spent on failing models and increases search efficiency. The downside is that “slow starters”, models that need more samples to achieve good results, might be missed.
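The hurdle mechanism described above can be sketched as follows. This is a simplified illustration, not the authors' implementation: `train_and_eval` is a hypothetical callback that returns a model's fitness after a given number of cumulative training steps, the learning curves are synthetic, and using the mean fitness of the pool as the threshold (`make_hurdle`) is an assumption of this sketch.

```python
import math
from statistics import mean


def evaluate_with_hurdles(train_and_eval, hurdles, step_increments):
    """Train in stages; stop as soon as the model fails a hurdle.

    Returns (fitness, total_training_steps_spent).
    """
    total_steps = 0
    fitness = float("-inf")
    for stage, extra_steps in enumerate(step_increments):
        total_steps += extra_steps
        fitness = train_and_eval(total_steps)
        # Stop if no hurdle exists yet for this stage, or the model fails it.
        if stage >= len(hurdles) or fitness <= hurdles[stage]:
            break
    return fitness, total_steps


def make_hurdle(pool_fitnesses):
    # Assumption of this sketch: the threshold is the mean pool fitness.
    return mean(pool_fitnesses)


def learning_curve(ceiling, rate):
    # Synthetic stand-in for real training: fitness saturates at `ceiling`.
    return lambda steps: ceiling * (1.0 - math.exp(-rate * steps))


models = {
    "weak": learning_curve(0.5, 0.05),
    "strong": learning_curve(0.9, 0.05),
    "slow_starter": learning_curve(1.0, 0.005),
}
increments = [30, 60, 90]

# First pass: no hurdles exist yet, so every model gets the first increment.
first_pass = {name: evaluate_with_hurdles(curve, [], increments)
              for name, curve in models.items()}
hurdles = [make_hurdle([fit for fit, _ in first_pass.values()])]

# Second pass: only models above the hurdle earn the next increment.
second_pass = {name: evaluate_with_hurdles(curve, hurdles, increments)
               for name, curve in models.items()}
for name, (fit, steps) in second_pass.items():
    print(f"{name}: fitness={fit:.3f} after {steps} steps")
```

In this toy run the slow starter has the highest ceiling of the three models but is stopped at the first hurdle, which is exactly the downside mentioned above.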

An example of the tournament selection training process. The models above the fitness threshold are trained on more samples and therefore reach better fitness. The fitness threshold increases in steps as new models are created. Source: ET

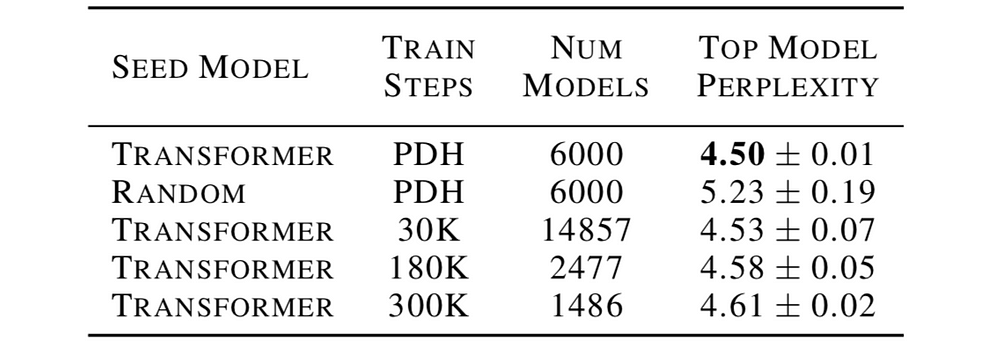

To “help” the search achieve high-quality results, the authors initialized the search with the Transformer model instead of a completely random model. This step is necessary due to computing resource constraints. The table below compares the performance of the best model (using the perplexity metric, the lower the better) for different search techniques: Transformer vs. random initialization, and PDH vs. regular tournament selection (with a given number of training steps per model).

Comparison of different search techniques. Source: ET

The authors kept the total training time of each technique fixed, and therefore the number of models differs: more training steps per model -> fewer models can be searched in total, and vice versa. The PDH technique achieves the best results on average while being more stable (low variance). When the number of training steps is reduced (30K), the regular technique performs almost as well on average as PDH. However, it suffers from a higher variance, as it’s more prone to mistakes in the search process.

Results

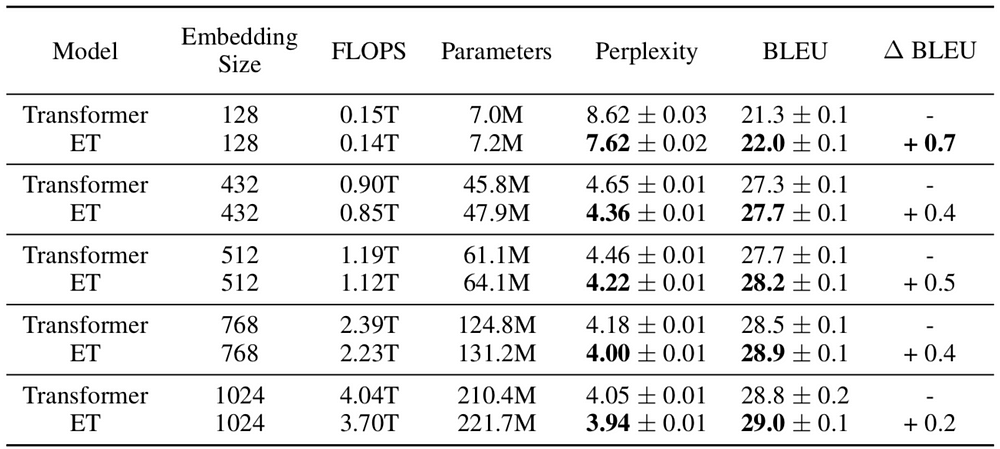

The paper uses the described search space and PDH to find a translation model that performs well on known datasets such as WMT’14. The search algorithm ran on 15,000 models using 270 TPUs for a total of almost 1 billion steps, while without PDH the total required steps would have been 3.6 billion. The best model found was named the Evolved Transformer (ET); it achieved better results than the original Transformer (perplexity of 3.95 vs 4.05) and required less training time. Its encoder and decoder block architectures are shown in the following chart (compared to the original ones).

Transformer and ET encoder and decoder architectures. Source: ET

While some of the ET components are similar to those of the original Transformer, others are less conventional, such as depth-wise separable convolutions, which are more parameter-efficient but less powerful than normal convolutions. Another interesting example is the use of parallel branches (e.g. two convolution and ReLU layers for the same input) in both the decoder and the encoder. The authors also found in an ablation study that the superior performance cannot be attributed to any single mutation of the ET compared to the Transformer.

Both ET and Transformer are heavy models with over 200 million parameters. Their size can be reduced by changing the input embedding (i.e. a word vector) size and the rest of the layers accordingly. Interestingly, the smaller the model the bigger the advantage of ET over Transformer. For example, for the smallest model with only 7 million parameters, ET outperforms Transformer by 1 perplexity point (7.62 vs 8.62).

Comparison of Transformer and ET for different model sizes (according to embedding size). FLOPS represents the training duration of the model. Source: ET

Implementation details

As mentioned, the search algorithm used over 200 of Google’s TPUs in order to train thousands of models in a reasonable time. The training of the final ET model itself is faster than that of the original Transformer but still takes hours with a single TPU on the WMT’14 En-De dataset.

The code is open-source and is available for TensorFlow here.

Conclusion

The Evolved Transformer shows the potential of combining hand-crafted architectures with neural architecture search to create models that are consistently better and faster to train. As computing resources are still limited (even for Google), researchers still need to carefully design the search space and improve the search algorithms to outperform human-designed models. However, this trend will most likely grow stronger over time.

Appendix A - Tournament Selection Algorithm

The paper is based on the tournament selection algorithm from Real et al., except for the aging process of discarding the oldest models from the population:
