Kettle: problems connecting to Hive over JDBC

The JDBC connection failed with the following error:

Error connecting to database [k] : org.pentaho.di.core.exception.KettleDatabaseException:

Error occurred while trying to connect to the database Error connecting to database: (using class org.apache.hive.jdbc.HiveDriver)

Failed to open new session: java.lang.RuntimeException: java.lang.RuntimeException: org.apache.hadoop.ipc.RemoteException(org.apache.hadoop.security.authorize.AuthorizationException): Unauthorized connection for super-user: hive from IP

org.pentaho.di.core.exception.KettleDatabaseException:

Error occurred while trying to connect to the database Error connecting to database: (using class org.apache.hive.jdbc.HiveDriver)

Failed to open new session: java.lang.RuntimeException: java.lang.RuntimeException: org.apache.hadoop.ipc.RemoteException(org.apache.hadoop.security.authorize.AuthorizationException): Unauthorized connection for super-user: hive from IP

at org.pentaho.di.core.database.Database.normalConnect(Database.java:466)

at org.pentaho.di.core.database.Database.connect(Database.java:364)

at org.pentaho.di.core.database.Database.connect(Database.java:335)

at org.pentaho.di.core.database.Database.connect(Database.java:325)

at org.pentaho.di.core.database.DatabaseFactory.getConnectionTestReport(DatabaseFactory.java:80)

at org.pentaho.di.core.database.DatabaseMeta.testConnection(DatabaseMeta.java:2734)

at org.pentaho.ui.database.event.DataHandler.testDatabaseConnection(DataHandler.java:591)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:57)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:606)

at org.pentaho.ui.xul.impl.AbstractXulDomContainer.invoke(AbstractXulDomContainer.java:313)

at org.pentaho.ui.xul.impl.AbstractXulComponent.invoke(AbstractXulComponent.java:157)

at org.pentaho.ui.xul.impl.AbstractXulComponent.invoke(AbstractXulComponent.java:141)

at org.pentaho.ui.xul.swt.tags.SwtButton.access$500(SwtButton.java:43)

at org.pentaho.ui.xul.swt.tags.SwtButton$4.widgetSelected(SwtButton.java:137)

at org.eclipse.swt.widgets.TypedListener.handleEvent(Unknown Source)

at org.eclipse.swt.widgets.EventTable.sendEvent(Unknown Source)

at org.eclipse.swt.widgets.Widget.sendEvent(Unknown Source)

at org.eclipse.swt.widgets.Display.runDeferredEvents(Unknown Source)

at org.eclipse.swt.widgets.Display.readAndDispatch(Unknown Source)

at org.eclipse.jface.window.Window.runEventLoop(Window.java:820)

at org.eclipse.jface.window.Window.open(Window.java:796)

at org.pentaho.di.ui.xul.KettleDialog.show(KettleDialog.java:88)

at org.pentaho.di.ui.xul.KettleDialog.show(KettleDialog.java:55)

at org.pentaho.di.ui.core.database.dialog.XulDatabaseDialog.open(XulDatabaseDialog.java:116)

at org.pentaho.di.ui.core.database.dialog.DatabaseDialog.open(DatabaseDialog.java:60)

at org.pentaho.di.ui.spoon.delegates.SpoonDBDelegate.newConnection(SpoonDBDelegate.java:474)

at org.pentaho.di.ui.spoon.delegates.SpoonDBDelegate.newConnection(SpoonDBDelegate.java:461)

at org.pentaho.di.ui.spoon.Spoon.doubleClickedInTree(Spoon.java:3059)

at org.pentaho.di.ui.spoon.Spoon.doubleClickedInTree(Spoon.java:3029)

at org.pentaho.di.ui.spoon.Spoon.access$2400(Spoon.java:356)

at org.pentaho.di.ui.spoon.Spoon$27.widgetDefaultSelected(Spoon.java:6109)

at org.eclipse.swt.widgets.TypedListener.handleEvent(Unknown Source)

at org.eclipse.swt.widgets.EventTable.sendEvent(Unknown Source)

at org.eclipse.swt.widgets.Widget.sendEvent(Unknown Source)

at org.eclipse.swt.widgets.Display.runDeferredEvents(Unknown Source)

at org.eclipse.swt.widgets.Display.readAndDispatch(Unknown Source)

at org.pentaho.di.ui.spoon.Spoon.readAndDispatch(Spoon.java:1347)

at org.pentaho.di.ui.spoon.Spoon.waitForDispose(Spoon.java:7989)

at org.pentaho.di.ui.spoon.Spoon.start(Spoon.java:9269)

at org.pentaho.di.ui.spoon.Spoon.main(Spoon.java:662)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:57)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:606)

at org.pentaho.commons.launcher.Launcher.main(Launcher.java:92)

Caused by: org.pentaho.di.core.exception.KettleDatabaseException:

Error connecting to database: (using class org.apache.hive.jdbc.HiveDriver)

Failed to open new session: java.lang.RuntimeException: java.lang.RuntimeException: org.apache.hadoop.ipc.RemoteException(org.apache.hadoop.security.authorize.AuthorizationException): Unauthorized connection for super-user: hive from IP

at org.pentaho.di.core.database.Database.connectUsingClass(Database.java:579)

at org.pentaho.di.core.database.Database.normalConnect(Database.java:450)

... 46 more

Caused by: org.apache.hive.service.cli.HiveSQLException: Failed to open new session: java.lang.RuntimeException: java.lang.RuntimeException: org.apache.hadoop.ipc.RemoteException(org.apache.hadoop.security.authorize.AuthorizationException): Unauthorized connection for super-user: hive from IP

at org.apache.hive.jdbc.Utils.verifySuccess(Utils.java:258)

at org.apache.hive.jdbc.Utils.verifySuccess(Utils.java:249)

at org.apache.hive.jdbc.HiveConnection.openSession(HiveConnection.java:565)

at org.apache.hive.jdbc.HiveConnection.<init>(HiveConnection.java:171)

at org.apache.hive.jdbc.HiveDriver.connect(HiveDriver.java:105)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:57)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:606)

at org.pentaho.hadoop.shim.common.DriverProxyInvocationChain$DriverInvocationHandler.invoke(DriverProxyInvocationChain.java:206)

at com.sun.proxy.$Proxy64.connect(Unknown Source)

at org.apache.hive.jdbc.HiveDriver$1.call(HiveDriver.java:153)

at org.apache.hive.jdbc.HiveDriver$1.call(HiveDriver.java:150)

at org.pentaho.hadoop.hive.jdbc.JDBCDriverCallable.callWithDriver(JDBCDriverCallable.java:57)

at org.apache.hive.jdbc.HiveDriver.callWithActiveDriver(HiveDriver.java:130)

at org.apache.hive.jdbc.HiveDriver.connect(HiveDriver.java:150)

at java.sql.DriverManager.getConnection(DriverManager.java:571)

at java.sql.DriverManager.getConnection(DriverManager.java:233)

at org.pentaho.di.core.database.Database.connectUsingClass(Database.java:565)

... 47 more

Caused by: org.apache.hive.service.cli.HiveSQLException: Failed to open new session: java.lang.RuntimeException: java.lang.RuntimeException: org.apache.hadoop.ipc.RemoteException(org.apache.hadoop.security.authorize.AuthorizationException): Unauthorized connection for super-user: hive from IP

at org.apache.hive.service.cli.session.SessionManager.openSession(SessionManager.java:266)

at org.apache.hive.service.cli.CLIService.openSessionWithImpersonation(CLIService.java:202)

at org.apache.hive.service.cli.thrift.ThriftCLIService.getSessionHandle(ThriftCLIService.java:402)

at org.apache.hive.service.cli.thrift.ThriftCLIService.OpenSession(ThriftCLIService.java:297)

at org.apache.hive.service.cli.thrift.TCLIService$Processor$OpenSession.getResult(TCLIService.java:1253)

at org.apache.hive.service.cli.thrift.TCLIService$Processor$OpenSession.getResult(TCLIService.java:1238)

at org.apache.thrift.ProcessFunction.process(ProcessFunction.java:39)

at org.apache.thrift.TBaseProcessor.process(TBaseProcessor.java:39)

at org.apache.hive.service.auth.TSetIpAddressProcessor.process(TSetIpAddressProcessor.java:56)

at org.apache.thrift.server.TThreadPoolServer$WorkerProcess.run(TThreadPoolServer.java:285)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1145)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:615)

at java.lang.Thread.run(Thread.java:745)

Caused by: java.lang.RuntimeException: java.lang.RuntimeException: org.apache.hadoop.ipc.RemoteException(org.apache.hadoop.security.authorize.AuthorizationException): Unauthorized connection for super-user: hive from IP

at org.apache.hive.service.cli.session.HiveSessionProxy.invoke(HiveSessionProxy.java:83)

at org.apache.hive.service.cli.session.HiveSessionProxy.access$000(HiveSessionProxy.java:36)

at org.apache.hive.service.cli.session.HiveSessionProxy$1.run(HiveSessionProxy.java:63)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:415)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1657)

at org.apache.hive.service.cli.session.HiveSessionProxy.invoke(HiveSessionProxy.java:59)

at com.sun.proxy.$Proxy25.open(Unknown Source)

at org.apache.hive.service.cli.session.SessionManager.openSession(SessionManager.java:258)

... 12 more

Caused by: java.lang.RuntimeException: org.apache.hadoop.ipc.RemoteException(org.apache.hadoop.security.authorize.AuthorizationException): Unauthorized connection for super-user: hive from IP

at org.apache.hadoop.hive.ql.session.SessionState.start(SessionState.java:494)

at org.apache.hive.service.cli.session.HiveSessionImpl.open(HiveSessionImpl.java:137)

at sun.reflect.GeneratedMethodAccessor11.invoke(Unknown Source)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:606)

at org.apache.hive.service.cli.session.HiveSessionProxy.invoke(HiveSessionProxy.java:78)

... 20 more

Caused by: java.lang.RuntimeException: org.apache.hadoop.ipc.RemoteException:Unauthorized connection for super-user: hive from IP

at org.apache.hadoop.ipc.Client.call(Client.java:1427)

at org.apache.hadoop.ipc.Client.call(Client.java:1358)

at org.apache.hadoop.ipc.ProtobufRpcEngine$Invoker.invoke(ProtobufRpcEngine.java:229)

at com.sun.proxy.$Proxy19.getFileInfo(Unknown Source)

at org.apache.hadoop.hdfs.protocolPB.ClientNamenodeProtocolTranslatorPB.getFileInfo(ClientNamenodeProtocolTranslatorPB.java:771)

at sun.reflect.GeneratedMethodAccessor7.invoke(Unknown Source)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:606)

at org.apache.hadoop.io.retry.RetryInvocationHandler.invokeMethod(RetryInvocationHandler.java:252)

at org.apache.hadoop.io.retry.RetryInvocationHandler.invoke(RetryInvocationHandler.java:104)

at com.sun.proxy.$Proxy20.getFileInfo(Unknown Source)

at org.apache.hadoop.hdfs.DFSClient.getFileInfo(DFSClient.java:2116)

at org.apache.hadoop.hdfs.DistributedFileSystem$22.doCall(DistributedFileSystem.java:1315)

at org.apache.hadoop.hdfs.DistributedFileSystem$22.doCall(DistributedFileSystem.java:1311)

at org.apache.hadoop.fs.FileSystemLinkResolver.resolve(FileSystemLinkResolver.java:81)

at org.apache.hadoop.hdfs.DistributedFileSystem.getFileStatus(DistributedFileSystem.java:1311)

at org.apache.hadoop.fs.FileSystem.exists(FileSystem.java:1424)

at org.apache.hadoop.hive.ql.session.SessionState.createRootHDFSDir(SessionState.java:568)

at org.apache.hadoop.hive.ql.session.SessionState.createSessionDirs(SessionState.java:526)

at org.apache.hadoop.hive.ql.session.SessionState.start(SessionState.java:480)

... 25 more

Hostname :

Port : 10000

Database name : default

hive is not allowed to impersonate hive

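Faced with the two errors above ("Unauthorized connection for super-user: hive" and "hive is not allowed to impersonate hive"), I first suspected that the Hive JDBC driver was missing, but it was there. The same official package, simply unpacked on a Power server, also worked without problems, whereas this machine is x86. After struggling with it for a long time, I finally found this page:

http://www-01.ibm.com/support/docview.wss?uid=swg21972388

Following it solved the problem.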

Cause:

cat /etc/hadoop/conf/core-site.xml

<property>

<name>hadoop.proxyuser.hive.groups</name>

<value>*</value>

</property>

<property>

<name>hadoop.proxyuser.hive.hosts</name>

<value>lh-2.novalocal</value>

</property>

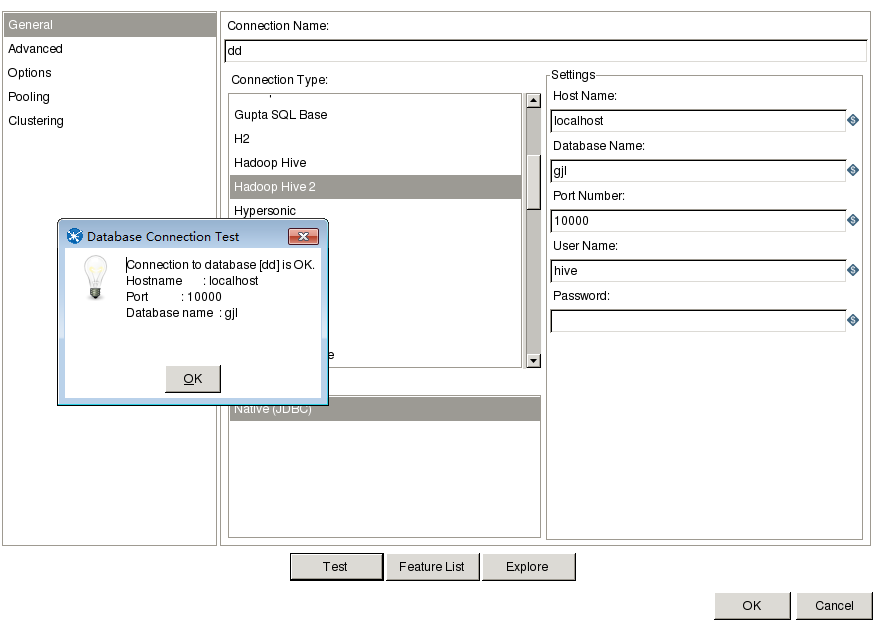

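It turned out that rebooting the cloud host had changed its hostname by appending the suffix .novalocal. This is an annoying trap that also bit me when I installed the cluster. hadoop.proxyuser.hive.hosts limits the hosts from which the hive user may impersonate other users, so a connection from a host that does not match the configured value is rejected. I simply changed lh-2.novalocal to * in Ambari.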
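A mismatch like the one described above can be spotted by comparing a host name against the configured proxy-user hosts. The following is a minimal Python sketch, not part of the original fix: the helper names `read_properties` and `host_allowed` are hypothetical, the sample XML mirrors the two properties shown above, and the check is deliberately simplified. It only compares names literally and honours `*`, whereas Hadoop itself also accepts IP addresses and CIDR ranges in this property.

```python
import xml.etree.ElementTree as ET

# Sample content mirroring the two properties quoted from core-site.xml.
SAMPLE_CORE_SITE = """<configuration>
  <property>
    <name>hadoop.proxyuser.hive.groups</name>
    <value>*</value>
  </property>
  <property>
    <name>hadoop.proxyuser.hive.hosts</name>
    <value>lh-2.novalocal</value>
  </property>
</configuration>"""


def read_properties(xml_text):
    """Return a dict of name -> value from Hadoop-style configuration XML."""
    root = ET.fromstring(xml_text)
    props = {}
    for prop in root.iter("property"):
        name = prop.findtext("name")
        if name is not None:
            props[name.strip()] = (prop.findtext("value") or "").strip()
    return props


def host_allowed(props, proxy_user, host):
    """Simplified check (assumption): literal, case-insensitive name match or '*'."""
    raw = props.get(f"hadoop.proxyuser.{proxy_user}.hosts", "")
    allowed = [h.strip().lower() for h in raw.split(",") if h.strip()]
    return "*" in allowed or host.lower() in allowed


props = read_properties(SAMPLE_CORE_SITE)
print(host_allowed(props, "hive", "lh-2.novalocal"))  # True
print(host_allowed(props, "hive", "lh-2"))            # False
```

On a real node, one could pass the contents of /etc/hadoop/conf/core-site.xml to `read_properties` and test the name returned by `socket.getfqdn()` on the HiveServer2 host.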

Then I reconnected.

Success!
