GPUImage API Documentation: The GPUImageOutput Class

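GPUImageOutput is the base class for GPUImage source objects. Source objects upload still image frames to OpenGL ES as textures, then hand those textures off to the next objects in the processing chain. Its subclasses can also return the filtered result as an image. [This article covers very little because many parts are still unclear to me; it will be updated later.]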

Methods

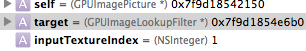

- (void)setInputFramebufferForTarget:(id<GPUImageInput>)target atIndex:(NSInteger)inputTextureIndex

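Description: Hands this object's output framebuffer to the given target as the target's input framebuffer at the specified texture index. It does not set a size.

Parameters: target is the receiving object, which conforms to the GPUImageInput protocol. For example, when using the GPUImageLookupFilter filter, target is a GPUImageLookupFilter instance. inputTextureIndex is the input slot on the target that receives the framebuffer.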

- (void)addTarget:(id<GPUImageInput>)newTarget atTextureLocation:(NSInteger)textureLocation

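Description: Adds a target at the given texture location so that it is notified when new frames are available. If the target has already been added, the call does nothing.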
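To illustrate when the explicit texture location matters, here is a minimal sketch that feeds a two-input GPUImageLookupFilter: the picture being filtered goes to location 0 and the lookup table to location 1. The helper name ApplyLookup, its parameter names, and the "GPUImage.h" import path are assumptions made for this example; a framework build would import <GPUImage/GPUImage.h> instead.

```objective-c
#import <UIKit/UIKit.h>
#import "GPUImage.h" // assumed import path; adjust to your project setup

// Hypothetical helper: applies a color lookup table to a photo.
static UIImage *ApplyLookup(UIImage *photo, UIImage *lookupTable)
{
    GPUImagePicture *photoSource = [[GPUImagePicture alloc] initWithImage:photo];
    GPUImagePicture *lookupSource = [[GPUImagePicture alloc] initWithImage:lookupTable];
    GPUImageLookupFilter *lookupFilter = [[GPUImageLookupFilter alloc] init];

    // Location 0 carries the image being filtered, location 1 the lookup table.
    [photoSource addTarget:lookupFilter atTextureLocation:0];
    [lookupSource addTarget:lookupFilter atTextureLocation:1];

    // Ask the filter to keep its next frame so it can be read back as an image.
    [lookupFilter useNextFrameForImageCapture];
    [photoSource processImage];
    [lookupSource processImage];

    return [lookupFilter imageFromCurrentFramebuffer];
}
```

For single-input filters the plain addTarget: is normally enough, because it asks the target for its next available texture index.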

- (void)removeTarget:(id<GPUImageInput>)targetToRemove

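Description: Removes the specified target. The target's input size and rotation are reset, and it no longer receives notifications when new frames are available.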

- (void)removeAllTargets

Description: Removes all targets.
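The add and remove methods are typically used together when a chain is rewired at runtime. The following is only a sketch: the function ReplaceFilter and its parameter names are hypothetical, and the "GPUImage.h" import path is an assumption about the project setup.

```objective-c
#import "GPUImage.h" // assumed import path; adjust to your project setup

// Hypothetical helper: swaps oldFilter for newFilter between a source and a display.
static void ReplaceFilter(GPUImageOutput *source,
                          GPUImageOutput<GPUImageInput> *oldFilter,
                          GPUImageOutput<GPUImageInput> *newFilter,
                          id<GPUImageInput> display)
{
    // Detach the old filter from the source, then drop everything it was feeding.
    [source removeTarget:oldFilter];
    [oldFilter removeAllTargets];

    // Rebuild the chain: source -> newFilter -> display.
    [source addTarget:newFilter];
    [newFilter addTarget:display];
}
```

Calling removeTarget: with an object that is not currently a target is harmless, since the method returns early in that case.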

- (UIImage *)imageFromCurrentFramebufferWithOrientation:(UIImageOrientation)imageOrientation

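Description: Reads the currently processed output back from the framebuffer and returns it as a UIImage. Nothing is drawn to the screen. Call useNextFrameForImageCapture before processing, otherwise the returned image is nil.

Parameters: imageOrientation is the orientation assigned to the returned UIImage. The variant without this parameter, imageFromCurrentFramebuffer, derives it from the device's current orientation.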
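As a sketch of the capture sequence, the function below filters a still image and reads the result back with an explicit orientation. The name CaptureSepia, the choice of GPUImageSepiaFilter, and the "GPUImage.h" import path are assumptions for illustration only.

```objective-c
#import <UIKit/UIKit.h>
#import "GPUImage.h" // assumed import path; adjust to your project setup

// Hypothetical helper: returns a sepia-toned copy of the input image.
static UIImage *CaptureSepia(UIImage *input)
{
    GPUImagePicture *source = [[GPUImagePicture alloc] initWithImage:input];
    GPUImageSepiaFilter *sepia = [[GPUImageSepiaFilter alloc] init];
    [source addTarget:sepia];

    // Must be requested before processing, or the capture below yields nil.
    [sepia useNextFrameForImageCapture];
    [source processImage];

    // Tag the result with the same orientation as the input image.
    return [sepia imageFromCurrentFramebufferWithOrientation:input.imageOrientation];
}
```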

- (CGImageRef)newCGImageByFilteringCGImage:(CGImageRef)imageToFilter

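Description: Runs the given still CGImage through this object and returns the filtered result as a new CGImageRef. Because the method name begins with "new", the caller owns the returned image and must release it with CGImageRelease.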
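A minimal sketch of the ownership handling follows, assuming the receiver is a filter (the base implementation casts self to GPUImageInput, so it needs an object that can act as a target). The helper name FilteredCopy and the "GPUImage.h" import path are assumptions for this example.

```objective-c
#import <UIKit/UIKit.h>
#import "GPUImage.h" // assumed import path; adjust to your project setup

// Hypothetical helper: filters a UIImage through any GPUImage filter.
static UIImage *FilteredCopy(UIImage *input, GPUImageOutput<GPUImageInput> *filter)
{
    CGImageRef filtered = [filter newCGImageByFilteringCGImage:input.CGImage];
    if (filtered == NULL)
    {
        return nil;
    }

    UIImage *result = [UIImage imageWithCGImage:filtered
                                          scale:input.scale
                                    orientation:input.imageOrientation];

    // The "new" prefix means we own the CGImageRef and must release it.
    CGImageRelease(filtered);
    return result;
}
```

This mirrors what imageByFilteringImage: does in the full source, so in UIKit code that convenience method is usually the simpler choice.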

Full source code

#import "GPUImageContext.h"

#import "GPUImageFramebuffer.h"

#if TARGET_IPHONE_SIMULATOR || TARGET_OS_IPHONE

#import <UIKit/UIKit.h>

#else

// For now, just redefine this on the Mac

typedef NS_ENUM(NSInteger, UIImageOrientation) {

UIImageOrientationUp, // default orientation

UIImageOrientationDown, // 180 deg rotation

UIImageOrientationLeft, // 90 deg CCW

UIImageOrientationRight, // 90 deg CW

UIImageOrientationUpMirrored, // as above but image mirrored along other axis. horizontal flip

UIImageOrientationDownMirrored, // horizontal flip

UIImageOrientationLeftMirrored, // vertical flip

UIImageOrientationRightMirrored, // vertical flip

};

#endif

void runOnMainQueueWithoutDeadlocking(void (^block)(void));

void runSynchronouslyOnVideoProcessingQueue(void (^block)(void));

void runAsynchronouslyOnVideoProcessingQueue(void (^block)(void));

void runSynchronouslyOnContextQueue(GPUImageContext *context, void (^block)(void));

void runAsynchronouslyOnContextQueue(GPUImageContext *context, void (^block)(void));

void reportAvailableMemoryForGPUImage(NSString *tag);

@class GPUImageMovieWriter;

/** GPUImage's base source object

Images or frames of video are uploaded from source objects, which are subclasses of GPUImageOutput. These include:

- GPUImageVideoCamera (for live video from an iOS camera)

- GPUImageStillCamera (for taking photos with the camera)

- GPUImagePicture (for still images)

- GPUImageMovie (for movies)

Source objects upload still image frames to OpenGL ES as textures, then hand those textures off to the next objects in the processing chain.

*/

@interface GPUImageOutput : NSObject

{

GPUImageFramebuffer *outputFramebuffer;

NSMutableArray *targets, *targetTextureIndices;

CGSize inputTextureSize, cachedMaximumOutputSize, forcedMaximumSize;

BOOL overrideInputSize;

BOOL allTargetsWantMonochromeData;

BOOL usingNextFrameForImageCapture;

}

@property(readwrite, nonatomic) BOOL shouldSmoothlyScaleOutput;

@property(readwrite, nonatomic) BOOL shouldIgnoreUpdatesToThisTarget;

@property(readwrite, nonatomic, retain) GPUImageMovieWriter *audioEncodingTarget;

@property(readwrite, nonatomic, unsafe_unretained) id<GPUImageInput> targetToIgnoreForUpdates;

@property(nonatomic, copy) void(^frameProcessingCompletionBlock)(GPUImageOutput*, CMTime);

@property(nonatomic) BOOL enabled;

@property(readwrite, nonatomic) GPUTextureOptions outputTextureOptions;

/// @name Managing targets

- (void)setInputFramebufferForTarget:(id<GPUImageInput>)target atIndex:(NSInteger)inputTextureIndex;

- (GPUImageFramebuffer *)framebufferForOutput;

- (void)removeOutputFramebuffer;

- (void)notifyTargetsAboutNewOutputTexture;

/** Returns an array of the current targets.

*/

- (NSArray*)targets;

/** Adds a target to receive notifications when new frames are available.

The target will be asked for its next available texture.

See [GPUImageInput newFrameReadyAtTime:]

@param newTarget Target to be added

*/

- (void)addTarget:(id<GPUImageInput>)newTarget;

/** Adds a target to receive notifications when new frames are available.

See [GPUImageInput newFrameReadyAtTime:]

@param newTarget Target to be added

*/

- (void)addTarget:(id<GPUImageInput>)newTarget atTextureLocation:(NSInteger)textureLocation;

/** Removes a target. The target will no longer receive notifications when new frames are available.

@param targetToRemove Target to be removed

*/

- (void)removeTarget:(id<GPUImageInput>)targetToRemove;

/** Removes all targets.

*/

- (void)removeAllTargets;

/// @name Manage the output texture

- (void)forceProcessingAtSize:(CGSize)frameSize;

- (void)forceProcessingAtSizeRespectingAspectRatio:(CGSize)frameSize;

/// @name Still image processing

- (void)useNextFrameForImageCapture;

- (CGImageRef)newCGImageFromCurrentlyProcessedOutput;

- (CGImageRef)newCGImageByFilteringCGImage:(CGImageRef)imageToFilter;

// Platform-specific image output methods

// If you're trying to use these methods, remember that you need to set -useNextFrameForImageCapture before running -processImage or running video and calling any of these methods, or you will get a nil image

#if TARGET_IPHONE_SIMULATOR || TARGET_OS_IPHONE

- (UIImage *)imageFromCurrentFramebuffer;

- (UIImage *)imageFromCurrentFramebufferWithOrientation:(UIImageOrientation)imageOrientation;

- (UIImage *)imageByFilteringImage:(UIImage *)imageToFilter;

- (CGImageRef)newCGImageByFilteringImage:(UIImage *)imageToFilter;

#else

- (NSImage *)imageFromCurrentFramebuffer;

- (NSImage *)imageFromCurrentFramebufferWithOrientation:(UIImageOrientation)imageOrientation;

- (NSImage *)imageByFilteringImage:(NSImage *)imageToFilter;

- (CGImageRef)newCGImageByFilteringImage:(NSImage *)imageToFilter;

#endif

- (BOOL)providesMonochromeOutput;

@end

#import "GPUImageOutput.h"

#import "GPUImageMovieWriter.h"

#import "GPUImagePicture.h"

#import <mach/mach.h>

void runOnMainQueueWithoutDeadlocking(void (^block)(void))

{

if ([NSThread isMainThread])

{

block();

}

else

{

dispatch_sync(dispatch_get_main_queue(), block);

}

}

void runSynchronouslyOnVideoProcessingQueue(void (^block)(void))

{

dispatch_queue_t videoProcessingQueue = [GPUImageContext sharedContextQueue];

#if !OS_OBJECT_USE_OBJC

#pragma clang diagnostic push

#pragma clang diagnostic ignored "-Wdeprecated-declarations"

if (dispatch_get_current_queue() == videoProcessingQueue)

#pragma clang diagnostic pop

#else

if (dispatch_get_specific([GPUImageContext contextKey]))

#endif

{

block();

}else

{

dispatch_sync(videoProcessingQueue, block);

}

}

void runAsynchronouslyOnVideoProcessingQueue(void (^block)(void))

{

dispatch_queue_t videoProcessingQueue = [GPUImageContext sharedContextQueue];

#if !OS_OBJECT_USE_OBJC

#pragma clang diagnostic push

#pragma clang diagnostic ignored "-Wdeprecated-declarations"

if (dispatch_get_current_queue() == videoProcessingQueue)

#pragma clang diagnostic pop

#else

if (dispatch_get_specific([GPUImageContext contextKey]))

#endif

{

block();

}else

{

dispatch_async(videoProcessingQueue, block);

}

}

void runSynchronouslyOnContextQueue(GPUImageContext *context, void (^block)(void))

{

dispatch_queue_t videoProcessingQueue = [context contextQueue];

#if !OS_OBJECT_USE_OBJC

#pragma clang diagnostic push

#pragma clang diagnostic ignored "-Wdeprecated-declarations"

if (dispatch_get_current_queue() == videoProcessingQueue)

#pragma clang diagnostic pop

#else

if (dispatch_get_specific([GPUImageContext contextKey]))

#endif

{

block();

}else

{

dispatch_sync(videoProcessingQueue, block);

}

}

void runAsynchronouslyOnContextQueue(GPUImageContext *context, void (^block)(void))

{

dispatch_queue_t videoProcessingQueue = [context contextQueue];

#if !OS_OBJECT_USE_OBJC

#pragma clang diagnostic push

#pragma clang diagnostic ignored "-Wdeprecated-declarations"

if (dispatch_get_current_queue() == videoProcessingQueue)

#pragma clang diagnostic pop

#else

if (dispatch_get_specific([GPUImageContext contextKey]))

#endif

{

block();

}else

{

dispatch_async(videoProcessingQueue, block);

}

}

void reportAvailableMemoryForGPUImage(NSString *tag)

{

if (!tag)

tag = @"Default";

struct task_basic_info info;

mach_msg_type_number_t size = sizeof(info);

kern_return_t kerr = task_info(mach_task_self(), TASK_BASIC_INFO, (task_info_t)&info, &size);

if( kerr == KERN_SUCCESS ) {

NSLog(@"%@ - Memory used: %u", tag, (unsigned int)info.resident_size); //in bytes

} else {

NSLog(@"%@ - Error: %s", tag, mach_error_string(kerr));

}

}

@implementation GPUImageOutput

@synthesize shouldSmoothlyScaleOutput = _shouldSmoothlyScaleOutput;

@synthesize shouldIgnoreUpdatesToThisTarget = _shouldIgnoreUpdatesToThisTarget;

@synthesize audioEncodingTarget = _audioEncodingTarget;

@synthesize targetToIgnoreForUpdates = _targetToIgnoreForUpdates;

@synthesize frameProcessingCompletionBlock = _frameProcessingCompletionBlock;

@synthesize enabled = _enabled;

@synthesize outputTextureOptions = _outputTextureOptions;

#pragma mark -

#pragma mark Initialization and teardown

- (id)init;

{

if (!(self = [super init]))

{

return nil;

}

targets = [[NSMutableArray alloc] init];

targetTextureIndices = [[NSMutableArray alloc] init];

_enabled = YES;

allTargetsWantMonochromeData = YES;

usingNextFrameForImageCapture = NO;

// set default texture options

_outputTextureOptions.minFilter = GL_LINEAR;

_outputTextureOptions.magFilter = GL_LINEAR;

_outputTextureOptions.wrapS = GL_CLAMP_TO_EDGE;

_outputTextureOptions.wrapT = GL_CLAMP_TO_EDGE;

_outputTextureOptions.internalFormat = GL_RGBA;

_outputTextureOptions.format = GL_BGRA;

_outputTextureOptions.type = GL_UNSIGNED_BYTE;

return self;

}

- (void)dealloc

{

[self removeAllTargets];

}

#pragma mark -

#pragma mark Managing targets

- (void)setInputFramebufferForTarget:(id<GPUImageInput>)target atIndex:(NSInteger)inputTextureIndex;

{

[target setInputFramebuffer:[self framebufferForOutput] atIndex:inputTextureIndex];

}

- (GPUImageFramebuffer *)framebufferForOutput;

{

return outputFramebuffer;

}

- (void)removeOutputFramebuffer;

{

outputFramebuffer = nil;

}

- (void)notifyTargetsAboutNewOutputTexture;

{

for (id<GPUImageInput> currentTarget in targets)

{

NSInteger indexOfObject = [targets indexOfObject:currentTarget];

NSInteger textureIndex = [[targetTextureIndices objectAtIndex:indexOfObject] integerValue];

[self setInputFramebufferForTarget:currentTarget atIndex:textureIndex];

}

}

- (NSArray*)targets;

{

return [NSArray arrayWithArray:targets];

}

- (void)addTarget:(id<GPUImageInput>)newTarget;

{

NSInteger nextAvailableTextureIndex = [newTarget nextAvailableTextureIndex];

[self addTarget:newTarget atTextureLocation:nextAvailableTextureIndex];

if ([newTarget shouldIgnoreUpdatesToThisTarget])

{

_targetToIgnoreForUpdates = newTarget;

}

}

- (void)addTarget:(id<GPUImageInput>)newTarget atTextureLocation:(NSInteger)textureLocation;

{

if([targets containsObject:newTarget])

{

return;

}

cachedMaximumOutputSize = CGSizeZero;

runSynchronouslyOnVideoProcessingQueue(^{

[self setInputFramebufferForTarget:newTarget atIndex:textureLocation];

[targets addObject:newTarget];

[targetTextureIndices addObject:[NSNumber numberWithInteger:textureLocation]];

allTargetsWantMonochromeData = allTargetsWantMonochromeData && [newTarget wantsMonochromeInput];

});

}

- (void)removeTarget:(id<GPUImageInput>)targetToRemove;

{

if(![targets containsObject:targetToRemove])

{

return;

}

if (_targetToIgnoreForUpdates == targetToRemove)

{

_targetToIgnoreForUpdates = nil;

}

cachedMaximumOutputSize = CGSizeZero;

NSInteger indexOfObject = [targets indexOfObject:targetToRemove];

NSInteger textureIndexOfTarget = [[targetTextureIndices objectAtIndex:indexOfObject] integerValue];

runSynchronouslyOnVideoProcessingQueue(^{

[targetToRemove setInputSize:CGSizeZero atIndex:textureIndexOfTarget];

[targetToRemove setInputRotation:kGPUImageNoRotation atIndex:textureIndexOfTarget];

[targetTextureIndices removeObjectAtIndex:indexOfObject];

[targets removeObject:targetToRemove];

[targetToRemove endProcessing];

});

}

- (void)removeAllTargets;

{

cachedMaximumOutputSize = CGSizeZero;

runSynchronouslyOnVideoProcessingQueue(^{

for (id<GPUImageInput> targetToRemove in targets)

{

NSInteger indexOfObject = [targets indexOfObject:targetToRemove];

NSInteger textureIndexOfTarget = [[targetTextureIndices objectAtIndex:indexOfObject] integerValue];

[targetToRemove setInputSize:CGSizeZero atIndex:textureIndexOfTarget];

[targetToRemove setInputRotation:kGPUImageNoRotation atIndex:textureIndexOfTarget];

}

[targets removeAllObjects];

[targetTextureIndices removeAllObjects];

allTargetsWantMonochromeData = YES;

});

}

#pragma mark -

#pragma mark Manage the output texture

- (void)forceProcessingAtSize:(CGSize)frameSize;

{

}

- (void)forceProcessingAtSizeRespectingAspectRatio:(CGSize)frameSize;

{

}

#pragma mark -

#pragma mark Still image processing

- (void)useNextFrameForImageCapture;

{

}

- (CGImageRef)newCGImageFromCurrentlyProcessedOutput;

{

return nil;

}

- (CGImageRef)newCGImageByFilteringCGImage:(CGImageRef)imageToFilter;

{

GPUImagePicture *stillImageSource = [[GPUImagePicture alloc] initWithCGImage:imageToFilter];

[self useNextFrameForImageCapture];

[stillImageSource addTarget:(id<GPUImageInput>)self];

[stillImageSource processImage];

CGImageRef processedImage = [self newCGImageFromCurrentlyProcessedOutput];

[stillImageSource removeTarget:(id<GPUImageInput>)self];

return processedImage;

}

- (BOOL)providesMonochromeOutput;

{

return NO;

}

#pragma mark -

#pragma mark Platform-specific image output methods

#if TARGET_IPHONE_SIMULATOR || TARGET_OS_IPHONE

- (UIImage *)imageFromCurrentFramebuffer;

{

UIDeviceOrientation deviceOrientation = [[UIDevice currentDevice] orientation];

UIImageOrientation imageOrientation = UIImageOrientationLeft;

switch (deviceOrientation)

{

case UIDeviceOrientationPortrait:

imageOrientation = UIImageOrientationUp;

break;

case UIDeviceOrientationPortraitUpsideDown:

imageOrientation = UIImageOrientationDown;

break;

case UIDeviceOrientationLandscapeLeft:

imageOrientation = UIImageOrientationLeft;

break;

case UIDeviceOrientationLandscapeRight:

imageOrientation = UIImageOrientationRight;

break;

default:

imageOrientation = UIImageOrientationUp;

break;

}

return [self imageFromCurrentFramebufferWithOrientation:imageOrientation];

}

- (UIImage *)imageFromCurrentFramebufferWithOrientation:(UIImageOrientation)imageOrientation;

{

CGImageRef cgImageFromBytes = [self newCGImageFromCurrentlyProcessedOutput];

UIImage *finalImage = [UIImage imageWithCGImage:cgImageFromBytes scale:1.0 orientation:imageOrientation];

CGImageRelease(cgImageFromBytes);

return finalImage;

}

- (UIImage *)imageByFilteringImage:(UIImage *)imageToFilter;

{

CGImageRef image = [self newCGImageByFilteringCGImage:[imageToFilter CGImage]];

UIImage *processedImage = [UIImage imageWithCGImage:image scale:[imageToFilter scale] orientation:[imageToFilter imageOrientation]];

CGImageRelease(image);

return processedImage;

}

- (CGImageRef)newCGImageByFilteringImage:(UIImage *)imageToFilter

{

return [self newCGImageByFilteringCGImage:[imageToFilter CGImage]];

}

#else

- (NSImage *)imageFromCurrentFramebuffer;

{

return [self imageFromCurrentFramebufferWithOrientation:UIImageOrientationLeft];

}

- (NSImage *)imageFromCurrentFramebufferWithOrientation:(UIImageOrientation)imageOrientation;

{

CGImageRef cgImageFromBytes = [self newCGImageFromCurrentlyProcessedOutput];

NSImage *finalImage = [[NSImage alloc] initWithCGImage:cgImageFromBytes size:NSZeroSize];

CGImageRelease(cgImageFromBytes);

return finalImage;

}

- (NSImage *)imageByFilteringImage:(NSImage *)imageToFilter;

{

CGImageRef image = [self newCGImageByFilteringCGImage:[imageToFilter CGImageForProposedRect:NULL context:[NSGraphicsContext currentContext] hints:nil]];

NSImage *processedImage = [[NSImage alloc] initWithCGImage:image size:NSZeroSize];

CGImageRelease(image);

return processedImage;

}

- (CGImageRef)newCGImageByFilteringImage:(NSImage *)imageToFilter

{

return [self newCGImageByFilteringCGImage:[imageToFilter CGImageForProposedRect:NULL context:[NSGraphicsContext currentContext] hints:nil]];

}

#endif

#pragma mark -

#pragma mark Accessors

- (void)setAudioEncodingTarget:(GPUImageMovieWriter *)newValue;

{

_audioEncodingTarget = newValue;

if( ! _audioEncodingTarget.hasAudioTrack )

{

_audioEncodingTarget.hasAudioTrack = YES;

}

}

- (void)setOutputTextureOptions:(GPUTextureOptions)outputTextureOptions

{

_outputTextureOptions = outputTextureOptions;

if( outputFramebuffer.texture )

{

glBindTexture(GL_TEXTURE_2D, outputFramebuffer.texture);

//_outputTextureOptions.format

//_outputTextureOptions.internalFormat

//_outputTextureOptions.magFilter

//_outputTextureOptions.minFilter

//_outputTextureOptions.type

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_WRAP_S, _outputTextureOptions.wrapS);

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_WRAP_T, _outputTextureOptions.wrapT);

glBindTexture(GL_TEXTURE_2D, 0);

}

}

@end
