python之scrapy模块下载中间件

知识点

使用方法:

编写一个Downloader Middlewares和我们编写一个pipeline一样,定义一个类,然后在setting中开启 Downloader Middlewares默认的方法:

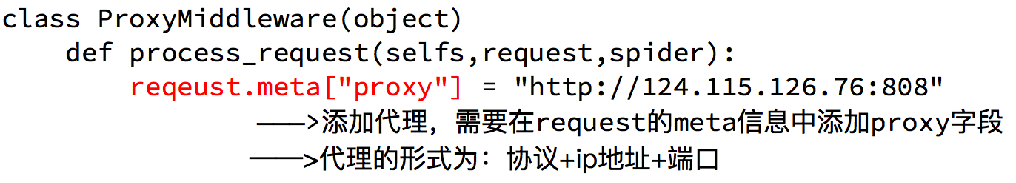

process_request(self, request, spider):

当每个request通过下载中间件时,该方法被调用。

process_response(self, request, response, spider):

当下载器完成http请求,传递响应给引擎的时候调用

1、学习官网网址

https://docs.scrapy.org/

2、settings文件,USER_AGENTS代理池

# -*- coding: utf-8 -*- # Scrapy settings for zjh project

#

# For simplicity, this file contains only settings considered important or

# commonly used. You can find more settings consulting the documentation:

#

# https://doc.scrapy.org/en/latest/topics/settings.html

# https://doc.scrapy.org/en/latest/topics/downloader-middleware.html

# https://doc.scrapy.org/en/latest/topics/spider-middleware.html BOT_NAME = 'zjh' SPIDER_MODULES = ['zjh.spiders']

NEWSPIDER_MODULE = 'zjh.spiders' LOG_LEVEL = "WARNING"

# Crawl responsibly by identifying yourself (and your website) on the user-agent

#USER_AGENT = 'zjh (+http://www.yourdomain.com)'

USER_AGENT = 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/74.0.3729.131 Safari/537.36'

# Obey robots.txt rules

ROBOTSTXT_OBEY = True # Configure maximum concurrent requests performed by Scrapy (default: 16)

#CONCURRENT_REQUESTS = 32 # Configure a delay for requests for the same website (default: 0)

# See https://doc.scrapy.org/en/latest/topics/settings.html#download-delay

# See also autothrottle settings and docs

#DOWNLOAD_DELAY = 3

# The download delay setting will honor only one of:

#CONCURRENT_REQUESTS_PER_DOMAIN = 16

#CONCURRENT_REQUESTS_PER_IP = 16 # Disable cookies (enabled by default)

#COOKIES_ENABLED = False # Disable Telnet Console (enabled by default)

#TELNETCONSOLE_ENABLED = False # Override the default request headers:

#DEFAULT_REQUEST_HEADERS = {

# 'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8',

# 'Accept-Language': 'en',

#} # Enable or disable spider middlewares

# See https://doc.scrapy.org/en/latest/topics/spider-middleware.html

#SPIDER_MIDDLEWARES = {

# 'zjh.middlewares.ZjhSpiderMiddleware': 543,

#} # Enable or disable downloader middlewares

# See https://doc.scrapy.org/en/latest/topics/downloader-middleware.html

#开启下载中间件

DOWNLOADER_MIDDLEWARES = {

'zjh.middlewares.RandomUserAgentMiddleware': 543,

'zjh.middlewares.CheckUserAgent': 544,

} # Enable or disable extensions

# See https://doc.scrapy.org/en/latest/topics/extensions.html

#EXTENSIONS = {

# 'scrapy.extensions.telnet.TelnetConsole': None,

#} # Configure item pipelines

# See https://doc.scrapy.org/en/latest/topics/item-pipeline.html

#ITEM_PIPELINES = {

# 'zjh.pipelines.ZjhPipeline': 300,

#} # Enable and configure the AutoThrottle extension (disabled by default)

# See https://doc.scrapy.org/en/latest/topics/autothrottle.html

#AUTOTHROTTLE_ENABLED = True

# The initial download delay

#AUTOTHROTTLE_START_DELAY = 5

# The maximum download delay to be set in case of high latencies

#AUTOTHROTTLE_MAX_DELAY = 60

# The average number of requests Scrapy should be sending in parallel to

# each remote server

#AUTOTHROTTLE_TARGET_CONCURRENCY = 1.0

# Enable showing throttling stats for every response received:

#AUTOTHROTTLE_DEBUG = False # Enable and configure HTTP caching (disabled by default)

# See https://doc.scrapy.org/en/latest/topics/downloader-middleware.html#httpcache-middleware-settings

#HTTPCACHE_ENABLED = True

#HTTPCACHE_EXPIRATION_SECS = 0

#HTTPCACHE_DIR = 'httpcache'

#HTTPCACHE_IGNORE_HTTP_CODES = []

#HTTPCACHE_STORAGE = 'scrapy.extensions.httpcache.FilesystemCacheStorage' USER_AGENTS = [ "Mozilla/5.0 (compatible; MSIE 9.0; Windows NT 6.1; Win64; x64; Trident/5.0; .NET CLR 3.5.30729; .NET CLR 3.0.30729; .NET CLR 2.0.50727; Media Center PC 6.0)", "Mozilla/5.0 (compatible; MSIE 8.0; Windows NT 6.0; Trident/4.0; WOW64; Trident/4.0; SLCC2; .NET CLR 2.0.50727; .NET CLR 3.5.30729; .NET CLR 3.0.30729; .NET CLR 1.0.3705; .NET CLR 1.1.4322)", "Mozilla/4.0 (compatible; MSIE 7.0b; Windows NT 5.2; .NET CLR 1.1.4322; .NET CLR 2.0.50727; InfoPath.2; .NET CLR 3.0.04506.30)", "Mozilla/5.0 (Windows; U; Windows NT 5.1; zh-CN) AppleWebKit/523.15 (KHTML, like Gecko, Safari/419.3) Arora/0.3 (Change: 287 c9dfb30)", "Mozilla/5.0 (X11; U; Linux; en-US) AppleWebKit/527+ (KHTML, like Gecko, Safari/419.3) Arora/0.6", "Mozilla/5.0 (Windows; U; Windows NT 5.1; en-US; rv:1.8.1.2pre) Gecko/20070215 K-Ninja/2.1.1", "Mozilla/5.0 (Windows; U; Windows NT 5.1; zh-CN; rv:1.9) Gecko/20080705 Firefox/3.0 Kapiko/3.0", "Mozilla/5.0 (X11; Linux i686; U;) Gecko/20070322 Kazehakase/0.4.5" ]

3、middleware.py处理代码池

# -*- coding: utf-8 -*- # Define here the models for your spider middleware

#

# See documentation in:

# https://doc.scrapy.org/en/latest/topics/spider-middleware.html from scrapy import signals import random

class RandomUserAgentMiddleware:

"""

下载中间件一般用于反爬虫,代理IP,自定义USER_AGENTS

"""

def process_request(self,request,spider):

ua = random.choice(spider.settings.get("USER_AGENTS"))

request.headers["User-Agent"] = ua class CheckUserAgent:

def process_response(self,request,response,spider):

print(dir(response.request))

print(request.headers["User-Agent"])

# return 必须有,表示响应经过引擎交给爬虫

return response

4、参考学习

a)代理UserAgent

b) 代理ip

python之scrapy模块下载中间件的更多相关文章

- python之poplib模块下载并解析邮件

# -*- coding: utf-8 -*- #python 27 #xiaodeng #python之poplib模块下载并解析邮件 #https://github.com/michaelliao ...

- Scrapy——5 下载中间件常用函数、scrapy怎么对接selenium、常用的Setting内置设置有哪些

Scrapy——5 下载中间件常用的函数 Scrapy怎样对接selenium 常用的setting内置设置 对接selenium实战 (Downloader Middleware)下载中间件常用函数 ...

- UA池 代理IP池 scrapy的下载中间件

# 一些概念 - 在scrapy中如何给所有的请求对象尽可能多的设置不一样的请求载体身份标识 - UA池,process_request(request) - 在scrapy中如何给发生异常的请求设置 ...

- Scrapy的下载中间件

下载中间件 简介 下载器,无法执行js代码,本身不支持代理 下载中间件用来hooks进Scrapy的request/response处理过程的框架,一个轻量级的底层系统,用来全局修改scrapy的re ...

- python之scrapy模块scrapy-redis使用

1.redis的使用,自己可以多学习下,个人也是在学习 https://www.cnblogs.com/ywjfx/p/10262662.html官网可以自己搜索下. 2.下载安装scrapy-red ...

- python使用requests模块下载文件并获取进度提示

一.概述 使用python3写了一个获取某网站文件的小脚本,使用了requests模块的get方法得到内容,然后通过文件读写的方式保存到硬盘同时需要实现下载进度的显示 二.代码实现 安装模块 pip3 ...

- python使用you-get模块下载视频

pip install you-get # 安装先 怎么用 进入命令行: you-get url 暂停下载:ctrl + c ,继续下载重复 you-get url 官网地址:https:// ...

- python 安装 Scrapy 模块

环境的安装总是让人多愁善感,爱恨交叉... 本人安装环境:win7 64 + python2.7 先来几个网站 https://doc.scrapy.org/en/latest/intro/insta ...

- Python使用requests模块下载图片

MySQL中事先保存好爬取到的图片链接地址. 然后使用多线程把图片下载到本地. # coding: utf-8 import MySQLdb import requests import os imp ...

随机推荐

- SIFT算法相关资料

SIFT算法相关资料 一.SIFT算法的教程.源码及应用软件1.ubc:DAVID LOWE---SIFT算法的创始人,两篇巨经典经典的文章http://www.cs.ubc.ca/~lowe/ 2. ...

- linux数码管驱动程序和应用程序

- pxc5.7 报错:WSREP_SST: [ERROR] xtrabackup_checkpoints missing

PXC 5.7 WSREP_SST: [ERROR] xtrabackup_checkpoints missing PXC5.7,在启动其中的一个节点,碰到了 [ERROR] xtrabackup_c ...

- day_03比特币转账的运行原理

在2008年全球经济危机中,中本聪想如果能构建一个没有中心机构的货币发行体系,货币就不会被无限发行,大家都很公平公正,于是中本聪构建了比特币这样一个体系: 一.非中心化下的比特币发行机制 比特币的发行 ...

- 4'.deploy.prototxt

1: 神经网络中,我们通过最小化神经网络来训练网络,所以在训练时最后一层是损失函数层(LOSS), 在测试时我们通过准确率来评价该网络的优劣,因此最后一层是准确率层(ACCURACY). 但是当我们真 ...

- 201777010217-金云馨《面向对象程序设计(java)》第十七周学习总结

项目 内容 这个作业属于哪个课程 https://www.cnblogs.com/nwnu-daizh/ 这个作业的要求在哪里 https://www.cnblogs.com/nwnu-daizh/p ...

- CF487E Tourists[圆方树+树剖(线段树套set)]

做这题的时候有点怂..基本已经想到正解了..结果感觉做法有点假,还是看了正解题解.. 首先提到简单路径上经过的点,就想到了一个关于点双的结论:两点间简单路径上所有可能经过的点的并等于路径上所有点所在点 ...

- Modbus教程| Modbus协议,ASCII和RTU帧,Modbus工作

转载自:https://www.rfwireless-world.com/Tutorials/Modbus-Protocol-tutorial.html 这个Modbus教程涵盖了modbus协议基础 ...

- HDU 6073 - Matching In Multiplication | 2017 Multi-University Training Contest 4

/* HDU 6073 - Matching In Multiplication [ 图论 ] | 2017 Multi-University Training Contest 4 题意: 定义一张二 ...

- 2、CString与string借助char *互转

CString是MFC中的类,MFC前端界面中获得的字符串是CString类.标准C/C++库函数是不能直接对CString类型进行操作的. string是C++中的类. 安全性 CString &g ...