Seeking advice: problems encountered while setting up and managing a Hadoop 2.4.1 cluster

Cluster plan:

| Hostname | IP | Installed software | Running processes |
|---|---|---|---|
| hadoop | 192.168.1.69 | jdk, hadoop | NameNode, DFSZKFailoverController (zkfc) |
| hadoop | 192.168.1.70 | jdk, hadoop | NameNode, DFSZKFailoverController (zkfc) |
| RM01 | 192.168.1.71 | jdk, hadoop | ResourceManager |
| RM02 | 192.168.1.72 | jdk, hadoop | ResourceManager |
| DN01 | 192.168.1.73 | jdk, hadoop, zookeeper | DataNode, NodeManager, JournalNode, QuorumPeerMain |
| DN02 | 192.168.1.74 | jdk, hadoop, zookeeper | DataNode, NodeManager, JournalNode, QuorumPeerMain |
| DN03 | 192.168.1.75 | jdk, hadoop, zookeeper | DataNode, NodeManager, JournalNode, QuorumPeerMain |

1.1 Change the hostname

[root@NM03 conf]# vim /etc/sysconfig/network

NETWORKING=yes

HOSTNAME=DN03
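The edit above can also be scripted when the same change has to be made on several nodes. The following is only a sketch: `set_hostname_line` is a hypothetical helper name, and it assumes the file uses the simple `KEY=value` layout shown in the listing.

```shell
# set_hostname_line FILE NAME (hypothetical helper): drop any existing
# HOSTNAME= line from FILE and add a single HOSTNAME=NAME line at the end.
set_hostname_line() {
    file=$1
    name=$2
    tmp=$(mktemp) || return 1
    # grep -v exits non-zero when it selects no lines, which is not an error here.
    grep -v '^HOSTNAME=' "$file" > "$tmp" || true
    echo "HOSTNAME=$name" >> "$tmp"
    # Write back through cat so the original file keeps its owner and mode.
    cat "$tmp" > "$file"
    rm -f "$tmp"
}
```

For example, `set_hostname_line /etc/sysconfig/network DN03` run as root. The file is read at boot, so running `hostname DN03` as well applies the name to the current session.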

1.2 Set a static IP address

[root@NM03 conf]# vim /etc/sysconfig/network-scripts/ifcfg-eth0

DEVICE=eth0

HWADDR=

TYPE=Ethernet

UUID=3304f091-1872-4c3b-8561-a70533743d88

ONBOOT=yes

NM_CONTROLLED=yes

BOOTPROTO=none

IPADDR=192.168.1.75

NETMASK=255.255.255.0

GATEWAY=192.168.1.1

DNS1=8.8.8.8

IPV6INIT=no

USERCTL=no
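Before restarting the network service it can help to confirm that the file really describes a static address. The sketch below assumes the key names used in the listing above (`BOOTPROTO`, `IPADDR`, `NETMASK`, `GATEWAY`); `ifcfg_get` and `check_static_ifcfg` are hypothetical helper names, not part of any distribution tooling.

```shell
# ifcfg_get FILE KEY: print the value of the last KEY= line in FILE.
ifcfg_get() {
    sed -n "s/^$2=//p" "$1" | tail -n 1
}

# check_static_ifcfg FILE: print "ok" when FILE looks like a static
# configuration, otherwise print the first problem found and return 1.
check_static_ifcfg() {
    file=$1
    proto=$(ifcfg_get "$file" BOOTPROTO)
    case $proto in
        none|static) ;;
        *) echo "BOOTPROTO is '$proto', expected none or static"; return 1 ;;
    esac
    for key in IPADDR NETMASK GATEWAY; do
        if [ -z "$(ifcfg_get "$file" "$key")" ]; then
            echo "$key is not set"
            return 1
        fi
    done
    echo "ok"
}
```

A possible call is `check_static_ifcfg /etc/sysconfig/network-scripts/ifcfg-eth0`, followed by `service network restart` once it prints `ok`.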

1.3 Edit /etc/hosts to map each internal IP address to its hostname

192.168.1.69 hadoop

192.168.1.70 hadoop

192.168.1.71 RM01

192.168.1.72 RM02

192.168.1.73 NM01

192.168.1.74 NM02

192.168.1.75 NM03
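In the mapping above the name `hadoop` appears for both 192.168.1.69 and 192.168.1.70, and a lookup of a duplicated name normally returns only the first match, so the two NameNodes may not be distinguishable by name. A small check for this kind of collision could look like the following sketch; `dup_hosts` is a hypothetical helper name.

```shell
# dup_hosts FILE: print every hostname in a hosts-format FILE that is
# mapped to more than one distinct IP address, one name per line.
dup_hosts() {
    awk '
        NF == 0 || $1 ~ /^#/ { next }          # skip blank and comment lines
        {
            for (i = 2; i <= NF; i++) {
                if ($i ~ /^#/) break           # ignore trailing comments
                key = $i SUBSEP $1
                if (!(key in seen)) { seen[key] = 1; count[$i]++ }
            }
        }
        END { for (h in count) if (count[h] > 1) print h }
    ' "$1" | sort
}
```

Running `dup_hosts /etc/hosts` on each node should print nothing when every hostname maps to exactly one address.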

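1.4 Disable the firewall, flush the firewall rules, and disable NetworkManager at boot (note: a minimal-install server does not ship NetworkManager by default, so nothing needs to be done there; if it is installed, turn it off)

[root@NM03 conf]# iptables -F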

[root@NM03 conf]# /etc/init.d/iptables save

iptables: Saving firewall rules to /etc/sysconfig/iptables:[ OK ]

[root@NM03 conf]# vim /etc/selinux/config

SELINUX=disabled #change this value to disabled
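The transcript above flushes and saves the rules and edits the SELinux config, but it shows no command for stopping the services or keeping them off at boot. On a SysV-init system such as the one implied by `/etc/init.d/iptables`, that is usually done with `service` and `chkconfig`. The sketch below makes that assumption; `run` and `disable_firewall_and_nm` are hypothetical helper names, and the default is a dry run that only prints the commands.

```shell
# run CMD...: print the command when DRY_RUN is 1 (the default),
# execute it when DRY_RUN is set to anything else.
run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

# disable_firewall_and_nm: stop iptables now, keep iptables and
# NetworkManager off at boot, and switch SELinux to permissive mode
# until the next reboot picks up SELINUX=disabled.
disable_firewall_and_nm() {
    run service iptables stop
    run chkconfig iptables off
    run chkconfig NetworkManager off
    run setenforce 0
}
```

Calling `disable_firewall_and_nm` prints the four commands; `DRY_RUN=0 disable_firewall_and_nm` as root executes them. The `chkconfig NetworkManager off` step can be dropped on hosts where NetworkManager is not installed.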

1.5 Add the hadoop group and user, and set up passwordless SSH login

[root@NM03 conf]# groupadd hadoop

[root@NM03 conf]# useradd -g hadoop DN03

[root@NM03 conf]# echo 123456 | passwd --stdin DN03

Changing password for user DN03.

passwd: all authentication tokens updated successfully.

[DN03@NM03 ~]$ vim /etc/ssh/sshd_config

DN03@192.168.1.74's password:

Permission denied (publickey,gssapi-keyex,gssapi-with-mic,password). #the error occurs here

Solution

[DN03@NM03 ~]$ vim /etc/ssh/sshd_config

HostKey /etc/ssh/ssh_host_rsa_key #find these entries and uncomment them to enable them

RSAAuthentication yes

PubkeyAuthentication yes

AuthorizedKeysFile .ssh/authorized_keys

[root@NM03 ~]# chown -R DN03:hadoop /home/DN03/.ssh/

[root@NM03 ~]# chown -R DN03:hadoop /home/DN03/

[root@NM03 ~]# chmod 700 /home/DN03/

[root@NM03 ~]# chmod 700 /home/DN03/.ssh/

[root@NM03 ~]# chmod 600 /home/DN03/.ssh/id_rsa

[root@NM03 ~]# touch /home/DN03/.ssh/authorized_keys

[root@NM03 ~]# chmod 644 !$

chmod 644 /home/DN03/.ssh/authorized_keys
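Public-key login is sensitive to the layout of `~/.ssh`: the directory is expected to be mode 700, the private key mode 600, and `authorized_keys` has to be a regular file rather than a directory. The following sketch checks those three points; `check_ssh_dir` is a hypothetical helper name, and the expected modes are the ones used in the steps above.

```shell
# check_ssh_dir DIR: print one line per problem found in an .ssh directory
# and return non-zero if there was any problem.
check_ssh_dir() {
    dir=$1
    status=0
    if [ ! -d "$dir" ]; then
        echo "missing directory: $dir"
        return 1
    fi
    # The first ten characters of ls -ld are the type and permission bits.
    if [ "$(ls -ld "$dir" | cut -c1-10)" != "drwx------" ]; then
        echo "expected mode 700 on $dir"
        status=1
    fi
    if [ ! -f "$dir/authorized_keys" ]; then
        echo "authorized_keys is not a regular file"
        status=1
    fi
    if [ -f "$dir/id_rsa" ] && [ "$(ls -l "$dir/id_rsa" | cut -c1-10)" != "-rw-------" ]; then
        echo "expected mode 600 on $dir/id_rsa"
        status=1
    fi
    return $status
}
```

For example, `check_ssh_dir /home/DN03/.ssh`. The file also needs to contain the public key of every host that should log in, which `ssh-copy-id` can append, and sshd has to be restarted (`service sshd restart`) for the edited sshd_config to take effect.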

Problem

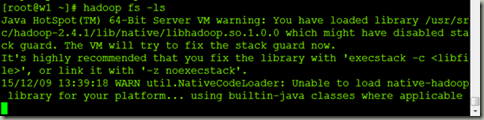

[root@w1 ~]# hadoop fs -put /etc/profile /profile

Java HotSpot(TM) 64-Bit Server VM warning: You have loaded library /usr/src/hadoop-2.4.1/lib/native/libhadoop.so.1.0.0 which might have disabled stack guard. The VM will try to fix the stack guard now.

It's highly recommended that you fix the library with 'execstack -c <libfile>', or link it with '-z noexecstack'.

15/12/09 13:37:40 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

15/12/09 13:40:08 WARN retry.RetryInvocationHandler: Exception while invoking class org.apache.hadoop.hdfs.protocolPB.ClientNamenodeProtocolTranslatorPB.getFileInfo over w2/192.168.1.75:9000. Not retrying because failovers (15) exceeded maximum allowed (15)

java.net.ConnectException: Call From w1/192.168.1.74 to w2:9000 failed on connection exception: java.net.ConnectException: Connection refused; For more details see: http://wiki.apache.org/hadoop/ConnectionRefused

at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)

at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62)

at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)

at java.lang.reflect.Constructor.newInstance(Constructor.java:422)

at org.apache.hadoop.net.NetUtils.wrapWithMessage(NetUtils.java:783)

at org.apache.hadoop.net.NetUtils.wrapException(NetUtils.java:730)

at org.apache.hadoop.ipc.Client.call(Client.java:1414)

at org.apache.hadoop.ipc.Client.call(Client.java:1363)

at org.apache.hadoop.ipc.ProtobufRpcEngine$Invoker.invoke(ProtobufRpcEngine.java:206)

at com.sun.proxy.$Proxy9.getFileInfo(Unknown Source)

at org.apache.hadoop.hdfs.protocolPB.ClientNamenodeProtocolTranslatorPB.getFileInfo(ClientNamenodeProtocolTranslatorPB.java:699)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:497)

at org.apache.hadoop.io.retry.RetryInvocationHandler.invokeMethod(RetryInvocationHandler.java:190)

at org.apache.hadoop.io.retry.RetryInvocationHandler.invoke(RetryInvocationHandler.java:103)

at com.sun.proxy.$Proxy10.getFileInfo(Unknown Source)

at org.apache.hadoop.hdfs.DFSClient.getFileInfo(DFSClient.java:1762)

at org.apache.hadoop.hdfs.DistributedFileSystem$17.doCall(DistributedFileSystem.java:1124)

at org.apache.hadoop.hdfs.DistributedFileSystem$17.doCall(DistributedFileSystem.java:1120)

at org.apache.hadoop.fs.FileSystemLinkResolver.resolve(FileSystemLinkResolver.java:81)

at org.apache.hadoop.hdfs.DistributedFileSystem.getFileStatus(DistributedFileSystem.java:1120)

at org.apache.hadoop.fs.Globber.getFileStatus(Globber.java:57)

at org.apache.hadoop.fs.Globber.glob(Globber.java:248)

at org.apache.hadoop.fs.FileSystem.globStatus(FileSystem.java:1623)

at org.apache.hadoop.fs.shell.PathData.expandAsGlob(PathData.java:326)

at org.apache.hadoop.fs.shell.CommandWithDestination.getRemoteDestination(CommandWithDestination.java:113)

at org.apache.hadoop.fs.shell.CopyCommands$Put.processOptions(CopyCommands.java:199)

at org.apache.hadoop.fs.shell.Command.run(Command.java:153)

at org.apache.hadoop.fs.FsShell.run(FsShell.java:255)

at org.apache.hadoop.util.ToolRunner.run(ToolRunner.java:70)

at org.apache.hadoop.util.ToolRunner.run(ToolRunner.java:84)

at org.apache.hadoop.fs.FsShell.main(FsShell.java:308)

Caused by: java.net.ConnectException: Connection refused

at sun.nio.ch.SocketChannelImpl.checkConnect(Native Method)

at sun.nio.ch.SocketChannelImpl.finishConnect(SocketChannelImpl.java:717)

at org.apache.hadoop.net.SocketIOWithTimeout.connect(SocketIOWithTimeout.java:206)

at org.apache.hadoop.net.NetUtils.connect(NetUtils.java:529)

at org.apache.hadoop.net.NetUtils.connect(NetUtils.java:493)

at org.apache.hadoop.ipc.Client$Connection.setupConnection(Client.java:604)

at org.apache.hadoop.ipc.Client$Connection.setupIOstreams(Client.java:699)

at org.apache.hadoop.ipc.Client$Connection.access$2800(Client.java:367)

at org.apache.hadoop.ipc.Client.getConnection(Client.java:1462)

at org.apache.hadoop.ipc.Client.call(Client.java:1381)

... 27 more

put: Call From w1/192.168.1.74 to w2:9000 failed on connection exception: java.net.ConnectException: Connection refused; For more details see: http://wiki.apache.org/hadoop/ConnectionRefused
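"Connection refused" means that nothing accepted the connection on the target host and port. In the log above the client w1 (192.168.1.74) calls w2 (192.168.1.75) on port 9000, and neither address is a NameNode in the cluster plan (192.168.1.69 and 192.168.1.70), which may mean the client configuration points at a host where no NameNode is listening. As a first diagnostic step, the target can be pulled out of the client output with a sketch like this one; `refused_targets` is a hypothetical helper name.

```shell
# refused_targets: read Hadoop client output on stdin and print each
# distinct host:port that a refused call was addressed to.
refused_targets() {
    sed -n 's/.*Call From [^ ]* to \([^ ]*\) failed on connection exception.*/\1/p' | sort -u
}
```

For example, `hadoop fs -put /etc/profile /profile 2>&1 | refused_targets`. On the host it prints, `jps` should list a NameNode process and `netstat -tlnp | grep 9000` should show a listener; `hdfs getconf -confKey fs.defaultFS` shows which address the client is configured to use.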
