[k8s] Automated installation of a k8s cluster with kubespray (ansible)


https://github.com/kubernetes-incubator/kubespray

https://kubernetes.io/docs/setup/pick-right-solution/

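kubespray is essentially a collection of ansible roles. With it you can install a highly available k8s cluster automatically through ansible. At the time of writing it supports k8s 1.9.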

After installation, all k8s components run in containers based on hyperkube.

CentOS 7 is the recommended OS.

Install docker. These are the startup flags kubespray adds to docker by default:

/usr/bin/dockerd

--insecure-registry=10.233.0.0/18

--graph=/var/lib/docker

--log-opt max-size=50m

--log-opt max-file=5

--iptables=false

--dns 10.233.0.3

--dns 114.114.114.114

--dns-search default.svc.cluster.local

--dns-search svc.cluster.local

--dns-opt ndots:2

--dns-opt timeout:2

--dns-opt attempts:2

- Plan: 3 masters (n1 n2 n3) and 2 nodes (n4 n5)

- hosts

192.168.2.11 n1.ma.com n1

192.168.2.12 n2.ma.com n2

192.168.2.13 n3.ma.com n3

192.168.2.14 n4.ma.com n4

192.168.2.15 n5.ma.com n5

192.168.2.16 n6.ma.com n6
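The hosts entries above are meant to go into /etc/hosts on every machine. Below is a minimal sketch for appending them without creating duplicates. add_host and add_cluster_hosts are hypothetical helpers written for this post, and the IP/name pattern simply follows the plan above.

```shell
#!/usr/bin/env bash
# Sketch: append the planned cluster hosts to a hosts file. An entry is
# skipped if its IP is already present, so the script can be re-run safely.

# add_host FILE IP NAME... -> appends "IP NAME..." unless IP is already listed
add_host() {
  local file=$1 ip=$2
  shift 2
  # The IP must be the first field of a line; commented lines never match.
  if awk -v ip="$ip" '$1 == ip { found = 1 } END { exit !found }' "$file"; then
    return 0
  fi
  printf '%s %s\n' "$ip" "$*" >> "$file"
}

# add_cluster_hosts FILE -> writes the n1..n6 plan used in this post
add_cluster_hosts() {
  local file=$1 i
  for i in 1 2 3 4 5 6; do
    add_host "$file" "192.168.2.1$i" "n$i.ma.com" "n$i"
  done
}
```

Run it as root on each machine, passing /etc/hosts as the argument.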

- Images referenced by the 1.9 ansible-playbook

gcr.io/google_containers/cluster-proportional-autoscaler-amd64:1.1.1

gcr.io/google_containers/pause-amd64:3.0

gcr.io/google_containers/k8s-dns-kube-dns-amd64:1.14.7

gcr.io/google_containers/k8s-dns-dnsmasq-nanny-amd64:1.14.7

gcr.io/google_containers/k8s-dns-sidecar-amd64:1.14.7

gcr.io/google_containers/elasticsearch:v2.4.1

gcr.io/google_containers/fluentd-elasticsearch:1.22

gcr.io/google_containers/kibana:v4.6.1

gcr.io/kubernetes-helm/tiller:v2.7.2

gcr.io/google_containers/kubernetes-dashboard-amd64:v1.7.1

gcr.io/google_containers/kubernetes-dashboard-init-amd64:v1.0.1

quay.io/l23network/k8s-netchecker-agent:v1.0

quay.io/l23network/k8s-netchecker-server:v1.0

quay.io/coreos/etcd:v3.2.4

quay.io/coreos/flannel:v0.9.1

quay.io/coreos/flannel-cni:v0.3.0

quay.io/calico/ctl:v1.6.1

quay.io/calico/node:v2.6.2

quay.io/calico/cni:v1.11.0

quay.io/calico/kube-controllers:v1.0.0

quay.io/calico/routereflector:v0.4.0

quay.io/coreos/hyperkube:v1.9.0_coreos.0

quay.io/ant31/kargo:master

quay.io/external_storage/local-volume-provisioner-bootstrap:v1.0.0

quay.io/external_storage/local-volume-provisioner:v1.0.0

- The same 1.9 images mirrored on Docker Hub

lanny/gcr.io_google_containers_cluster-proportional-autoscaler-amd64:1.1.1

lanny/gcr.io_google_containers_pause-amd64:3.0

lanny/gcr.io_google_containers_k8s-dns-kube-dns-amd64:1.14.7

lanny/gcr.io_google_containers_k8s-dns-dnsmasq-nanny-amd64:1.14.7

lanny/gcr.io_google_containers_k8s-dns-sidecar-amd64:1.14.7

lanny/gcr.io_google_containers_elasticsearch:v2.4.1

lanny/gcr.io_google_containers_fluentd-elasticsearch:1.22

lanny/gcr.io_google_containers_kibana:v4.6.1

lanny/gcr.io_kubernetes-helm_tiller:v2.7.2

lanny/gcr.io_google_containers_kubernetes-dashboard-init-amd64:v1.0.1

lanny/gcr.io_google_containers_kubernetes-dashboard-amd64:v1.7.1

lanny/quay.io_l23network_k8s-netchecker-agent:v1.0

lanny/quay.io_l23network_k8s-netchecker-server:v1.0

lanny/quay.io_coreos_etcd:v3.2.4

lanny/quay.io_coreos_flannel:v0.9.1

lanny/quay.io_coreos_flannel-cni:v0.3.0

lanny/quay.io_calico_ctl:v1.6.1

lanny/quay.io_calico_node:v2.6.2

lanny/quay.io_calico_cni:v1.11.0

lanny/quay.io_calico_kube-controllers:v1.0.0

lanny/quay.io_calico_routereflector:v0.4.0

lanny/quay.io_coreos_hyperkube:v1.9.0_coreos.0

lanny/quay.io_ant31_kargo:master

lanny/quay.io_external_storage_local-volume-provisioner-bootstrap:v1.0.0

lanny/quay.io_external_storage_local-volume-provisioner:v1.0.0
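The Docker Hub names follow a simple rule: prefix lanny/ and replace every / in the upstream name with _. Below is a sketch of that rule, plus a pull-and-retag loop that offers an alternative to editing the playbooks. mirror_name, pull_and_retag and MIRROR_USER are hypothetical. I have not verified whether kubespray then skips its own pull; that depends on its download settings.

```shell
#!/usr/bin/env bash
# Sketch: map an upstream image to its Docker Hub mirror name.
# Assumption: every mirror follows the pattern in the lists above,
# i.e. "lanny/" + upstream name with each "/" replaced by "_".

MIRROR_USER=${MIRROR_USER:-lanny}

# mirror_name IMAGE -> prints the mirror name
mirror_name() {
  local image=$1
  # ${var//\//_} replaces every "/" with "_" (bash pattern substitution)
  printf '%s/%s\n' "$MIRROR_USER" "${image//\//_}"
}

# pull_and_retag IMAGE... -> pulls each mirror and tags it with the upstream
# name so the image is present locally (needs a running docker daemon)
pull_and_retag() {
  local image mirror
  for image in "$@"; do
    mirror=$(mirror_name "$image")
    docker pull "$mirror" && docker tag "$mirror" "$image"
  done
}
```

For example, pull_and_retag gcr.io/google_containers/pause-amd64:3.0 pulls lanny/gcr.io_google_containers_pause-amd64:3.0 and tags it back as gcr.io/google_containers/pause-amd64:3.0.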

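- Configuration files

kubespray/inventory/group_vars/k8s-cluster.yml holds the basic cluster settings.

Change the network plugin to flannel here.

kubespray/inventory/group_vars/all.yml holds the more detailed settings.

Change the OS to centos here.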

- Replace flannel's vxlan backend with host-gw

roles/network_plugin/flannel/defaults/main.yml

You can find the setting with grep -r 'vxlan' .
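Below is a sketch of the edit itself. It assumes the backend is set on a line containing "backend", for example flannel_backend_type: "vxlan". The variable name may differ between kubespray versions, so check the grep output first. set_flannel_backend is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Sketch: switch the flannel backend from vxlan to host-gw in a defaults file.
# Assumption: the backend is set on a line whose text contains "backend"
# or "Backend"; other lines mentioning vxlan are left untouched.

# set_flannel_backend FILE [BACKEND] -> edits FILE in place (GNU sed)
set_flannel_backend() {
  local file=$1 backend=${2:-host-gw}
  sed -i -E "/[Bb]ackend/ s/vxlan/${backend}/" "$file"
}
```

host-gw routes pod traffic directly via the node IPs. It therefore only works when all nodes share one layer-2 network, as the 192.168.2.x hosts here appear to.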

- Change kube_api_pwd

vi roles/kubespray-defaults/defaults/main.yaml

kube_api_pwd: xxxx
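Instead of typing a value by hand, you can write a random password in place. This sketch assumes kube_api_pwd sits on its own line as kube_api_pwd: <value>. set_kube_api_pwd is a hypothetical helper; it prints the new password so you can keep it.

```shell
#!/usr/bin/env bash
# Sketch: replace the value of kube_api_pwd with a random password.
# Assumption: the key is on its own (possibly indented) line.

# set_kube_api_pwd FILE -> edits FILE in place and prints the new password
set_kube_api_pwd() {
  local file=$1 pass
  # 16 random alphanumeric characters from /dev/urandom
  pass=$(LC_ALL=C tr -dc 'A-Za-z0-9' < /dev/urandom | head -c 16)
  sed -i -E "s/^([[:space:]]*kube_api_pwd:).*/\1 \"${pass}\"/" "$file"
  printf '%s\n' "$pass"
}
```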

- Certificate validity period

kubespray/roles/kubernetes/secrets/files/make-ssl.sh
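The contents of make-ssl.sh are not shown here. Once you have changed the validity period and re-run the install, you can check the generated certificates with standard openssl x509 options (-enddate, -checkend). Below is a sketch; the helper names are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: inspect how long a PEM certificate is valid.

# cert_enddate CERT -> prints the notAfter date
cert_enddate() {
  openssl x509 -in "$1" -noout -enddate | cut -d= -f2
}

# cert_valid_for_days CERT DAYS -> exit 0 if CERT is still valid DAYS from now
cert_valid_for_days() {
  openssl x509 -in "$1" -noout -checkend $(( $2 * 86400 )) > /dev/null
}
```

For example: cert_enddate /etc/kubernetes/ssl/apiserver.pem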

- Point the images at a personal registry

You can find the references with grep -r 'gcr.io' .

sed -i 's#gcr\.io\/google_containers\/#lanny/gcr\.io_google_containers_#g' roles/download/defaults/main.yml

sed -i 's#gcr\.io\/google_containers\/#lanny/gcr\.io_google_containers_#g' roles/dnsmasq/templates/dnsmasq-autoscaler.yml.j2

sed -i 's#gcr\.io\/google_containers\/#lanny/gcr\.io_google_containers_#g' roles/kubernetes-apps/ansible/defaults/main.yml

sed -i 's#gcr\.io\/kubernetes-helm\/#lanny/gcr\.io_kubernetes-helm_#g' roles/download/defaults/main.yml

sed -i 's#quay\.io\/coreos\/#lanny/quay\.io_coreos_#g' roles/download/defaults/main.yml

sed -i 's#quay\.io\/calico\/#lanny/quay\.io_calico_#g' roles/download/defaults/main.yml

sed -i 's#quay\.io\/l23network\/#lanny/quay\.io_l23network_#g' roles/download/defaults/main.yml

sed -i 's#quay\.io\/l23network\/#lanny/quay\.io_l23network_#g' docs/netcheck.md

sed -i 's#quay\.io\/external_storage\/#lanny/quay\.io_external_storage_#g' roles/kubernetes-apps/local_volume_provisioner/defaults/main.yml

sed -i 's#quay\.io\/ant31\/kargo#lanny/quay\.io_ant31_kargo#g' .gitlab-ci.yml
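The per-organisation commands above can be collapsed into one GNU sed expression. This is a sketch, not what I ran. It rewrites any organisation under gcr.io and quay.io, which is broader than the list above. It also produces lanny/quay.io_ant31_kargo without a trailing underscore. rewrite_images, list_remaining and MIRROR_USER are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: "gcr.io/ORG/" or "quay.io/ORG/" -> "lanny/gcr.io_ORG_" / "lanny/quay.io_ORG_".
# The first group requires a non-alphanumeric, non-dot character (or line
# start) before the registry, so names such as k8s.gcr.io are not touched.
# Re-running is harmless: rewritten names no longer contain "gcr.io/" or "quay.io/".

MIRROR_USER=${MIRROR_USER:-lanny}

# rewrite_images FILE... -> edits the files in place
rewrite_images() {
  sed -i -E "s#(^|[^.[:alnum:]])(gcr\.io|quay\.io)/([^/\"' ]+)/#\1${MIRROR_USER}/\2_\3_#g" "$@"
}

# list_remaining FILE... -> prints lines that still reference the upstream registries
list_remaining() {
  grep -nE '(gcr|quay)\.io/' "$@"
}
```

For example, run rewrite_images roles/download/defaults/main.yml, then run list_remaining on the same files to see what is left.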

- Images on the hosts after installation (with the calico network plugin)

nginx:1.13

quay.io/coreos/hyperkube:v1.9.0_coreos.0

gcr.io/google_containers/k8s-dns-sidecar-amd64:1.14.7

gcr.io/google_containers/k8s-dns-kube-dns-amd64:1.14.7

gcr.io/google_containers/k8s-dns-dnsmasq-nanny-amd64:1.14.7

quay.io/calico/node:v2.6.2

gcr.io/google_containers/kubernetes-dashboard-init-amd64:v1.0.1

quay.io/calico/cni:v1.11.0

gcr.io/google_containers/kubernetes-dashboard-amd64:v1.7.1

quay.io/calico/ctl:v1.6.1

quay.io/calico/routereflector:v0.4.0

quay.io/coreos/etcd:v3.2.4

gcr.io/google_containers/cluster-proportional-autoscaler-amd64:1.1.1

gcr.io/google_containers/pause-amd64:3.0

- Environment kubespray needs to generate its configuration (python3, ansible): Ansible v2.4 (or newer), Jinja 2.9 (or newer)

yum install python34 python34-pip python-pip python-netaddr -y

cd

mkdir .pip

cd .pip

cat > pip.conf <<EOF

[global]

index-url = http://mirrors.aliyun.com/pypi/simple/

[install]

trusted-host=mirrors.aliyun.com

EOF

yum install gcc libffi-devel python-devel openssl-devel -y

pip install Jinja2-2.10-py2.py3-none-any.whl # https://pypi.python.org/pypi/Jinja2

pip install cryptography

pip install ansible
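Before running the playbook, you can confirm the control machine meets the minimums stated above (Ansible 2.4+, Jinja 2.9+). The sketch below compares versions with GNU sort -V. It assumes the python on PATH is the interpreter pip installed Jinja2 into. The helper names are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: check tool versions against the stated minimums.

# version_ge A B -> exit 0 if version A >= version B (GNU sort -V ordering)
version_ge() {
  [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)" = "$2" ]
}

# check_requirements -> prints the versions, returns 1 if one is too old
check_requirements() {
  local ansible_v jinja_v
  # First version-looking token on the first line of "ansible --version"
  ansible_v=$(ansible --version | head -n 1 | grep -oE '[0-9]+(\.[0-9]+)+' | head -n 1)
  jinja_v=$(python -c 'import jinja2; print(jinja2.__version__)')
  version_ge "$ansible_v" 2.4 || { echo "ansible $ansible_v < 2.4" >&2; return 1; }
  version_ge "$jinja_v" 2.9 || { echo "Jinja2 $jinja_v < 2.9" >&2; return 1; }
  echo "ansible $ansible_v, Jinja2 $jinja_v: OK"
}
```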

- Clone

git clone https://github.com/kubernetes-incubator/kubespray.git

- Modify the configuration

1. Set the OS, and let docker pull the gcr images through an HTTP proxy

vim inventory/group_vars/all.yml

bootstrap_os: centos

http_proxy: "http://192.168.1.88:1080/"

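2. This step is only needed if a VM has about 1G of memory or less; with 3G or more, leave the checks alone. The tasks stop the play when memory is too small, so for small VMs I flipped the comparison to <= (as below). A flipped check fails on machines above the threshold. Setting ignore_assert_errors: true, which feeds the ignore_errors lines below, skips the asserts without editing them.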

vim roles/kubernetes/preinstall/tasks/verify-settings.yml

- name: Stop if memory is too small for masters

  assert:

    that: ansible_memtotal_mb <= 1500

  ignore_errors: "{{ ignore_assert_errors }}"

  when: inventory_hostname in groups['kube-master']

- name: Stop if memory is too small for nodes

  assert:

    that: ansible_memtotal_mb <= 1024

  ignore_errors: "{{ ignore_assert_errors }}"

3. Swap: this task stops the play when swap is enabled and kubelet_fail_swap_on is true.

vim roles/download/tasks/download_container.yml

- name: Stop if swap enabled

  assert:

    that: ansible_swaptotal_mb == 0

  when: kubelet_fail_swap_on|default(false)

Run on every machine to turn swap off:

swapoff -a

[root@n1 kubespray]# free -m

total used free shared buff/cache available

Mem: 2796 297 1861 8 637 2206

Swap: 0 0 0 # all zeros here means swap is off

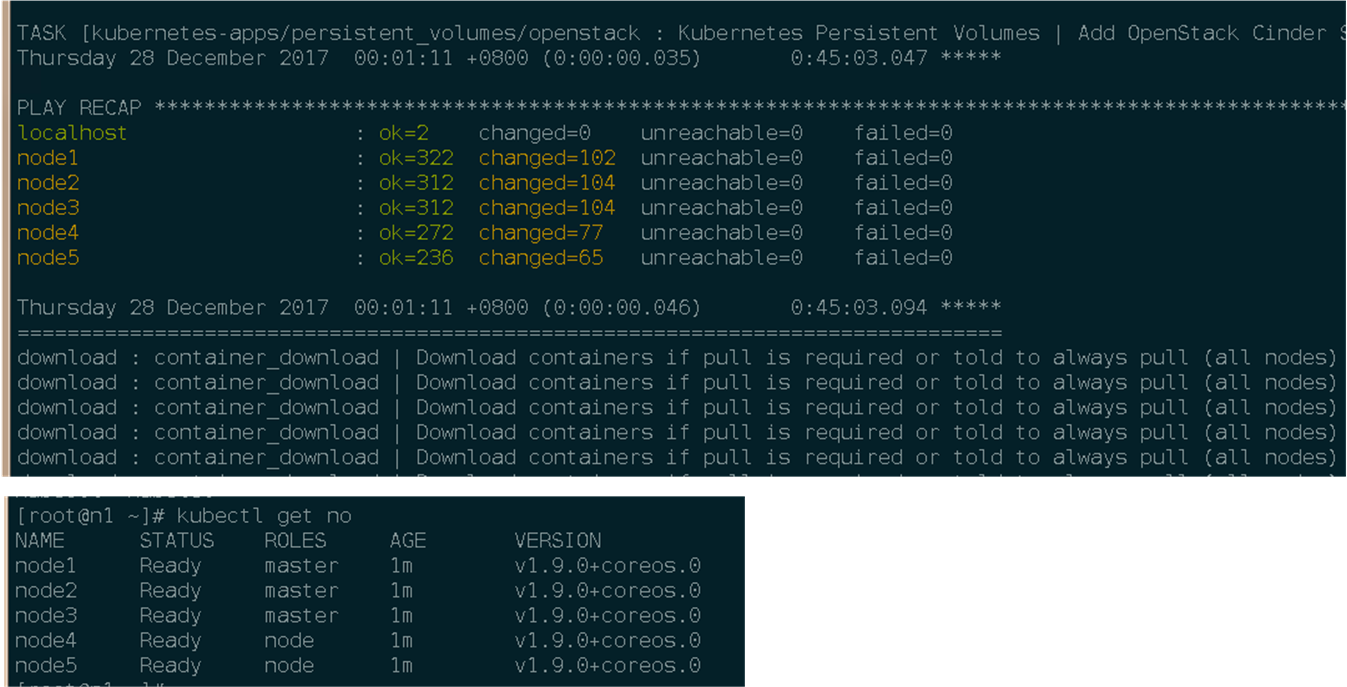
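You can also run the memory and swap checks by hand on each machine before starting the playbook. Below is a sketch with hypothetical helpers. The thresholds, 1500 MB for masters and 1024 MB for nodes, come from the asserts above. They are applied in the direction the task names state ("stop if memory is too small"). The MB figure is MemTotal from /proc/meminfo divided by 1024. swapoff -a does not survive a reboot, so comment_out_swap also comments the swap entries out of fstab.

```shell
#!/usr/bin/env bash
# Sketch: pre-flight checks mirroring the kubespray asserts.

# mem_total_mb [MEMINFO] -> total memory in MB (MemTotal kB / 1024)
mem_total_mb() {
  awk '/^MemTotal:/ { print int($2 / 1024) }' "${1:-/proc/meminfo}"
}

# swap_total_kb [MEMINFO] -> 0 when swap is off
swap_total_kb() {
  awk '/^SwapTotal:/ { print $2 }' "${1:-/proc/meminfo}"
}

# check_node ROLE [MEMINFO] -> ROLE is "master" or "node"; returns 1 on failure
check_node() {
  local role=$1 info=${2:-/proc/meminfo} min=1024 mem swap
  [ "$role" = master ] && min=1500
  mem=$(mem_total_mb "$info")
  swap=$(swap_total_kb "$info")
  if [ "$mem" -lt "$min" ]; then
    echo "memory ${mem}MB < ${min}MB for $role" >&2
    return 1
  fi
  if [ "$swap" -ne 0 ]; then
    echo "swap is on (${swap} kB); run swapoff -a" >&2
    return 1
  fi
}

# comment_out_swap FSTAB -> prefixes active swap entries with "#" (GNU sed)
comment_out_swap() {
  sed -i -E 's/^([^#].*[[:space:]]swap[[:space:]].*)$/#\1/' "$1"
}
```

On a real host: check_node master && comment_out_swap /etc/fstab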

- Generate the kubespray inventory and start the ansible install of k8s (this takes a long time: from about 20 min up to 1 h)

cd kubespray

IPS=(192.168.2.11 192.168.2.12 192.168.2.13 192.168.2.14 192.168.2.15)

CONFIG_FILE=inventory/inventory.cfg python3 contrib/inventory_builder/inventory.py ${IPS[@]}

ansible-playbook -i inventory/inventory.cfg cluster.yml -b -v --private-key=~/.ssh/id_rsa

- My modified configuration

git clone https://github.com/lannyMa/kubespray

run.sh contains the launch command.

- My inventory.cfg: I didn't want nodes and masters mixed together, so I edited it by hand

[root@n1 kubespray]# cat inventory/inventory.cfg

[all]

node1 ansible_host=192.168.2.11 ip=192.168.2.11

node2 ansible_host=192.168.2.12 ip=192.168.2.12

node3 ansible_host=192.168.2.13 ip=192.168.2.13

node4 ansible_host=192.168.2.14 ip=192.168.2.14

node5 ansible_host=192.168.2.15 ip=192.168.2.15

[kube-master]

node1

node2

node3

[kube-node]

node4

node5

[etcd]

node1

node2

node3

[k8s-cluster:children]

kube-node

kube-master

[calico-rr]
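The same layout can be generated instead of edited by hand: the first three hosts become kube-master and etcd, the rest kube-node. Below is a sketch. make_inventory is a hypothetical helper, not part of contrib/inventory_builder.

```shell
#!/usr/bin/env bash
# Sketch: print an ansible INI inventory with the layout shown above.

# make_inventory MASTER_COUNT IP... -> first MASTER_COUNT hosts are masters/etcd
make_inventory() {
  local masters=$1 i ip
  shift
  local ips=("$@")
  echo "[all]"
  for i in "${!ips[@]}"; do
    ip=${ips[$i]}
    echo "node$((i + 1)) ansible_host=$ip ip=$ip"
  done
  echo
  echo "[kube-master]"
  for ((i = 1; i <= masters; i++)); do echo "node$i"; done
  echo
  echo "[kube-node]"
  for ((i = masters + 1; i <= ${#ips[@]}; i++)); do echo "node$i"; done
  echo
  echo "[etcd]"
  for ((i = 1; i <= masters; i++)); do echo "node$i"; done
  echo
  echo "[k8s-cluster:children]"
  echo "kube-node"
  echo "kube-master"
  echo
  echo "[calico-rr]"
}
```

Usage with the IPS array from the install step: make_inventory 3 "${IPS[@]}" > inventory/inventory.cfg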

Problem encountered: wait for the apiserver to be running

ansible-playbook -i inventory/inventory.ini cluster.yml -b -v --private-key=~/.ssh/id_rsa

...


RUNNING HANDLER [kubernetes/master : Master | wait for the apiserver to be running] ***

Thursday 23 March 2017 10:46:16 +0800 (0:00:00.468) 0:08:32.094 ********

FAILED - RETRYING: HANDLER: kubernetes/master : Master | wait for the apiserver to be running (10 retries left).

FAILED - RETRYING: HANDLER: kubernetes/master : Master | wait for the apiserver to be running (10 retries left).

FAILED - RETRYING: HANDLER: kubernetes/master : Master | wait for the apiserver to be running (9 retries left).

FAILED - RETRYING: HANDLER: kubernetes/master : Master | wait for the apiserver to be running (9 retries left).

FAILED - RETRYING: HANDLER: kubernetes/master : Master | wait for the apiserver to be running (8 retries left).

FAILED - RETRYING: HANDLER: kubernetes/master : Master | wait for the apiserver to be running (8 retries left).

FAILED - RETRYING: HANDLER: kubernetes/master : Master | wait for the apiserver to be running (7 retries left).

FAILED - RETRYING: HANDLER: kubernetes/master : Master | wait for the apiserver to be running (7 retries left).

FAILED - RETRYING: HANDLER: kubernetes/master : Master | wait for the apiserver to be running (6 retries left).

FAILED - RETRYING: HANDLER: kubernetes/master : Master | wait for the apiserver to be running (6 retries left).

FAILED - RETRYING: HANDLER: kubernetes/master : Master | wait for the apiserver to be running (5 retries left).

FAILED - RETRYING: HANDLER: kubernetes/master : Master | wait for the apiserver to be running (5 retries left).

FAILED - RETRYING: HANDLER: kubernetes/master : Master | wait for the apiserver to be running (4 retries left).

FAILED - RETRYING: HANDLER: kubernetes/master : Master | wait for the apiserver to be running (4 retries left).

FAILED - RETRYING: HANDLER: kubernetes/master : Master | wait for the apiserver to be running (3 retries left).

FAILED - RETRYING: HANDLER: kubernetes/master : Master | wait for the apiserver to be running (3 retries left).

FAILED - RETRYING: HANDLER: kubernetes/master : Master | wait for the apiserver to be running (2 retries left).

FAILED - RETRYING: HANDLER: kubernetes/master : Master | wait for the apiserver to be running (2 retries left).

FAILED - RETRYING: HANDLER: kubernetes/master : Master | wait for the apiserver to be running (1 retries left).

FAILED - RETRYING: HANDLER: kubernetes/master : Master | wait for the apiserver to be running (1 retries left).

fatal: [node1]: FAILED! => {"attempts": 10, "changed": false, "content": "", "failed": true, "msg": "Status code was not [200]: Request failed: <urlopen error [Errno 111] Connection refused>", "redirected": false, "status": -1, "url": "http://localhost:8080/healthz"}

fatal: [node2]: FAILED! => {"attempts": 10, "changed": false, "content": "", "failed": true, "msg": "Status code was not [200]: Request failed: <urlopen error [Errno 111] Connection refused>", "redirected": false, "status": -1, "url": "http://localhost:8080/healthz"}

to retry, use: --limit @/home/dev_dean/kargo/cluster.retry

Fix: turn off swap on all nodes

swapoff -a

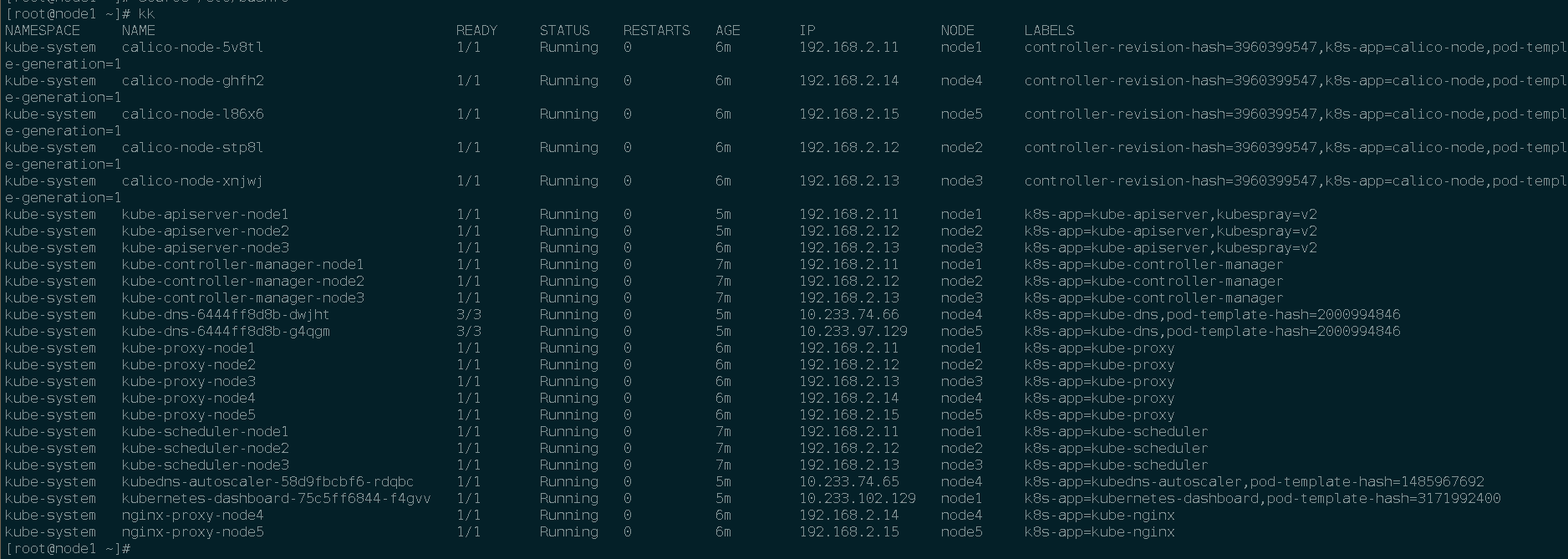
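The failing handler polls http://localhost:8080/healthz up to 10 times. After swapoff -a, you can poll the same endpoint by hand on a master to see whether the apiserver comes up. retry is a hypothetical helper written for this post, not part of kubespray.

```shell
#!/usr/bin/env bash
# Sketch: run a command until it succeeds or the attempts run out.

# retry COUNT DELAY CMD... -> exit 0 on the first success, 1 if all attempts fail
retry() {
  local count=$1 delay=$2 n
  shift 2
  for ((n = 1; n <= count; n++)); do
    "$@" && return 0
    echo "attempt $n/$count failed: $*" >&2
    # Do not sleep after the last attempt
    [ "$n" -lt "$count" ] && sleep "$delay"
  done
  return 1
}
```

For example: retry 10 5 curl -fsS http://localhost:8080/healthz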

Handy aliases

alias kk='kubectl get pod --all-namespaces -o wide --show-labels'

alias ks='kubectl get svc --all-namespaces -o wide'

alias kss='kubectl get svc --all-namespaces -o wide --show-labels'

alias kd='kubectl get deploy --all-namespaces -o wide'

alias wk='watch kubectl get pod --all-namespaces -o wide --show-labels'

alias kv='kubectl get pv -o wide'

alias kvc='kubectl get pvc -o wide --all-namespaces --show-labels'

alias kbb='kubectl run -it --rm --restart=Never busybox --image=busybox sh'

alias kbbc='kubectl run -it --rm --restart=Never curl --image=appropriate/curl sh'

alias kd='kubectl get deployment --all-namespaces --show-labels' # redefines kd from above; the later definition wins

alias kcm='kubectl get cm --all-namespaces -o wide'

alias kin='kubectl get ingress --all-namespaces -o wide'

kubespray's default startup flags

ps -ef|egrep "apiserver|controller-manager|scheduler"

/hyperkube apiserver \

--advertise-address=192.168.2.11 \

--etcd-servers=https://192.168.2.11:2379,https://192.168.2.12:2379,https://192.168.2.13:2379 \

--etcd-quorum-read=true \

--etcd-cafile=/etc/ssl/etcd/ssl/ca.pem \

--etcd-certfile=/etc/ssl/etcd/ssl/node-node1.pem \

--etcd-keyfile=/etc/ssl/etcd/ssl/node-node1-key.pem \

--insecure-bind-address=127.0.0.1 \

--bind-address=0.0.0.0 \

--apiserver-count=3 \

--admission-control=Initializers,NamespaceLifecycle,LimitRanger,ServiceAccount,DefaultStorageClass,ValidatingAdmissionWebhook,ResourceQuota \

--service-cluster-ip-range=10.233.0.0/18 \

--service-node-port-range=30000-32767 \

--client-ca-file=/etc/kubernetes/ssl/ca.pem \

--profiling=false \

--repair-malformed-updates=false \

--kubelet-client-certificate=/etc/kubernetes/ssl/node-node1.pem \

--kubelet-client-key=/etc/kubernetes/ssl/node-node1-key.pem \

--service-account-lookup=true \

--tls-cert-file=/etc/kubernetes/ssl/apiserver.pem \

--tls-private-key-file=/etc/kubernetes/ssl/apiserver-key.pem \

--proxy-client-cert-file=/etc/kubernetes/ssl/apiserver.pem \

--proxy-client-key-file=/etc/kubernetes/ssl/apiserver-key.pem \

--service-account-key-file=/etc/kubernetes/ssl/apiserver-key.pem \

--secure-port=6443 \

--insecure-port=8080 \

--storage-backend=etcd3 \

--runtime-config=admissionregistration.k8s.io/v1alpha1 --v=2 \

--allow-privileged=true \

--anonymous-auth=False \

--authorization-mode=Node,RBAC \

--feature-gates=Initializers=False,PersistentLocalVolumes=False

/hyperkube controller-manager \

--kubeconfig=/etc/kubernetes/kube-controller-manager-kubeconfig.yaml \

--leader-elect=true \

--service-account-private-key-file=/etc/kubernetes/ssl/apiserver-key.pem \

--root-ca-file=/etc/kubernetes/ssl/ca.pem \

--cluster-signing-cert-file=/etc/kubernetes/ssl/ca.pem \

--cluster-signing-key-file=/etc/kubernetes/ssl/ca-key.pem \

--enable-hostpath-provisioner=false \

--node-monitor-grace-period=40s \

--node-monitor-period=5s \

--pod-eviction-timeout=5m0s \

--profiling=false \

--terminated-pod-gc-threshold=12500 \

--v=2 \

--use-service-account-credentials=true \

--feature-gates=Initializers=False,PersistentLocalVolumes=False

/hyperkube scheduler \

--leader-elect=true \

--kubeconfig=/etc/kubernetes/kube-scheduler-kubeconfig.yaml \

--profiling=false --v=2 \

--feature-gates=Initializers=False,PersistentLocalVolumes=False

/usr/local/bin/kubelet \

--logtostderr=true --v=2 \

--address=192.168.2.14 \

--node-ip=192.168.2.14 \

--hostname-override=node4 \

--allow-privileged=true \

--pod-manifest-path=/etc/kubernetes/manifests \

--cadvisor-port=0 \

--pod-infra-container-image=gcr.io/google_containers/pause-amd64:3.0 \

--node-status-update-frequency=10s \

--docker-disable-shared-pid=True \

--client-ca-file=/etc/kubernetes/ssl/ca.pem \

--tls-cert-file=/etc/kubernetes/ssl/node-node4.pem \

--tls-private-key-file=/etc/kubernetes/ssl/node-node4-key.pem \

--anonymous-auth=false \

--cgroup-driver=cgroupfs \

--cgroups-per-qos=True \

--fail-swap-on=True \

--enforce-node-allocatable= \

--cluster-dns=10.233.0.3 \

--cluster-domain=cluster.local \

--resolv-conf=/etc/resolv.conf \

--kubeconfig=/etc/kubernetes/node-kubeconfig.yaml \

--require-kubeconfig \

--kube-reserved cpu=100m,memory=256M \

--node-labels=node-role.kubernetes.io/node=true \

--feature-gates=Initializers=False,PersistentLocalVolumes=False \

--network-plugin=cni --cni-conf-dir=/etc/cni/net.d \

--cni-bin-dir=/opt/cni/bin

ps -ef|grep kube-proxy

hyperkube proxy --v=2 \

--kubeconfig=/etc/kubernetes/kube-proxy-kubeconfig.yaml \

--bind-address=192.168.2.14 \

--cluster-cidr=10.233.64.0/18 \

--proxy-mode=iptables
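To read a single flag from a running component without scanning the whole ps line, you can split /proc/PID/cmdline on its NUL separators. Below is a sketch with hypothetical helpers. It handles only the --flag=value form, so space-separated flags such as --kube-reserved cpu=... are not picked up.

```shell
#!/usr/bin/env bash
# Sketch: extract one --flag=value from a process command line.

# flag_value CMDLINE_FILE FLAG -> prints the value of --FLAG=... (FLAG without dashes)
flag_value() {
  tr '\0' '\n' < "$1" | sed -n "s/^--$2=//p"
}

# component_flag PATTERN FLAG -> looks up the first process matching PATTERN
component_flag() {
  local pid
  pid=$(pgrep -f "$1" | head -n 1)
  [ -n "$pid" ] || return 1
  flag_value "/proc/$pid/cmdline" "$2"
}
```

For example: component_flag 'hyperkube apiserver' service-cluster-ip-range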

I have pushed all the v1.9 images listed above to Docker Hub (under lanny/).


Reference: https://jicki.me/2017/12/08/kubernetes-kubespray-1.8.4/

Building the etcd cluster from containers

/usr/bin/docker run

--restart=on-failure:5

--env-file=/etc/etcd.env

--net=host

-v /etc/ssl/certs:/etc/ssl/certs:ro

-v /etc/ssl/etcd/ssl:/etc/ssl/etcd/ssl:ro

-v /var/lib/etcd:/var/lib/etcd:rw

--memory=512M

--oom-kill-disable

--blkio-weight=1000

--name=etcd1

lanny/quay.io_coreos_etcd:v3.2.4

/usr/local/bin/etcd

[root@node1 ~]# cat /etc/etcd.env

ETCD_DATA_DIR=/var/lib/etcd

ETCD_ADVERTISE_CLIENT_URLS=https://192.168.2.11:2379

ETCD_INITIAL_ADVERTISE_PEER_URLS=https://192.168.2.11:2380

ETCD_INITIAL_CLUSTER_STATE=existing

ETCD_METRICS=basic

ETCD_LISTEN_CLIENT_URLS=https://192.168.2.11:2379,https://127.0.0.1:2379

ETCD_ELECTION_TIMEOUT=5000

ETCD_HEARTBEAT_INTERVAL=250

ETCD_INITIAL_CLUSTER_TOKEN=k8s_etcd

ETCD_LISTEN_PEER_URLS=https://192.168.2.11:2380

ETCD_NAME=etcd1

ETCD_PROXY=off

ETCD_INITIAL_CLUSTER=etcd1=https://192.168.2.11:2380,etcd2=https://192.168.2.12:2380,etcd3=https://192.168.2.13:2380

ETCD_AUTO_COMPACTION_RETENTION=8

# TLS settings

ETCD_TRUSTED_CA_FILE=/etc/ssl/etcd/ssl/ca.pem

ETCD_CERT_FILE=/etc/ssl/etcd/ssl/member-node1.pem

ETCD_KEY_FILE=/etc/ssl/etcd/ssl/member-node1-key.pem

ETCD_PEER_TRUSTED_CA_FILE=/etc/ssl/etcd/ssl/ca.pem

ETCD_PEER_CERT_FILE=/etc/ssl/etcd/ssl/member-node1.pem

ETCD_PEER_KEY_FILE=/etc/ssl/etcd/ssl/member-node1-key.pem

ETCD_PEER_CLIENT_CERT_AUTH=true
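You can derive the client endpoints from ETCD_INITIAL_CLUSTER by replacing peer port 2380 with client port 2379, which matches --etcd-servers on the apiserver. The endpoints can then be used to check cluster health with etcdctl v3. This is a sketch; using the admin-<node> certificate for etcdctl is an assumption based on the file names under /etc/ssl/etcd/ssl. The helper names are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: build etcd client endpoints from an etcd.env file and query health.

# client_endpoints ENV_FILE -> comma-separated https://IP:2379 list
client_endpoints() {
  sed -n 's/^ETCD_INITIAL_CLUSTER=//p' "$1" |
    tr ',' '\n' |
    sed -E 's/^[^=]+=//; s/:2380$/:2379/' |
    paste -sd, -
}

# etcd_health ENV_FILE NODE_NAME -> runs "etcdctl endpoint health" with TLS
etcd_health() {
  local ssl=/etc/ssl/etcd/ssl
  ETCDCTL_API=3 etcdctl \
    --endpoints="$(client_endpoints "$1")" \
    --cacert="$ssl/ca.pem" \
    --cert="$ssl/admin-$2.pem" \
    --key="$ssl/admin-$2-key.pem" \
    endpoint health
}
```

For example, on node1: etcd_health /etc/etcd.env node1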

[root@node1 ssl]# docker exec b1159a1c6209 env|grep -i etcd

ETCD_DATA_DIR=/var/lib/etcd

ETCD_ADVERTISE_CLIENT_URLS=https://192.168.2.11:2379

ETCD_INITIAL_ADVERTISE_PEER_URLS=https://192.168.2.11:2380

ETCD_INITIAL_CLUSTER_STATE=existing

ETCD_METRICS=basic

ETCD_LISTEN_CLIENT_URLS=https://192.168.2.11:2379,https://127.0.0.1:2379

ETCD_ELECTION_TIMEOUT=5000

ETCD_HEARTBEAT_INTERVAL=250

ETCD_INITIAL_CLUSTER_TOKEN=k8s_etcd

ETCD_LISTEN_PEER_URLS=https://192.168.2.11:2380

ETCD_NAME=etcd1

ETCD_PROXY=off

ETCD_INITIAL_CLUSTER=etcd1=https://192.168.2.11:2380,etcd2=https://192.168.2.12:2380,etcd3=https://192.168.2.13:2380

ETCD_AUTO_COMPACTION_RETENTION=8

ETCD_TRUSTED_CA_FILE=/etc/ssl/etcd/ssl/ca.pem

ETCD_CERT_FILE=/etc/ssl/etcd/ssl/member-node1.pem

ETCD_KEY_FILE=/etc/ssl/etcd/ssl/member-node1-key.pem

ETCD_PEER_TRUSTED_CA_FILE=/etc/ssl/etcd/ssl/ca.pem

ETCD_PEER_CERT_FILE=/etc/ssl/etcd/ssl/member-node1.pem

ETCD_PEER_KEY_FILE=/etc/ssl/etcd/ssl/member-node1-key.pem

ETCD_PEER_CLIENT_CERT_AUTH=true

[root@node1 ssl]# tree /etc/ssl/etcd/ssl

/etc/ssl/etcd/ssl

├── admin-node1-key.pem

├── admin-node1.pem

├── admin-node2-key.pem

├── admin-node2.pem

├── admin-node3-key.pem

├── admin-node3.pem

├── ca-key.pem

├── ca.pem

├── member-node1-key.pem

├── member-node1.pem

├── member-node2-key.pem

├── member-node2.pem

├── member-node3-key.pem

├── member-node3.pem

├── node-node1-key.pem

├── node-node1.pem

├── node-node2-key.pem

├── node-node2.pem

├── node-node3-key.pem

├── node-node3.pem

├── node-node4-key.pem

├── node-node4.pem

├── node-node5-key.pem

└── node-node5.pem

[root@n2 ~]# tree /etc/ssl/etcd/ssl

/etc/ssl/etcd/ssl

├── admin-node2-key.pem

├── admin-node2.pem

├── ca-key.pem

├── ca.pem

├── member-node2-key.pem

├── member-node2.pem

├── node-node1-key.pem

├── node-node1.pem

├── node-node2-key.pem

├── node-node2.pem

├── node-node3-key.pem

├── node-node3.pem

├── node-node4-key.pem

├── node-node4.pem

├── node-node5-key.pem

└── node-node5.pem

admin-node1.crt

CN = etcd-admin-node1

DNS Name=localhost

DNS Name=node1

DNS Name=node2

DNS Name=node3

IP Address=192.168.2.11

IP Address=192.168.2.11

IP Address=192.168.2.12

IP Address=192.168.2.12

IP Address=192.168.2.13

IP Address=192.168.2.13

IP Address=127.0.0.1

member-node1.crt

CN = etcd-member-node1

DNS Name=localhost

DNS Name=node1

DNS Name=node2

DNS Name=node3

IP Address=192.168.2.11

IP Address=192.168.2.11

IP Address=192.168.2.12

IP Address=192.168.2.12

IP Address=192.168.2.13

IP Address=192.168.2.13

IP Address=127.0.0.1

node-node1.crt

CN = etcd-node-node1

DNS Name=localhost

DNS Name=node1

DNS Name=node2

DNS Name=node3

IP Address=192.168.2.11

IP Address=192.168.2.11

IP Address=192.168.2.12

IP Address=192.168.2.12

IP Address=192.168.2.13

IP Address=192.168.2.13

IP Address=127.0.0.1
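You can produce a listing like the one above on the node itself with openssl. Below is a sketch; cert_sans is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Sketch: print the subject and Subject Alternative Names of a PEM certificate.

# cert_sans CERT -> subject line, then the SAN line (if present)
cert_sans() {
  openssl x509 -in "$1" -noout -subject
  openssl x509 -in "$1" -noout -text |
    grep -A1 'X509v3 Subject Alternative Name' | tail -n 1 | sed 's/^[[:space:]]*//'
}
```

For example: cert_sans /etc/ssl/etcd/ssl/member-node1.pem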

[root@node1 bin]# tree

.

├── etcd

├── etcdctl

├── etcd-scripts

│ └── make-ssl-etcd.sh

├── kubectl

├── kubelet

└── kubernetes-scripts

├── kube-gen-token.sh

└── make-ssl.sh
