Cloud computing: a detailed OpenStack Mitaka configuration guide (with the difficult points broken out)

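Many people find that configuring OpenStack is full of pitfalls, especially beginners: once you fall into one with nobody to guide you, you come out feeling drained every time. As a newcomer myself I know the feeling, having been through it many times.

Below I summarize the whole OpenStack configuration process and analyze the difficult points. I hope it helps; if you have questions while configuring, feel free to leave a comment.

Before trying the configuration yourself, you may want to read "A newbie helps you skip the pitfalls in OpenStack configuration": http://www.cnblogs.com/yaohong/p/7352386.html

If you would rather not install step by step, you can run the installation script instead: http://www.cnblogs.com/yaohong/p/7251852.html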

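1: Environment

1.1 Host networking

OS version: CentOS 7

Controller node: 1 processor, 4 GB memory, and 5 GB storage

Compute node: 1 processor, 2 GB memory, and 10 GB storage

Notes:

1: Install two machines from the CentOS 7 image (for installation steps see http://www.cnblogs.com/yaohong/p/7240387.html). Give each machine two network interfaces and keep an eye on the memory allocated to each.

2: Set the hostnames of the two machines to controller and compute1 respectively:

#hostnamectl set-hostname HOSTNAME (use controller or compute1)

3: Edit the /etc/hosts file on both controller and compute1:

#vi /etc/hosts

4: Verify

Ping each node from the other, and ping an external site (I ping Baidu) to confirm Internet access.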

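1.2 Network Time Protocol (NTP)

[Install NTP on the controller node]

NTP keeps the clocks in sync. If the nodes' clocks drift apart, you may be unable to create instances.

#yum install chrony (install the package)

#vi /etc/chrony.conf and add:

server NTP_SERVER iburst

allow YOUR_SUBNET (optional; allows hosts in your subnet to query this NTP server)

#systemctl enable chronyd.service (start at boot)

#systemctl start chronyd.service (start the NTP service)

[Install NTP on the compute node]

# yum install chrony

#vi /etc/chrony.conf: comment out or remove every server line except one, and change it to reference the controller node: server controller iburst

# systemctl enable chronyd.service (start at boot)

# systemctl start chronyd.service (start the NTP service)

[Verify NTP]

Run #chronyc sources on both the controller and compute nodes. Each node should list its configured time source (on the compute node, that is controller).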
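The check above can also be scripted. This is a minimal sketch, relying on the fact that chronyc sources marks the source it is currently synchronized to with "^*" at the start of the line. The function name has_synced_source is made up for this example.

```shell
#!/bin/sh
# Succeeds if the `chronyc sources` output on stdin contains a line
# starting with "^*", i.e. a server that chronyd is currently synced to.
has_synced_source() {
    grep -q '^\^\*'
}

# Typical use on a node (not run here):
#   chronyc sources | has_synced_source && echo "time is synchronized"
```

Right after chronyd starts, the source may still show "^?" for a short while, so a failed check is worth retrying before you start debugging.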

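1.3 OpenStack packages

[Install the OpenStack packages on both the controller and compute nodes]

Enable the OpenStack Mitaka repository:

#yum install centos-release-openstack-mitaka

#yum install https://repos.fedorapeople.org/repos/openstack/openstack-mitaka/rdo-release-mitaka-6.noarch.rpm

#yum upgrade (upgrade the packages on the host)

#yum install python-openstackclient (the OpenStack command-line client, required)

#yum install openstack-selinux (optional; I simply disabled SELinux because I am not familiar with it, and that did not affect the later steps)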

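1.4 SQL database

Install this on the controller node. Depending on the distribution, the steps in the guide use MariaDB or MySQL; OpenStack services also support other SQL databases.

#yum install mariadb mariadb-server python2-PyMySQL (the mysql+pymysql:// connection strings used later need the PyMySQL driver)

#vi /etc/my.cnf.d/openstack.cnf (on CentOS the MariaDB drop-in directory is /etc/my.cnf.d; /etc/mysql/conf.d is the Ubuntu path)

Add:

[mysqld]

bind-address = 192.168.1.73 (the IP address of the machine running the database; here, the controller)

default-storage-engine = innodb

innodb_file_per_table

collation-server = utf8_general_ci

character-set-server = utf8

#systemctl enable mariadb.service (start the database service at boot)

#systemctl start mariadb.service (start the database service)

Secure the database installation:

#mysql_secure_installation (see pitfall 1 in http://www.cnblogs.com/yaohong/p/7352386.html)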

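1.5 Message queue

The message queue plays a pivotal role in the OpenStack architecture, much like a traffic hub. Precisely because OpenStack deployments are flexible, its modules loosely coupled, and its architecture flat, OpenStack depends heavily on the message queue (not necessarily RabbitMQ; other message queue products also work).

The queue's messaging performance and HA capability therefore directly affect OpenStack's performance. If RabbitMQ is not running, the whole OpenStack platform is unusable. RabbitMQ listens on port 5672.

#yum install rabbitmq-server

#systemctl enable rabbitmq-server.service (start at boot)

#systemctl start rabbitmq-server.service (start the service)

#rabbitmqctl add_user openstack RABBIT_PASS (add the user openstack; replace RABBIT_PASS with a password of your own)

#rabbitmqctl set_permissions openstack ".*" ".*" ".*" (grant the new user configure, write, and read permissions; a user without permissions cannot send or receive messages)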

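1.6 Memcached

Memcached is optional in this setup, although the [keystone_authtoken] sections later in this guide reference it. It listens on port 11211.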

#yum install memcached python-memcached

#systemctl enable memcached.service

#systemctl start memcached.service

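2: Identity service

[Keystone identity service]

Note: name resolution in /etc/hosts must already be set up on both the controller and compute nodes. Mine is:

192.168.1.73 controller

192.168.1.74 compute1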
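If you script the node setup, the two entries above can be added idempotently, so re-running the script does not duplicate them. This is a minimal sketch; add_host_entry is a made-up helper name, and the IP addresses are the ones used in this article.

```shell
#!/bin/sh
# Append "IP NAME" to a hosts file unless a line for NAME already exists.
# Usage: add_host_entry FILE IP NAME
add_host_entry() {
    file=$1 ip=$2 name=$3
    # Match NAME as a whole whitespace-delimited field on a non-comment line.
    if ! grep -Eq "^[^#]*[[:space:]]${name}([[:space:]]|\$)" "$file" 2>/dev/null; then
        printf '%s %s\n' "$ip" "$name" >> "$file"
    fi
}

# On both nodes, as root (not run here):
#   add_host_entry /etc/hosts 192.168.1.73 controller
#   add_host_entry /etc/hosts 192.168.1.74 compute1
```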

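2.1 Install and configure

Log in to the database and create the keystone database:

#mysql -u root -p

#CREATE DATABASE keystone;

Create the database user and grant privileges (replace PASSWORD with a password of your choice):

#GRANT ALL PRIVILEGES ON keystone.* TO 'keystone'@'localhost' \

IDENTIFIED BY 'PASSWORD';

#GRANT ALL PRIVILEGES ON keystone.* TO 'keystone'@'%' \

IDENTIFIED BY 'PASSWORD';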
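The same create-and-grant pattern comes back for glance, nova, and neutron, so it can be wrapped in a small helper. This is a sketch under stated assumptions: service_db_sql is a made-up name, the database user has the same name as the database (which does not hold for nova_api, whose user is nova), and IF NOT EXISTS is my addition so the statements can be re-run.

```shell
#!/bin/sh
# Print the SQL that creates a service database and grants access to a
# same-named user from localhost and from any host.
# Usage: service_db_sql NAME PASSWORD
service_db_sql() {
    db=$1 pass=$2
    cat <<EOF
CREATE DATABASE IF NOT EXISTS ${db};
GRANT ALL PRIVILEGES ON ${db}.* TO '${db}'@'localhost' IDENTIFIED BY '${pass}';
GRANT ALL PRIVILEGES ON ${db}.* TO '${db}'@'%' IDENTIFIED BY '${pass}';
EOF
}

# On the controller (not run here; KEYSTONE_DBPASS is a placeholder):
#   service_db_sql keystone KEYSTONE_DBPASS | mysql -u root -p
```

Printing the SQL instead of executing it lets you review the statements before piping them to mysql.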

Generate a random value for admin_token:

# openssl rand -hex 10
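Because the same value is needed again later (in keystone.conf and as OS_TOKEN), it is convenient to capture it in a variable rather than copy it by hand. This is a minimal sketch; gen_admin_token and ADMIN_TOKEN are names made up for this example, and the /dev/urandom fallback is my addition for hosts without openssl.

```shell
#!/bin/sh
# Print 10 random bytes as 20 hex characters. Prefers openssl (as in the
# text); falls back to /dev/urandom via od if openssl is not installed.
gen_admin_token() {
    if command -v openssl >/dev/null 2>&1; then
        openssl rand -hex 10
    else
        od -An -tx1 -N10 /dev/urandom | tr -d ' \n'
        echo
    fi
}

ADMIN_TOKEN=$(gen_admin_token)
echo "admin_token = ${ADMIN_TOKEN}"
```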

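Install and configure the components:

#yum install openstack-keystone httpd mod_wsgi

#vi /etc/keystone/keystone.conf

In the [DEFAULT] section, uncomment admin_token and set it to the random value generated above:

admin_token = RANDOM_VALUE (mainly a security measure; this is the value exported as OS_TOKEN in section 2.2)

Configure the database connection:

[database]

connection = mysql+pymysql://keystone:PASSWORD@controller/keystone

Configure the Fernet token provider:

[token]

provider = fernet

Populate the Identity service database:

# su -s /bin/sh -c "keystone-manage db_sync" keystone (be sure to check that the tables were actually created in the database)

Initialize the Fernet keys:

#keystone-manage fernet_setup --keystone-user keystone --keystone-group keystone

Configure Apache:

#vi /etc/httpd/conf/httpd.conf

Set ServerName to the host name to avoid an error at startup:

ServerName controller

Create the WSGI configuration file:

#vi /etc/httpd/conf.d/wsgi-keystone.conf and add: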

Listen 5000

Listen 35357

<VirtualHost *:5000>

WSGIDaemonProcess keystone-public processes=5 threads=1 user=keystone group=keystone display-name=%{GROUP}

WSGIProcessGroup keystone-public

WSGIScriptAlias / /usr/bin/keystone-wsgi-public

WSGIApplicationGroup %{GLOBAL}

WSGIPassAuthorization On

ErrorLogFormat "%{cu}t %M"

ErrorLog /var/log/httpd/keystone-error.log

CustomLog /var/log/httpd/keystone-access.log combined

<Directory /usr/bin>

Require all granted

</Directory>

</VirtualHost>

<VirtualHost *:35357>

WSGIDaemonProcess keystone-admin processes=5 threads=1 user=keystone group=keystone display-name=%{GROUP}

WSGIProcessGroup keystone-admin

WSGIScriptAlias / /usr/bin/keystone-wsgi-admin

WSGIApplicationGroup %{GLOBAL}

WSGIPassAuthorization On

ErrorLogFormat "%{cu}t %M"

ErrorLog /var/log/httpd/keystone-error.log

CustomLog /var/log/httpd/keystone-access.log combined

<Directory /usr/bin>

Require all granted

</Directory>

</VirtualHost>

Start httpd:

#systemctl enable httpd.service

#systemctl start httpd.service

2.2 Create the service entity and API endpoints

#export OS_TOKEN=RANDOM_VALUE (the random value generated above)

#export OS_URL=http://controller:35357/v3

#export OS_IDENTITY_API_VERSION=3

Create the keystone service:

#openstack service create --name keystone --description "OpenStack Identity" identity (the service type identity must be exactly right)

Create the keystone endpoints:

#openstack endpoint create --region RegionOne \

identity public http://controller:5000/v3

#openstack endpoint create --region RegionOne \

identity internal http://controller:5000/v3

#openstack endpoint create --region RegionOne \

identity admin http://controller:35357/v3
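Every service in this guide gets the same three endpoints (public, internal, admin), so the three commands can be folded into a loop. This is a sketch, not part of the official procedure: create_endpoints and the RUN variable are made up for this example. Setting RUN=echo prints the commands instead of executing them, which is handy for checking for typos first.

```shell
#!/bin/sh
# Create the public, internal and admin endpoints of one service.
# Usage: create_endpoints SERVICE_TYPE PUBLIC_URL INTERNAL_URL ADMIN_URL
# If RUN is set to "echo", the commands are printed instead of executed.
create_endpoints() {
    service=$1
    shift
    for iface in public internal admin; do
        ${RUN:-} openstack endpoint create --region RegionOne \
            "$service" "$iface" "$1"
        shift
    done
}

# Dry run with the Identity URLs used in this section:
RUN=echo create_endpoints identity \
    http://controller:5000/v3 http://controller:5000/v3 http://controller:35357/v3
```

Dropping RUN=echo runs the real commands, which requires OS_TOKEN and OS_URL (or an openrc file) to be loaded in the shell.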

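2.3 Create a domain, projects, users, and roles

Create the default domain:

#openstack domain create --description "Default Domain" default

Create the admin project:

#openstack project create --domain default \

--description "Admin Project" admin

Create the admin user:

#openstack user create --domain default \

--password-prompt admin (you are prompted for a password; this is the password used to log in to the dashboard)

Create the admin role:

#openstack role create admin

Add the admin role to the admin project and user:

#openstack role add --project admin --user admin admin

Create the service project:

#openstack project create --domain default \

--description "Service Project" service

Create the demo project, user, and role in the same way as for admin: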

#openstack project create --domain default \

--description "Demo Project" demo

#openstack user create --domain default \

--password-prompt demo

#openstack role create user

#openstack role add --project demo --user demo user

2.4验证

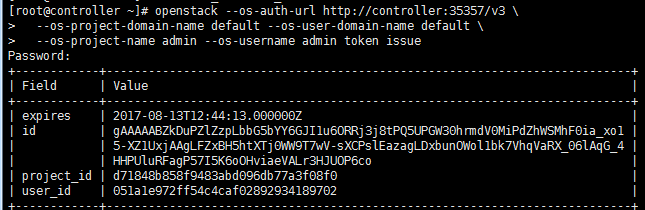

作为 admin 用户,请求认证令牌:

#openstack --os-auth-url http://controller:35357/v3 \

--os-project-domain-name default --os-user-domain-name default \

--os-project-name admin --os-username admin token issue

输入密码之后,有正确的输出即为配置正确。

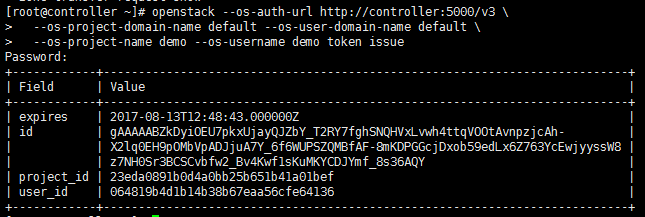

作为``demo`` 用户,请求认证令牌:

#openstack --os-auth-url http://controller:5000/v3 \

--os-project-domain-name default --os-user-domain-name default \

--os-project-name demo --os-username demo token issue

2.5 Create OpenStack client environment scripts

The environment variables can be kept in scripts:

#vi admin-openrc and add:

export OS_PROJECT_DOMAIN_NAME=default

export OS_USER_DOMAIN_NAME=default

export OS_PROJECT_NAME=admin

export OS_USERNAME=admin

export OS_PASSWORD=123456

export OS_AUTH_URL=http://controller:35357/v3

export OS_IDENTITY_API_VERSION=3

export OS_IMAGE_API_VERSION=2

#vi demo-openrc and add:

export OS_PROJECT_DOMAIN_NAME=default

export OS_USER_DOMAIN_NAME=default

export OS_PROJECT_NAME=demo

export OS_USERNAME=demo

export OS_PASSWORD=123456

export OS_AUTH_URL=http://controller:35357/v3

export OS_IDENTITY_API_VERSION=3

export OS_IMAGE_API_VERSION=2

Load the script with #. admin-openrc or #source admin-openrc

Verify by running:

#openstack token issue

Correct output means the configuration is right.
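Since the two openrc files differ only in project, user, and password, they can be generated from one template. This is a minimal sketch; write_openrc is a made-up helper, and the chmod 600 is my addition because the file stores a password in plain text.

```shell
#!/bin/sh
# Write an openrc file for one project/user pair.
# Usage: write_openrc FILE PROJECT USER PASSWORD
# Note: values are written unquoted, so avoid spaces or shell
# metacharacters in the password.
write_openrc() {
    cat > "$1" <<EOF
export OS_PROJECT_DOMAIN_NAME=default
export OS_USER_DOMAIN_NAME=default
export OS_PROJECT_NAME=$2
export OS_USERNAME=$3
export OS_PASSWORD=$4
export OS_AUTH_URL=http://controller:35357/v3
export OS_IDENTITY_API_VERSION=3
export OS_IMAGE_API_VERSION=2
EOF
    chmod 600 "$1"   # the file holds a password in plain text
}

# Example (not run here; ADMIN_PASS is a placeholder):
#   write_openrc admin-openrc admin admin ADMIN_PASS
#   . ./admin-openrc && openstack token issue
```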

3: Image service

3.1 Install and configure

Create the glance database.

Log in to MySQL:

#mysql -u root -p

#CREATE DATABASE glance;

Grant privileges:

#GRANT ALL PRIVILEGES ON glance.* TO 'glance'@'localhost' \

IDENTIFIED BY 'PASSWORD';

#GRANT ALL PRIVILEGES ON glance.* TO 'glance'@'%' \

IDENTIFIED BY 'PASSWORD';

Load the environment variables:

#. admin-openrc

Create the glance user and give it the admin role:

#openstack user create --domain default --password-prompt glance

#openstack role add --project service --user glance admin

Create the Image service entity:

#openstack service create --name glance \

--description "OpenStack Image" image

Create the Image service endpoints:

#openstack endpoint create --region RegionOne \

image public http://controller:9292

#openstack endpoint create --region RegionOne \

image internal http://controller:9292

#openstack endpoint create --region RegionOne \

image admin http://controller:9292

Install:

#yum install openstack-glance

#vi /etc/glance/glance-api.conf

Configure the database connection:

[database]

connection = mysql+pymysql://glance:PASSWORD@controller/glance

In [keystone_authtoken], configure authentication

by adding:

auth_uri = http://controller:5000

auth_url = http://controller:35357

memcached_servers = controller:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = glance

password = xxxx

In [paste_deploy]:

flavor = keystone

In [glance_store]:

stores = file,http

default_store = file

filesystem_store_datadir = /var/lib/glance/images/

#vi /etc/glance/glance-registry.conf

In [database]:

connection = mysql+pymysql://glance:PASSWORD@controller/glance

In [keystone_authtoken], configure authentication

by adding:

auth_uri = http://controller:5000

auth_url = http://controller:35357

memcached_servers = controller:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = glance

password = xxxx

In [paste_deploy]:

flavor = keystone

Populate the database:

#su -s /bin/sh -c "glance-manage db_sync" glance

Start glance:

#systemctl enable openstack-glance-api.service \

openstack-glance-registry.service

#systemctl start openstack-glance-api.service \

openstack-glance-registry.service

3.2 Verify

Load the environment variables:

#. admin-openrc

Download a small image:

#wget http://download.cirros-cloud.net/0.3.4/cirros-0.3.4-x86_64-disk.img

Upload the image:

#openstack image create "cirros" \

--file cirros-0.3.4-x86_64-disk.img \

--disk-format qcow2 --container-format bare \

--public

List the images:

#openstack image list

If the image is listed, glance is configured correctly.

4: Compute service

4.1 Install and configure the controller node

Create the nova databases:

#mysql -u root -p

#CREATE DATABASE nova_api;

#CREATE DATABASE nova;

Grant privileges (NOVA_DBPASS is the password used in the connection strings below):

#GRANT ALL PRIVILEGES ON nova_api.* TO 'nova'@'localhost' \

IDENTIFIED BY 'NOVA_DBPASS';

#GRANT ALL PRIVILEGES ON nova_api.* TO 'nova'@'%' \

IDENTIFIED BY 'NOVA_DBPASS';

#GRANT ALL PRIVILEGES ON nova.* TO 'nova'@'localhost' \

IDENTIFIED BY 'NOVA_DBPASS';

#GRANT ALL PRIVILEGES ON nova.* TO 'nova'@'%' \

IDENTIFIED BY 'NOVA_DBPASS';

Load the environment variables:

#. admin-openrc

Create the nova user:

#openstack user create --domain default \

--password-prompt nova

#openstack role add --project service --user nova admin

Create the Compute service entity:

#openstack service create --name nova \

--description "OpenStack Compute" compute

Create the endpoints:

#openstack endpoint create --region RegionOne \

compute public http://controller:8774/v2.1/%\(tenant_id\)s

#openstack endpoint create --region RegionOne \

compute internal http://controller:8774/v2.1/%\(tenant_id\)s

#openstack endpoint create --region RegionOne \

compute admin http://controller:8774/v2.1/%\(tenant_id\)s

Install:

#yum install openstack-nova-api openstack-nova-conductor \

openstack-nova-console openstack-nova-novncproxy \

openstack-nova-scheduler

#vi /etc/nova/nova.conf

In [DEFAULT]:

enabled_apis = osapi_compute,metadata

In [api_database]:

connection = mysql+pymysql://nova:NOVA_DBPASS@controller/nova_api

[database]

connection = mysql+pymysql://nova:NOVA_DBPASS@controller/nova

[DEFAULT]

rpc_backend = rabbit

[oslo_messaging_rabbit]

rabbit_host = controller

rabbit_userid = openstack

rabbit_password = RABBIT_PASS

[DEFAULT]

auth_strategy = keystone

[keystone_authtoken]

auth_uri = http://controller:5000

auth_url = http://controller:35357

memcached_servers = controller:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = nova

password = xxx

[DEFAULT]

my_ip = CONTROLLER_IP (the controller node's IP address)

[DEFAULT]

use_neutron = True

firewall_driver = nova.virt.firewall.NoopFirewallDriver

[vnc]

vncserver_listen = $my_ip

vncserver_proxyclient_address = $my_ip

[glance]

api_servers = http://controller:9292

[oslo_concurrency]

lock_path = /var/lib/nova/tmp

Populate the databases:

#nova-manage api_db sync

#nova-manage db sync

Start the services:

#systemctl enable openstack-nova-api.service \

openstack-nova-consoleauth.service openstack-nova-scheduler.service \

openstack-nova-conductor.service openstack-nova-novncproxy.service

# systemctl start openstack-nova-api.service \

openstack-nova-consoleauth.service openstack-nova-scheduler.service \

openstack-nova-conductor.service openstack-nova-novncproxy.service

4.2 Install and configure the compute node

#yum install openstack-nova-compute

#vi /etc/nova/nova.conf

[DEFAULT]

rpc_backend = rabbit

[oslo_messaging_rabbit]

rabbit_host = controller

rabbit_userid = openstack

rabbit_password = xxx

[DEFAULT]

auth_strategy = keystone

[keystone_authtoken]

auth_uri = http://controller:5000

auth_url = http://controller:35357

memcached_servers = controller:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = nova

password = xxx

[DEFAULT]

my_ip = COMPUTE_NODE_IP (the compute node's IP address)

[DEFAULT]

use_neutron = True

firewall_driver = nova.virt.firewall.NoopFirewallDriver

[vnc]

enabled = True

vncserver_listen = 0.0.0.0

vncserver_proxyclient_address = $my_ip

novncproxy_base_url = http://controller:6080/vnc_auto.html

[glance]

api_servers = http://controller:9292

[oslo_concurrency]

lock_path = /var/lib/nova/tmp

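Note: check whether the compute node supports hardware acceleration for virtual machines:

#egrep -c '(vmx|svm)' /proc/cpuinfo

If the result is 0, the CPU offers no hardware virtualization support, so edit /etc/nova/nova.conf to use QEMU:

[libvirt]

virt_type = qemu

If the result is greater than 0, no change is needed.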
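The decision above can be expressed as a small function. This is a minimal sketch; detect_virt_type is a made-up name, and it takes an optional cpuinfo path so the logic can be tried against a sample file. It prints kvm (nova's default virt_type) when the vmx or svm flag is present and qemu otherwise.

```shell
#!/bin/sh
# Print "kvm" if the given cpuinfo file lists the vmx (Intel) or svm (AMD)
# flag, otherwise "qemu". Defaults to /proc/cpuinfo.
detect_virt_type() {
    # grep -c prints 0 and exits non-zero when nothing matches.
    count=$(grep -Ec '(vmx|svm)' "${1:-/proc/cpuinfo}" 2>/dev/null)
    if [ "${count:-0}" -gt 0 ]; then
        echo kvm
    else
        echo qemu
    fi
}

echo "virt_type = $(detect_virt_type)"
```

Inside a virtual machine without nested virtualization, which is the usual case when following this guide on a laptop, the result is typically qemu.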

Start the services:

#systemctl enable libvirtd.service openstack-nova-compute.service

#systemctl start libvirtd.service openstack-nova-compute.service

4.3 Verify

Verify on the controller node.

Load the environment variables:

#. admin-openrc

#openstack compute service list

Normal output means the configuration is correct.

5: Networking service

5.1 Install and configure the controller node

Create the neutron database:

#mysql -u root -p

#CREATE DATABASE neutron;

#GRANT ALL PRIVILEGES ON neutron.* TO 'neutron'@'localhost' \

IDENTIFIED BY 'NEUTRON_DBPASS';

#GRANT ALL PRIVILEGES ON neutron.* TO 'neutron'@'%' \

IDENTIFIED BY 'NEUTRON_DBPASS';

Load the environment variables:

#. admin-openrc

Create the neutron user:

#openstack user create --domain default --password-prompt neutron

#openstack role add --project service --user neutron admin

Create the Networking service entity:

#openstack service create --name neutron \

--description "OpenStack Networking" network

Create the neutron endpoints:

#openstack endpoint create --region RegionOne \

network public http://controller:9696

#openstack endpoint create --region RegionOne \

network internal http://controller:9696

#openstack endpoint create --region RegionOne \

network admin http://controller:9696

Install the components for self-service (VXLAN) networking:

#yum install openstack-neutron openstack-neutron-ml2 \

openstack-neutron-linuxbridge ebtables

#vi /etc/neutron/neutron.conf

[database]

connection = mysql+pymysql://neutron:NEUTRON_DBPASS@controller/neutron

[DEFAULT]

core_plugin = ml2

service_plugins = router

allow_overlapping_ips = True

[DEFAULT]

rpc_backend = rabbit

[oslo_messaging_rabbit]

rabbit_host = controller

rabbit_userid = openstack

rabbit_password = RABBIT_PASS

[DEFAULT]

auth_strategy = keystone

[keystone_authtoken]

auth_uri = http://controller:5000

auth_url = http://controller:35357

memcached_servers = controller:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = neutron

password = xxxx

[DEFAULT]

notify_nova_on_port_status_changes = True

notify_nova_on_port_data_changes = True

[nova]

auth_url = http://controller:35357

auth_type = password

project_domain_name = default

user_domain_name = default

region_name = RegionOne

project_name = service

username = nova

password = xxxx

[oslo_concurrency]

lock_path = /var/lib/neutron/tmp

Configure the ML2 plug-in:

#vi /etc/neutron/plugins/ml2/ml2_conf.ini

[ml2]

type_drivers = flat,vlan,vxlan

tenant_network_types = vxlan

mechanism_drivers = linuxbridge,l2population

extension_drivers = port_security

[ml2_type_flat]

flat_networks = provider

[ml2_type_vxlan]

vni_ranges = 1:1000

[securitygroup]

enable_ipset = True

Configure the Linux bridge agent:

#vi /etc/neutron/plugins/ml2/linuxbridge_agent.ini

[linux_bridge]

physical_interface_mappings = provider:PROVIDER_INTERFACE_NAME (the name of the physical network interface to use)

[vxlan]

enable_vxlan = True

local_ip = OVERLAY_INTERFACE_IP_ADDRESS

l2_population = True

[securitygroup]

enable_security_group = True

firewall_driver = neutron.agent.linux.iptables_firewall.IptablesFirewallDriver
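To make the two placeholders concrete, the settings above can be rendered from the interface name and overlay IP. This is only a sketch: render_linuxbridge_ini is a made-up helper, eth1 is a hypothetical name for the second network interface, and using the controller address 192.168.1.73 as the overlay IP assumes VXLAN traffic runs over that same network.

```shell
#!/bin/sh
# Print the agent settings for a given provider interface and overlay IP.
# Usage: render_linuxbridge_ini PROVIDER_INTERFACE OVERLAY_IP
render_linuxbridge_ini() {
    cat <<EOF
[linux_bridge]
physical_interface_mappings = provider:$1

[vxlan]
enable_vxlan = True
local_ip = $2
l2_population = True

[securitygroup]
enable_security_group = True
firewall_driver = neutron.agent.linux.iptables_firewall.IptablesFirewallDriver
EOF
}

# Hypothetical values: second NIC named eth1, overlay traffic on the
# controller address used earlier in this article.
render_linuxbridge_ini eth1 192.168.1.73
```

Check the real interface names with ip addr before filling them in; a wrong name in physical_interface_mappings is a common reason the Linux bridge agent fails to start.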

Configure the layer-3 agent:

#vi /etc/neutron/l3_agent.ini

[DEFAULT]

interface_driver = neutron.agent.linux.interface.BridgeInterfaceDriver

Configure the DHCP agent:

#vi /etc/neutron/dhcp_agent.ini

[DEFAULT]

interface_driver = neutron.agent.linux.interface.BridgeInterfaceDriver

dhcp_driver = neutron.agent.linux.dhcp.Dnsmasq

enable_isolated_metadata = True

Configure the metadata agent:

#vi /etc/neutron/metadata_agent.ini

[DEFAULT]

nova_metadata_ip = controller

metadata_proxy_shared_secret = METADATA_SECRET

Configure the Compute service to use Networking:

#vi /etc/nova/nova.conf

[neutron]

url = http://controller:9696

auth_url = http://controller:35357

auth_type = password

project_domain_name = default

user_domain_name = default

region_name = RegionOne

project_name = service

username = neutron

password = xxxx

service_metadata_proxy = True

metadata_proxy_shared_secret = METADATA_SECRET

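Create the plug-in symbolic link:

#ln -s /etc/neutron/plugins/ml2/ml2_conf.ini /etc/neutron/plugin.ini

Populate the database:

#su -s /bin/sh -c "neutron-db-manage --config-file /etc/neutron/neutron.conf --config-file /etc/neutron/plugins/ml2/ml2_conf.ini upgrade head" neutron

Start the services: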

#systemctl restart openstack-nova-api.service

#systemctl enable neutron-server.service \

neutron-linuxbridge-agent.service neutron-dhcp-agent.service \

neutron-metadata-agent.service

#systemctl start neutron-server.service \

neutron-linuxbridge-agent.service neutron-dhcp-agent.service \

neutron-metadata-agent.service

# systemctl enable neutron-l3-agent.service

#systemctl start neutron-l3-agent.service

5.2 Install and configure the compute node

#yum install openstack-neutron-linuxbridge ebtables ipset

#vi /etc/neutron/neutron.conf

[DEFAULT]

rpc_backend = rabbit

auth_strategy = keystone

[oslo_messaging_rabbit]

rabbit_host = controller

rabbit_userid = openstack

rabbit_password = RABBIT_PASS

[keystone_authtoken]

auth_uri = http://controller:5000

auth_url = http://controller:35357

memcached_servers = controller:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = neutron

password = xxxx

[oslo_concurrency]

lock_path = /var/lib/neutron/tmp

Configure the Linux bridge agent (VXLAN):

#vi /etc/neutron/plugins/ml2/linuxbridge_agent.ini

[linux_bridge]

physical_interface_mappings = provider:PROVIDER_INTERFACE_NAME

[vxlan]

enable_vxlan = True

local_ip = OVERLAY_INTERFACE_IP_ADDRESS

l2_population = True

[securitygroup]

enable_security_group = True

firewall_driver = neutron.agent.linux.iptables_firewall.IptablesFirewallDriver

#vi /etc/nova/nova.conf

[neutron]

url = http://controller:9696

auth_url = http://controller:35357

auth_type = password

project_domain_name = default

user_domain_name = default

region_name = RegionOne

project_name = service

username = neutron

password = xxxx

Start the services:

#systemctl restart openstack-nova-compute.service

#systemctl enable neutron-linuxbridge-agent.service

#systemctl start neutron-linuxbridge-agent.service

5.3 Verify

Load the environment variables:

#. admin-openrc

#neutron ext-list

Normal output means the configuration is correct.

6: Dashboard

6.1 Configure

#yum install openstack-dashboard

#vi /etc/openstack-dashboard/local_settings

OPENSTACK_HOST = "controller"

ALLOWED_HOSTS = ['*', ]

SESSION_ENGINE = 'django.contrib.sessions.backends.cache'

CACHES = {

'default': {

'BACKEND': 'django.core.cache.backends.memcached.MemcachedCache',

'LOCATION': 'controller:11211',

}

}

OPENSTACK_KEYSTONE_URL = "http://%s:5000/v3" % OPENSTACK_HOST

OPENSTACK_KEYSTONE_MULTIDOMAIN_SUPPORT = True

OPENSTACK_API_VERSIONS = {

"identity": 3,

"image": 2,

"volume": 2,

}

OPENSTACK_KEYSTONE_DEFAULT_DOMAIN = "default"

OPENSTACK_KEYSTONE_DEFAULT_ROLE = "user"

Restart the services:

#systemctl restart httpd.service memcached.service

6.2 Log in

Open http://192.168.1.73/dashboard/auth/login in a browser.

Domain: default

User name: admin or demo

Password: the one you set

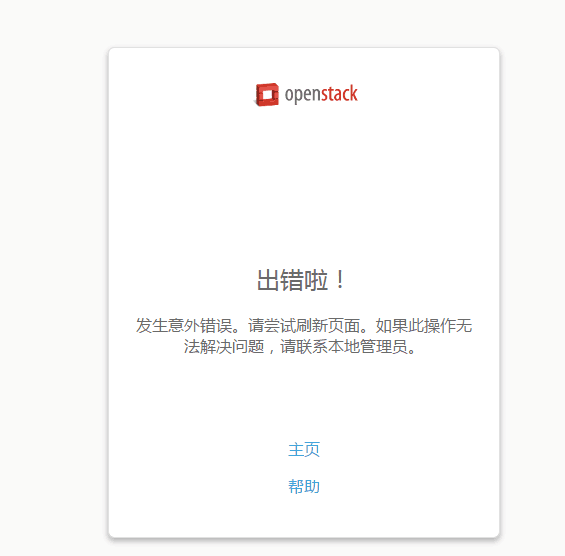

登录后会发现出现一下页面:此处可看http://www.cnblogs.com/yaohong/p/7352386.html中的坑四。

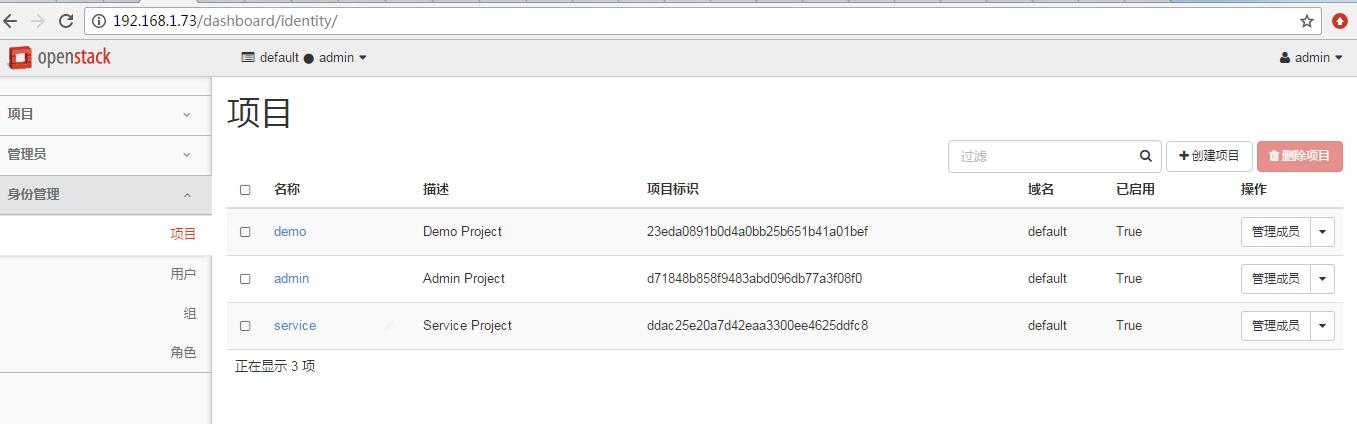

按照坑四解决后会出现一下页面,就可以玩了,但如果是笔记本会运行非常慢,等有了服务器继续玩吧!!

本文网址:http://www.cnblogs.com/yaohong/p/7368297.html

配置完后可尝试已经写好的脚本一键安装模式:http://www.cnblogs.com/yaohong/p/7251852.html
