【Hadoop】Hadoop MR 自定义序列化类

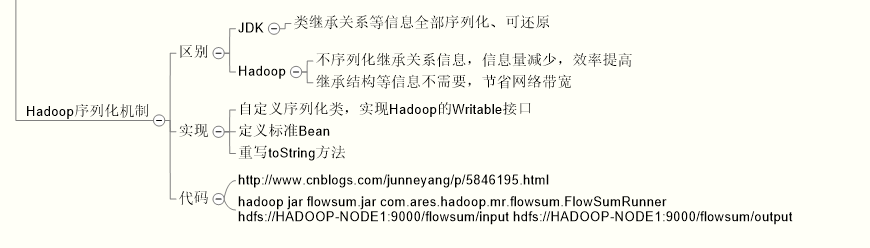

1、基本概念

2、Mapper代码

package com.ares.hadoop.mr.flowsum; import java.io.IOException; import org.apache.hadoop.io.LongWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Mapper;

import org.apache.hadoop.util.StringUtils;

import org.apache.log4j.Logger; import com.ares.hadoop.mr.wordcount.MRTest; //Long, String, String, Long --> LongWritable, Text, Text, LongWritable

public class FlowSumMapper extends Mapper<LongWritable, Text, Text, FlowBean> {

private static final Logger LOGGER = Logger.getLogger(MRTest.class); private String line;

private int length;

private final static char separator = '\t'; private String phoneNum;

private long upFlow;

private long downFlow;

//private long sumFlow; private Text text = new Text();

private FlowBean flowBean = new FlowBean(); @Override

protected void map(LongWritable key, Text value,

Mapper<LongWritable, Text, Text, FlowBean>.Context context)

throws IOException, InterruptedException {

// TODO Auto-generated method stub

//super.map(key, value, context);

line = value.toString();

String[] fields = StringUtils.split(line, separator);

length = fields.length;

if (length != ) {

LOGGER.error(key.get() + ", " + line + " LENGTH INVALID, IGNORE...");

} phoneNum = fields[];

try {

upFlow = Long.parseLong(fields[length-]);

downFlow = Long.parseLong(fields[length-]);

//sumFlow = upFlow + downFlow;

} catch (Exception e) {

// TODO: handle exception

LOGGER.error(key.get() + ", " + line + " FLOW DATA INVALID, IGNORE...");

} flowBean.setPhoneNum(phoneNum);

flowBean.setUpFlow(upFlow);

flowBean.setDownFlow(downFlow);

//flowBean.setSumFlow(sumFlow); text.set(phoneNum);

context.write(text, flowBean);

}

}

3、Reducer代码

package com.ares.hadoop.mr.flowsum; import java.io.IOException; import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Reducer; public class FlowSumReducer extends Reducer<Text, FlowBean, Text, FlowBean>{

//private static final Logger LOGGER = Logger.getLogger(MRTest.class); private FlowBean flowBean = new FlowBean(); @Override

protected void reduce(Text key, Iterable<FlowBean> values,

Reducer<Text, FlowBean, Text, FlowBean>.Context context)

throws IOException, InterruptedException {

// TODO Auto-generated method stub

//super.reduce(arg0, arg1, arg2);

long upFlowCounter = ;

long downFlowCounter = ; for (FlowBean flowBean : values) {

upFlowCounter += flowBean.getUpFlow();

downFlowCounter += flowBean.getDownFlow();

}

flowBean.setPhoneNum(key.toString());

flowBean.setUpFlow(upFlowCounter);

flowBean.setDownFlow(downFlowCounter);

flowBean.setSumFlow(upFlowCounter + downFlowCounter); context.write(key, flowBean);

}

}

4、序列化Bean代码

package com.ares.hadoop.mr.flowsum; import java.io.DataInput;

import java.io.DataOutput;

import java.io.IOException; import org.apache.hadoop.io.Writable; public class FlowBean implements Writable {

private String phoneNum;

private long upFlow;

private long downFlow;

private long sumFlow; public FlowBean() {

// TODO Auto-generated constructor stub

}

// public FlowBean(String phoneNum, long upFlow, long downFlow, long sumFlow) {

// super();

// this.phoneNum = phoneNum;

// this.upFlow = upFlow;

// this.downFlow = downFlow;

// this.sumFlow = sumFlow;

// } public String getPhoneNum() {

return phoneNum;

} public void setPhoneNum(String phoneNum) {

this.phoneNum = phoneNum;

} public long getUpFlow() {

return upFlow;

} public void setUpFlow(long upFlow) {

this.upFlow = upFlow;

} public long getDownFlow() {

return downFlow;

} public void setDownFlow(long downFlow) {

this.downFlow = downFlow;

} public long getSumFlow() {

return sumFlow;

} public void setSumFlow(long sumFlow) {

this.sumFlow = sumFlow;

} @Override

public void readFields(DataInput in) throws IOException {

// TODO Auto-generated method stub

phoneNum = in.readUTF();

upFlow = in.readLong();

downFlow = in.readLong();

sumFlow = in.readLong();

} @Override

public void write(DataOutput out) throws IOException {

// TODO Auto-generated method stub

out.writeUTF(phoneNum);

out.writeLong(upFlow);

out.writeLong(downFlow);

out.writeLong(sumFlow);

} @Override

public String toString() {

return "" + upFlow + "\t" + downFlow + "\t" + sumFlow;

} }

5、TestRunner代码

package com.ares.hadoop.mr.flowsum; import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.conf.Configured;

import org.apache.hadoop.fs.Path;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Job;

import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;

import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

import org.apache.hadoop.util.Tool;

import org.apache.hadoop.util.ToolRunner;

import org.apache.log4j.Logger; public class FlowSumRunner extends Configured implements Tool {

private static final Logger LOGGER = Logger.getLogger(FlowSumRunner.class); @Override

public int run(String[] args) throws Exception {

// TODO Auto-generated method stub

LOGGER.debug("MRTest: MRTest STARTED..."); if (args.length != ) {

LOGGER.error("MRTest: ARGUMENTS ERROR");

System.exit(-);

} Configuration conf = new Configuration();

//FOR Eclipse JVM Debug

//conf.set("mapreduce.job.jar", "flowsum.jar");

Job job = Job.getInstance(conf); // JOB NAME

job.setJobName("flowsum"); // JOB MAPPER & REDUCER

job.setJarByClass(FlowSumRunner.class);

job.setMapperClass(FlowSumMapper.class);

job.setReducerClass(FlowSumReducer.class); // MAP & REDUCE

job.setOutputKeyClass(Text.class);

job.setOutputValueClass(FlowBean.class);

// MAP

job.setMapOutputKeyClass(Text.class);

job.setMapOutputValueClass(FlowBean.class); // JOB INPUT & OUTPUT PATH

//FileInputFormat.addInputPath(job, new Path(args[0]));

FileInputFormat.setInputPaths(job, args[]);

FileOutputFormat.setOutputPath(job, new Path(args[])); // VERBOSE OUTPUT

if (job.waitForCompletion(true)) {

LOGGER.debug("MRTest: MRTest SUCCESSFULLY...");

return ;

} else {

LOGGER.debug("MRTest: MRTest FAILED...");

return ;

} } public static void main(String[] args) throws Exception {

int result = ToolRunner.run(new Configuration(), new FlowSumRunner(), args);

System.exit(result);

} }

参考资料:

http://www.cnblogs.com/robert-blue/p/4157768.html

http://www.cnblogs.com/qlee/archive/2011/05/18/2049610.html

http://blog.163.com/lzm07@126/blog/static/25705468201331611857190/

http://blog.csdn.net/lastsweetop/article/details/9193907

【Hadoop】Hadoop MR 自定义序列化类的更多相关文章

- Hadoop【MR开发规范、序列化】

Hadoop[MR开发规范.序列化] 目录 Hadoop[MR开发规范.序列化] 一.MapReduce编程规范 1.Mapper阶段 2.Reducer阶段 3.Driver阶段 二.WordCou ...

- hadoop提交作业自定义排序和分组

现有数据如下: 3 3 3 2 3 1 2 2 2 1 1 1 要求为: 先按第一列从小到大排序,如果第一列相同,按第二列从小到大排序 如果是hadoop默认的排序方式,只能比较key,也就是第一列, ...

- hadoop深入研究:(十三)——序列化框架

hadoop深入研究:(十三)--序列化框架 Mapreduce之序列化框架(转自http://blog.csdn.net/lastsweetop/article/details/9376495) 框 ...

- 在hadoop作业中自定义分区和归约

当遇到有特殊的业务需求时,需要对hadoop的作业进行分区处理 那么我们可以通过自定义的分区类来实现 还是通过单词计数的例子,JMapper和JReducer的代码不变,只是在JSubmit中改变了设 ...

- 【Hadoop】MapReduce自定义分区Partition输出各运营商的手机号码

MapReduce和自定义Partition MobileDriver主类 package Partition; import org.apache.hadoop.io.NullWritable; i ...

- 为什么hadoop中用到的序列化不是java的serilaziable接口去序列化而是使用Writable序列化框架

继上一个模块之后,此次分析的内容是来到了Hadoop IO相关的模块了,IO系统的模块可谓是一个比较大的模块,在Hadoop Common中的io,主要包括2个大的子模块构成,1个是以Writable ...

- Hadoop【MR的分区、排序、分组】

[toc] 一.分区 问题:按照条件将结果输出到不同文件中 自定义分区步骤 1.自定义继承Partitioner类,重写getPartition()方法 2.在job驱动Driver中设置自定义的Pa ...

- hadoop修改MR的提交的代码程序的副本数

hadoop修改MR的提交的代码程序的副本数 Under-Replicated Blocks的数量很多,有7万多个.hadoop fsck -blocks 检查发现有很多replica missing ...

- Hadoop streaming使用自定义python版本和第三方库

在使用Hadoop的过程中,遇到了自带python版本比较老的问题. 下面以python3.7为例,演示如何在hadoop上使用自定义的python版本以及第三方库. 1.在https://www.p ...

随机推荐

- 中英文混截,一个中文相当于n个英文

项目中遇到这么个需求,截取中英文字符串,一个中文相当于2个英文,全英文时截取12个英文字母,全中文时是6个中文汉字,中英文混合时是12个字节,在网上有找到这样的解决方案,但我没能静下心来研究懂,于是自 ...

- Codeforces Round #356 (Div. 2) C

C. Bear and Prime 100 time limit per test 1 second memory limit per test 256 megabytes input standar ...

- Extra Judicial Operation

Description The Suitably Protected Programming Contest (SPPC) is a multi-site contest in which conte ...

- code forces 999C Alphabetic Removals

C. Alphabetic Removals time limit per test 2 seconds memory limit per test 256 megabytes input stand ...

- stringutil的方法

StringUtils 源码,使用的是commons-lang3-3.1包. 下载地址 http://commons.apache.org/lang/download_lang.cgi 以下是Stri ...

- vue倒计时页面

https://www.cnblogs.com/sichaoyun/p/6645042.html https://blog.csdn.net/sinat_17775997/article/detail ...

- ES6特性以及代码demo

块级作用域let if(true){ let fruit = ‘apple’; } consoloe.log(fruit);//会报错,因为let只在if{ }的作用域有效,也就是块级作用域 恒量co ...

- iframe操作(跨域解决等)

note:当页面内嵌入一个iframe实际上是在dom上新建了一个新的完整的window对象 iframe中取得主窗体 window.top (顶级窗口的window对象) window.parent ...

- [ CodeVS冲杯之路 ] P1214

不充钱,你怎么AC? 题目:http://codevs.cn/problem/1214/ 这道题类似于最长区间覆盖,仅仅是将最长区间改成了最多线段,我们贪心即可 先将线段直接右边-1,然后按左边为第一 ...

- Linux内核内存管理-内存访问与缺页中断【转】

转自:https://yq.aliyun.com/articles/5865 摘要: 简单描述了x86 32位体系结构下Linux内核的用户进程和内核线程的线性地址空间和物理内存的联系,分析了高端内存 ...