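ELK Log Analysis System: Installing and Configuring the Latest Kibana 7.x

3. Introduction to Kibana

Kibana gives you the freedom to choose how your data is presented. The core Kibana product ships with a set of classic features: histograms, line charts, pie charts, sunburst charts, and more.

3.1 Package download URL: https://www.elastic.co/cn/downloads/kibana

3.1.1 The package is a tar archive. Extract it into the /application directory and create a symbolic link.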

ln -s /application/kibana-7.3.-linux-x86_64 /application/kibana
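The extract-and-link step could be scripted roughly as follows. This is a minimal sketch rather than the author's exact procedure: `install_kibana` is a hypothetical helper name, and it assumes the archive unpacks into a single top-level directory.

```shell
# Sketch: unpack a Kibana tarball and point a stable symlink at it.
# install_kibana is a hypothetical helper, not something Kibana ships.
install_kibana() {
  local tarball="$1" dest="$2" link="$3" top
  mkdir -p "$dest" || return 1
  # Assumption: the first archive entry names the top-level directory.
  top="$(tar -tzf "$tarball" | head -n 1 | cut -d/ -f1)" || return 1
  tar -xzf "$tarball" -C "$dest" || return 1
  # -sfn replaces an existing link instead of creating a new one inside it.
  ln -sfn "$dest/$top" "$link"
}
# Example: install_kibana <downloaded-tarball> /application /application/kibana
```

Using `ln -sfn` keeps the command safe to rerun when a later version is unpacked next to the old one.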

3.2 Edit the Kibana configuration file /application/kibana/config/kibana.yml

# Kibana is served by a back end server. This setting specifies the port to use.
server.port: 5601  # Kibana port

# Specifies the address to which the Kibana server will bind. IP addresses and host names are both valid values.
# The default is 'localhost', which usually means remote machines will not be able to connect.
# To allow connections from remote users, set this parameter to a non-loopback address.
server.host: "10.0.0.169"  # IP address of the host to bind to

# Enables you to specify a path to mount Kibana at if you are running behind a proxy.
# Use the `server.rewriteBasePath` setting to tell Kibana if it should remove the basePath
# from requests it receives, and to prevent a deprecation warning at startup.
# This setting cannot end in a slash.
#server.basePath: ""

# Specifies whether Kibana should rewrite requests that are prefixed with
# `server.basePath` or require that they are rewritten by your reverse proxy.
# This setting was effectively always `false` before Kibana 6.3 and will
# default to `true` starting in Kibana 7.0.
#server.rewriteBasePath: false

# The maximum payload size in bytes for incoming server requests.
#server.maxPayloadBytes:

# The Kibana server's name. This is used for display purposes.
#server.name: "your-hostname"

# The URLs of the Elasticsearch instances to use for all your queries.
elasticsearch.hosts: ["http://10.0.0.169:9200"]  # Elasticsearch host

# When this setting's value is true Kibana uses the hostname specified in the server.host
# setting. When the value of this setting is false, Kibana uses the hostname of the host
# that connects to this Kibana instance.
#elasticsearch.preserveHost: true

# Kibana uses an index in Elasticsearch to store saved searches, visualizations and
# dashboards. Kibana creates a new index if the index doesn't already exist.
kibana.index: ".kibana"  # uncommented to enable this option

# The default application to load.
#kibana.defaultAppId: "home"

# If your Elasticsearch is protected with basic authentication, these settings provide
# the username and password that the Kibana server uses to perform maintenance on the Kibana
# index at startup. Your Kibana users still need to authenticate with Elasticsearch, which
# is proxied through the Kibana server.
#elasticsearch.username: "kibana"

#elasticsearch.password: "pass"

# Enables SSL and paths to the PEM-format SSL certificate and SSL key files, respectively.
# These settings enable SSL for outgoing requests from the Kibana server to the browser.
#server.ssl.enabled: false
#server.ssl.certificate: /path/to/your/server.crt
#server.ssl.key: /path/to/your/server.key

# Optional settings that provide the paths to the PEM-format SSL certificate and key files.
# These files validate that your Elasticsearch backend uses the same key files.
#elasticsearch.ssl.certificate: /path/to/your/client.crt
#elasticsearch.ssl.key: /path/to/your/client.key

# Optional setting that enables you to specify a path to the PEM file for the certificate
# authority for your Elasticsearch instance.
#elasticsearch.ssl.certificateAuthorities: [ "/path/to/your/CA.pem" ]

# To disregard the validity of SSL certificates, change this setting's value to 'none'.
#elasticsearch.ssl.verificationMode: full

# Time in milliseconds to wait for Elasticsearch to respond to pings. Defaults to the value of
# the elasticsearch.requestTimeout setting.
#elasticsearch.pingTimeout:

# Time in milliseconds to wait for responses from the back end or Elasticsearch. This value
# must be a positive integer.
#elasticsearch.requestTimeout:

# List of Kibana client-side headers to send to Elasticsearch. To send *no* client-side
# headers, set this value to [] (an empty list).
#elasticsearch.requestHeadersWhitelist: [ authorization ]

# Header names and values that are sent to Elasticsearch. Any custom headers cannot be overwritten
# by client-side headers, regardless of the elasticsearch.requestHeadersWhitelist configuration.
#elasticsearch.customHeaders: {}

# Time in milliseconds for Elasticsearch to wait for responses from shards. Set to 0 to disable.
#elasticsearch.shardTimeout:

# Time in milliseconds to wait for Elasticsearch at Kibana startup before retrying.
#elasticsearch.startupTimeout:

# Logs queries sent to Elasticsearch. Requires logging.verbose set to true.
#elasticsearch.logQueries: false

# Specifies the path where Kibana creates the process ID file.
#pid.file: /var/run/kibana.pid

# Enables you to specify a file where Kibana stores log output.
#logging.dest: stdout

# Set the value of this setting to true to suppress all logging output.
#logging.silent: false

# Set the value of this setting to true to suppress all logging output other than error messages.
#logging.quiet: false

# Set the value of this setting to true to log all events, including system usage information
# and all requests.
#logging.verbose: false

# Set the interval in milliseconds to sample system and process performance
# metrics. Minimum is 100ms. Defaults to 5000.
#ops.interval:

# Specifies locale to be used for all localizable strings, dates and number formats.
# Supported languages are the following: English - en (the default), Chinese - zh-CN.
i18n.locale: "zh-CN"  # Kibana's UI text defaults to English; this switches it to Chinese
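Because the stock kibana.yml is mostly comments, it can help to print only the lines that are actually in effect after editing. A minimal sketch, assuming a POSIX shell with grep; `active_settings` is a hypothetical helper name, not a Kibana command.

```shell
# Sketch: show only the active (uncommented, non-blank) lines of a config file.
# active_settings is a hypothetical helper name.
active_settings() {
  # Drop blank lines and lines whose first non-space character is '#'.
  grep -Ev '^[[:space:]]*(#|$)' "$1"
}
# Example: active_settings /application/kibana/config/kibana.yml
```

For the file in this section, the active lines should be the five settings that were changed: server.port, server.host, elasticsearch.hosts, kibana.index and i18n.locale.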

3.3 Start Kibana

If you start the service as the root user, you must append the --allow-root option.

[root@harlan_ansible ~]# /application/kibana/bin/kibana --allow-root

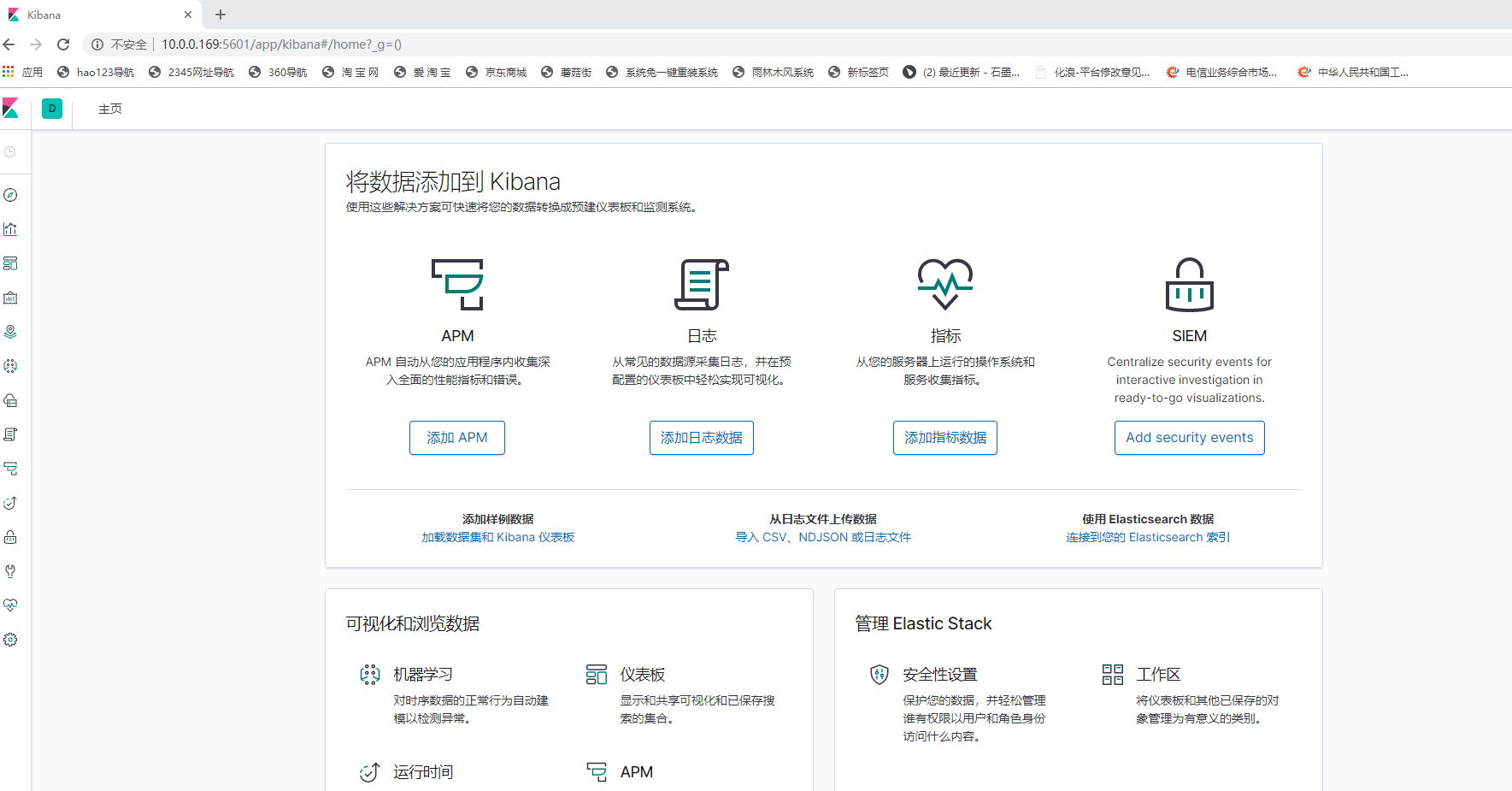

3.4 Open http://10.0.0.169:5601 in a browser

If the Kibana page loads, the service has been installed and configured successfully.

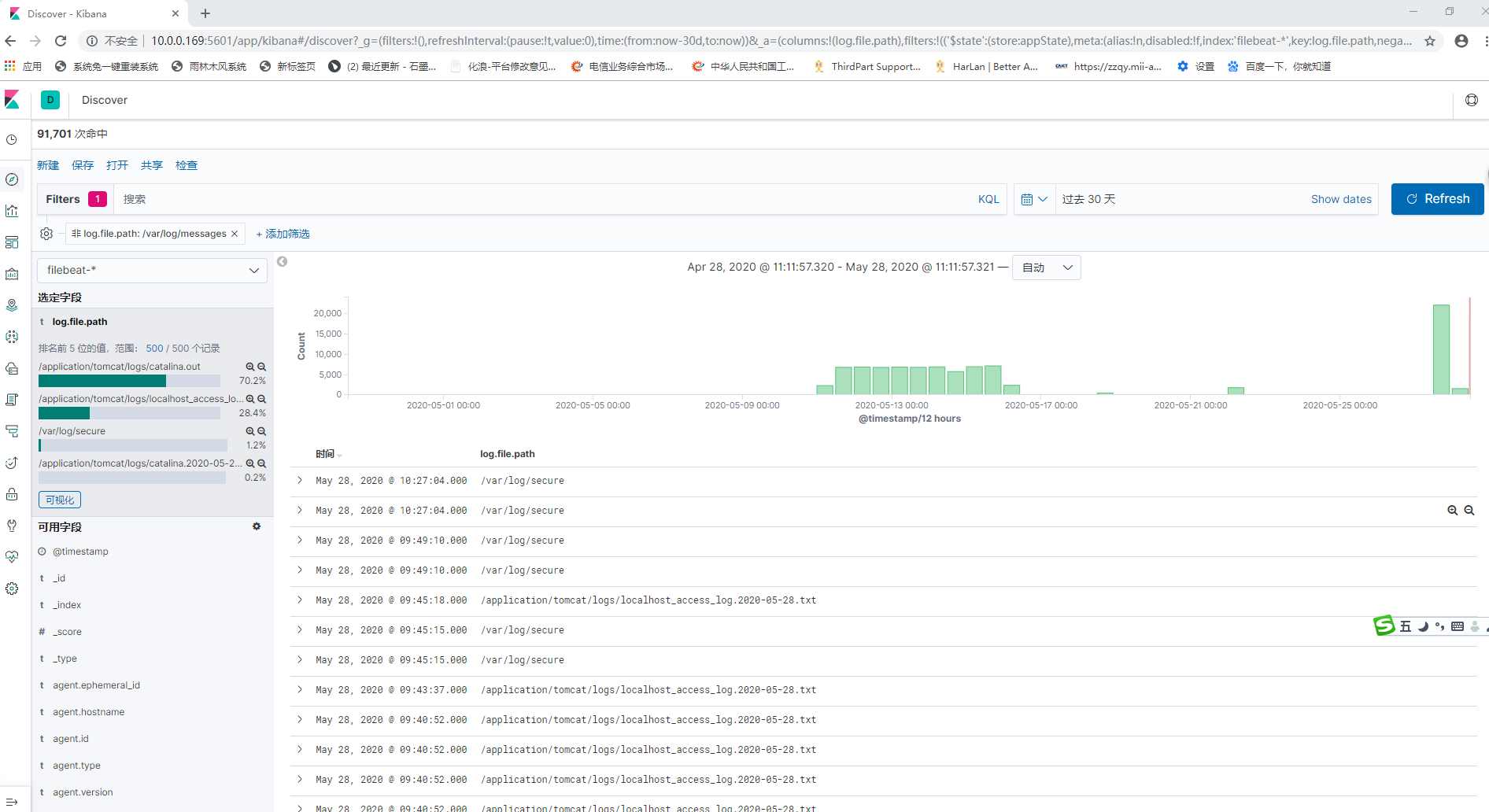
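The same check can be made from the command line before opening a browser. The sketch below polls a URL until it answers successfully. `wait_for_http` is a hypothetical helper name, and it assumes curl is installed; `/api/status` is Kibana's status endpoint.

```shell
# Sketch: poll a URL until it returns a successful HTTP response.
# wait_for_http is a hypothetical helper; arguments are URL, attempts, delay in seconds.
wait_for_http() {
  local url="$1" tries="${2:-30}" delay="${3:-2}" i=0
  while [ "$i" -lt "$tries" ]; do
    # -f makes curl fail on HTTP error codes; -s hides the progress meter.
    if curl -fs -o /dev/null --max-time 5 "$url"; then
      return 0
    fi
    i=$((i + 1))
    sleep "$delay"
  done
  return 1
}
# Example: wait_for_http "http://10.0.0.169:5601/api/status" 30 2
```

Kibana can take a while to start, so a retry loop is usually more useful here than a single request.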

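3.5 With Kibana reachable in the browser, we can install Beats directly on each host whose logs we want to collect; there is no need to install the Logstash package on the ELK host.

Logs from different services are collected by editing the Beats configuration files.

3.5.1 Example: collecting the Tomcat access logs from a client machine

Client IP: 10.0.0.175

ELK server IP: 10.0.0.169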

curl -L -O https://artifacts.elastic.co/downloads/beats/filebeat/filebeat-7.3.2-x86_64.rpm

sudo rpm -vi filebeat-7.3.2-x86_64.rpm

Edit the configuration

Modify /etc/filebeat/filebeat.yml to set the connection information:

###################### Filebeat Configuration Example #########################

# This file is an example configuration file highlighting only the most common

# options. The filebeat.reference.yml file from the same directory contains all the

# supported options with more comments. You can use it as a reference.

#

# You can find the full configuration reference here:

# https://www.elastic.co/guide/en/beats/filebeat/index.html

# For more available modules and options, please see the filebeat.reference.yml sample

# configuration file.

#=========================== Filebeat inputs =============================

filebeat.inputs:

# Each - is an input. Most options can be set at the input level, so
# you can use different inputs for various configurations.
# Below are the input specific configurations.

- type: log

  # Change to true to enable this input configuration.
  enabled: true  # enable the input: changed from false to true

  # Paths that should be crawled and fetched. Glob based paths.
  paths:
    - /var/log/*.log  # default system log path
    #- c:\programdata\elasticsearch\logs\*

# Exclude lines. A list of regular expressions to match. It drops the lines that are

# matching any regular expression from the list.

#exclude_lines: ['^DBG']

# Include lines. A list of regular expressions to match. It exports the lines that are

# matching any regular expression from the list.

#include_lines: ['^ERR', '^WARN']

# Exclude files. A list of regular expressions to match. Filebeat drops the files that

# are matching any regular expression from the list. By default, no files are dropped.

#exclude_files: ['.gz$']

# Optional additional fields. These fields can be freely picked

# to add additional information to the crawled log files for filtering

#fields:

# level: debug

# review: 1

### Multiline options

# Multiline can be used for log messages spanning multiple lines. This is common

# for Java Stack Traces or C-Line Continuation

# The regexp Pattern that has to be matched. The example pattern matches all lines starting with [

#multiline.pattern: ^\[

# Defines if the pattern set under pattern should be negated or not. Default is false.

#multiline.negate: false

# Match can be set to "after" or "before". It is used to define if lines should be appended to a pattern

# that was (not) matched before or after or as long as a pattern is not matched based on negate.

# Note: After is the equivalent to previous and before is the equivalent to next in Logstash

#multiline.match: after

#============================= Filebeat modules ===============================

filebeat.config.modules:
  # Glob pattern for configuration loading
  path: ${path.config}/modules.d/*.yml

  # Set to true to enable config reloading
  reload.enabled: false

# Period on which files under path should be checked for changes

#reload.period: 10s

#==================== Elasticsearch template setting ==========================

setup.template.settings:
  index.number_of_shards: 1
  #index.codec: best_compression
  #_source.enabled: false


#================================ General =====================================

# The name of the shipper that publishes the network data. It can be used to group

# all the transactions sent by a single shipper in the web interface.

#name:

# The tags of the shipper are included in their own field with each

# transaction published.

#tags: ["service-X", "web-tier"]

# Optional fields that you can specify to add additional information to the

# output.

#fields:

# env: staging

#============================== Dashboards =====================================

# These settings control loading the sample dashboards to the Kibana index. Loading

# the dashboards is disabled by default and can be enabled either by setting the

# options here or by using the `setup` command.

#setup.dashboards.enabled: false

# The URL from where to download the dashboards archive. By default this URL

# has a value which is computed based on the Beat name and version. For released

# versions, this URL points to the dashboard archive on the artifacts.elastic.co

# website.

#setup.dashboards.url:

#============================== Kibana =====================================

# Starting with Beats version 6.0.0, the dashboards are loaded via the Kibana API.

# This requires a Kibana endpoint configuration.

setup.kibana:

  # Kibana Host
  # Scheme and port can be left out and will be set to the default (http and 5601)
  # In case you specify an additional path, the scheme is required: http://localhost:5601/path
  # IPv6 addresses should always be defined as: https://[2001:db8::1]:5601
  host: "10.0.0.169:5601"  # set to the Kibana host's IP address

  # Kibana Space ID
  # ID of the Kibana Space into which the dashboards should be loaded. By default,
  # the Default Space will be used.
  #space.id:

#============================= Elastic Cloud ==================================

# These settings simplify using Filebeat with the Elastic Cloud (https://cloud.elastic.co/).

# The cloud.id setting overwrites the `output.elasticsearch.hosts` and

# `setup.kibana.host` options.

# You can find the `cloud.id` in the Elastic Cloud web UI.

#cloud.id:

# The cloud.auth setting overwrites the `output.elasticsearch.username` and

# `output.elasticsearch.password` settings. The format is `<user>:<pass>`.

#cloud.auth:

#================================ Outputs =====================================

# Configure what output to use when sending the data collected by the beat.

#-------------------------- Elasticsearch output ------------------------------

output.elasticsearch:
  # Array of hosts to connect to.
  hosts: ["10.0.0.169:9200"]  # IP address of the Elasticsearch host

  # Optional protocol and basic auth credentials.
  #protocol: "https"
  username: "es"  # the user that starts Elasticsearch
  password: "harlan-herine"  # password of the user that starts Elasticsearch

#----------------------------- Logstash output --------------------------------

#output.logstash:

# The Logstash hosts

#hosts: ["localhost:5044"]

# Optional SSL. By default is off.

# List of root certificates for HTTPS server verifications

#ssl.certificate_authorities: ["/etc/pki/root/ca.pem"]

# Certificate for SSL client authentication

#ssl.certificate: "/etc/pki/client/cert.pem"

# Client Certificate Key

#ssl.key: "/etc/pki/client/cert.key"

#================================ Processors =====================================

# Configure processors to enhance or manipulate events generated by the beat.

processors:
  - add_host_metadata: ~
  - add_cloud_metadata: ~

#================================ Logging =====================================

# Sets log level. The default log level is info.

# Available log levels are: error, warning, info, debug

#logging.level: debug

# At debug level, you can selectively enable logging only for some components.

# To enable all selectors use ["*"]. Examples of other selectors are "beat",

# "publish", "service".

#logging.selectors: ["*"]

#============================== Xpack Monitoring ===============================

# filebeat can export internal metrics to a central Elasticsearch monitoring

# cluster. This requires xpack monitoring to be enabled in Elasticsearch. The

# reporting is disabled by default.

# Set to true to enable the monitoring reporter.

#monitoring.enabled: false

# Sets the UUID of the Elasticsearch cluster under which monitoring data for this

# Filebeat instance will appear in the Stack Monitoring UI. If output.elasticsearch

# is enabled, the UUID is derived from the Elasticsearch cluster referenced by output.elasticsearch.

#monitoring.cluster_uuid:

# Uncomment to send the metrics to Elasticsearch. Most settings from the

# Elasticsearch output are accepted here as well.

# Note that the settings should point to your Elasticsearch *monitoring* cluster.

# Any setting that is not set is automatically inherited from the Elasticsearch

# output configuration, so if you have the Elasticsearch output configured such

# that it is pointing to your Elasticsearch monitoring cluster, you can simply

# uncomment the following line.

#monitoring.elasticsearch:

#================================= Migration ==================================

# This allows to enable 6.7 migration aliases

#migration.6_to_7.enabled: true

Here, <password> is the password of the elastic user, <es_url> is the URL of Elasticsearch, and <kibana_url> is the URL of Kibana.

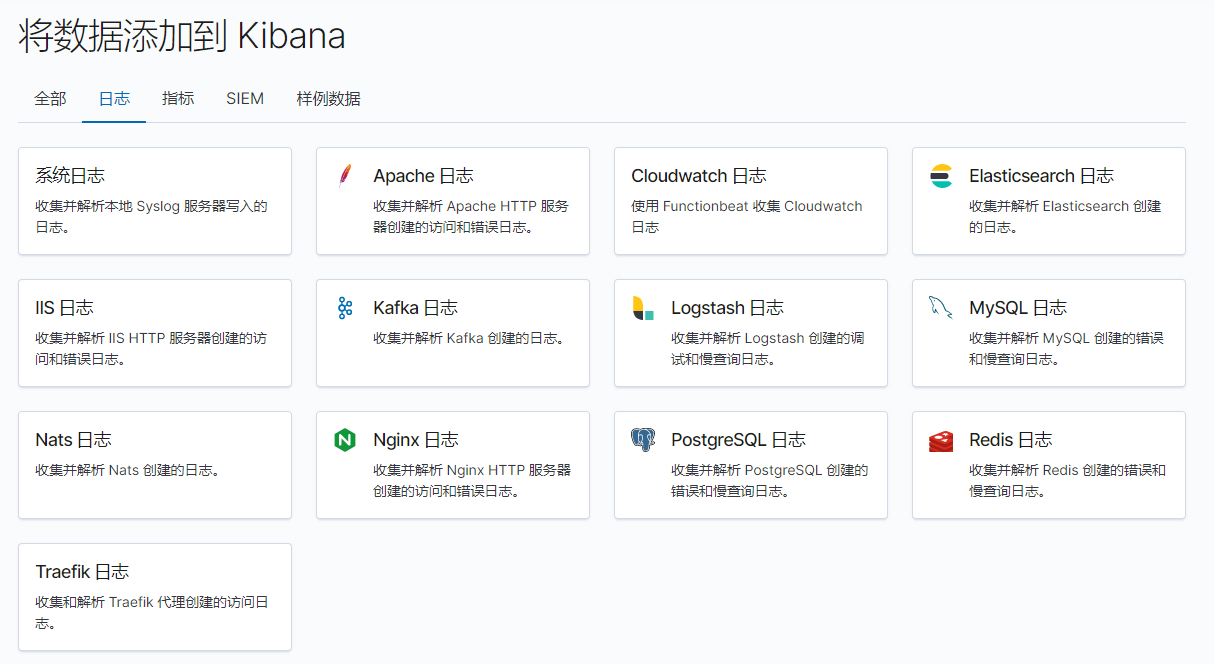
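Before starting the service, the edited file can be checked with Filebeat's own `test config` and `test output` subcommands. The wrapper below is only a sketch: `check_filebeat` is a hypothetical helper name, and the default path assumes the RPM layout used in this section.

```shell
# Sketch: validate filebeat.yml and the connection to the configured output.
# check_filebeat is a hypothetical helper name.
check_filebeat() {
  local config="${1:-/etc/filebeat/filebeat.yml}"
  if ! command -v filebeat >/dev/null 2>&1; then
    echo "filebeat not found on PATH" >&2
    return 127
  fi
  # "test config" parses the file; "test output" tries to reach Elasticsearch.
  filebeat test config -c "$config" && filebeat test output -c "$config"
}
# Example: sudo sh -c '. ./check.sh; check_filebeat'   (check.sh is a hypothetical file holding the function)
```

A YAML indentation or spelling mistake in the file is typically reported by the first command, and an unreachable host or rejected credentials by the second.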

Enable and configure the apache module to collect the Tomcat logs

filebeat modules enable apache

Modify the settings in /etc/filebeat/modules.d/apache.yml.

[root@harlan-web ~]# vim /etc/filebeat/modules.d/apache.yml

# Module: apache
# Docs: https://www.elastic.co/guide/en/beats/filebeat/7.3/filebeat-module-apache.html

- module: apache
  # Access logs
  access:
    enabled: true
    var.paths: ["/application/apache-tomcat-8.5.42/logs/*"]  # directory where the web server's Tomcat logs are stored

    # Set custom paths for the log files. If left empty,
    # Filebeat will choose the paths depending on your OS.
    #var.paths:

  # Error logs
  error:
    enabled: true

    # Set custom paths for the log files. If left empty,
    # Filebeat will choose the paths depending on your OS.
    #var.paths:
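The `var.paths` pattern above ends in `logs/*`, so it matches every file in the Tomcat log directory, not only the access logs. A quick way to see what a pattern picks up is to expand it in the shell. This is a sketch under assumptions: `list_log_matches` is a hypothetical helper, and it relies on sh/bash expanding an unquoted variable as a glob, which may differ from Filebeat's own glob handling in edge cases.

```shell
# Sketch: list the readable regular files that a glob pattern matches.
# list_log_matches is a hypothetical helper name.
list_log_matches() {
  local f
  # $1 is deliberately unquoted so that sh/bash expands the glob.
  for f in $1; do
    if [ -f "$f" ] && [ -r "$f" ]; then
      printf '%s\n' "$f"
    fi
  done
}
# Example: list_log_matches '/application/apache-tomcat-8.5.42/logs/*'
```

If the list contains files you do not want shipped, a narrower pattern in `var.paths` is one option.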

Start Filebeat

The setup command loads the Kibana dashboards. If the dashboards are already set up, omit this command.

sudo filebeat setup

sudo systemctl start filebeat.service

Confirm that data has been received from the Filebeat apache module

Once all the steps are complete, you can explore your data at any time.
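Besides looking in Kibana, one way to confirm that data arrived is to ask Elasticsearch whether any Filebeat indices exist. The sketch below counts index names read from standard input. `count_filebeat_indices` is a hypothetical helper, and it assumes Filebeat's default index naming, which starts with `filebeat-`.

```shell
# Sketch: count index names on stdin that start with "filebeat-".
# count_filebeat_indices is a hypothetical helper name.
count_filebeat_indices() {
  # grep -c prints the number of matching lines; it exits non-zero when the count is 0.
  grep -c '^filebeat-'
}
# Example (add -u user:password if Elasticsearch requires authentication):
# curl -s 'http://10.0.0.169:9200/_cat/indices?h=index' | count_filebeat_indices
```

A count of zero a few minutes after starting the service suggests checking the Filebeat logs and the output settings in filebeat.yml.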
