Spark SQL: Fast Offline Data Analysis

Copy hive-site.xml into Spark's conf directory

Open the hive-site.xml file in Spark's conf directory

Add this configuration to it (I configured Spark the same way on all three nodes)
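A common choice for this configuration is the metastore address, `hive.metastore.uris`, which points Spark at the Hive metastore service that is started further down. As a hedged sketch, assuming the metastore runs on bigdata-pro01.kfk.com at Hive's default metastore port 9083, a standalone Spark application can pass the same setting when it builds its session (`HiveMetastoreSession` is an illustrative name):

```scala
import org.apache.spark.sql.SparkSession

object HiveMetastoreSession {
  // Assumption: the Hive metastore service runs on bigdata-pro01.kfk.com, default port 9083.
  val MetastoreUri = "thrift://bigdata-pro01.kfk.com:9083"

  def build(appName: String): SparkSession =
    SparkSession.builder()
      .appName(appName)
      // Same key as the hive.metastore.uris entry in hive-site.xml.
      .config("hive.metastore.uris", MetastoreUri)
      // Without this call Spark uses its own in-memory catalog instead of the Hive metastore.
      .enableHiveSupport()
      .getOrCreate()

  def main(args: Array[String]): Unit = {
    val spark = build("spark-sql-hive-check")
    // Databases created in Hive (for example kfk) should be listed here.
    spark.sql("show databases").show()
    spark.stop()
  }
}
```

In spark-shell none of this is needed: the shell already creates a Hive-enabled `spark` session from the hive-site.xml in the conf directory.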

Copy the MySQL connector jar that Hive uses into Spark's jars directory

Check the Hadoop settings in spark-env.sh

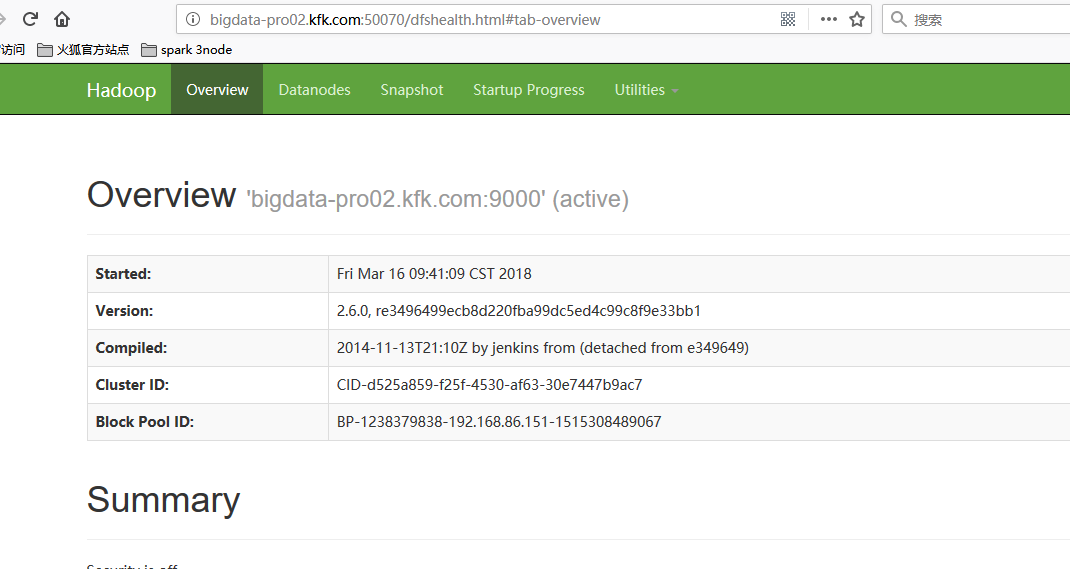

Check whether HDFS is running

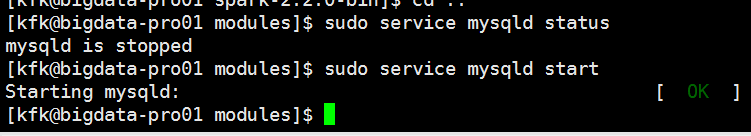

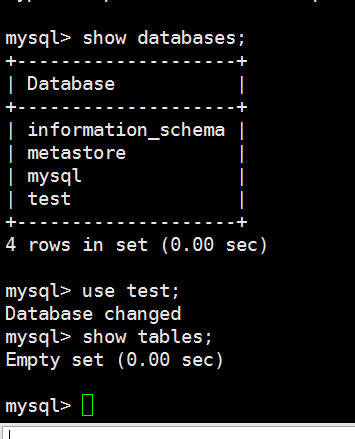

Start the MySQL service

Start the Hive metastore service

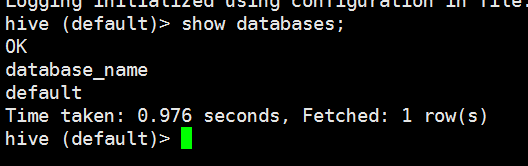

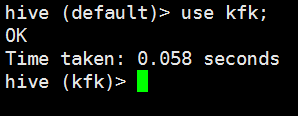

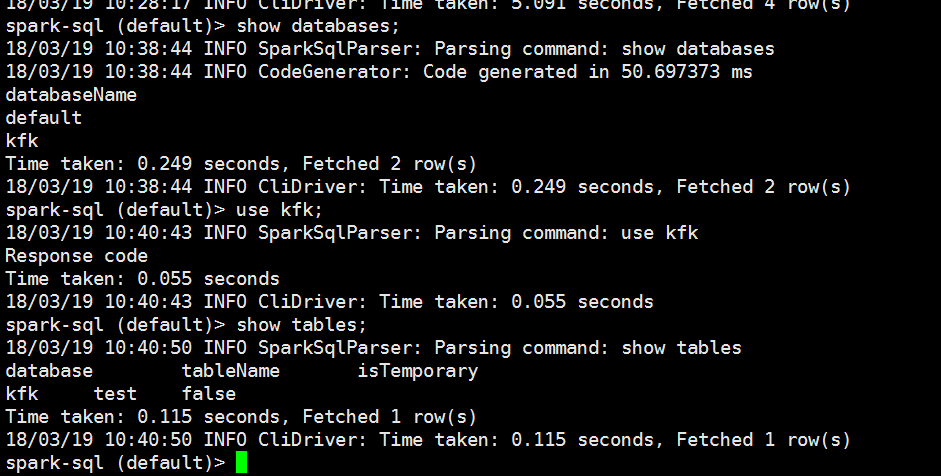

Start Hive

Create a database of your own

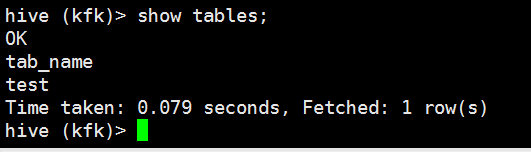

Create a table

create table if not exists test(userid string, username string) ROW FORMAT DELIMITED FIELDS TERMINATED BY ' ' STORED AS textfile;

First, create a data file on the local file system

Load the data into this table

load data local inpath "/opt/datas/kfk.txt" into table test;
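Because the table is declared with `FIELDS TERMINATED BY ' '`, each line of kfk.txt has to hold a userid and a username separated by a single space. The actual contents of the file are not shown, so the rows below are hypothetical. This pure-Scala sketch writes a file in that layout and splits lines the way the table reads them; a line with a missing field yields `None`, which Hive reports as NULL (a `LazyStruct: Missing fields!` message of the kind printed in the log later in this post points to such a line):

```scala
import java.nio.charset.StandardCharsets
import java.nio.file.{Files, Path}
import scala.collection.JavaConverters._

object KfkData {
  // Hypothetical sample rows: "<userid> <username>", one record per line.
  val SampleRows: Seq[String] = Seq("0001 spark", "0002 hive", "0003 hbase")

  /** Writes the rows as a UTF-8 text file, like the one passed to LOAD DATA LOCAL INPATH. */
  def write(path: Path, rows: Seq[String]): Path =
    Files.write(path, rows.asJava, StandardCharsets.UTF_8)

  /** Splits on a single space into (userid, username); a missing username becomes None. */
  def parse(line: String): (String, Option[String]) = {
    val fields = line.split(" ", -1)
    (fields(0), fields.lift(1))
  }
}
```

For example, `KfkData.write(java.nio.file.Paths.get("/opt/datas/kfk.txt"), KfkData.SampleRows)` would produce a file in the layout the table expects.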

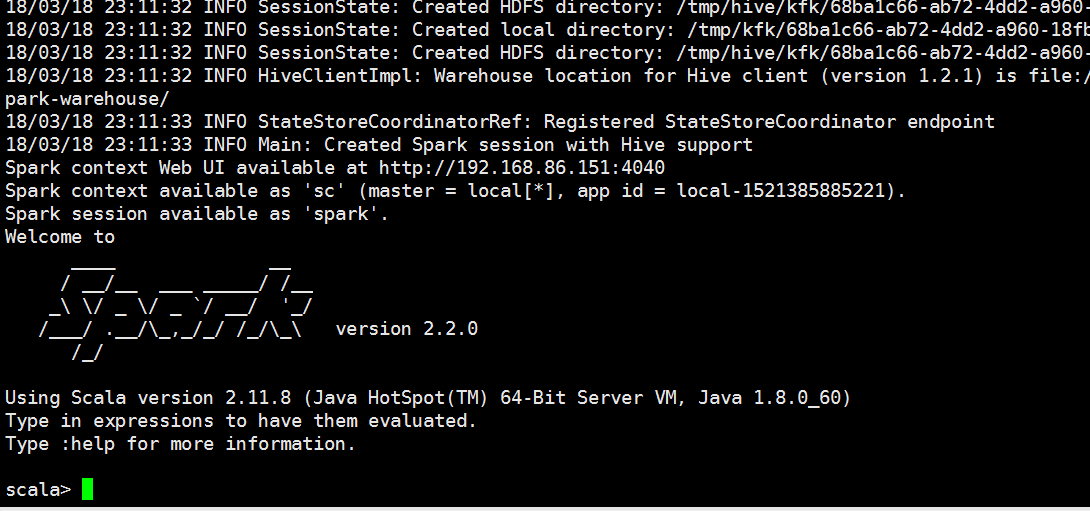

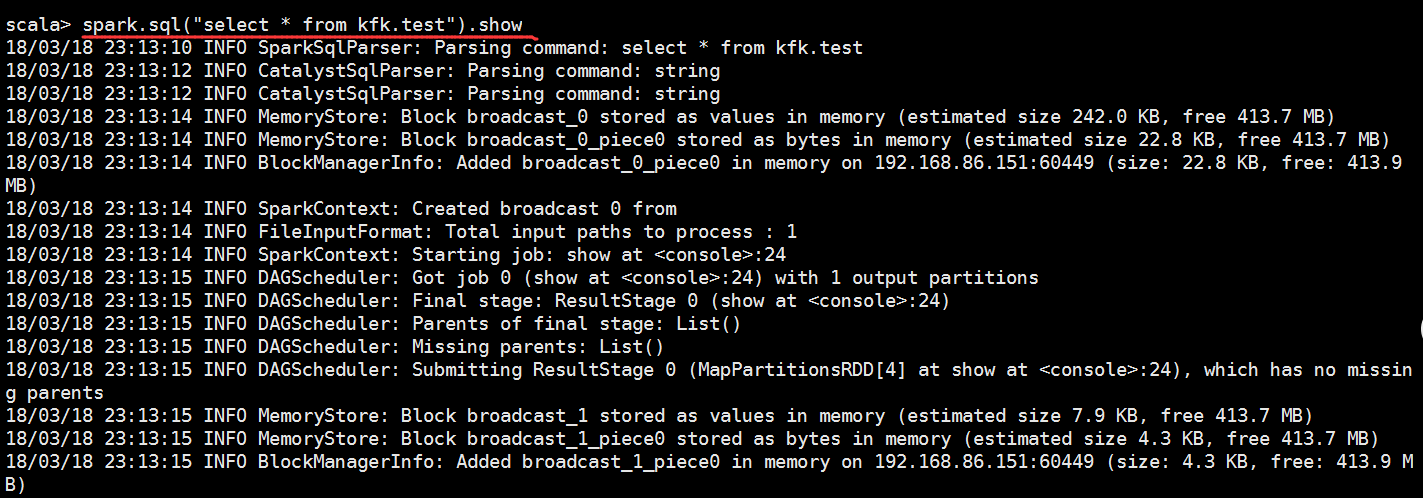

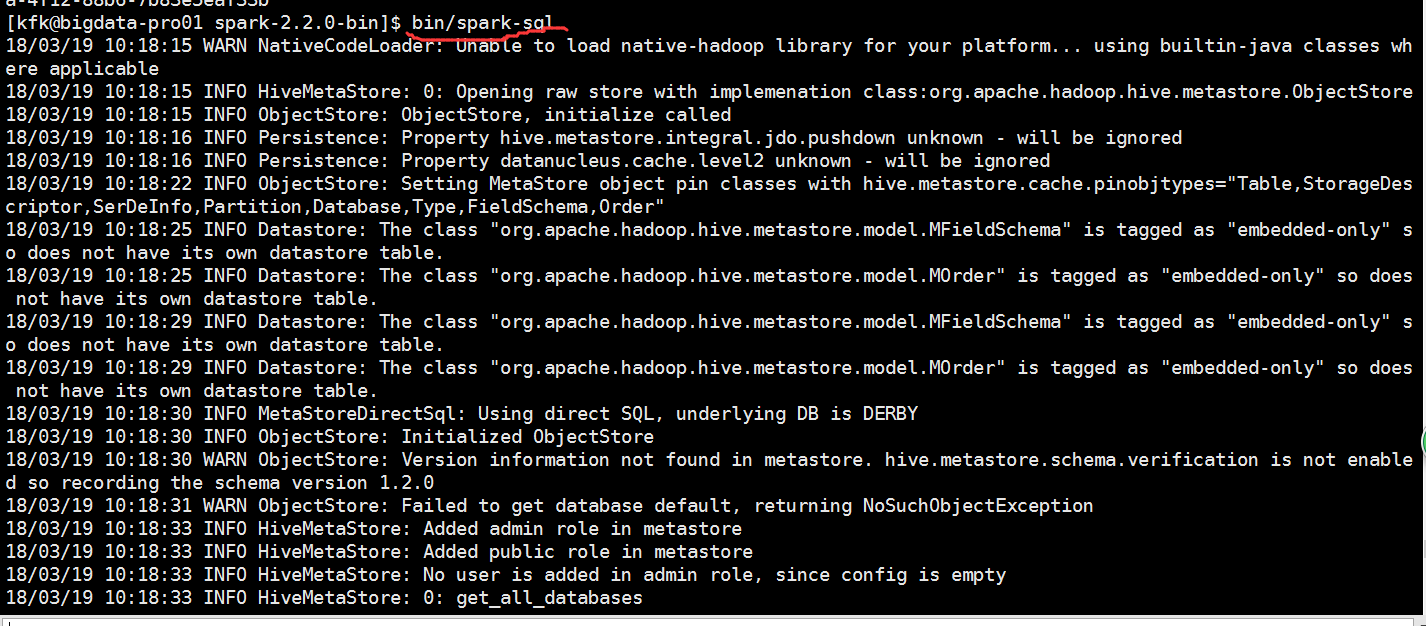

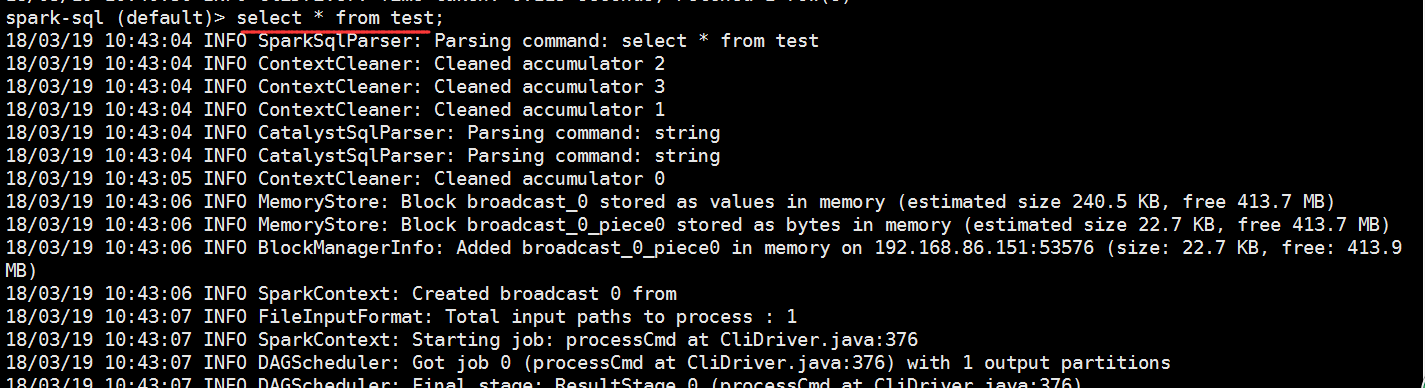

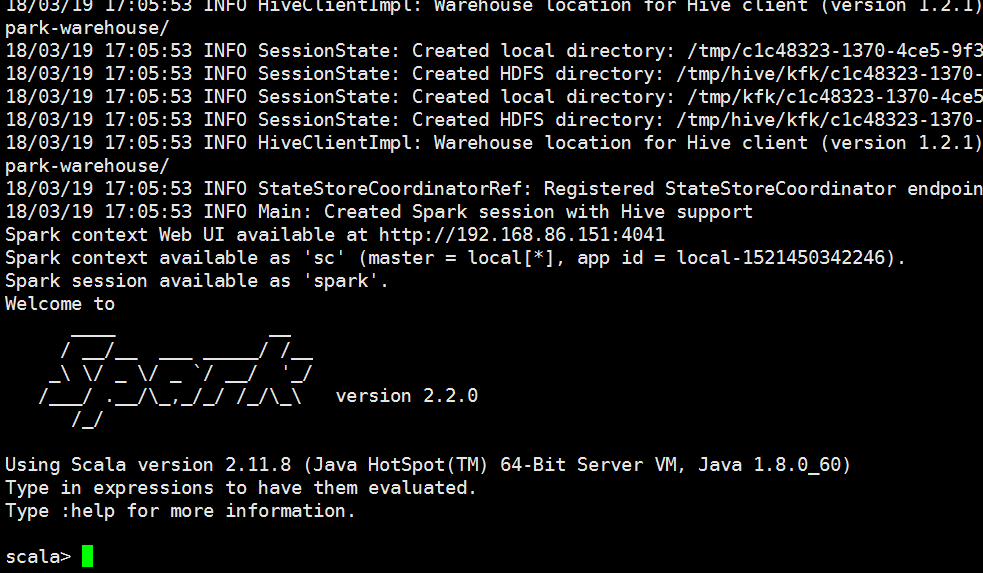

Now start Spark

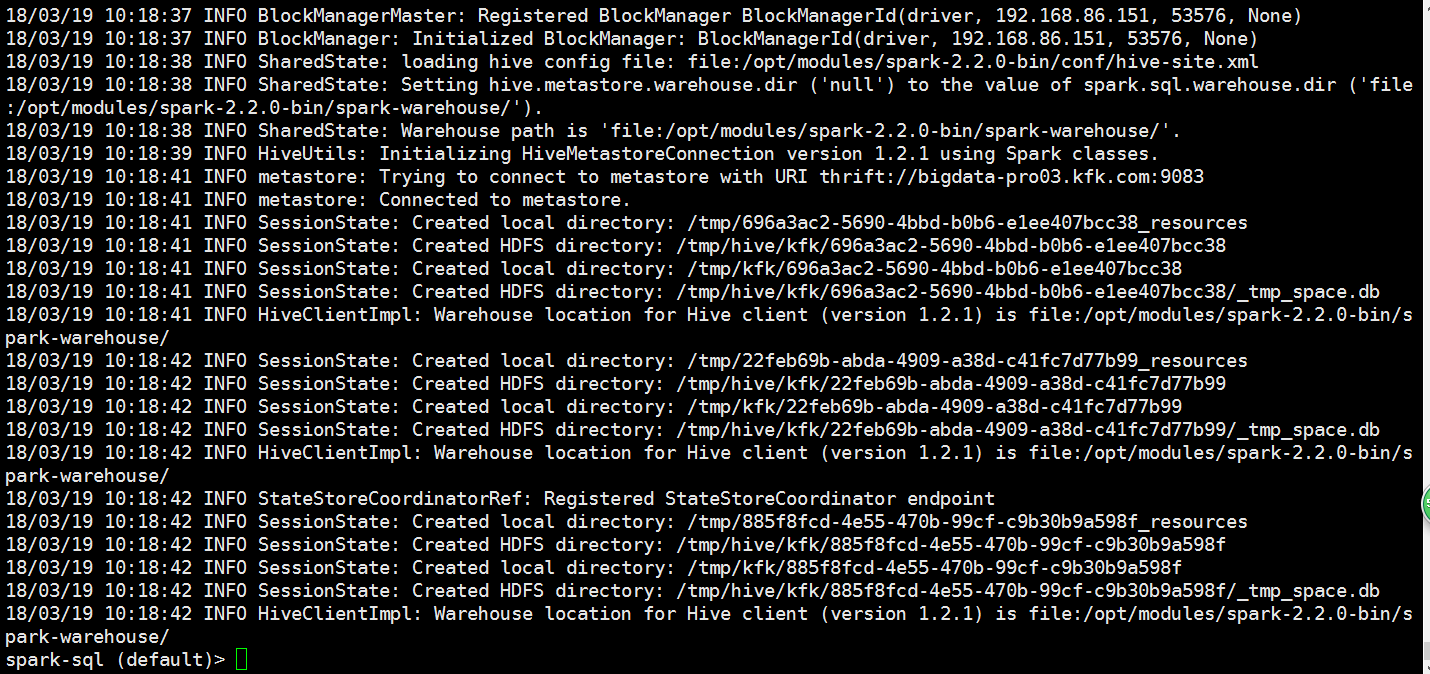

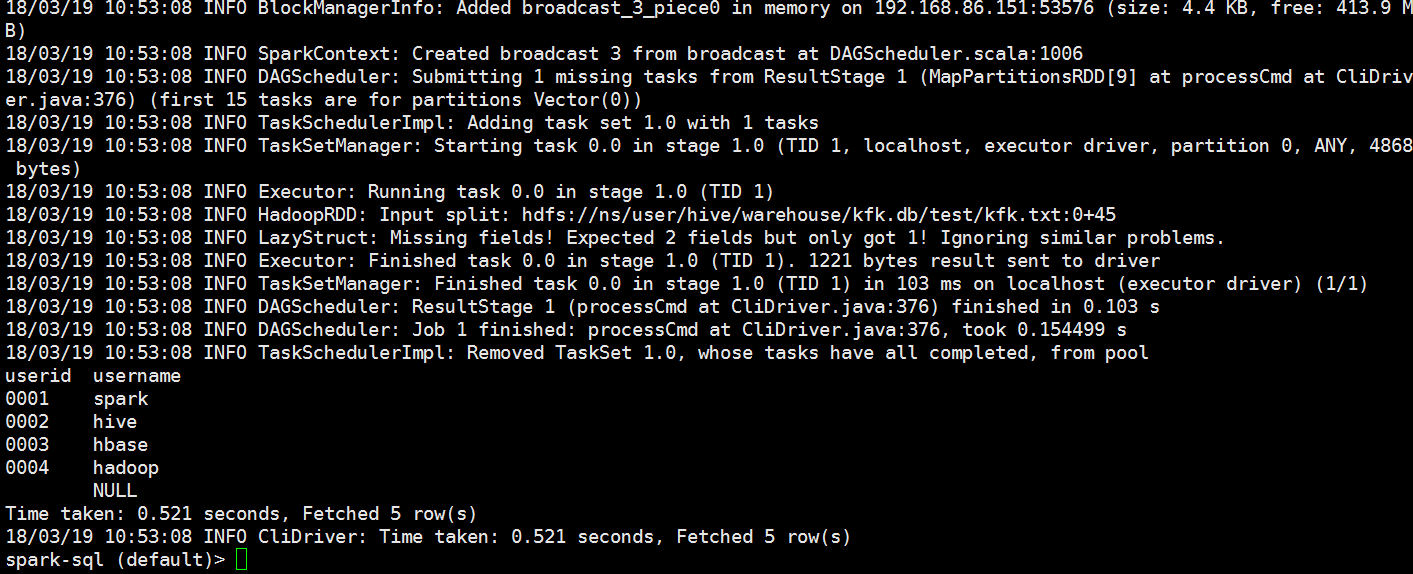

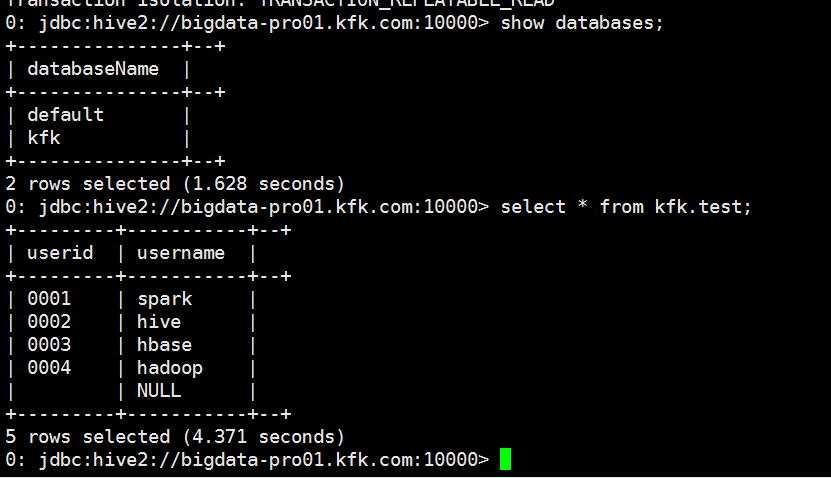

The data comes back, which shows that the Spark SQL and Hive integration works.
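The same check can be scripted from spark-shell. This sketch assumes the database is named `kfk` (as in the `kfk.test` query used later in this post) and uses the `test` table created above; it lists the databases, prints the table schema and returns the row count. `HiveCheck` is an illustrative name:

```scala
import org.apache.spark.sql.SparkSession

object HiveCheck {
  /** Lists databases, inspects kfk.test and returns its row count. */
  def verify(spark: SparkSession): Long = {
    spark.sql("show databases").show()
    // Fully qualified name: database kfk, table test.
    val df = spark.table("kfk.test")
    df.printSchema() // columns declared in the DDL: userid, username (both string)
    df.show()
    df.count()
  }
}
```

In spark-shell, `HiveCheck.verify(spark)` runs it against the predefined Hive-enabled session.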

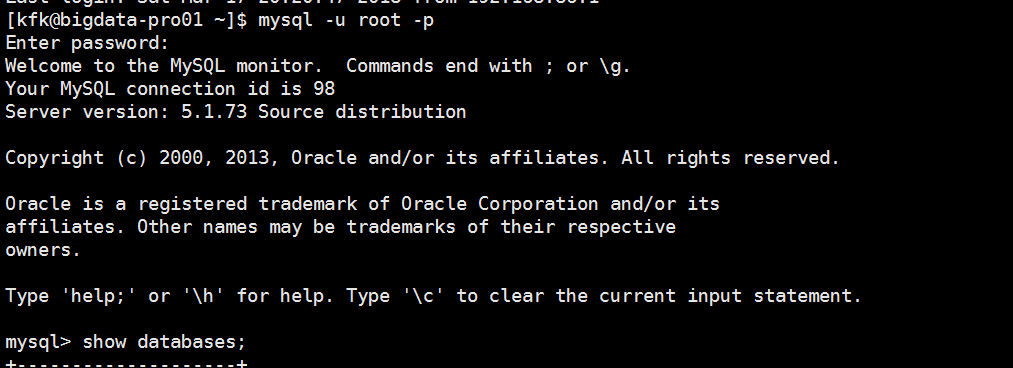

[kfk@bigdata-pro01 ~]$ mysql -u root -p

Enter password:

Welcome to the MySQL monitor. Commands end with ; or \g.

Your MySQL connection id is

Server version: 5.1. Source distribution

Copyright (c) , , Oracle and/or its affiliates. All rights reserved.

Oracle is a registered trademark of Oracle Corporation and/or its affiliates. Other names may be trademarks of their respective owners.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

mysql> show databases;

+--------------------+

| Database           |

+--------------------+

| information_schema |

| metastore          |

| mysql              |

| test               |

+--------------------+

4 rows in set (0.00 sec)

mysql> use test;

Database changed

mysql> show tables;

Empty set (0.00 sec)

mysql>

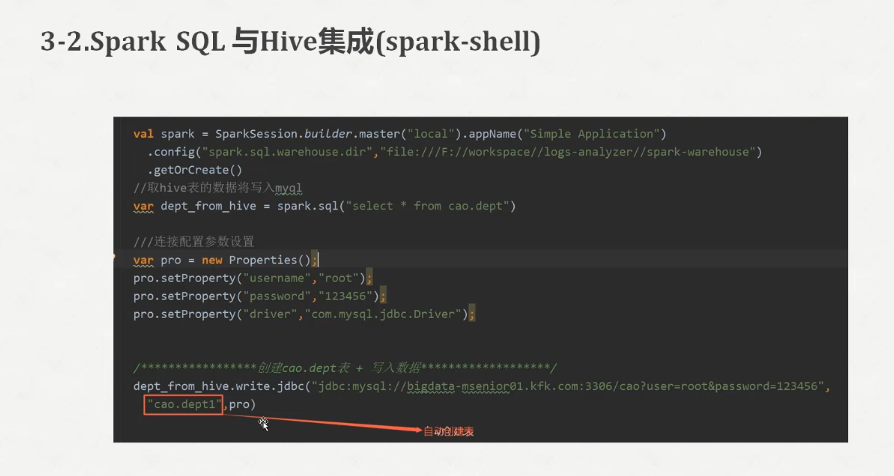

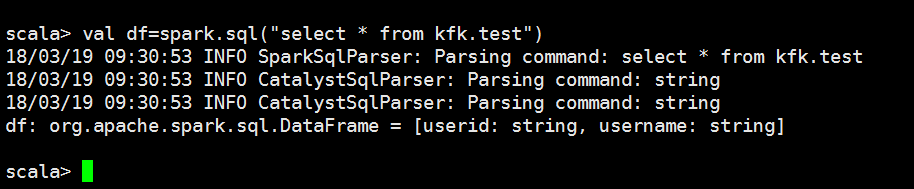

scala> val df=spark.sql("select * from kfk.test")

// :: INFO SparkSqlParser: Parsing command: select * from kfk.test

// :: INFO CatalystSqlParser: Parsing command: string

// :: INFO CatalystSqlParser: Parsing command: string

df: org.apache.spark.sql.DataFrame = [userid: string, username: string]

scala> import java.util.Properties

import java.util.Properties

scala> val pro = new Properties()

pro: java.util.Properties = {}

scala> pro.setProperty("driver","com.mysql.jdbc.Driver")

res1: Object = null

scala> df.write.jdbc("jdbc:mysql://bigdata-pro01.kfk.com/test?user=root&password=root","spark1",pro)

// :: INFO MemoryStore: Block broadcast_0 stored as values in memory (estimated size 242.0 KB, free 413.7 MB)

// :: INFO MemoryStore: Block broadcast_0_piece0 stored as bytes in memory (estimated size 22.8 KB, free 413.7 MB)

// :: INFO BlockManagerInfo: Added broadcast_0_piece0 in memory on 192.168.86.151: (size: 22.8 KB, free: 413.9 MB)

// :: INFO SparkContext: Created broadcast from

// :: INFO FileInputFormat: Total input paths to process :

// :: INFO SparkContext: Starting job: jdbc at <console>:

// :: INFO DAGScheduler: Got job (jdbc at <console>:) with output partitions

// :: INFO DAGScheduler: Final stage: ResultStage (jdbc at <console>:)

// :: INFO DAGScheduler: Parents of final stage: List()

// :: INFO DAGScheduler: Missing parents: List()

// :: INFO DAGScheduler: Submitting ResultStage (MapPartitionsRDD[] at jdbc at <console>:), which has no missing parents

// :: INFO MemoryStore: Block broadcast_1 stored as values in memory (estimated size 13.3 KB, free 413.7 MB)

// :: INFO MemoryStore: Block broadcast_1_piece0 stored as bytes in memory (estimated size 7.2 KB, free 413.6 MB)

// :: INFO BlockManagerInfo: Added broadcast_1_piece0 in memory on 192.168.86.151: (size: 7.2 KB, free: 413.9 MB)

// :: INFO SparkContext: Created broadcast from broadcast at DAGScheduler.scala:

// :: INFO DAGScheduler: Submitting missing tasks from ResultStage (MapPartitionsRDD[] at jdbc at <console>:) (first tasks are for partitions Vector())

// :: INFO TaskSchedulerImpl: Adding task set 0.0 with tasks

// :: INFO TaskSetManager: Starting task 0.0 in stage 0.0 (TID , localhost, executor driver, partition , ANY, bytes)

// :: INFO Executor: Running task 0.0 in stage 0.0 (TID )

// :: INFO HadoopRDD: Input split: hdfs://ns/user/hive/warehouse/kfk.db/test/kfk.txt:0+45

// :: INFO TransportClientFactory: Successfully created connection to /192.168.86.151: after ms ( ms spent in bootstraps)

// :: INFO CodeGenerator: Code generated in 1278.378936 ms

// :: INFO CodeGenerator: Code generated in 63.186243 ms

// :: INFO LazyStruct: Missing fields! Expected fields but only got ! Ignoring similar problems.

// :: INFO Executor: Finished task 0.0 in stage 0.0 (TID ). bytes result sent to driver

// :: INFO TaskSetManager: Finished task 0.0 in stage 0.0 (TID ) in ms on localhost (executor driver) (/)

// :: INFO TaskSchedulerImpl: Removed TaskSet 0.0, whose tasks have all completed, from pool

// :: INFO DAGScheduler: ResultStage (jdbc at <console>:) finished in 2.764 s

// :: INFO DAGScheduler: Job finished: jdbc at <console>:, took 3.546682 s

scala>

In MySQL we can now see an extra table, spark1.

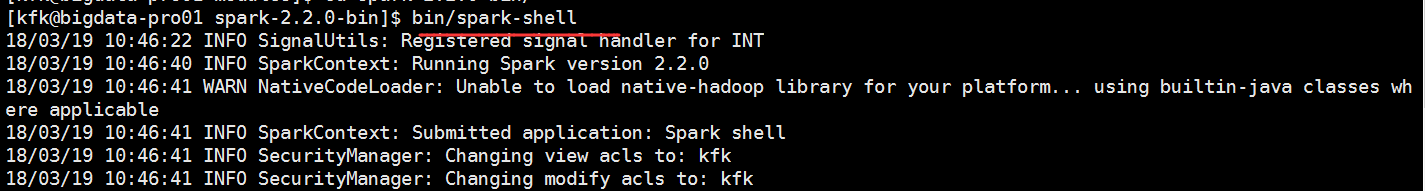

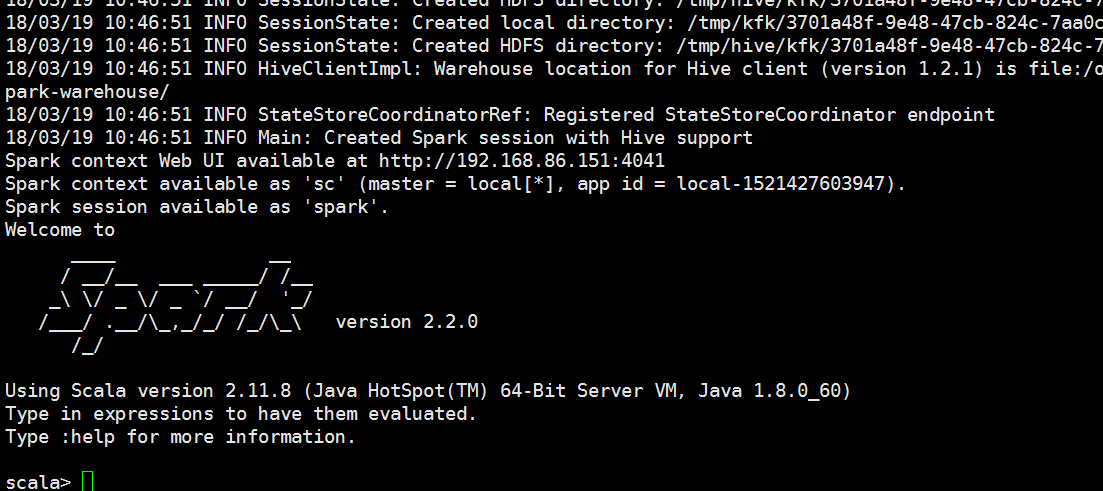
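The transcript above passes the credentials inside the JDBC URL and relies on the default save mode, `ErrorIfExists`, so running the same `df.write.jdbc(...)` a second time fails because `spark1` already exists. A hedged variant, reusing the host, database and root/root credentials from the transcript (plus MySQL's default port 3306), keeps the credentials in the `Properties` object and picks a save mode explicitly. `HiveToMysql` and its methods are illustrative names:

```scala
import java.util.Properties
import org.apache.spark.sql.{DataFrame, SaveMode, SparkSession}

object HiveToMysql {
  // Host and database from the transcript; 3306 is MySQL's default port.
  val Url = "jdbc:mysql://bigdata-pro01.kfk.com:3306/test"

  /** Connection properties: credentials plus the JDBC driver class. */
  def mysqlProps(user: String, password: String): Properties = {
    val props = new Properties()
    props.setProperty("user", user)
    props.setProperty("password", password)
    props.setProperty("driver", "com.mysql.jdbc.Driver")
    props
  }

  /** Copies a Hive table into MySQL, replacing the target table if it exists. */
  def copy(spark: SparkSession, hiveTable: String, mysqlTable: String): Unit = {
    val df: DataFrame = spark.table(hiveTable)
    // Overwrite drops and recreates the MySQL table; SaveMode.Append adds rows instead.
    df.write.mode(SaveMode.Overwrite).jdbc(Url, mysqlTable, mysqlProps("root", "root"))
  }
}
```

In spark-shell: `HiveToMysql.copy(spark, "kfk.test", "spark1")`.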

Next, start spark-shell again

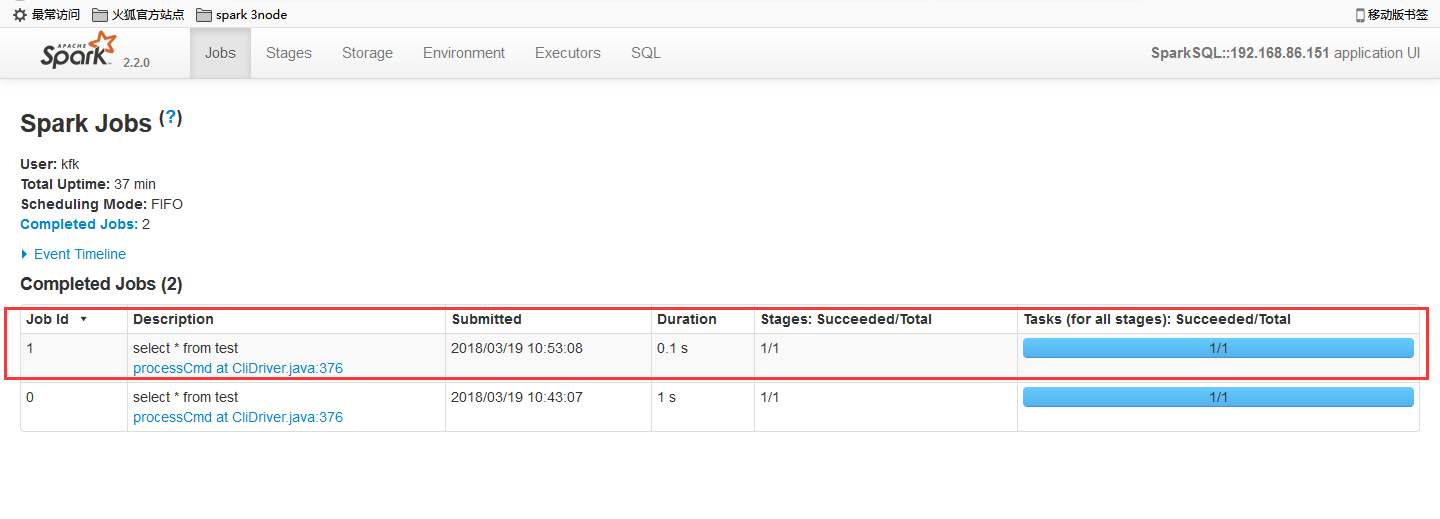

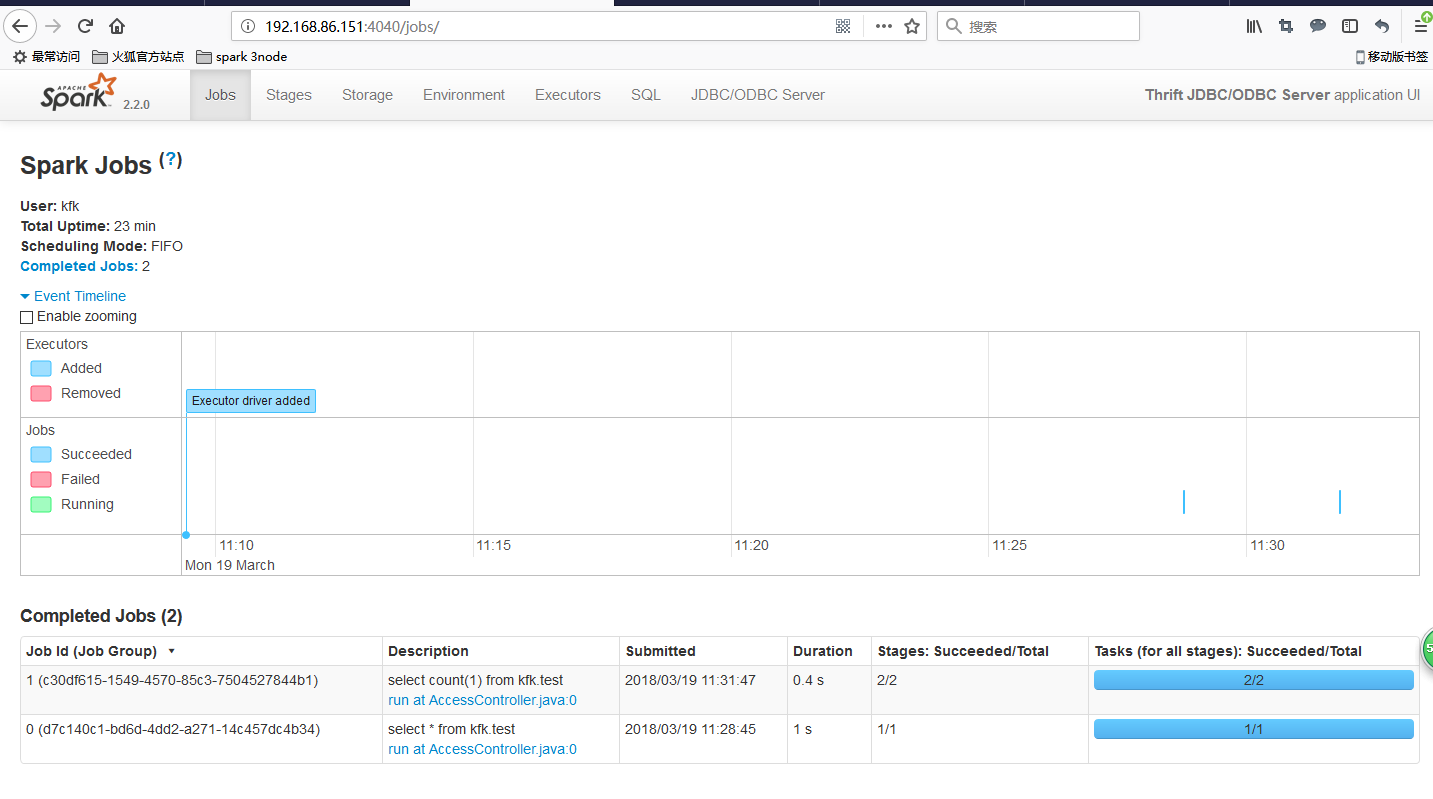

The web UI page below belongs to spark-sql

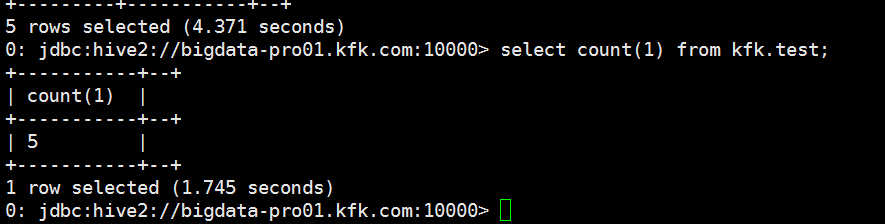

In spark-sql we run the following operations

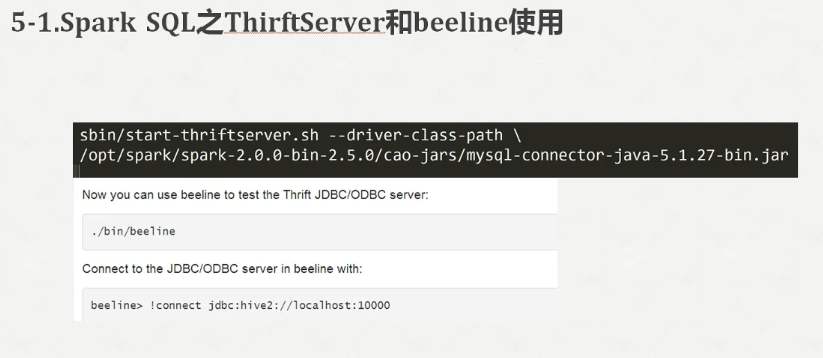

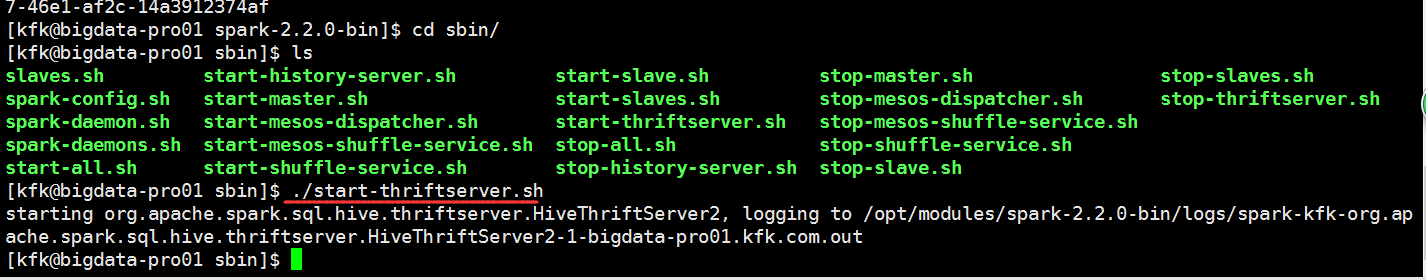

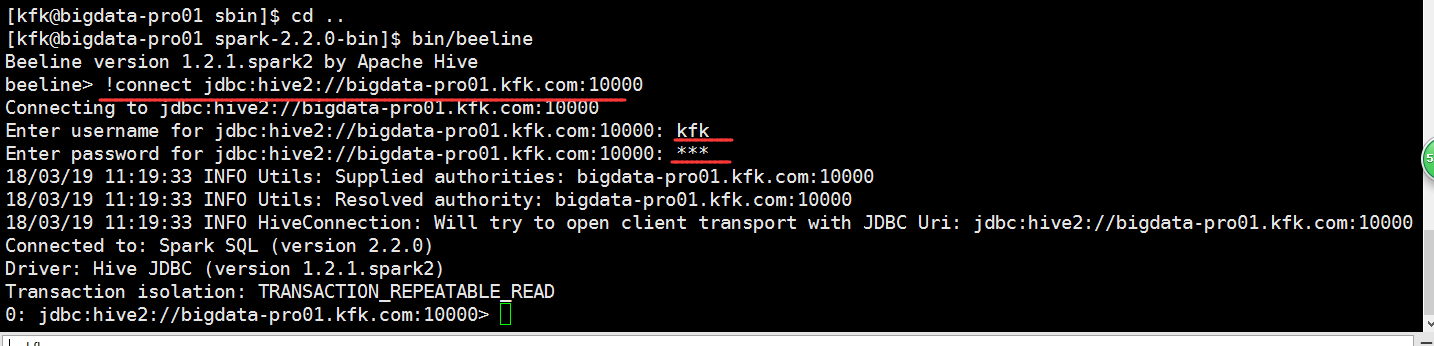

Start the Spark Thrift server (spark-thriftserver)

When prompted, enter the username and password of the current machine's user
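Besides beeline, any JDBC client can query the Thrift server through the Hive JDBC driver. A minimal sketch, assuming the server runs on bigdata-pro01.kfk.com at the default port 10000 and that the table lives in the `kfk` database; the object name and passing the password as the first program argument are hypothetical choices:

```scala
import java.sql.DriverManager

object ThriftServerClient {
  // Assumptions: Thrift server on bigdata-pro01.kfk.com, default port 10000, database kfk.
  val Url = "jdbc:hive2://bigdata-pro01.kfk.com:10000/kfk"

  def main(args: Array[String]): Unit = {
    // Requires the Hive JDBC driver (hive-jdbc and its dependencies) on the classpath.
    Class.forName("org.apache.hive.jdbc.HiveDriver")
    // Like the beeline login, use the current OS user; password from the first argument.
    val user = sys.props("user.name")
    val password = args.headOption.getOrElse("")
    val conn = DriverManager.getConnection(Url, user, password)
    try {
      val rs = conn.createStatement().executeQuery("select userid, username from test")
      // Columns read by position: 1 = userid, 2 = username.
      while (rs.next()) println(s"${rs.getString(1)}\t${rs.getString(2)}")
    } finally conn.close()
  }
}
```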

Start spark-shell

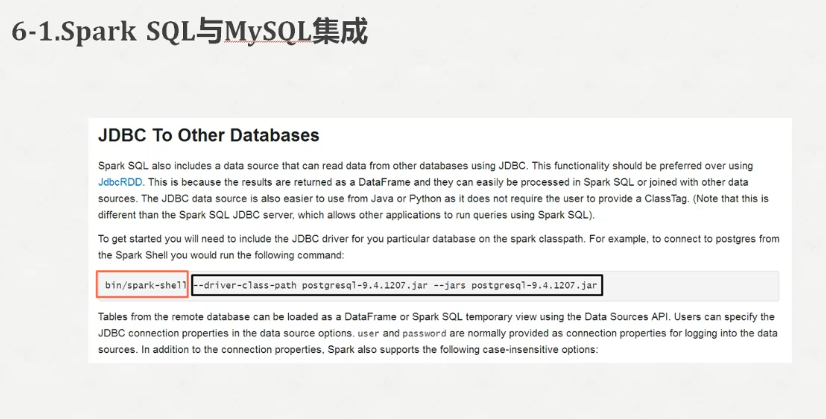

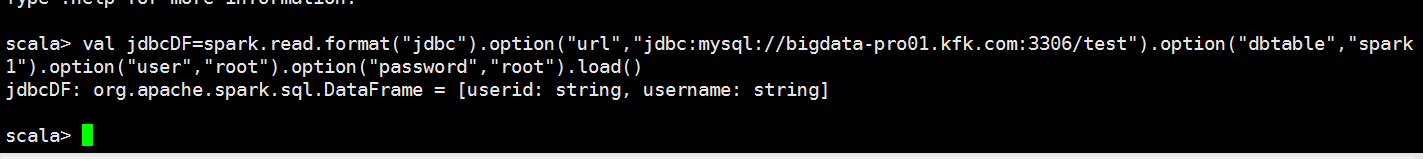

val jdbcDF=spark.read.format("jdbc").option("url","jdbc:mysql://bigdata-pro01.kfk.com:3306/test").option("dbtable","spark1").option("user","root").option("password","root").load()
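Once loaded, `jdbcDF` can be queried with SQL by registering it as a temporary view. This sketch builds the same reader with an explicit `driver` option (an addition, not part of the original command) and counts rows through a view; `MysqlView` and the view name are hypothetical:

```scala
import org.apache.spark.sql.{DataFrame, SparkSession}

object MysqlView {
  /** Same option chain as above, plus the MySQL driver class. */
  def load(spark: SparkSession, url: String, table: String, user: String, password: String): DataFrame =
    spark.read.format("jdbc")
      .option("url", url)
      .option("dbtable", table)
      .option("user", user)
      .option("password", password)
      .option("driver", "com.mysql.jdbc.Driver")
      .load()

  /** Registers the DataFrame as a temp view and counts its rows with Spark SQL. */
  def registerAndCount(df: DataFrame, viewName: String): Long = {
    df.createOrReplaceTempView(viewName)
    df.sparkSession.sql(s"select count(*) from $viewName").first().getLong(0)
  }
}
```

Usage in spark-shell: `val jdbcDF = MysqlView.load(spark, "jdbc:mysql://bigdata-pro01.kfk.com:3306/test", "spark1", "root", "root")`, then `MysqlView.registerAndCount(jdbcDF, "spark1_mysql")`.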

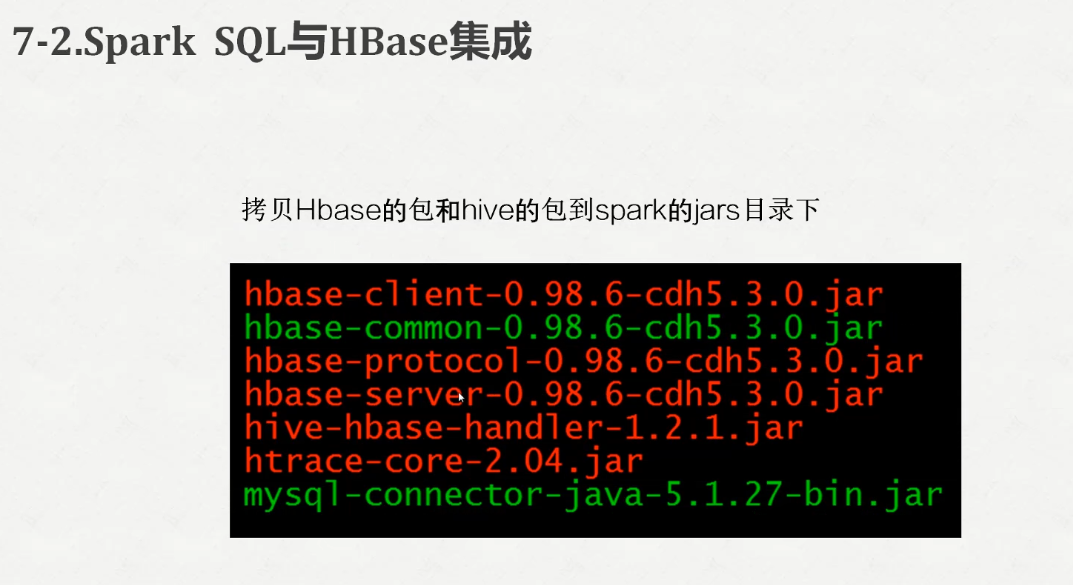

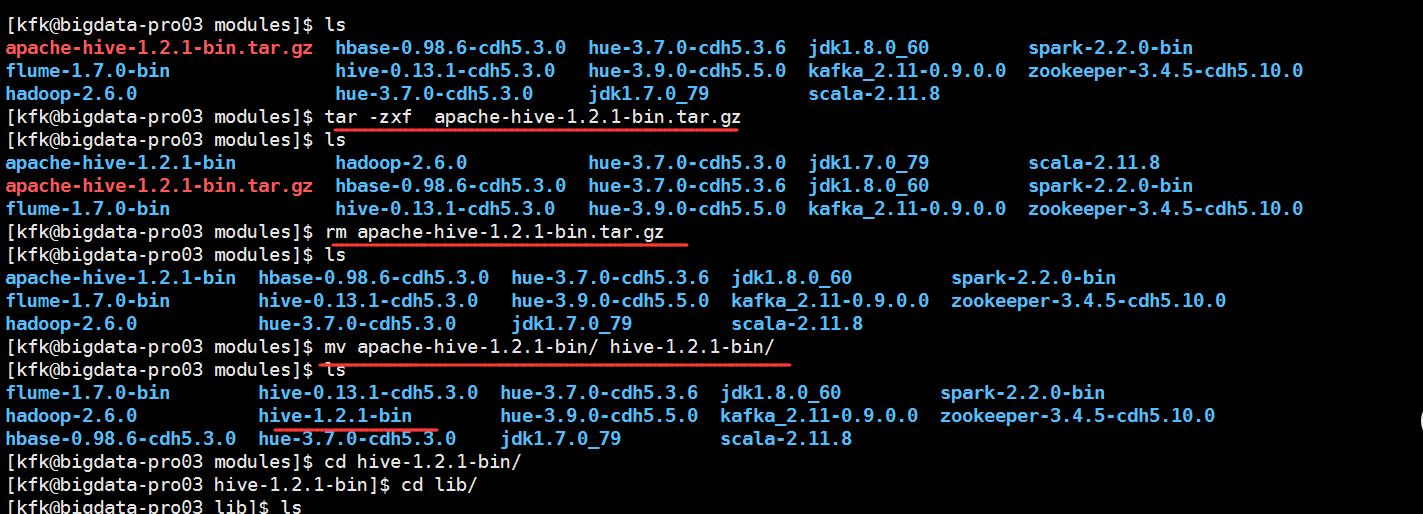

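We need jars from Hive 1.2.1 (the Hive version that Spark 2.x's built-in Hive support is based on), so we download a Hive 1.2.1 release and take the jars out of it.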

This is the Hive 1.2.1 release I downloaded, from http://archive.apache.org/dist/hive/hive-1.2.1/

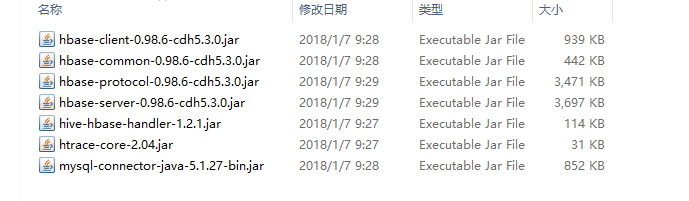

I have all the jars ready

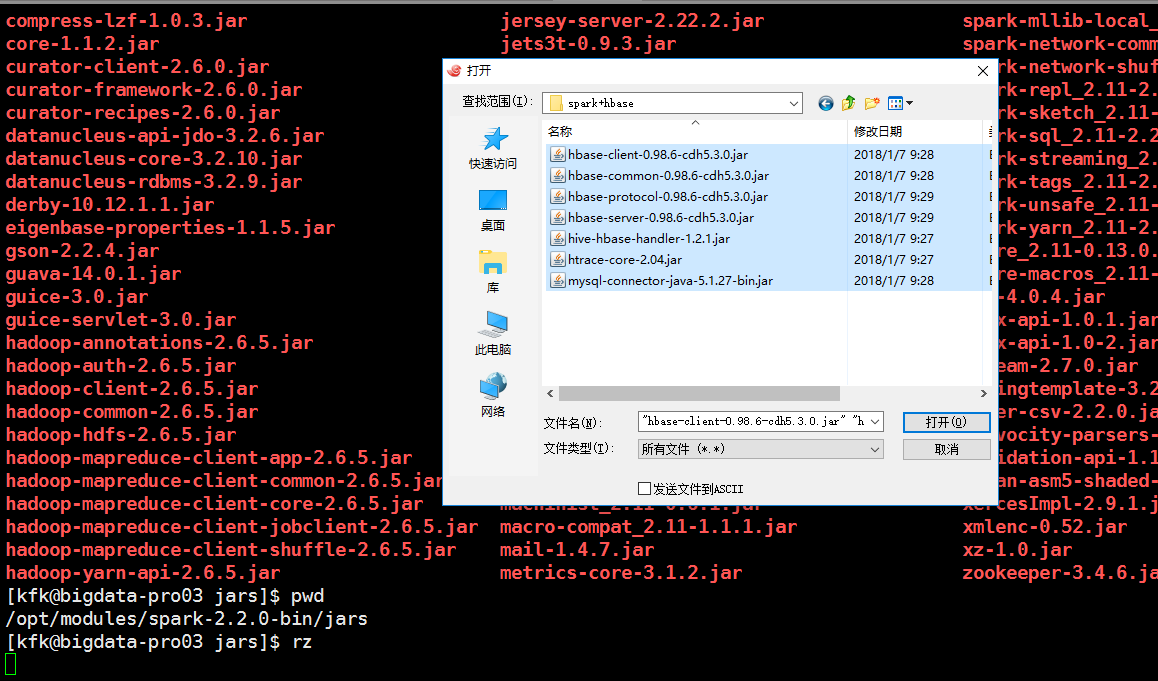

Upload these jars into Spark's jars directory (do this on all 3 nodes)
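Before restarting anything, it helps to confirm that every node really has the copied jars. A small Scala sketch that reports which expected jar-name prefixes are missing from a directory; the prefix list is an assumption based on the HBase and hive-hbase-handler jars visible in the classpath printed later in this post, so adjust it to the jars you actually copied:

```scala
import java.io.File

object JarCheck {
  // Hypothetical list of jar-name prefixes for the Hive/HBase integration.
  val RequiredPrefixes: Seq[String] = Seq(
    "hive-hbase-handler", "hbase-client", "hbase-common",
    "hbase-protocol", "hbase-server", "htrace-core")

  /** Returns the prefixes for which no matching .jar file exists in jarsDir. */
  def missing(jarsDir: File, prefixes: Seq[String] = RequiredPrefixes): Seq[String] = {
    // listFiles() returns null for a missing directory; treat that as empty.
    val names = Option(jarsDir.listFiles()).getOrElse(Array.empty[File])
      .map(_.getName)
      .filter(_.endsWith(".jar"))
    prefixes.filterNot(p => names.exists(_.startsWith(p)))
  }

  def main(args: Array[String]): Unit = {
    val dir = new File(args.headOption.getOrElse(sys.env.getOrElse("SPARK_HOME", ".") + "/jars"))
    val absent = missing(dir)
    if (absent.isEmpty) println(s"All expected jars found in $dir")
    else println(s"Missing in $dir: ${absent.mkString(", ")}")
  }
}
```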

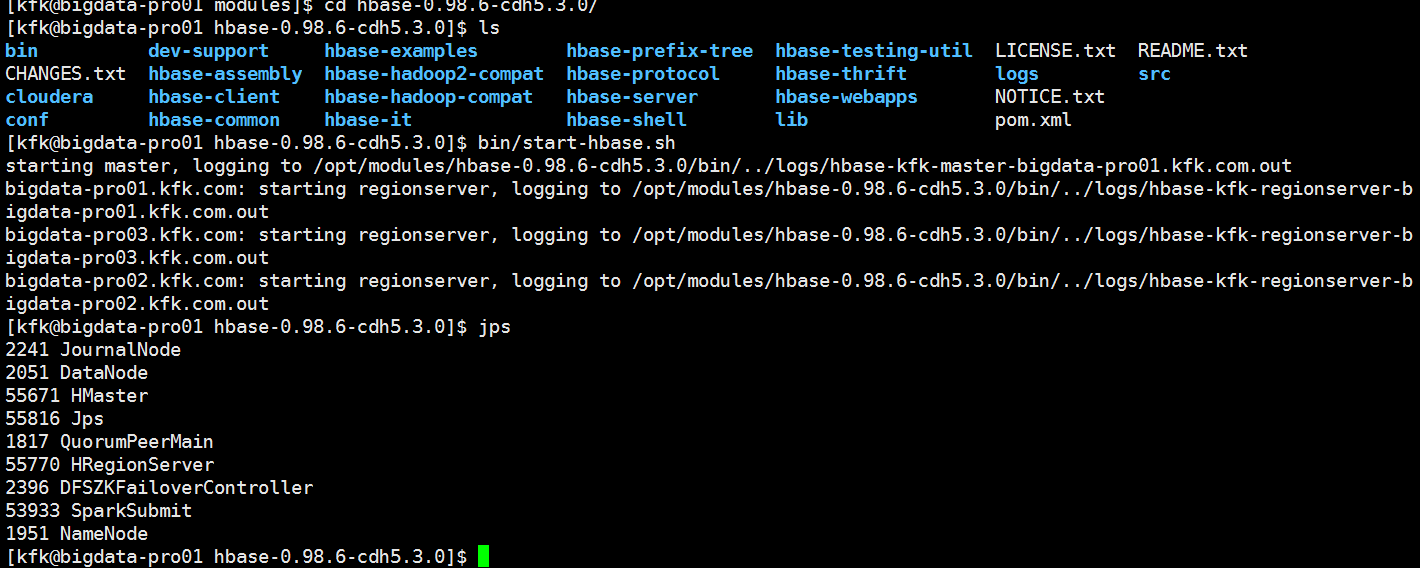

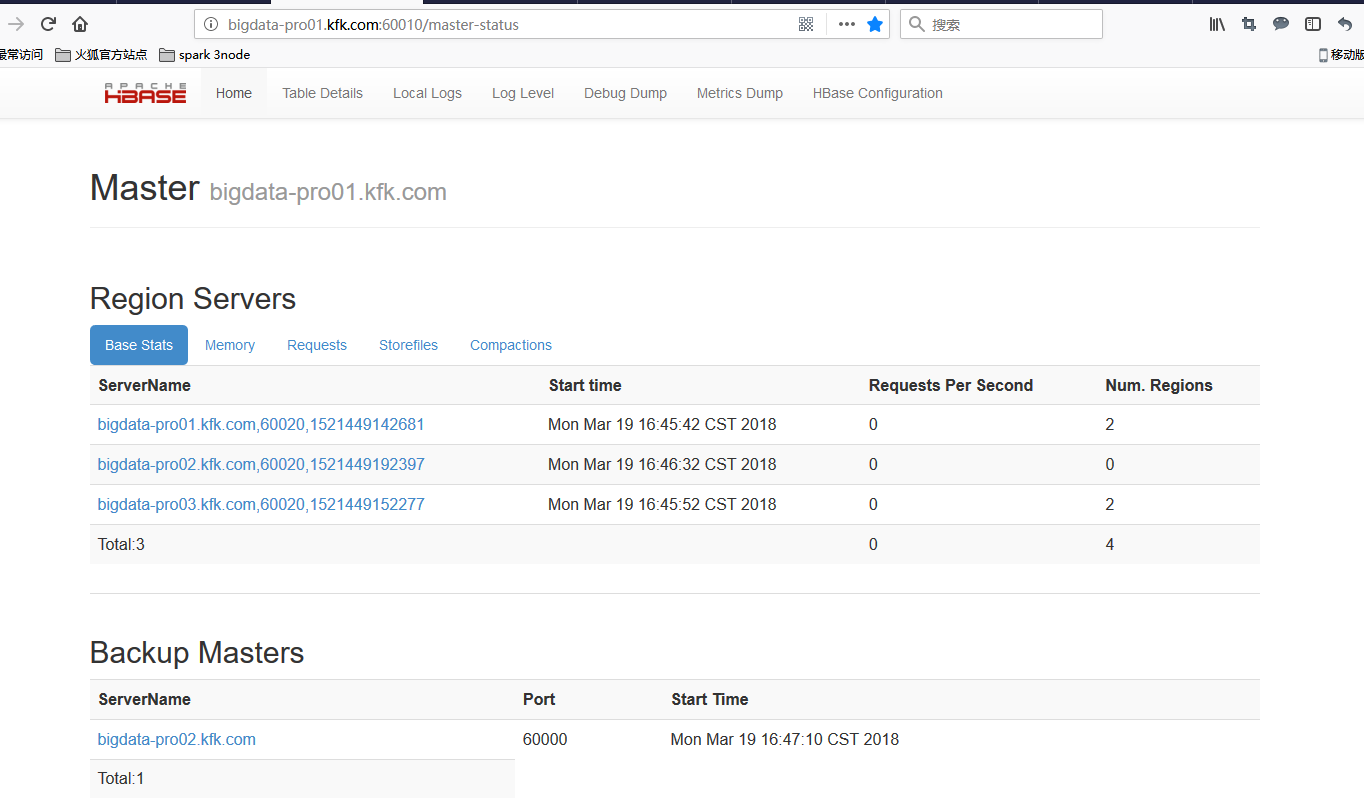

Start HBase

Before starting it, switch the JDK: we were using 1.7 so far, and now change it to 1.8 (on all 3 nodes)
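Spark 2.2 dropped support for Java 7, which is a likely reason for this switch. A quick way to check which JVM a node actually runs is to read the `java.version` system property; this Scala sketch parses both the old `1.8.0_60` format (the version shown in the ZooKeeper log later in this post) and the newer `11.0.2` format:

```scala
object JavaVersionCheck {
  /** Major version from strings like "1.8.0_60" (-> 8) or "11.0.2" (-> 11). */
  def major(version: String): Int = {
    val parts = version.split("[._-]")
    if (parts(0) == "1") parts(1).toInt else parts(0).toInt
  }

  def main(args: Array[String]): Unit = {
    val v = sys.props("java.version")
    val status = if (major(v) >= 8) "OK" else "too old, Java 8+ needed"
    println(s"java.version=$v (major ${major(v)}): $status")
  }
}
```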

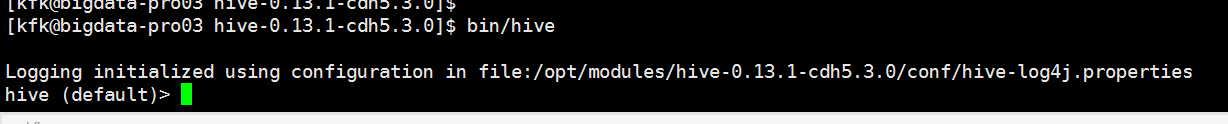

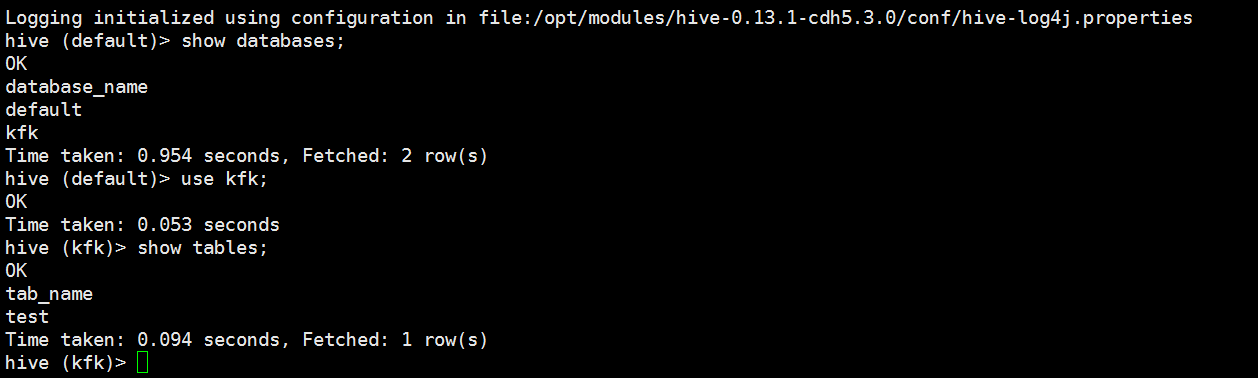

Now start Hive

Start spark-shell

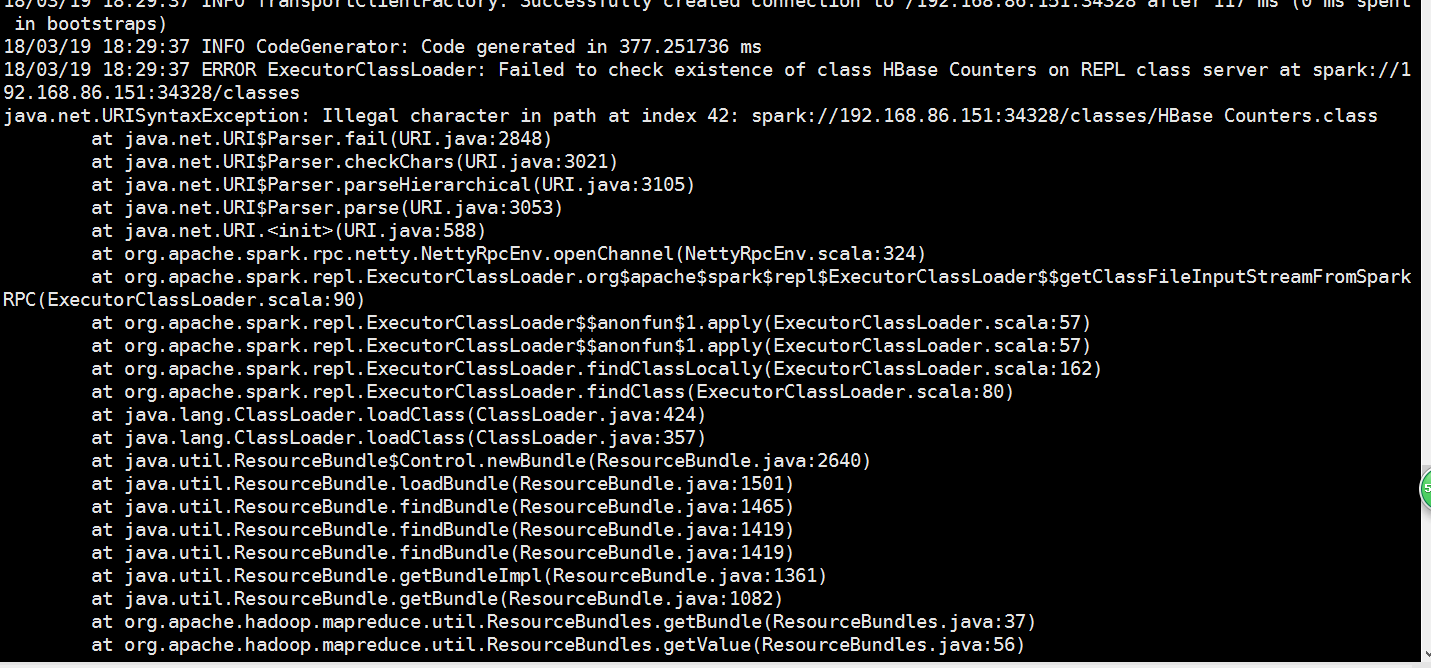

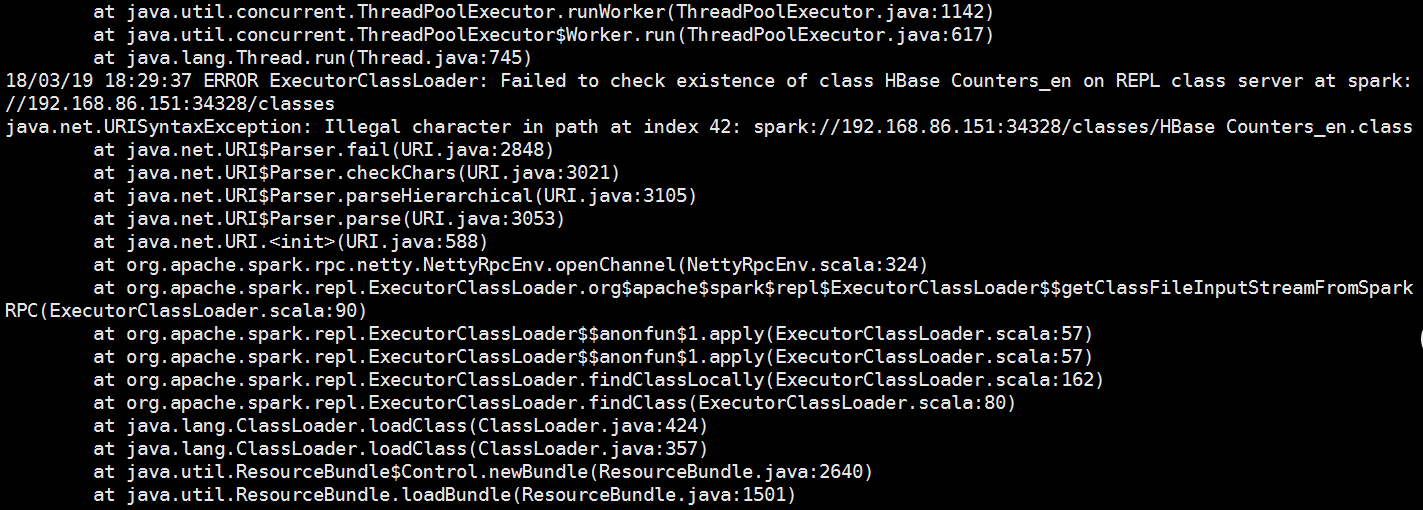

As you can see, an error is reported

This is because our table holds too much data

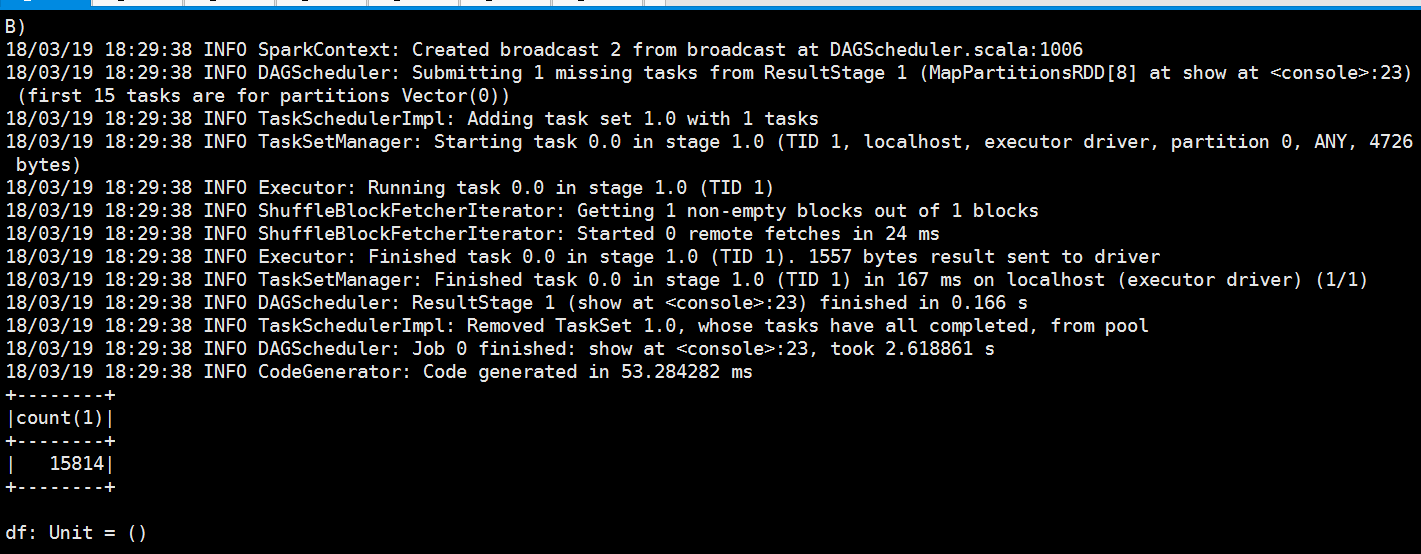

Let's limit the number of rows returned
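Rather than displaying the whole weblogs table, fetch a count (a single-row result) and show only a handful of rows. Note that in the command below `.show` returns `Unit`, so `df` ends up holding no data; keeping the DataFrame and calling `show` on it separately avoids that. A sketch, where `WeblogsPeek` and the default of 10 rows are illustrative:

```scala
import org.apache.spark.sql.SparkSession

object WeblogsPeek {
  /** Prints the row count and the first n rows of weblogs; returns the count. */
  def peek(spark: SparkSession, n: Int = 10): Long = {
    // count(1) produces a single LongType value.
    val total = spark.sql("select count(1) from weblogs").first().getLong(0)
    println(s"weblogs has $total rows")
    // limit(n) keeps the displayed result small instead of showing the whole table.
    spark.table("weblogs").limit(n).show(n, truncate = false)
    total
  }
}
```

In spark-shell: `WeblogsPeek.peek(spark)`.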

scala> val df =spark.sql("select count(1) from weblogs").show

// :: INFO SparkSqlParser: Parsing command: select count(1) from weblogs

// :: INFO CatalystSqlParser: Parsing command: string

// :: INFO CatalystSqlParser: Parsing command: string

// :: INFO CatalystSqlParser: Parsing command: string

// :: INFO CatalystSqlParser: Parsing command: string

// :: INFO CatalystSqlParser: Parsing command: string

// :: INFO CatalystSqlParser: Parsing command: string

// :: INFO CatalystSqlParser: Parsing command: string

// :: INFO CodeGenerator: Code generated in 607.706437 ms

// :: INFO CodeGenerator: Code generated in 72.215236 ms

// :: INFO ContextCleaner: Cleaned accumulator

// :: INFO MemoryStore: Block broadcast_0 stored as values in memory (estimated size 242.1 KB, free 413.7 MB)

// :: INFO MemoryStore: Block broadcast_0_piece0 stored as bytes in memory (estimated size 22.9 KB, free 413.7 MB)

// :: INFO BlockManagerInfo: Added broadcast_0_piece0 in memory on 192.168.86.151: (size: 22.9 KB, free: 413.9 MB)

// :: INFO SparkContext: Created broadcast from

// :: INFO HBaseStorageHandler: Configuring input job properties

// :: INFO RecoverableZooKeeper: Process identifier=hconnection-0x490dfe25 connecting to ZooKeeper ensemble=bigdata-pro02.kfk.com:,bigdata-pro01.kfk.com:,bigdata-pro03.kfk.com:

// :: INFO ZooKeeper: Client environment:zookeeper.version=3.4.-, built on // : GMT

// :: INFO ZooKeeper: Client environment:host.name=bigdata-pro01.kfk.com

// :: INFO ZooKeeper: Client environment:java.version=1.8.0_60

// :: INFO ZooKeeper: Client environment:java.vendor=Oracle Corporation

// :: INFO ZooKeeper: Client environment:java.home=/opt/modules/jdk1..0_60/jre

// :: INFO ZooKeeper: Client environment:java.class.path=/opt/modules/spark-2.2.-bin/conf/:/opt/modules/spark-2.2.-bin/jars/htrace-core-3.0..jar:/opt/modules/spark-2.2.-bin/jars/jpam-1.1.jar:/opt/modules/spark-2.2.-bin/jars/mysql-connector-java-5.1.-bin.jar:/opt/modules/spark-2.2.-bin/jars/snappy-java-1.1.2.6.jar:/opt/modules/spark-2.2.-bin/jars/commons-compress-1.4..jar:/opt/modules/spark-2.2.-bin/jars/hbase-server-0.98.-cdh5.3.0.jar:/opt/modules/spark-2.2.-bin/jars/spark-sql_2.-2.2..jar:/opt/modules/spark-2.2.-bin/jars/jersey-client-2.22..jar:/opt/modules/spark-2.2.-bin/jars/jetty-6.1..jar:/opt/modules/spark-2.2.-bin/jars/jackson-databind-2.6..jar:/opt/modules/spark-2.2.-bin/jars/javolution-5.5..jar:/opt/modules/spark-2.2.-bin/jars/opencsv-2.3.jar:/opt/modules/spark-2.2.-bin/jars/curator-framework-2.6..jar:/opt/modules/spark-2.2.-bin/jars/commons-collections-3.2..jar:/opt/modules/spark-2.2.-bin/jars/hadoop-mapreduce-client-jobclient-2.6..jar:/opt/modules/spark-2.2.-bin/jars/hk2-utils-2.4.-b34.jar:/opt/modules/spark-2.2.-bin/jars/metrics-graphite-3.1..jar:/opt/modules/spark-2.2.-bin/jars/pmml-model-1.2..jar:/opt/modules/spark-2.2.-bin/jars/compress-lzf-1.0..jar:/opt/modules/spark-2.2.-bin/jars/hadoop-mapreduce-client-app-2.6..jar:/opt/modules/spark-2.2.-bin/jars/parquet-encoding-1.8..jar:/opt/modules/spark-2.2.-bin/jars/xz-1.0.jar:/opt/modules/spark-2.2.-bin/jars/datanucleus-core-3.2..jar:/opt/modules/spark-2.2.-bin/jars/guice-servlet-3.0.jar:/opt/modules/spark-2.2.-bin/jars/stax-api-1.0-.jar:/opt/modules/spark-2.2.-bin/jars/eigenbase-properties-1.1..jar:/opt/modules/spark-2.2.-bin/jars/metrics-jvm-3.1..jar:/opt/modules/spark-2.2.-bin/jars/stream-2.7..jar:/opt/modules/spark-2.2.-bin/jars/spark-mllib-local_2.-2.2..jar:/opt/modules/spark-2.2.-bin/jars/derby-10.12.1.1.jar:/opt/modules/spark-2.2.-bin/jars/joda-time-2.9..jar:/opt/modules/spark-2.2.-bin/jars/parquet-common-1.8..jar:/opt/modules/spark-2.2.-bin/jars/ivy-2.4..jar:/opt/modules/spark-2.2.-bin/jars/slf4j-api-1
.7..jar:/opt/modules/spark-2.2.-bin/jars/jetty-util-6.1..jar:/opt/modules/spark-2.2.-bin/jars/shapeless_2.-2.3..jar:/opt/modules/spark-2.2.-bin/jars/activation-1.1..jar:/opt/modules/spark-2.2.-bin/jars/jackson-module-scala_2.-2.6..jar:/opt/modules/spark-2.2.-bin/jars/libthrift-0.9..jar:/opt/modules/spark-2.2.-bin/jars/log4j-1.2..jar:/opt/modules/spark-2.2.-bin/jars/antlr4-runtime-4.5..jar:/opt/modules/spark-2.2.-bin/jars/chill-java-0.8..jar:/opt/modules/spark-2.2.-bin/jars/snappy-0.2.jar:/opt/modules/spark-2.2.-bin/jars/core-1.1..jar:/opt/modules/spark-2.2.-bin/jars/hadoop-annotations-2.6..jar:/opt/modules/spark-2.2.-bin/jars/jersey-container-servlet-2.22..jar:/opt/modules/spark-2.2.-bin/jars/spark-network-shuffle_2.-2.2..jar:/opt/modules/spark-2.2.-bin/jars/spark-graphx_2.-2.2..jar:/opt/modules/spark-2.2.-bin/jars/breeze_2.-0.13..jar:/opt/modules/spark-2.2.-bin/jars/scala-compiler-2.11..jar:/opt/modules/spark-2.2.-bin/jars/aopalliance-1.0.jar:/opt/modules/spark-2.2.-bin/jars/hadoop-mapreduce-client-common-2.6..jar:/opt/modules/spark-2.2.-bin/jars/aopalliance-repackaged-2.4.-b34.jar:/opt/modules/spark-2.2.-bin/jars/commons-beanutils-core-1.8..jar:/opt/modules/spark-2.2.-bin/jars/jsr305-1.3..jar:/opt/modules/spark-2.2.-bin/jars/hadoop-yarn-common-2.6..jar:/opt/modules/spark-2.2.-bin/jars/osgi-resource-locator-1.0..jar:/opt/modules/spark-2.2.-bin/jars/univocity-parsers-2.2..jar:/opt/modules/spark-2.2.-bin/jars/hive-exec-1.2..spark2.jar:/opt/modules/spark-2.2.-bin/jars/commons-crypto-1.0..jar:/opt/modules/spark-2.2.-bin/jars/metrics-json-3.1..jar:/opt/modules/spark-2.2.-bin/jars/minlog-1.3..jar:/opt/modules/spark-2.2.-bin/jars/JavaEWAH-0.3..jar:/opt/modules/spark-2.2.-bin/jars/json4s-jackson_2.-3.2..jar:/opt/modules/spark-2.2.-bin/jars/javax.ws.rs-api-2.0..jar:/opt/modules/spark-2.2.-bin/jars/commons-dbcp-1.4.jar:/opt/modules/spark-2.2.-bin/jars/slf4j-log4j12-1.7..jar:/opt/modules/spark-2.2.-bin/jars/javax.inject-2.4.-b34.jar:/opt/modules/spark-2.2.-bin/jars/scala-xml_
2.-1.0..jar:/opt/modules/spark-2.2.-bin/jars/commons-pool-1.5..jar:/opt/modules/spark-2.2.-bin/jars/jaxb-api-2.2..jar:/opt/modules/spark-2.2.-bin/jars/spark-network-common_2.-2.2..jar:/opt/modules/spark-2.2.-bin/jars/gson-2.2..jar:/opt/modules/spark-2.2.-bin/jars/protobuf-java-2.5..jar:/opt/modules/spark-2.2.-bin/jars/objenesis-2.1.jar:/opt/modules/spark-2.2.-bin/jars/hive-metastore-1.2..spark2.jar:/opt/modules/spark-2.2.-bin/jars/jersey-container-servlet-core-2.22..jar:/opt/modules/spark-2.2.-bin/jars/stax-api-1.0..jar:/opt/modules/spark-2.2.-bin/jars/super-csv-2.2..jar:/opt/modules/spark-2.2.-bin/jars/metrics-core-3.1..jar:/opt/modules/spark-2.2.-bin/jars/scala-parser-combinators_2.-1.0..jar:/opt/modules/spark-2.2.-bin/jars/apacheds-i18n-2.0.-M15.jar:/opt/modules/spark-2.2.-bin/jars/spire_2.-0.13..jar:/opt/modules/spark-2.2.-bin/jars/xbean-asm5-shaded-4.4.jar:/opt/modules/spark-2.2.-bin/jars/httpclient-4.5..jar:/opt/modules/spark-2.2.-bin/jars/hive-beeline-1.2..spark2.jar:/opt/modules/spark-2.2.-bin/jars/janino-3.0..jar:/opt/modules/spark-2.2.-bin/jars/commons-beanutils-1.7..jar:/opt/modules/spark-2.2.-bin/jars/javax.annotation-api-1.2.jar:/opt/modules/spark-2.2.-bin/jars/curator-recipes-2.6..jar:/opt/modules/spark-2.2.-bin/jars/jackson-core-2.6..jar:/opt/modules/spark-2.2.-bin/jars/paranamer-2.6.jar:/opt/modules/spark-2.2.-bin/jars/hk2-locator-2.4.-b34.jar:/opt/modules/spark-2.2.-bin/jars/spark-hive_2.-2.2..jar:/opt/modules/spark-2.2.-bin/jars/bonecp-0.8..RELEASE.jar:/opt/modules/spark-2.2.-bin/jars/parquet-column-1.8..jar:/opt/modules/spark-2.2.-bin/jars/calcite-linq4j-1.2.-incubating.jar:/opt/modules/spark-2.2.-bin/jars/commons-cli-1.2.jar:/opt/modules/spark-2.2.-bin/jars/javax.inject-.jar:/opt/modules/spark-2.2.-bin/jars/hbase-common-0.98.-cdh5.3.0.jar:/opt/modules/spark-2.2.-bin/jars/spark-tags_2.-2.2..jar:/opt/modules/spark-2.2.-bin/jars/bcprov-jdk15on-1.51.jar:/opt/modules/spark-2.2.-bin/jars/stringtemplate-3.2..jar:/opt/modules/spark-2.2.-bin/jars/RoaringB
itmap-0.5..jar:/opt/modules/spark-2.2.-bin/jars/hbase-client-0.98.-cdh5.3.0.jar:/opt/modules/spark-2.2.-bin/jars/commons-codec-1.10.jar:/opt/modules/spark-2.2.-bin/jars/hive-cli-1.2..spark2.jar:/opt/modules/spark-2.2.-bin/jars/scala-reflect-2.11..jar:/opt/modules/spark-2.2.-bin/jars/jline-2.12..jar:/opt/modules/spark-2.2.-bin/jars/jackson-core-asl-1.9..jar:/opt/modules/spark-2.2.-bin/jars/jersey-server-2.22..jar:/opt/modules/spark-2.2.-bin/jars/xercesImpl-2.9..jar:/opt/modules/spark-2.2.-bin/jars/parquet-format-2.3..jar:/opt/modules/spark-2.2.-bin/jars/jdo-api-3.0..jar:/opt/modules/spark-2.2.-bin/jars/commons-lang-2.6.jar:/opt/modules/spark-2.2.-bin/jars/jta-1.1.jar:/opt/modules/spark-2.2.-bin/jars/commons-httpclient-3.1.jar:/opt/modules/spark-2.2.-bin/jars/pyrolite-4.13.jar:/opt/modules/spark-2.2.-bin/jars/jul-to-slf4j-1.7..jar:/opt/modules/spark-2.2.-bin/jars/api-util-1.0.-M20.jar:/opt/modules/spark-2.2.-bin/jars/hive-hbase-handler-1.2..jar:/opt/modules/spark-2.2.-bin/jars/commons-math3-3.4..jar:/opt/modules/spark-2.2.-bin/jars/jets3t-0.9..jar:/opt/modules/spark-2.2.-bin/jars/spark-catalyst_2.-2.2..jar:/opt/modules/spark-2.2.-bin/jars/parquet-jackson-1.8..jar:/opt/modules/spark-2.2.-bin/jars/jackson-annotations-2.6..jar:/opt/modules/spark-2.2.-bin/jars/hadoop-yarn-server-web-proxy-2.6..jar:/opt/modules/spark-2.2.-bin/jars/spark-hive-thriftserver_2.-2.2..jar:/opt/modules/spark-2.2.-bin/jars/parquet-hadoop-1.8..jar:/opt/modules/spark-2.2.-bin/jars/apacheds-kerberos-codec-2.0.-M15.jar:/opt/modules/spark-2.2.-bin/jars/ST4-4.0..jar:/opt/modules/spark-2.2.-bin/jars/jackson-mapper-asl-1.9..jar:/opt/modules/spark-2.2.-bin/jars/machinist_2.-0.6..jar:/opt/modules/spark-2.2.-bin/jars/spark-mllib_2.-2.2..jar:/opt/modules/spark-2.2.-bin/jars/scala-library-2.11..jar:/opt/modules/spark-2.2.-bin/jars/guava-14.0..jar:/opt/modules/spark-2.2.-bin/jars/javassist-3.18.-GA.jar:/opt/modules/spark-2.2.-bin/jars/api-asn1-api-1.0.-M20.jar:/opt/modules/spark-2.2.-bin/jars/antlr-2.7..jar:/op
t/modules/spark-2.2.-bin/jars/jackson-module-paranamer-2.6..jar:/opt/modules/spark-2.2.-bin/jars/curator-client-2.6..jar:/opt/modules/spark-2.2.-bin/jars/arpack_combined_all-0.1.jar:/opt/modules/spark-2.2.-bin/jars/datanucleus-api-jdo-3.2..jar:/opt/modules/spark-2.2.-bin/jars/calcite-avatica-1.2.-incubating.jar:/opt/modules/spark-2.2.-bin/jars/avro-mapred-1.7.-hadoop2.jar:/opt/modules/spark-2.2.-bin/jars/hive-jdbc-1.2..spark2.jar:/opt/modules/spark-2.2.-bin/jars/breeze-macros_2.-0.13..jar:/opt/modules/spark-2.2.-bin/jars/hbase-protocol-0.98.-cdh5.3.0.jar:/opt/modules/spark-2.2.-bin/jars/json4s-core_2.-3.2..jar:/opt/modules/spark-2.2.-bin/jars/spire-macros_2.-0.13..jar:/opt/modules/spark-2.2.-bin/jars/hadoop-client-2.6..jar:/opt/modules/spark-2.2.-bin/jars/mx4j-3.0..jar:/opt/modules/spark-2.2.-bin/jars/py4j-0.10..jar:/opt/modules/spark-2.2.-bin/jars/scalap-2.11..jar:/opt/modules/spark-2.2.-bin/jars/jersey-guava-2.22..jar:/opt/modules/spark-2.2.-bin/jars/jersey-media-jaxb-2.22..jar:/opt/modules/spark-2.2.-bin/jars/commons-configuration-1.6.jar:/opt/modules/spark-2.2.-bin/jars/json4s-ast_2.-3.2..jar:/opt/modules/spark-2.2.-bin/jars/htrace-core-2.04.jar:/opt/modules/spark-2.2.-bin/jars/hadoop-common-2.6..jar:/opt/modules/spark-2.2.-bin/jars/kryo-shaded-3.0..jar:/opt/modules/spark-2.2.-bin/jars/hadoop-auth-2.6..jar:/opt/modules/spark-2.2.-bin/jars/commons-compiler-3.0..jar:/opt/modules/spark-2.2.-bin/jars/jtransforms-2.4..jar:/opt/modules/spark-2.2.-bin/jars/commons-net-2.2.jar:/opt/modules/spark-2.2.-bin/jars/jcl-over-slf4j-1.7..jar:/opt/modules/spark-2.2.-bin/jars/spark-launcher_2.-2.2..jar:/opt/modules/spark-2.2.-bin/jars/spark-core_2.-2.2..jar:/opt/modules/spark-2.2.-bin/jars/antlr-runtime-3.4.jar:/opt/modules/spark-2.2.-bin/jars/spark-repl_2.-2.2..jar:/opt/modules/spark-2.2.-bin/jars/spark-streaming_2.-2.2..jar:/opt/modules/spark-2.2.-bin/jars/datanucleus-rdbms-3.2..jar:/opt/modules/spark-2.2.-bin/jars/netty-3.9..Final.jar:/opt/modules/spark-2.2.-bin/jars/lz4-1.3..j
ar:/opt/modules/spark-2.2.-bin/jars/zookeeper-3.4..jar:/opt/modules/spark-2.2.-bin/jars/java-xmlbuilder-1.0.jar:/opt/modules/spark-2.2.-bin/jars/jersey-common-2.22..jar:/opt/modules/spark-2.2.-bin/jars/netty-all-4.0..Final.jar:/opt/modules/spark-2.2.-bin/jars/validation-api-1.1..Final.jar:/opt/modules/spark-2.2.-bin/jars/hadoop-mapreduce-client-core-2.6..jar:/opt/modules/spark-2.2.-bin/jars/avro-ipc-1.7..jar:/opt/modules/spark-2.2.-bin/jars/jodd-core-3.5..jar:/opt/modules/spark-2.2.-bin/jars/jackson-xc-1.9..jar:/opt/modules/spark-2.2.-bin/jars/hadoop-yarn-client-2.6..jar:/opt/modules/spark-2.2.-bin/jars/guice-3.0.jar:/opt/modules/spark-2.2.-bin/jars/spark-yarn_2.-2.2..jar:/opt/modules/spark-2.2.-bin/jars/parquet-hadoop-bundle-1.6..jar:/opt/modules/spark-2.2.-bin/jars/leveldbjni-all-1.8.jar:/opt/modules/spark-2.2.-bin/jars/hk2-api-2.4.-b34.jar:/opt/modules/spark-2.2.-bin/jars/javax.servlet-api-3.1..jar:/opt/modules/spark-2.2.-bin/jars/mysql-connector-java-5.1..jar:/opt/modules/spark-2.2.-bin/jars/libfb303-0.9..jar:/opt/modules/spark-2.2.-bin/jars/httpcore-4.4..jar:/opt/modules/spark-2.2.-bin/jars/chill_2.-0.8..jar:/opt/modules/spark-2.2.-bin/jars/spark-sketch_2.-2.2..jar:/opt/modules/spark-2.2.-bin/jars/commons-lang3-3.5.jar:/opt/modules/spark-2.2.-bin/jars/mail-1.4..jar:/opt/modules/spark-2.2.-bin/jars/apache-log4j-extras-1.2..jar:/opt/modules/spark-2.2.-bin/jars/xmlenc-0.52.jar:/opt/modules/spark-2.2.-bin/jars/avro-1.7..jar:/opt/modules/spark-2.2.-bin/jars/hadoop-yarn-server-common-2.6..jar:/opt/modules/spark-2.2.-bin/jars/hadoop-yarn-api-2.6..jar:/opt/modules/spark-2.2.-bin/jars/hadoop-hdfs-2.6..jar:/opt/modules/spark-2.2.-bin/jars/pmml-schema-1.2..jar:/opt/modules/spark-2.2.-bin/jars/calcite-core-1.2.-incubating.jar:/opt/modules/spark-2.2.-bin/jars/spark-unsafe_2.-2.2..jar:/opt/modules/spark-2.2.-bin/jars/base64-2.3..jar:/opt/modules/spark-2.2.-bin/jars/jackson-jaxrs-1.9..jar:/opt/modules/spark-2.2.-bin/jars/hadoop-mapreduce-client-shuffle-2.6..jar:/opt/modules/s
park-2.2.-bin/jars/oro-2.0..jar:/opt/modules/spark-2.2.-bin/jars/commons-digester-1.8.jar:/opt/modules/spark-2.2.-bin/jars/commons-io-2.4.jar:/opt/modules/spark-2.2.-bin/jars/commons-logging-1.1..jar:/opt/modules/spark-2.2.-bin/jars/macro-compat_2.-1.1..jar:/opt/modules/hadoop-2.6./etc/hadoop/

// :: INFO ZooKeeper: Client environment:java.library.path=/usr/java/packages/lib/amd64:/usr/lib64:/lib64:/lib:/usr/lib

// :: INFO ZooKeeper: Client environment:java.io.tmpdir=/tmp

// :: INFO ZooKeeper: Client environment:java.compiler=<NA>

// :: INFO ZooKeeper: Client environment:os.name=Linux

// :: INFO ZooKeeper: Client environment:os.arch=amd64

// :: INFO ZooKeeper: Client environment:os.version=2.6.-.el6.x86_64

// :: INFO ZooKeeper: Client environment:user.name=kfk

// :: INFO ZooKeeper: Client environment:user.home=/home/kfk

// :: INFO ZooKeeper: Client environment:user.dir=/opt/modules/spark-2.2.-bin

// :: INFO ZooKeeper: Initiating client connection, connectString=bigdata-pro02.kfk.com:,bigdata-pro01.kfk.com:,bigdata-pro03.kfk.com: sessionTimeout= watcher=hconnection-0x490dfe25, quorum=bigdata-pro02.kfk.com:,bigdata-pro01.kfk.com:,bigdata-pro03.kfk.com:, baseZNode=/hbase

// :: INFO ClientCnxn: Opening socket connection to server bigdata-pro02.kfk.com/192.168.86.152:. Will not attempt to authenticate using SASL (unknown error)

// :: INFO ClientCnxn: Socket connection established to bigdata-pro02.kfk.com/192.168.86.152:, initiating session

// :: INFO ClientCnxn: Session establishment complete on server bigdata-pro02.kfk.com/192.168.86.152:, sessionid = 0x2623d3a0dea0018, negotiated timeout =

// :: INFO RegionSizeCalculator: Calculating region sizes for table "weblogs".

// :: WARN TableInputFormatBase: Cannot resolve the host name for bigdata-pro03.kfk.com/192.168.86.153 because of javax.naming.NameNotFoundException: DNS name not found [response code ]; remaining name '153.86.168.192.in-addr.arpa'

// :: INFO SparkContext: Starting job: show at <console>:

// :: INFO DAGScheduler: Registering RDD (show at <console>:)

// :: INFO DAGScheduler: Got job (show at <console>:) with output partitions

// :: INFO DAGScheduler: Final stage: ResultStage (show at <console>:)

// :: INFO DAGScheduler: Parents of final stage: List(ShuffleMapStage )

// :: INFO DAGScheduler: Missing parents: List(ShuffleMapStage )

// :: INFO DAGScheduler: Submitting ShuffleMapStage (MapPartitionsRDD[] at show at <console>:), which has no missing parents

// :: INFO MemoryStore: Block broadcast_1 stored as values in memory (estimated size 18.9 KB, free 413.6 MB)

// :: INFO MemoryStore: Block broadcast_1_piece0 stored as bytes in memory (estimated size 9.9 KB, free 413.6 MB)

// :: INFO BlockManagerInfo: Added broadcast_1_piece0 in memory on 192.168.86.151: (size: 9.9 KB, free: 413.9 MB)

// :: INFO SparkContext: Created broadcast from broadcast at DAGScheduler.scala:

// :: INFO DAGScheduler: Submitting missing tasks from ShuffleMapStage (MapPartitionsRDD[] at show at <console>:) (first tasks are for partitions Vector())

// :: INFO TaskSchedulerImpl: Adding task set 0.0 with tasks

// :: INFO TaskSetManager: Starting task 0.0 in stage 0.0 (TID , localhost, executor driver, partition , ANY, bytes)

// :: INFO Executor: Running task 0.0 in stage 0.0 (TID )

// :: INFO HadoopRDD: Input split: bigdata-pro03.kfk.com:,

// :: INFO TableInputFormatBase: Input split length: M bytes.

// :: INFO TransportClientFactory: Successfully created connection to /192.168.86.151: after ms ( ms spent in bootstraps)

// :: INFO CodeGenerator: Code generated in 377.251736 ms

// :: ERROR ExecutorClassLoader: Failed to check existence of class HBase Counters on REPL class server at spark://192.168.86.151:34328/classes

java.net.URISyntaxException: Illegal character in path at index : spark://192.168.86.151:34328/classes/HBase Counters.class

at java.net.URI$Parser.fail(URI.java:)

at java.net.URI$Parser.checkChars(URI.java:)

at java.net.URI$Parser.parseHierarchical(URI.java:)

at java.net.URI$Parser.parse(URI.java:)

at java.net.URI.<init>(URI.java:)

at org.apache.spark.rpc.netty.NettyRpcEnv.openChannel(NettyRpcEnv.scala:)

at org.apache.spark.repl.ExecutorClassLoader.org$apache$spark$repl$ExecutorClassLoader$$getClassFileInputStreamFromSparkRPC(ExecutorClassLoader.scala:)

at org.apache.spark.repl.ExecutorClassLoader$$anonfun$.apply(ExecutorClassLoader.scala:)

at org.apache.spark.repl.ExecutorClassLoader$$anonfun$.apply(ExecutorClassLoader.scala:)

at org.apache.spark.repl.ExecutorClassLoader.findClassLocally(ExecutorClassLoader.scala:)

at org.apache.spark.repl.ExecutorClassLoader.findClass(ExecutorClassLoader.scala:)

at java.lang.ClassLoader.loadClass(ClassLoader.java:)

at java.lang.ClassLoader.loadClass(ClassLoader.java:)

at java.util.ResourceBundle$Control.newBundle(ResourceBundle.java:)

at java.util.ResourceBundle.loadBundle(ResourceBundle.java:)

at java.util.ResourceBundle.findBundle(ResourceBundle.java:)

at java.util.ResourceBundle.findBundle(ResourceBundle.java:)

at java.util.ResourceBundle.findBundle(ResourceBundle.java:)

at java.util.ResourceBundle.getBundleImpl(ResourceBundle.java:)

at java.util.ResourceBundle.getBundle(ResourceBundle.java:)

at org.apache.hadoop.mapreduce.util.ResourceBundles.getBundle(ResourceBundles.java:)

at org.apache.hadoop.mapreduce.util.ResourceBundles.getValue(ResourceBundles.java:)

at org.apache.hadoop.mapreduce.util.ResourceBundles.getCounterGroupName(ResourceBundles.java:)

at org.apache.hadoop.mapreduce.counters.CounterGroupFactory.newGroup(CounterGroupFactory.java:)

at org.apache.hadoop.mapreduce.counters.AbstractCounters.getGroup(AbstractCounters.java:)

at org.apache.hadoop.mapreduce.counters.AbstractCounters.findCounter(AbstractCounters.java:)

at org.apache.hadoop.mapreduce.task.TaskAttemptContextImpl$DummyReporter.getCounter(TaskAttemptContextImpl.java:)

at org.apache.hadoop.mapreduce.task.TaskAttemptContextImpl.getCounter(TaskAttemptContextImpl.java:)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:)

at java.lang.reflect.Method.invoke(Method.java:)

at org.apache.hadoop.hbase.mapreduce.TableRecordReaderImpl.updateCounters(TableRecordReaderImpl.java:)

at org.apache.hadoop.hbase.mapreduce.TableRecordReaderImpl.updateCounters(TableRecordReaderImpl.java:)

at org.apache.hadoop.hbase.mapreduce.TableRecordReaderImpl.nextKeyValue(TableRecordReaderImpl.java:)

at org.apache.hadoop.hbase.mapreduce.TableRecordReader.nextKeyValue(TableRecordReader.java:)

at org.apache.hadoop.hive.hbase.HiveHBaseTableInputFormat$.next(HiveHBaseTableInputFormat.java:)

at org.apache.hadoop.hive.hbase.HiveHBaseTableInputFormat$.next(HiveHBaseTableInputFormat.java:)

at org.apache.spark.rdd.HadoopRDD$$anon$.getNext(HadoopRDD.scala:)

at org.apache.spark.rdd.HadoopRDD$$anon$.getNext(HadoopRDD.scala:)

at org.apache.spark.util.NextIterator.hasNext(NextIterator.scala:)

at org.apache.spark.InterruptibleIterator.hasNext(InterruptibleIterator.scala:)

at scala.collection.Iterator$$anon$.hasNext(Iterator.scala:)

at scala.collection.Iterator$$anon$.hasNext(Iterator.scala:)

at scala.collection.Iterator$$anon$.hasNext(Iterator.scala:)

at org.apache.spark.sql.catalyst.expressions.GeneratedClass$GeneratedIterator.agg_doAggregateWithoutKey$(Unknown Source)

at org.apache.spark.sql.catalyst.expressions.GeneratedClass$GeneratedIterator.processNext(Unknown Source)

at org.apache.spark.sql.execution.BufferedRowIterator.hasNext(BufferedRowIterator.java:)

at org.apache.spark.sql.execution.WholeStageCodegenExec$$anonfun$$$anon$.hasNext(WholeStageCodegenExec.scala:)

at scala.collection.Iterator$$anon$.hasNext(Iterator.scala:)

at org.apache.spark.shuffle.sort.BypassMergeSortShuffleWriter.write(BypassMergeSortShuffleWriter.java:)

at org.apache.spark.scheduler.ShuffleMapTask.runTask(ShuffleMapTask.scala:)

at org.apache.spark.scheduler.ShuffleMapTask.runTask(ShuffleMapTask.scala:)

at org.apache.spark.scheduler.Task.run(Task.scala:)

at org.apache.spark.executor.Executor$TaskRunner.run(Executor.scala:)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:)

at java.lang.Thread.run(Thread.java:)

// :: ERROR ExecutorClassLoader: Failed to check existence of class HBase Counters_en on REPL class server at spark://192.168.86.151:34328/classes

java.net.URISyntaxException: Illegal character in path at index : spark://192.168.86.151:34328/classes/HBase Counters_en.class

at java.net.URI$Parser.fail(URI.java:)

at java.net.URI$Parser.checkChars(URI.java:)

at java.net.URI$Parser.parseHierarchical(URI.java:)

at java.net.URI$Parser.parse(URI.java:)

at java.net.URI.<init>(URI.java:)

at org.apache.spark.rpc.netty.NettyRpcEnv.openChannel(NettyRpcEnv.scala:)

at org.apache.spark.repl.ExecutorClassLoader.org$apache$spark$repl$ExecutorClassLoader$$getClassFileInputStreamFromSparkRPC(ExecutorClassLoader.scala:)

at org.apache.spark.repl.ExecutorClassLoader$$anonfun$.apply(ExecutorClassLoader.scala:)

at org.apache.spark.repl.ExecutorClassLoader$$anonfun$.apply(ExecutorClassLoader.scala:)

at org.apache.spark.repl.ExecutorClassLoader.findClassLocally(ExecutorClassLoader.scala:)

at org.apache.spark.repl.ExecutorClassLoader.findClass(ExecutorClassLoader.scala:)

at java.lang.ClassLoader.loadClass(ClassLoader.java:)

at java.lang.ClassLoader.loadClass(ClassLoader.java:)

at java.util.ResourceBundle$Control.newBundle(ResourceBundle.java:)

at java.util.ResourceBundle.loadBundle(ResourceBundle.java:)

at java.util.ResourceBundle.findBundle(ResourceBundle.java:)

at java.util.ResourceBundle.findBundle(ResourceBundle.java:)

at java.util.ResourceBundle.getBundleImpl(ResourceBundle.java:)

at java.util.ResourceBundle.getBundle(ResourceBundle.java:)

at org.apache.hadoop.mapreduce.util.ResourceBundles.getBundle(ResourceBundles.java:)

at org.apache.hadoop.mapreduce.util.ResourceBundles.getValue(ResourceBundles.java:)

at org.apache.hadoop.mapreduce.util.ResourceBundles.getCounterGroupName(ResourceBundles.java:)

at org.apache.hadoop.mapreduce.counters.CounterGroupFactory.newGroup(CounterGroupFactory.java:)

at org.apache.hadoop.mapreduce.counters.AbstractCounters.getGroup(AbstractCounters.java:)

at org.apache.hadoop.mapreduce.counters.AbstractCounters.findCounter(AbstractCounters.java:)

at org.apache.hadoop.mapreduce.task.TaskAttemptContextImpl$DummyReporter.getCounter(TaskAttemptContextImpl.java:)

at org.apache.hadoop.mapreduce.task.TaskAttemptContextImpl.getCounter(TaskAttemptContextImpl.java:)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:)

at java.lang.reflect.Method.invoke(Method.java:)

at org.apache.hadoop.hbase.mapreduce.TableRecordReaderImpl.updateCounters(TableRecordReaderImpl.java:)

at org.apache.hadoop.hbase.mapreduce.TableRecordReaderImpl.updateCounters(TableRecordReaderImpl.java:)

at org.apache.hadoop.hbase.mapreduce.TableRecordReaderImpl.nextKeyValue(TableRecordReaderImpl.java:)

at org.apache.hadoop.hbase.mapreduce.TableRecordReader.nextKeyValue(TableRecordReader.java:)

at org.apache.hadoop.hive.hbase.HiveHBaseTableInputFormat$.next(HiveHBaseTableInputFormat.java:)

at org.apache.hadoop.hive.hbase.HiveHBaseTableInputFormat$.next(HiveHBaseTableInputFormat.java:)

at org.apache.spark.rdd.HadoopRDD$$anon$.getNext(HadoopRDD.scala:)

at org.apache.spark.rdd.HadoopRDD$$anon$.getNext(HadoopRDD.scala:)

at org.apache.spark.util.NextIterator.hasNext(NextIterator.scala:)

at org.apache.spark.InterruptibleIterator.hasNext(InterruptibleIterator.scala:)

at scala.collection.Iterator$$anon$.hasNext(Iterator.scala:)

at scala.collection.Iterator$$anon$.hasNext(Iterator.scala:)

at scala.collection.Iterator$$anon$.hasNext(Iterator.scala:)

at org.apache.spark.sql.catalyst.expressions.GeneratedClass$GeneratedIterator.agg_doAggregateWithoutKey$(Unknown Source)

at org.apache.spark.sql.catalyst.expressions.GeneratedClass$GeneratedIterator.processNext(Unknown Source)

at org.apache.spark.sql.execution.BufferedRowIterator.hasNext(BufferedRowIterator.java:)

at org.apache.spark.sql.execution.WholeStageCodegenExec$$anonfun$$$anon$.hasNext(WholeStageCodegenExec.scala:)

at scala.collection.Iterator$$anon$.hasNext(Iterator.scala:)

at org.apache.spark.shuffle.sort.BypassMergeSortShuffleWriter.write(BypassMergeSortShuffleWriter.java:)

at org.apache.spark.scheduler.ShuffleMapTask.runTask(ShuffleMapTask.scala:)

at org.apache.spark.scheduler.ShuffleMapTask.runTask(ShuffleMapTask.scala:)

at org.apache.spark.scheduler.Task.run(Task.scala:)

at org.apache.spark.executor.Executor$TaskRunner.run(Executor.scala:)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:)

at java.lang.Thread.run(Thread.java:)

// :: ERROR ExecutorClassLoader: Failed to check existence of class HBase Counters_en_US on REPL class server at spark://192.168.86.151:34328/classes

java.net.URISyntaxException: Illegal character in path at index : spark://192.168.86.151:34328/classes/HBase Counters_en_US.class

at java.net.URI$Parser.fail(URI.java:)

at java.net.URI$Parser.checkChars(URI.java:)

at java.net.URI$Parser.parseHierarchical(URI.java:)

at java.net.URI$Parser.parse(URI.java:)

at java.net.URI.<init>(URI.java:)

at org.apache.spark.rpc.netty.NettyRpcEnv.openChannel(NettyRpcEnv.scala:)

at org.apache.spark.repl.ExecutorClassLoader.org$apache$spark$repl$ExecutorClassLoader$$getClassFileInputStreamFromSparkRPC(ExecutorClassLoader.scala:)

at org.apache.spark.repl.ExecutorClassLoader$$anonfun$.apply(ExecutorClassLoader.scala:)

at org.apache.spark.repl.ExecutorClassLoader$$anonfun$.apply(ExecutorClassLoader.scala:)

at org.apache.spark.repl.ExecutorClassLoader.findClassLocally(ExecutorClassLoader.scala:)

at org.apache.spark.repl.ExecutorClassLoader.findClass(ExecutorClassLoader.scala:)

at java.lang.ClassLoader.loadClass(ClassLoader.java:)

at java.lang.ClassLoader.loadClass(ClassLoader.java:)

at java.util.ResourceBundle$Control.newBundle(ResourceBundle.java:)

at java.util.ResourceBundle.loadBundle(ResourceBundle.java:)

at java.util.ResourceBundle.findBundle(ResourceBundle.java:)

at java.util.ResourceBundle.getBundleImpl(ResourceBundle.java:)

at java.util.ResourceBundle.getBundle(ResourceBundle.java:)

at org.apache.hadoop.mapreduce.util.ResourceBundles.getBundle(ResourceBundles.java:)

at org.apache.hadoop.mapreduce.util.ResourceBundles.getValue(ResourceBundles.java:)

at org.apache.hadoop.mapreduce.util.ResourceBundles.getCounterGroupName(ResourceBundles.java:)

at org.apache.hadoop.mapreduce.counters.CounterGroupFactory.newGroup(CounterGroupFactory.java:)

at org.apache.hadoop.mapreduce.counters.AbstractCounters.getGroup(AbstractCounters.java:)

at org.apache.hadoop.mapreduce.counters.AbstractCounters.findCounter(AbstractCounters.java:)

at org.apache.hadoop.mapreduce.task.TaskAttemptContextImpl$DummyReporter.getCounter(TaskAttemptContextImpl.java:)

at org.apache.hadoop.mapreduce.task.TaskAttemptContextImpl.getCounter(TaskAttemptContextImpl.java:)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:)

at java.lang.reflect.Method.invoke(Method.java:)

at org.apache.hadoop.hbase.mapreduce.TableRecordReaderImpl.updateCounters(TableRecordReaderImpl.java:)

at org.apache.hadoop.hbase.mapreduce.TableRecordReaderImpl.updateCounters(TableRecordReaderImpl.java:)

at org.apache.hadoop.hbase.mapreduce.TableRecordReaderImpl.nextKeyValue(TableRecordReaderImpl.java:)

at org.apache.hadoop.hbase.mapreduce.TableRecordReader.nextKeyValue(TableRecordReader.java:)

at org.apache.hadoop.hive.hbase.HiveHBaseTableInputFormat$.next(HiveHBaseTableInputFormat.java:)

at org.apache.hadoop.hive.hbase.HiveHBaseTableInputFormat$.next(HiveHBaseTableInputFormat.java:)

at org.apache.spark.rdd.HadoopRDD$$anon$.getNext(HadoopRDD.scala:)

at org.apache.spark.rdd.HadoopRDD$$anon$.getNext(HadoopRDD.scala:)

at org.apache.spark.util.NextIterator.hasNext(NextIterator.scala:)

at org.apache.spark.InterruptibleIterator.hasNext(InterruptibleIterator.scala:)

at scala.collection.Iterator$$anon$.hasNext(Iterator.scala:)

at scala.collection.Iterator$$anon$.hasNext(Iterator.scala:)

at scala.collection.Iterator$$anon$.hasNext(Iterator.scala:)

at org.apache.spark.sql.catalyst.expressions.GeneratedClass$GeneratedIterator.agg_doAggregateWithoutKey$(Unknown Source)

at org.apache.spark.sql.catalyst.expressions.GeneratedClass$GeneratedIterator.processNext(Unknown Source)

at org.apache.spark.sql.execution.BufferedRowIterator.hasNext(BufferedRowIterator.java:)

at org.apache.spark.sql.execution.WholeStageCodegenExec$$anonfun$$$anon$.hasNext(WholeStageCodegenExec.scala:)

at scala.collection.Iterator$$anon$.hasNext(Iterator.scala:)

at org.apache.spark.shuffle.sort.BypassMergeSortShuffleWriter.write(BypassMergeSortShuffleWriter.java:)

at org.apache.spark.scheduler.ShuffleMapTask.runTask(ShuffleMapTask.scala:)

at org.apache.spark.scheduler.ShuffleMapTask.runTask(ShuffleMapTask.scala:)

at org.apache.spark.scheduler.Task.run(Task.scala:)

at org.apache.spark.executor.Executor$TaskRunner.run(Executor.scala:)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:)

at java.lang.Thread.run(Thread.java:)

// :: INFO Executor: Finished task 0.0 in stage 0.0 (TID ). bytes result sent to driver

// :: INFO TaskSetManager: Finished task 0.0 in stage 0.0 (TID ) in ms on localhost (executor driver) (/)

// :: INFO TaskSchedulerImpl: Removed TaskSet 0.0, whose tasks have all completed, from pool

// :: INFO DAGScheduler: ShuffleMapStage (show at <console>:) finished in 1.772 s

// :: INFO DAGScheduler: looking for newly runnable stages

// :: INFO DAGScheduler: running: Set()

// :: INFO DAGScheduler: waiting: Set(ResultStage )

// :: INFO DAGScheduler: failed: Set()

// :: INFO DAGScheduler: Submitting ResultStage (MapPartitionsRDD[] at show at <console>:), which has no missing parents

// :: INFO MemoryStore: Block broadcast_2 stored as values in memory (estimated size 6.9 KB, free 413.6 MB)

// :: INFO MemoryStore: Block broadcast_2_piece0 stored as bytes in memory (estimated size 3.7 KB, free 413.6 MB)

// :: INFO BlockManagerInfo: Added broadcast_2_piece0 in memory on 192.168.86.151: (size: 3.7 KB, free: 413.9 MB)

// :: INFO SparkContext: Created broadcast from broadcast at DAGScheduler.scala:

// :: INFO DAGScheduler: Submitting missing tasks from ResultStage (MapPartitionsRDD[] at show at <console>:) (first tasks are for partitions Vector())

// :: INFO TaskSchedulerImpl: Adding task set 1.0 with tasks

// :: INFO TaskSetManager: Starting task 0.0 in stage 1.0 (TID , localhost, executor driver, partition , ANY, bytes)

// :: INFO Executor: Running task 0.0 in stage 1.0 (TID )

// :: INFO ShuffleBlockFetcherIterator: Getting non-empty blocks out of blocks

// :: INFO ShuffleBlockFetcherIterator: Started remote fetches in ms

// :: INFO Executor: Finished task 0.0 in stage 1.0 (TID ). bytes result sent to driver

// :: INFO TaskSetManager: Finished task 0.0 in stage 1.0 (TID ) in ms on localhost (executor driver) (/)

// :: INFO DAGScheduler: ResultStage (show at <console>:) finished in 0.166 s

// :: INFO TaskSchedulerImpl: Removed TaskSet 1.0, whose tasks have all completed, from pool

// :: INFO DAGScheduler: Job finished: show at <console>:, took 2.618861 s

// :: INFO CodeGenerator: Code generated in 53.284282 ms

+--------+

|count(1)|

+--------+

| |

+--------+

df: Unit = ()

scala> val df =spark.sql("select * from weblogs limit 10").show

// :: INFO BlockManagerInfo: Removed broadcast_2_piece0 on 192.168.86.151: in memory (size: 3.7 KB, free: 413.9 MB)

// :: INFO SparkSqlParser: Parsing command: select * from weblogs limit 10

// :: INFO CatalystSqlParser: Parsing command: string

// :: INFO CatalystSqlParser: Parsing command: string

// :: INFO CatalystSqlParser: Parsing command: string

// :: INFO CatalystSqlParser: Parsing command: string

// :: INFO CatalystSqlParser: Parsing command: string

// :: INFO CatalystSqlParser: Parsing command: string

// :: INFO CatalystSqlParser: Parsing command: string

// :: INFO MemoryStore: Block broadcast_3 stored as values in memory (estimated size 242.3 KB, free 413.4 MB)

// :: INFO MemoryStore: Block broadcast_3_piece0 stored as bytes in memory (estimated size 23.0 KB, free 413.4 MB)

// :: INFO BlockManagerInfo: Added broadcast_3_piece0 in memory on 192.168.86.151: (size: 23.0 KB, free: 413.9 MB)

// :: INFO SparkContext: Created broadcast from

// :: INFO HBaseStorageHandler: Configuring input job properties

// :: INFO RegionSizeCalculator: Calculating region sizes for table "weblogs".

// :: WARN TableInputFormatBase: Cannot resolve the host name for bigdata-pro03.kfk.com/192.168.86.153 because of javax.naming.NameNotFoundException: DNS name not found [response code ]; remaining name '153.86.168.192.in-addr.arpa'

// :: INFO SparkContext: Starting job: show at <console>:

// :: INFO DAGScheduler: Got job (show at <console>:) with output partitions

// :: INFO DAGScheduler: Final stage: ResultStage (show at <console>:)

// :: INFO DAGScheduler: Parents of final stage: List()

// :: INFO DAGScheduler: Missing parents: List()

// :: INFO DAGScheduler: Submitting ResultStage (MapPartitionsRDD[] at show at <console>:), which has no missing parents

// :: INFO MemoryStore: Block broadcast_4 stored as values in memory (estimated size 15.8 KB, free 413.4 MB)

// :: INFO MemoryStore: Block broadcast_4_piece0 stored as bytes in memory (estimated size 8.4 KB, free 413.4 MB)

// :: INFO BlockManagerInfo: Added broadcast_4_piece0 in memory on 192.168.86.151: (size: 8.4 KB, free: 413.9 MB)

// :: INFO SparkContext: Created broadcast from broadcast at DAGScheduler.scala:

// :: INFO DAGScheduler: Submitting missing tasks from ResultStage (MapPartitionsRDD[] at show at <console>:) (first tasks are for partitions Vector())

// :: INFO TaskSchedulerImpl: Adding task set 2.0 with tasks

// :: INFO TaskSetManager: Starting task 0.0 in stage 2.0 (TID , localhost, executor driver, partition , ANY, bytes)

// :: INFO Executor: Running task 0.0 in stage 2.0 (TID )

// :: INFO HadoopRDD: Input split: bigdata-pro03.kfk.com:,

// :: INFO TableInputFormatBase: Input split length: M bytes.

// :: INFO CodeGenerator: Code generated in 94.66518 ms

// :: INFO Executor: Finished task 0.0 in stage 2.0 (TID ). bytes result sent to driver

// :: INFO TaskSetManager: Finished task 0.0 in stage 2.0 (TID ) in ms on localhost (executor driver) (/)

// :: INFO DAGScheduler: ResultStage (show at <console>:) finished in 0.361 s

// :: INFO DAGScheduler: Job finished: show at <console>:, took 0.421794 s

// :: INFO TaskSchedulerImpl: Removed TaskSet 2.0, whose tasks have all completed, from pool

// :: INFO CodeGenerator: Code generated in 95.678505 ms

+--------------------+--------+-------------------+----------+--------+--------+--------------------+

| id|datatime| userid|searchname|retorder|cliorder| cliurl|

+--------------------+--------+-------------------+----------+--------+--------+--------------------+

|...|::|| [蒙古国地图]| | |maps.blogtt.com/m...|

|...|::|| [蒙古国地图]| | |maps.blogtt.com/m...|

|...|::|| [ppg]| | |www.ppg.cn/yesppg...|

|...|::|| [ppg]| | |www.ppg.cn/yesppg...|

|...|::|| [明星合成]| | |scsdcsadwa.blog.s...|

|...|::|| [明星合成]| | |scsdcsadwa.blog.s...|

|...|::|| [明星合成]| | | av.avbox.us/|

|...|::|| [明星合成]| | | av.avbox.us/|

|...|::|| [明星合成]| | |csdfhnuop.blog.so...|

|...|::|| [明星合成]| | |csdfhnuop.blog.so...|

+--------------------+--------+-------------------+----------+--------+--------+--------------------+

df: Unit = ()

scala> // :: INFO BlockManagerInfo: Removed broadcast_4_piece0 on 192.168.86.151: in memory (size: 8.4 KB, free: 413.9 MB)

// :: INFO BlockManagerInfo: Removed broadcast_3_piece0 on 192.168.86.151: in memory (size: 23.0 KB, free: 413.9 MB)

// :: INFO ContextCleaner: Cleaned accumulator
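Note that `show` prints the rows and returns `Unit`, which is why the REPL reports `df: Unit = ()` above: `df` never holds the DataFrame. If you want to reuse the query result, bind the DataFrame first and call `show` separately. Below is a minimal sketch written as a standalone application. It assumes Spark 2.x with Hive support and the same `hive-site.xml` in Spark's `conf/` directory, so the Hive table `weblogs` is visible. The object name `WeblogsPreview` and the helper `limitQuery` are hypothetical.

```scala
import org.apache.spark.sql.{DataFrame, SparkSession}

object WeblogsPreview {
  // Hypothetical helper: builds the preview query used in the transcript
  def limitQuery(table: String, n: Int): String = s"select * from $table limit $n"

  def main(args: Array[String]): Unit = {
    // enableHiveSupport() lets Spark SQL resolve tables through the Hive
    // metastore configured in hive-site.xml (copied into Spark's conf/)
    val spark = SparkSession.builder()
      .appName("WeblogsPreview")
      .enableHiveSupport()
      .getOrCreate()

    // Bind the DataFrame itself; appending .show here would leave a Unit
    val df: DataFrame = spark.sql(limitQuery("weblogs", 10))
    df.printSchema()   // column names and types taken from the Hive table
    df.show()          // at most 20 rows, cells cut to 20 characters
    df.show(10, false) // the same rows with full cell contents (e.g. cliurl)

    spark.stop()
  }
}
```

In spark-shell itself, the equivalent is `val df = spark.sql("select * from weblogs limit 10")` followed by `df.show()`.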

Next, we start spark-shell in cluster mode and run the count query again.
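In spark-shell, you choose the cluster at launch time with the `--master` option. In a compiled application, you can make the same choice in code. The sketch below is not the author's setup. It assumes a Spark standalone master on `bigdata-pro01.kfk.com` listening on the standalone default port 7077; the post does not state the master URL. The names `WeblogsOnCluster` and `standaloneMaster` are hypothetical.

```scala
import org.apache.spark.sql.SparkSession

object WeblogsOnCluster {
  // Hypothetical helper: builds a standalone-mode master URL (7077 is the default port)
  def standaloneMaster(host: String, port: Int = 7077): String = s"spark://$host:$port"

  def main(args: Array[String]): Unit = {
    // Assumption: the standalone master runs on bigdata-pro01.kfk.com
    val spark = SparkSession.builder()
      .appName("WeblogsOnCluster")
      .master(standaloneMaster("bigdata-pro01.kfk.com"))
      .enableHiveSupport()
      .getOrCreate()

    // With a cluster master, tasks run on executors on the worker nodes
    // instead of on the driver ("executor driver" in the local-mode log)
    spark.sql("select count(1) from weblogs").show()
    spark.stop()
  }
}
```

Hard-coding the master works for a quick test. Passing `--master` to `spark-submit` instead lets the same jar run in both local and cluster mode. Either way, the executors also need the HBase client jars and `hive-hbase-handler`. In this setup those jars sit in Spark's `jars/` directory, as the ZooKeeper classpath line in the log shows.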

scala> spark.sql("select count(1) from weblogs").show

// :: INFO SparkSqlParser: Parsing command: select count(1) from weblogs

// :: INFO CatalystSqlParser: Parsing command: string

// :: INFO CatalystSqlParser: Parsing command: string

// :: INFO CatalystSqlParser: Parsing command: string

// :: INFO CatalystSqlParser: Parsing command: string

// :: INFO CatalystSqlParser: Parsing command: string

// :: INFO CatalystSqlParser: Parsing command: string

// :: INFO CatalystSqlParser: Parsing command: string

// :: INFO ContextCleaner: Cleaned accumulator

// :: INFO CodeGenerator: Code generated in 1043.849585 ms

// :: INFO CodeGenerator: Code generated in 79.914587 ms

// :: INFO MemoryStore: Block broadcast_0 stored as values in memory (estimated size 242.2 KB, free 413.7 MB)

// :: INFO MemoryStore: Block broadcast_0_piece0 stored as bytes in memory (estimated size 22.9 KB, free 413.7 MB)

// :: INFO BlockManagerInfo: Added broadcast_0_piece0 in memory on 192.168.86.151: (size: 22.9 KB, free: 413.9 MB)

// :: INFO SparkContext: Created broadcast from

// :: INFO HBaseStorageHandler: Configuring input job properties

// :: INFO RecoverableZooKeeper: Process identifier=hconnection-0x25131637 connecting to ZooKeeper ensemble=bigdata-pro02.kfk.com:,bigdata-pro01.kfk.com:,bigdata-pro03.kfk.com:

// :: INFO ZooKeeper: Client environment:zookeeper.version=3.4.-, built on // : GMT

// :: INFO ZooKeeper: Client environment:host.name=bigdata-pro01.kfk.com

// :: INFO ZooKeeper: Client environment:java.version=1.8.0_60

// :: INFO ZooKeeper: Client environment:java.vendor=Oracle Corporation

// :: INFO ZooKeeper: Client environment:java.home=/opt/modules/jdk1..0_60/jre

// :: INFO ZooKeeper: Client environment:java.class.path=/opt/modules/spark-2.2.-bin/conf/:/opt/modules/spark-2.2.-bin/jars/htrace-core-3.0..jar:/opt/modules/spark-2.2.-bin/jars/jpam-1.1.jar:/opt/modules/spark-2.2.-bin/jars/mysql-connector-java-5.1.-bin.jar:/opt/modules/spark-2.2.-bin/jars/snappy-java-1.1.2.6.jar:/opt/modules/spark-2.2.-bin/jars/commons-compress-1.4..jar:/opt/modules/spark-2.2.-bin/jars/hbase-server-0.98.-cdh5.3.0.jar:/opt/modules/spark-2.2.-bin/jars/spark-sql_2.-2.2..jar:/opt/modules/spark-2.2.-bin/jars/jersey-client-2.22..jar:/opt/modules/spark-2.2.-bin/jars/jetty-6.1..jar:/opt/modules/spark-2.2.-bin/jars/jackson-databind-2.6..jar:/opt/modules/spark-2.2.-bin/jars/javolution-5.5..jar:/opt/modules/spark-2.2.-bin/jars/opencsv-2.3.jar:/opt/modules/spark-2.2.-bin/jars/curator-framework-2.6..jar:/opt/modules/spark-2.2.-bin/jars/commons-collections-3.2..jar:/opt/modules/spark-2.2.-bin/jars/hadoop-mapreduce-client-jobclient-2.6..jar:/opt/modules/spark-2.2.-bin/jars/hk2-utils-2.4.-b34.jar:/opt/modules/spark-2.2.-bin/jars/metrics-graphite-3.1..jar:/opt/modules/spark-2.2.-bin/jars/pmml-model-1.2..jar:/opt/modules/spark-2.2.-bin/jars/compress-lzf-1.0..jar:/opt/modules/spark-2.2.-bin/jars/hadoop-mapreduce-client-app-2.6..jar:/opt/modules/spark-2.2.-bin/jars/parquet-encoding-1.8..jar:/opt/modules/spark-2.2.-bin/jars/xz-1.0.jar:/opt/modules/spark-2.2.-bin/jars/datanucleus-core-3.2..jar:/opt/modules/spark-2.2.-bin/jars/guice-servlet-3.0.jar:/opt/modules/spark-2.2.-bin/jars/stax-api-1.0-.jar:/opt/modules/spark-2.2.-bin/jars/eigenbase-properties-1.1..jar:/opt/modules/spark-2.2.-bin/jars/metrics-jvm-3.1..jar:/opt/modules/spark-2.2.-bin/jars/stream-2.7..jar:/opt/modules/spark-2.2.-bin/jars/spark-mllib-local_2.-2.2..jar:/opt/modules/spark-2.2.-bin/jars/derby-10.12.1.1.jar:/opt/modules/spark-2.2.-bin/jars/joda-time-2.9..jar:/opt/modules/spark-2.2.-bin/jars/parquet-common-1.8..jar:/opt/modules/spark-2.2.-bin/jars/ivy-2.4..jar:/opt/modules/spark-2.2.-bin/jars/slf4j-api-1.7..jar:/opt/modules/spark-2.2.-bin/jars/jetty-util-6.1..jar:/opt/modules/spark-2.2.-bin/jars/shapeless_2.-2.3..jar:/opt/modules/spark-2.2.-bin/jars/activation-1.1..jar:/opt/modules/spark-2.2.-bin/jars/jackson-module-scala_2.-2.6..jar:/opt/modules/spark-2.2.-bin/jars/libthrift-0.9..jar:/opt/modules/spark-2.2.-bin/jars/log4j-1.2..jar:/opt/modules/spark-2.2.-bin/jars/antlr4-runtime-4.5..jar:/opt/modules/spark-2.2.-bin/jars/chill-java-0.8..jar:/opt/modules/spark-2.2.-bin/jars/snappy-0.2.jar:/opt/modules/spark-2.2.-bin/jars/core-1.1..jar:/opt/modules/spark-2.2.-bin/jars/hadoop-annotations-2.6..jar:/opt/modules/spark-2.2.-bin/jars/jersey-container-servlet-2.22..jar:/opt/modules/spark-2.2.-bin/jars/spark-network-shuffle_2.-2.2..jar:/opt/modules/spark-2.2.-bin/jars/spark-graphx_2.-2.2..jar:/opt/modules/spark-2.2.-bin/jars/breeze_2.-0.13..jar:/opt/modules/spark-2.2.-bin/jars/scala-compiler-2.11..jar:/opt/modules/spark-2.2.-bin/jars/aopalliance-1.0.jar:/opt/modules/spark-2.2.-bin/jars/hadoop-mapreduce-client-common-2.6..jar:/opt/modules/spark-2.2.-bin/jars/aopalliance-repackaged-2.4.-b34.jar:/opt/modules/spark-2.2.-bin/jars/commons-beanutils-core-1.8..jar:/opt/modules/spark-2.2.-bin/jars/jsr305-1.3..jar:/opt/modules/spark-2.2.-bin/jars/hadoop-yarn-common-2.6..jar:/opt/modules/spark-2.2.-bin/jars/osgi-resource-locator-1.0..jar:/opt/modules/spark-2.2.-bin/jars/univocity-parsers-2.2..jar:/opt/modules/spark-2.2.-bin/jars/hive-exec-1.2..spark2.jar:/opt/modules/spark-2.2.-bin/jars/commons-crypto-1.0..jar:/opt/modules/spark-2.2.-bin/jars/metrics-json-3.1..jar:/opt/modules/spark-2.2.-bin/jars/minlog-1.3..jar:/opt/modules/spark-2.2.-bin/jars/JavaEWAH-0.3..jar:/opt/modules/spark-2.2.-bin/jars/json4s-jackson_2.-3.2..jar:/opt/modules/spark-2.2.-bin/jars/javax.ws.rs-api-2.0..jar:/opt/modules/spark-2.2.-bin/jars/commons-dbcp-1.4.jar:/opt/modules/spark-2.2.-bin/jars/slf4j-log4j12-1.7..jar:/opt/modules/spark-2.2.-bin/jars/javax.inject-2.4.-b34.jar:/opt/modules/spark-2.2.-bin/jars/scala-xml_2.-1.0..jar:/opt/modules/spark-2.2.-bin/jars/commons-pool-1.5..jar:/opt/modules/spark-2.2.-bin/jars/jaxb-api-2.2..jar:/opt/modules/spark-2.2.-bin/jars/spark-network-common_2.-2.2..jar:/opt/modules/spark-2.2.-bin/jars/gson-2.2..jar:/opt/modules/spark-2.2.-bin/jars/protobuf-java-2.5..jar:/opt/modules/spark-2.2.-bin/jars/objenesis-2.1.jar:/opt/modules/spark-2.2.-bin/jars/hive-metastore-1.2..spark2.jar:/opt/modules/spark-2.2.-bin/jars/jersey-container-servlet-core-2.22..jar:/opt/modules/spark-2.2.-bin/jars/stax-api-1.0..jar:/opt/modules/spark-2.2.-bin/jars/super-csv-2.2..jar:/opt/modules/spark-2.2.-bin/jars/metrics-core-3.1..jar:/opt/modules/spark-2.2.-bin/jars/scala-parser-combinators_2.-1.0..jar:/opt/modules/spark-2.2.-bin/jars/apacheds-i18n-2.0.-M15.jar:/opt/modules/spark-2.2.-bin/jars/spire_2.-0.13..jar:/opt/modules/spark-2.2.-bin/jars/xbean-asm5-shaded-4.4.jar:/opt/modules/spark-2.2.-bin/jars/httpclient-4.5..jar:/opt/modules/spark-2.2.-bin/jars/hive-beeline-1.2..spark2.jar:/opt/modules/spark-2.2.-bin/jars/janino-3.0..jar:/opt/modules/spark-2.2.-bin/jars/commons-beanutils-1.7..jar:/opt/modules/spark-2.2.-bin/jars/javax.annotation-api-1.2.jar:/opt/modules/spark-2.2.-bin/jars/curator-recipes-2.6..jar:/opt/modules/spark-2.2.-bin/jars/jackson-core-2.6..jar:/opt/modules/spark-2.2.-bin/jars/paranamer-2.6.jar:/opt/modules/spark-2.2.-bin/jars/hk2-locator-2.4.-b34.jar:/opt/modules/spark-2.2.-bin/jars/spark-hive_2.-2.2..jar:/opt/modules/spark-2.2.-bin/jars/bonecp-0.8..RELEASE.jar:/opt/modules/spark-2.2.-bin/jars/parquet-column-1.8..jar:/opt/modules/spark-2.2.-bin/jars/calcite-linq4j-1.2.-incubating.jar:/opt/modules/spark-2.2.-bin/jars/commons-cli-1.2.jar:/opt/modules/spark-2.2.-bin/jars/javax.inject-.jar:/opt/modules/spark-2.2.-bin/jars/hbase-common-0.98.-cdh5.3.0.jar:/opt/modules/spark-2.2.-bin/jars/spark-tags_2.-2.2..jar:/opt/modules/spark-2.2.-bin/jars/bcprov-jdk15on-1.51.jar:/opt/modules/spark-2.2.-bin/jars/stringtemplate-3.2..jar:/opt/modules/spark-2.2.-bin/jars/RoaringBitmap-0.5..jar:/opt/modules/spark-2.2.-bin/jars/hbase-client-0.98.-cdh5.3.0.jar:/opt/modules/spark-2.2.-bin/jars/commons-codec-1.10.jar:/opt/modules/spark-2.2.-bin/jars/hive-cli-1.2..spark2.jar:/opt/modules/spark-2.2.-bin/jars/scala-reflect-2.11..jar:/opt/modules/spark-2.2.-bin/jars/jline-2.12..jar:/opt/modules/spark-2.2.-bin/jars/jackson-core-asl-1.9..jar:/opt/modules/spark-2.2.-bin/jars/jersey-server-2.22..jar:/opt/modules/spark-2.2.-bin/jars/xercesImpl-2.9..jar:/opt/modules/spark-2.2.-bin/jars/parquet-format-2.3..jar:/opt/modules/spark-2.2.-bin/jars/jdo-api-3.0..jar:/opt/modules/spark-2.2.-bin/jars/commons-lang-2.6.jar:/opt/modules/spark-2.2.-bin/jars/jta-1.1.jar:/opt/modules/spark-2.2.-bin/jars/commons-httpclient-3.1.jar:/opt/modules/spark-2.2.-bin/jars/pyrolite-4.13.jar:/opt/modules/spark-2.2.-bin/jars/jul-to-slf4j-1.7..jar:/opt/modules/spark-2.2.-bin/jars/api-util-1.0.-M20.jar:/opt/modules/spark-2.2.-bin/jars/hive-hbase-handler-1.2..jar:/opt/modules/spark-2.2.-bin/jars/commons-math3-3.4..jar:/opt/modules/spark-2.2.-bin/jars/jets3t-0.9..jar:/opt/modules/spark-2.2.-bin/jars/spark-catalyst_2.-2.2..jar:/opt/modules/spark-2.2.-bin/jars/parquet-jackson-1.8..jar:/opt/modules/spark-2.2.-bin/jars/jackson-annotations-2.6..jar:/opt/modules/spark-2.2.-bin/jars/hadoop-yarn-server-web-proxy-2.6..jar:/opt/modules/spark-2.2.-bin/jars/spark-hive-thriftserver_2.-2.2..jar:/opt/modules/spark-2.2.-bin/jars/parquet-hadoop-1.8..jar:/opt/modules/spark-2.2.-bin/jars/apacheds-kerberos-codec-2.0.-M15.jar:/opt/modules/spark-2.2.-bin/jars/ST4-4.0..jar:/opt/modules/spark-2.2.-bin/jars/jackson-mapper-asl-1.9..jar:/opt/modules/spark-2.2.-bin/jars/machinist_2.-0.6..jar:/opt/modules/spark-2.2.-bin/jars/spark-mllib_2.-2.2..jar:/opt/modules/spark-2.2.-bin/jars/scala-library-2.11..jar:/opt/modules/spark-2.2.-bin/jars/guava-14.0..jar:/opt/modules/spark-2.2.-bin/jars/javassist-3.18.-GA.jar:/opt/modules/spark-2.2.-bin/jars/api-asn1-api-1.0.-M20.jar:/opt/modules/spark-2.2.-bin/jars/antlr-2.7..jar:/opt/modules/spark-2.2.-bin/jars/jackson-module-paranamer-2.6..jar:/opt/modules/spark-2.2.-bin/jars/curator-client-2.6..jar:/opt/modules/spark-2.2.-bin/jars/arpack_combined_all-0.1.jar:/opt/modules/spark-2.2.-bin/jars/datanucleus-api-jdo-3.2..jar:/opt/modules/spark-2.2.-bin/jars/calcite-avatica-1.2.-incubating.jar:/opt/modules/spark-2.2.-bin/jars/avro-mapred-1.7.-hadoop2.jar:/opt/modules/spark-2.2.-bin/jars/hive-jdbc-1.2..spark2.jar:/opt/modules/spark-2.2.-bin/jars/breeze-macros_2.-0.13..jar:/opt/modules/spark-2.2.-bin/jars/hbase-protocol-0.98.-cdh5.3.0.jar:/opt/modules/spark-2.2.-bin/jars/json4s-core_2.-3.2..jar:/opt/modules/spark-2.2.-bin/jars/spire-macros_2.-0.13..jar:/opt/modules/spark-2.2.-bin/jars/hadoop-client-2.6..jar:/opt/modules/spark-2.2.-bin/jars/mx4j-3.0..jar:/opt/modules/spark-2.2.-bin/jars/py4j-0.10..jar:/opt/modules/spark-2.2.-bin/jars/scalap-2.11..jar:/opt/modules/spark-2.2.-bin/jars/jersey-guava-2.22..jar:/opt/modules/spark-2.2.-bin/jars/jersey-media-jaxb-2.22..jar:/opt/modules/spark-2.2.-bin/jars/commons-configuration-1.6.jar:/opt/modules/spark-2.2.-bin/jars/json4s-ast_2.-3.2..jar:/opt/modules/spark-2.2.-bin/jars/htrace-core-2.04.jar:/opt/modules/spark-2.2.-bin/jars/hadoop-common-2.6..jar:/opt/modules/spark-2.2.-bin/jars/kryo-shaded-3.0..jar:/opt/modules/spark-2.2.-bin/jars/hadoop-auth-2.6..jar:/opt/modules/spark-2.2.-bin/jars/commons-compiler-3.0..jar:/opt/modules/spark-2.2.-bin/jars/jtransforms-2.4..jar:/opt/modules/spark-2.2.-bin/jars/commons-net-2.2.jar:/opt/modules/spark-2.2.-bin/jars/jcl-over-slf4j-1.7..jar:/opt/modules/spark-2.2.-bin/jars/spark-launcher_2.-2.2..jar:/opt/modules/spark-2.2.-bin/jars/spark-core_2.-2.2..jar:/opt/modules/spark-2.2.-bin/jars/antlr-runtime-3.4.jar:/opt/modules/spark-2.2.-bin/jars/spark-repl_2.-2.2..jar:/opt/modules/spark-2.2.-bin/jars/spark-streaming_2.-2.2..jar:/opt/modules/spark-2.2.-bin/jars/datanucleus-rdbms-3.2..jar:/opt/modules/spark-2.2.-bin/jars/netty-3.9..Final.jar:/opt/modules/spark-2.2.-bin/jars/lz4-1.3..jar:/opt/modules/spark-2.2.-bin/jars/zookeeper-3.4..jar:/opt/modules/spark-2.2.-bin/jars/java-xmlbuilder-1.0.jar:/opt/modules/spark-2.2.-bin/jars/jersey-common-2.22..jar:/opt/modules/spark-2.2.-bin/jars/netty-all-4.0..Final.jar:/opt/modules/spark-2.2.-bin/jars/validation-api-1.1..Final.jar:/opt/modules/spark-2.2.-bin/jars/hadoop-mapreduce-client-core-2.6..jar:/opt/modules/spark-2.2.-bin/jars/avro-ipc-1.7..jar:/opt/modules/spark-2.2.-bin/jars/jodd-core-3.5..jar:/opt/modules/spark-2.2.-bin/jars/jackson-xc-1.9..jar:/opt/modules/spark-2.2.-bin/jars/hadoop-yarn-client-2.6..jar:/opt/modules/spark-2.2.-bin/jars/guice-3.0.jar:/opt/modules/spark-2.2.-bin/jars/spark-yarn_2.-2.2..jar:/opt/modules/spark-2.2.-bin/jars/parquet-hadoop-bundle-1.6..jar:/opt/modules/spark-2.2.-bin/jars/leveldbjni-all-1.8.jar:/opt/modules/spark-2.2.-bin/jars/hk2-api-2.4.-b34.jar:/opt/modules/spark-2.2.-bin/jars/javax.servlet-api-3.1..jar:/opt/modules/spark-2.2.-bin/jars/mysql-connector-java-5.1..jar:/opt/modules/spark-2.2.-bin/jars/libfb303-0.9..jar:/opt/modules/spark-2.2.-bin/jars/httpcore-4.4..jar:/opt/modules/spark-2.2.-bin/jars/chill_2.-0.8..jar:/opt/modules/spark-2.2.-bin/jars/spark-sketch_2.-2.2..jar:/opt/modules/spark-2.2.-bin/jars/commons-lang3-3.5.jar:/opt/modules/spark-2.2.-bin/jars/mail-1.4..jar:/opt/modules/spark-2.2.-bin/jars/apache-log4j-extras-1.2..jar:/opt/modules/spark-2.2.-bin/jars/xmlenc-0.52.jar:/opt/modules/spark-2.2.-bin/jars/avro-1.7..jar:/opt/modules/spark-2.2.-bin/jars/hadoop-yarn-server-common-2.6..jar:/opt/modules/spark-2.2.-bin/jars/hadoop-yarn-api-2.6..jar:/opt/modules/spark-2.2.-bin/jars/hadoop-hdfs-2.6..jar:/opt/modules/spark-2.2.-bin/jars/pmml-schema-1.2..jar:/opt/modules/spark-2.2.-bin/jars/calcite-core-1.2.-incubating.jar:/opt/modules/spark-2.2.-bin/jars/spark-unsafe_2.-2.2..jar:/opt/modules/spark-2.2.-bin/jars/base64-2.3..jar:/opt/modules/spark-2.2.-bin/jars/jackson-jaxrs-1.9..jar:/opt/modules/spark-2.2.-bin/jars/hadoop-mapreduce-client-shuffle-2.6..jar:/opt/modules/spark-2.2.-bin/jars/oro-2.0..jar:/opt/modules/spark-2.2.-bin/jars/commons-digester-1.8.jar:/opt/modules/spark-2.2.-bin/jars/commons-io-2.4.jar:/opt/modules/spark-2.2.-bin/jars/commons-logging-1.1..jar:/opt/modules/spark-2.2.-bin/jars/macro-compat_2.-1.1..jar:/opt/modules/hadoop-2.6./etc/hadoop/

// :: INFO ZooKeeper: Client environment:java.library.path=/usr/java/packages/lib/amd64:/usr/lib64:/lib64:/lib:/usr/lib

// :: INFO ZooKeeper: Client environment:java.io.tmpdir=/tmp

// :: INFO ZooKeeper: Client environment:java.compiler=<NA>

// :: INFO ZooKeeper: Client environment:os.name=Linux

// :: INFO ZooKeeper: Client environment:os.arch=amd64

// :: INFO ZooKeeper: Client environment:os.version=2.6.-.el6.x86_64

// :: INFO ZooKeeper: Client environment:user.name=kfk

// :: INFO ZooKeeper: Client environment:user.home=/home/kfk

// :: INFO ZooKeeper: Client environment:user.dir=/opt/modules/spark-2.2.-bin

// :: INFO ZooKeeper: Initiating client connection, connectString=bigdata-pro02.kfk.com:,bigdata-pro01.kfk.com:,bigdata-pro03.kfk.com: sessionTimeout= watcher=hconnection-0x25131637, quorum=bigdata-pro02.kfk.com:,bigdata-pro01.kfk.com:,bigdata-pro03.kfk.com:, baseZNode=/hbase

// :: INFO ClientCnxn: Opening socket connection to server bigdata-pro01.kfk.com/192.168.86.151:. Will not attempt to authenticate using SASL (unknown error)

// :: INFO ClientCnxn: Socket connection established to bigdata-pro01.kfk.com/192.168.86.151:, initiating session

// :: INFO ClientCnxn: Session establishment complete on server bigdata-pro01.kfk.com/192.168.86.151:, sessionid = 0x1623bd7ca740014, negotiated timeout =

// :: INFO RegionSizeCalculator: Calculating region sizes for table "weblogs".

// :: WARN TableInputFormatBase: Cannot resolve the host name for bigdata-pro03.kfk.com/192.168.86.153 because of javax.naming.CommunicationException: DNS error [Root exception is java.net.SocketTimeoutException: Receive timed out]; remaining name '153.86.168.192.in-addr.arpa'

// :: INFO SparkContext: Starting job: show at <console>:

// :: INFO DAGScheduler: Registering RDD (show at <console>:)

// :: INFO DAGScheduler: Got job (show at <console>:) with output partitions

// :: INFO DAGScheduler: Final stage: ResultStage (show at <console>:)

// :: INFO DAGScheduler: Parents of final stage: List(ShuffleMapStage )

// :: INFO DAGScheduler: Missing parents: List(ShuffleMapStage )

// :: INFO DAGScheduler: Submitting ShuffleMapStage (MapPartitionsRDD[] at show at <console>:), which has no missing parents

// :: INFO MemoryStore: Block broadcast_1 stored as values in memory (estimated size 18.9 KB, free 413.6 MB)

// :: INFO MemoryStore: Block broadcast_1_piece0 stored as bytes in memory (estimated size 9.9 KB, free 413.6 MB)

// :: INFO BlockManagerInfo: Added broadcast_1_piece0 in memory on 192.168.86.151: (size: 9.9 KB, free: 413.9 MB)

// :: INFO SparkContext: Created broadcast from broadcast at DAGScheduler.scala:

// :: INFO DAGScheduler: Submitting missing tasks from ShuffleMapStage (MapPartitionsRDD[] at show at <console>:) (first tasks are for partitions Vector())

// :: INFO TaskSchedulerImpl: Adding task set 0.0 with tasks

// :: INFO TaskSetManager: Starting task 0.0 in stage 0.0 (TID , 192.168.86.153, executor , partition , ANY, bytes)

// :: INFO BlockManagerInfo: Added broadcast_1_piece0 in memory on 192.168.86.153: (size: 9.9 KB, free: 413.9 MB)

// :: INFO BlockManagerInfo: Added broadcast_0_piece0 in memory on 192.168.86.153: (size: 22.9 KB, free: 413.9 MB)

// :: INFO TaskSetManager: Finished task 0.0 in stage 0.0 (TID ) in ms on 192.168.86.153 (executor ) (/)

// :: INFO TaskSchedulerImpl: Removed TaskSet 0.0, whose tasks have all completed, from pool

// :: INFO DAGScheduler: ShuffleMapStage (show at <console>:) finished in 17.708 s

// :: INFO DAGScheduler: looking for newly runnable stages

// :: INFO DAGScheduler: running: Set()

// :: INFO DAGScheduler: waiting: Set(ResultStage )

// :: INFO DAGScheduler: failed: Set()

// :: INFO DAGScheduler: Submitting ResultStage (MapPartitionsRDD[] at show at <console>:), which has no missing parents

// :: INFO MemoryStore: Block broadcast_2 stored as values in memory (estimated size 6.9 KB, free 413.6 MB)

// :: INFO MemoryStore: Block broadcast_2_piece0 stored as bytes in memory (estimated size 3.7 KB, free 413.6 MB)

// :: INFO BlockManagerInfo: Added broadcast_2_piece0 in memory on 192.168.86.151: (size: 3.7 KB, free: 413.9 MB)

// :: INFO SparkContext: Created broadcast from broadcast at DAGScheduler.scala:

// :: INFO DAGScheduler: Submitting missing tasks from ResultStage (MapPartitionsRDD[] at show at <console>:) (first tasks are for partitions Vector())

// :: INFO TaskSchedulerImpl: Adding task set 1.0 with tasks

// :: INFO TaskSetManager: Starting task 0.0 in stage 1.0 (TID , 192.168.86.153, executor , partition , NODE_LOCAL, bytes)

// :: INFO BlockManagerInfo: Added broadcast_2_piece0 in memory on 192.168.86.153: (size: 3.7 KB, free: 413.9 MB)

// :: INFO MapOutputTrackerMasterEndpoint: Asked to send map output locations for shuffle to 192.168.86.153:

// :: INFO MapOutputTrackerMaster: Size of output statuses for shuffle is bytes

// :: INFO TaskSetManager: Finished task 0.0 in stage 1.0 (TID ) in ms on 192.168.86.153 (executor ) (/)

// :: INFO DAGScheduler: ResultStage (show at <console>:) finished in 0.695 s

// :: INFO TaskSchedulerImpl: Removed TaskSet 1.0, whose tasks have all completed, from pool

// :: INFO DAGScheduler: Job finished: show at <console>:, took 19.203658 s

// :: INFO CodeGenerator: Code generated in 57.823649 ms

+--------+

|count(1)|

+--------+

| |

+--------+

scala>

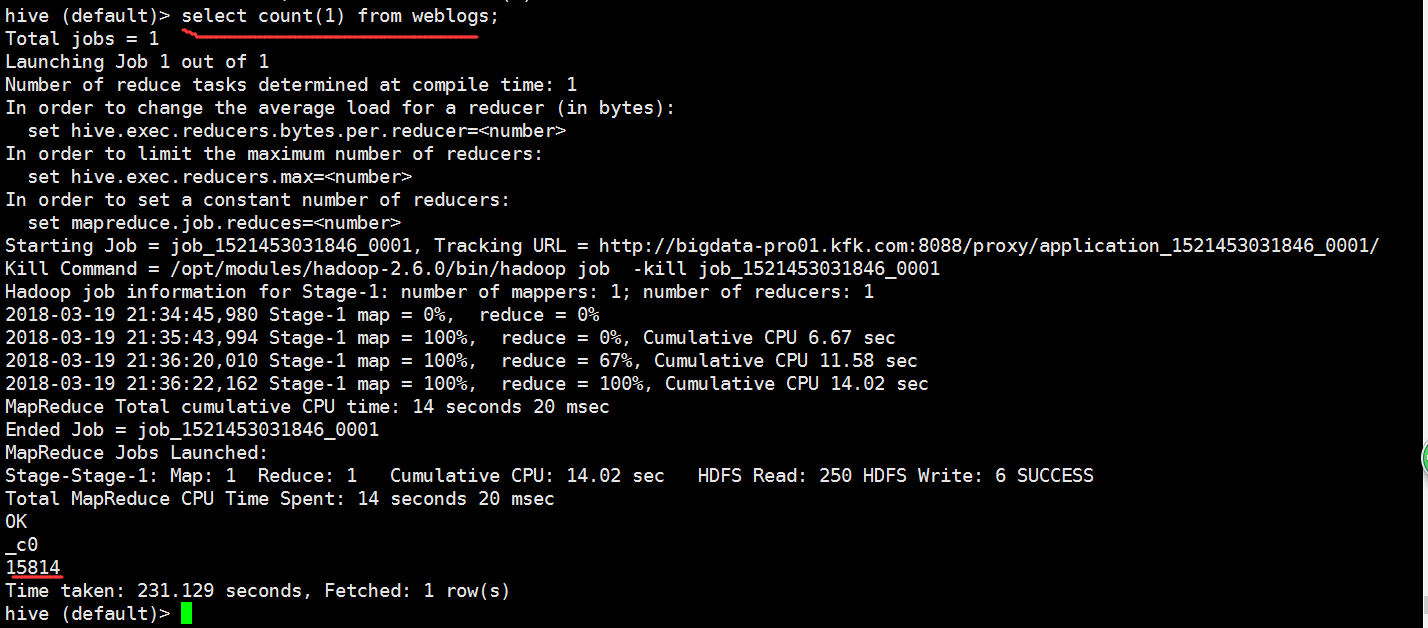

Now let's check the row count in Hive as well.

Hive returns the same count as Spark SQL, which confirms that the Spark and HBase integration works.
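To make this comparison repeatable, you can script it. The sketch below is an illustration, not the author's code. It assumes Spark 2.x with Hive support and the `hive-site.xml` in Spark's `conf/` directory, as set up earlier. It also assumes you pass the count reported by Hive (for example from `select count(1) from weblogs;` in the Hive CLI) as the first program argument. The names `WeblogsCountCheck` and `compareCounts` are hypothetical.

```scala
import org.apache.spark.sql.SparkSession

object WeblogsCountCheck {
  // Hypothetical helper: reports whether the Spark SQL and Hive counts agree
  def compareCounts(sparkCount: Long, hiveCount: Long): String =
    if (sparkCount == hiveCount) s"OK: both report $sparkCount rows"
    else s"MISMATCH: Spark SQL=$sparkCount, Hive=$hiveCount"

  def main(args: Array[String]): Unit = {
    // Assumption: the count from the Hive CLI is supplied as args(0)
    require(args.nonEmpty, "usage: WeblogsCountCheck <hiveCount>")
    val hiveCount = args(0).toLong

    val spark = SparkSession.builder()
      .appName("WeblogsCountCheck")
      .enableHiveSupport() // resolve weblogs through the Hive metastore
      .getOrCreate()

    // count(1) returns a single row holding one LongType value
    val sparkCount = spark.sql("select count(1) from weblogs").first().getLong(0)
    println(compareCounts(sparkCount, hiveCount))
    spark.stop()
  }
}
```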

Spark SQL快速离线数据分析的更多相关文章

- 新闻网大数据实时分析可视化系统项目——18、Spark SQL快速离线数据分析

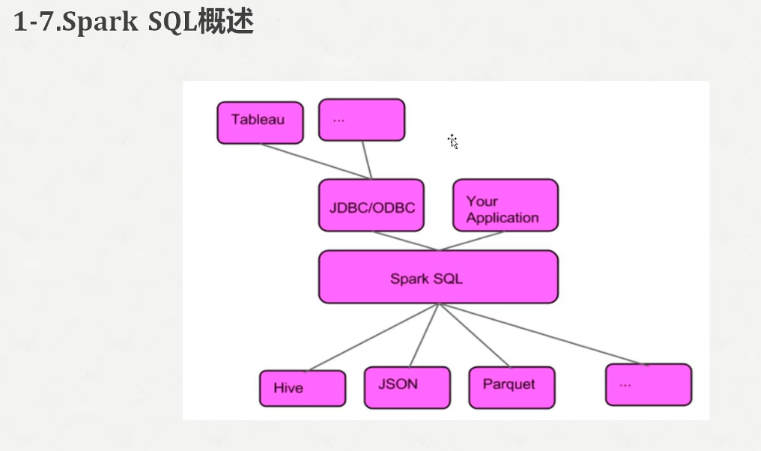

1.Spark SQL概述 1)Spark SQL是Spark核心功能的一部分,是在2014年4月份Spark1.0版本时发布的. 2)Spark SQL可以直接运行SQL或者HiveQL语句 3)B ...

- 新闻实时分析系统 SQL快速离线数据分析

1.Spark SQL概述1)Spark SQL是Spark核心功能的一部分,是在2014年4月份Spark1.0版本时发布的. 2)Spark SQL可以直接运行SQL或者HiveQL语句 3)BI ...

- Spark2.x学习笔记:Spark SQL快速入门

Spark SQL快速入门 本地表 (1)准备数据 [root@node1 ~]# mkdir /tmp/data [root@node1 ~]# cat data/ml-1m/users.dat | ...

- Spark SQL概念学习系列之为什么使用 Spark SQL?(二)

简单地说,Shark 的下一代技术 是Spark SQL. 由于 Shark 底层依赖于 Hive,这个架构的优势是对传统 Hive 用户可以将 Shark 无缝集成进现有系统运行查询负载. 但是也看 ...

- Spark SQL应用

Spark Shell启动后,就可以用Spark SQL API执行数据分析查询. 在第一个示例中,我们将从文本文件中加载用户数据并从数据集中创建一个DataFrame对象.然后运行DataFrame ...

- [spark 快速大数据分析读书笔记] 第一章 导论

[序言] Spark 基于内存的基本类型 (primitive)为一些应用程序带来了 100 倍的性能提升.Spark 允许用户程序将数据加载到 集群内存中用于反复查询,非常适用于大数据和机器学习. ...

- 大数据技术之_27_电商平台数据分析项目_02_预备知识 + Scala + Spark Core + Spark SQL + Spark Streaming + Java 对象池

第0章 预备知识0.1 Scala0.1.1 Scala 操作符0.1.2 拉链操作0.2 Spark Core0.2.1 Spark RDD 持久化0.2.2 Spark 共享变量0.3 Spark ...

- Spark 基础之SQL 快速上手

知识点 SQL 基本概念 SQL Context 的生成和使用 1.6 版本新API:Datasets 常用 Spark SQL 数学和统计函数 SQL 语句 Spark DataFrame 文件保存 ...

- SQL数据分析概览——Hive、Impala、Spark SQL、Drill、HAWQ 以及Presto+druid

转自infoQ! 根据 O’Reilly 2016年数据科学薪资调查显示,SQL 是数据科学领域使用最广泛的语言.大部分项目都需要一些SQL 操作,甚至有一些只需要SQL. 本文涵盖了6个开源领导者: ...

随机推荐

- 【转】iOS编译OpenSSL静态库(使用脚本自动编译)

原文网址:https://www.jianshu.com/p/651513cab181 本篇文章为大家推荐两个脚本,用来iOS系统下编译OpenSSL通用库,如果想了解编译具体过程,请参看<iO ...

- php+js实现重定向跳转并post传参

页面重定向跳转并post传参 $mdata=json_encode($mdata);//如果是字符串无需使用json echo " <form style='display:none; ...

- cvs报错: socket exception recv failed

连接都OK的. 也可以telnet到服务器上去. 网上的各种方法都试了,没法解决. 后来一直在乱试,居然解决了. 就是这样设置的,选中第一个复选框.

- java中length与length()

length是对数组而言的,指的是数组的长度. length()是对字符串而言的,指的是字符串所包含的字符个数. public class LengthDemo { public static voi ...

- Thinkphp 缓存和静态缓存局部缓存设置

1.S方法缓存设置 if(!$rows = S('indexBlog')){ //*$rows = S('indexBlog') $rows = D('blog')->select(); S(' ...

- vue element-ui 用checkebox 来模拟选值 1/0

https://jsfiddle.net/57dz2m3s/12/ 复制 粘贴 打开url就可以看到效果

- Eclipse创建一个mybatis工程实现连接数据库查询

Eclipse上创建第一mybatis工程实现数据库查询 步骤: 1.创建一个java工程 2.创建lib文件夹,加入mybatis核心包.依赖包.数据驱动包.并为jar包添加路径 3.创建resou ...

- 持续集成(Continuous Integration)基本概念与实践

本文由Markdown语法编辑器编辑完成. From https://blog.csdn.net/inter_peng/article/details/53131831 1. 持续集成的概念 持续集成 ...

- [蓝桥杯]ALGO-16.算法训练_进制转换

问题描述 我们可以用这样的方式来表示一个十进制数: 将每个阿拉伯数字乘以一个以该数字所处位置的(值减1)为指数,以10为底数的幂之和的形式.例如:123可表示为 1*102+2*101+3*100这样 ...

- [蓝桥杯]ALGO-116.算法训练_最大的算式

问题描述 题目很简单,给出N个数字,不改变它们的相对位置,在中间加入K个乘号和N-K-1个加号,(括号随便加)使最终结果尽量大.因为乘号和加号一共就是N-1个了,所以恰好每两个相邻数字之间都有一个符号 ...