Hive-1.2.1_01_Installation and Deployment

Preface: this article builds on the earlier article Hadoop2.7.6_01_Deployment.

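1. Basic Hive concepts

1.1. What is Hive

Hive is a data warehouse tool built on Hadoop. It maps structured data files to database tables and provides SQL-like queries.

1.2. Why use Hive

Problems with using Hadoop directly:

The learning curve for staff is steep.

Project schedules are too short.

Implementing complex query logic in MapReduce is hard.

Why use Hive:

The interface uses SQL-like syntax, which enables rapid development.

Developers do not have to write MapReduce, which lowers the learning cost.

It is easy to extend with new functionality.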

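1.3. Features of Hive

Scalability

Hive can freely scale the size of the cluster, usually without restarting services.

Extensibility

Hive supports user-defined functions, so users can implement functions that fit their own needs.

Fault tolerance

Hive has good fault tolerance: a SQL statement can still complete even if a node fails.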

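1.4. Architecture diagram

1.5. Basic components

User interfaces: CLI, JDBC/ODBC, and WebGUI.

Metadata store: usually a relational database such as MySQL or Derby.

Interpreter, compiler, optimizer, and executor.

1.6. What each component does

There are three main user interfaces: CLI, JDBC/ODBC, and WebGUI. The CLI is the shell command line; JDBC/ODBC is Hive's Java-based client interface, similar to JDBC for a traditional database; the WebGUI accesses Hive through a browser.

Metadata store: Hive keeps its metadata in a database. The metadata includes table names, columns and partitions with their properties, table properties (for example, whether a table is external), and the directory that holds each table's data.

The interpreter, compiler, and optimizer take an HQL query through lexical analysis, syntax analysis, compilation, optimization, and query plan generation. The generated query plan is stored in HDFS and then executed by MapReduce.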

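1.7. Hive data storage

1. All Hive data is stored in HDFS. There is no dedicated storage format; Text, SequenceFile, ParquetFile, RCFILE, and others are supported.

2. You only need to tell Hive the column delimiter and row delimiter when creating a table, and Hive can then parse the data.

3. Hive has the following data models: DB, Table, External Table, Partition, and Bucket.

db: appears in HDFS as a folder under the ${hive.metastore.warehouse.dir} directory.

table: appears in HDFS as a folder under the directory of the db it belongs to.

external table: similar to a table, except that its data can live at any specified path.

Managed (regular) table: dropping the table also deletes its files in HDFS.

External table: dropping the table leaves the files in HDFS in place; only the metadata (the descriptive information) in the database is deleted.

partition: appears in HDFS as a subdirectory of the table directory.

bucket: appears in HDFS as multiple files under the same table directory, produced by hashing; rows are placed into different files according to their hash value.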
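The data models listed above can be sketched in HiveQL. This is a minimal illustration and not part of the original walkthrough: the table names (t_managed, t_external, t_part, t_bucket), the columns, the bucket count, and the /data/ext/t_external path are all hypothetical. The script only writes the statements to a file; running them needs a working Hive installation, for example with `hive -f data_model_demo.hql`.

```shell
#!/usr/bin/env bash
# Write hypothetical HiveQL statements illustrating the data model to a file.
# All table names, columns, and paths below are illustrative assumptions.
hql_file="${HQL_FILE:-data_model_demo.hql}"

cat > "$hql_file" <<'EOF'
-- Managed table: DROP TABLE removes both the metadata and the HDFS files.
CREATE TABLE t_managed (id INT, name STRING)
ROW FORMAT DELIMITED FIELDS TERMINATED BY ',';

-- External table: DROP TABLE removes only the metadata; the files stay in HDFS.
CREATE EXTERNAL TABLE t_external (id INT, name STRING)
ROW FORMAT DELIMITED FIELDS TERMINATED BY ','
LOCATION '/data/ext/t_external';

-- Partitioned table: each partition value becomes a subdirectory of the table directory.
CREATE TABLE t_part (id INT, name STRING)
PARTITIONED BY (dt STRING)
ROW FORMAT DELIMITED FIELDS TERMINATED BY ',';

-- Bucketed table: rows are hashed on id into 4 files under the table directory.
CREATE TABLE t_bucket (id INT, name STRING)
CLUSTERED BY (id) INTO 4 BUCKETS
ROW FORMAT DELIMITED FIELDS TERMINATED BY ',';
EOF

echo "Wrote $hql_file"
```

The ROW FORMAT clause is where the column delimiter from point 2 is declared; a comma is used here only as an example.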

2. Host planning

| Hostname | External IP | Internal IP | Operating system | Installed software |
| --- | --- | --- | --- | --- |
| mini01 | 10.0.0.11 | 172.16.1.11 | CentOS 7.4 | Hadoop [NameNode, SecondaryNameNode], Hive |
| mini02 | 10.0.0.12 | 172.16.1.12 | CentOS 7.4 | Hadoop [ResourceManager] |
| mini03 | 10.0.0.13 | 172.16.1.13 | CentOS 7.4 | Hadoop [DataNode, NodeManager], Mariadb |
| mini04 | 10.0.0.14 | 172.16.1.14 | CentOS 7.4 | Hadoop [DataNode, NodeManager] |
| mini05 | 10.0.0.15 | 172.16.1.15 | CentOS 7.4 | Hadoop [DataNode, NodeManager] |

Add the hosts entries on every Linux machine and make sure all machines can ping each other.

[yun@mini03 ~]$ cat /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

10.0.0.11 mini01

10.0.0.12 mini02

10.0.0.13 mini03

10.0.0.14 mini04

10.0.0.15 mini05
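A quick way to confirm that every planned hostname is present in a hosts file is sketched below. The function name missing_hosts is an assumption made for this example; it only inspects the file and does not test network reachability, so a ping between the machines is still worthwhile afterwards.

```shell
#!/usr/bin/env bash
# Report which of the given hostnames are missing from a hosts file.
# Usage: missing_hosts <hosts-file> <hostname>...
missing_hosts() {
    local hosts_file="$1"; shift
    local name
    for name in "$@"; do
        # Drop comments, then look for the name as a whole field after the IP column.
        if ! sed 's/#.*//' "$hosts_file" |
             awk -v n="$name" '{ for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
                               END { exit !found }'; then
            echo "$name"
        fi
    done
}

# Example: check the five cluster nodes against the local hosts file.
if [ -r /etc/hosts ]; then
    missing_hosts /etc/hosts mini01 mini02 mini03 mini04 mini05
fi
```

An empty result means all five names have an entry; any name that is printed still needs to be added.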

Modify the hosts file on Windows

# File location: C:\Windows\System32\drivers\etc. Append the following to hosts

………………

10.0.0.11 mini01

10.0.0.12 mini02

10.0.0.13 mini03

10.0.0.14 mini04

10.0.0.15 mini05

3. MariaDB installation and configuration

3.1. Database installation

# Install MariaDB on mini03

[root@mini03 ~]# cat /etc/redhat-release # other versions also work

CentOS Linux release 7.4. (Core)

[root@mini03 ~]# yum install -y mariadb mariadb-server # on CentOS 7 the MySQL database is provided by MariaDB

………………

[root@mini03 ~]# systemctl status mariadb.service

● mariadb.service - MariaDB database server

Loaded: loaded (/usr/lib/systemd/system/mariadb.service; disabled; vendor preset: disabled)

Active: inactive (dead)

[root@mini03 ~]# systemctl enable mariadb.service # start automatically at boot

Created symlink from /etc/systemd/system/multi-user.target.wants/mariadb.service to /usr/lib/systemd/system/mariadb.service.

[root@mini03 ~]# systemctl start mariadb.service # start MariaDB

[root@mini03 ~]# systemctl status mariadb.service # check the MariaDB service status

● mariadb.service - MariaDB database server

Loaded: loaded (/usr/lib/systemd/system/mariadb.service; enabled; vendor preset: disabled)

Active: active (running) since Mon -- :: CST; 2s ago

Process: ExecStartPost=/usr/libexec/mariadb-wait-ready $MAINPID (code=exited, status=/SUCCESS)

Process: ExecStartPre=/usr/libexec/mariadb-prepare-db-dir %n (code=exited, status=/SUCCESS)

Main PID: (mysqld_safe)

………………

3.2. Checking the database configuration

# Enter the database

[root@mini03 ~]# mysql

Welcome to the MariaDB monitor. Commands end with ; or \g.

Your MariaDB connection id is

Server version: 5.5.-MariaDB MariaDB Server

Copyright (c) , , Oracle, MariaDB Corporation Ab and others.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

# Check the database version

MariaDB [(none)]> select version();

+----------------+

| version() |

+----------------+

| 5.5.-MariaDB |

+----------------+

row in set (0.00 sec)

# Supported character sets

MariaDB [(none)]> show CHARACTER SET;

## Columns: character set, description, default collation, maximum length

+----------+-----------------------------+---------------------+--------+

| Charset | Description | Default collation | Maxlen |

+----------+-----------------------------+---------------------+--------+

| big5 | Big5 Traditional Chinese | big5_chinese_ci | |

| dec8 | DEC West European | dec8_swedish_ci | |

| cp850 | DOS West European | cp850_general_ci | |

| hp8 | HP West European | hp8_english_ci | |

| koi8r | KOI8-R Relcom Russian | koi8r_general_ci | |

| latin1 | cp1252 West European | latin1_swedish_ci | |

| latin2 | ISO - Central European | latin2_general_ci | |

| swe7 | 7bit Swedish | swe7_swedish_ci | |

| ascii | US ASCII | ascii_general_ci | |

| ujis | EUC-JP Japanese | ujis_japanese_ci | |

| sjis | Shift-JIS Japanese | sjis_japanese_ci | |

| hebrew | ISO - Hebrew | hebrew_general_ci | |

| tis620 | TIS620 Thai | tis620_thai_ci | |

| euckr | EUC-KR Korean | euckr_korean_ci | |

| koi8u | KOI8-U Ukrainian | koi8u_general_ci | |

| gb2312 | GB2312 Simplified Chinese | gb2312_chinese_ci | |

| greek | ISO - Greek | greek_general_ci | |

| cp1250 | Windows Central European | cp1250_general_ci | |

| gbk | GBK Simplified Chinese | gbk_chinese_ci | |

| latin5 | ISO - Turkish | latin5_turkish_ci | |

| armscii8 | ARMSCII- Armenian | armscii8_general_ci | |

| utf8 | UTF- Unicode | utf8_general_ci | |

| ucs2 | UCS- Unicode | ucs2_general_ci | |

| cp866 | DOS Russian | cp866_general_ci | |

| keybcs2 | DOS Kamenicky Czech-Slovak | keybcs2_general_ci | |

| macce | Mac Central European | macce_general_ci | |

| macroman | Mac West European | macroman_general_ci | |

| cp852 | DOS Central European | cp852_general_ci | |

| latin7 | ISO - Baltic | latin7_general_ci | |

| utf8mb4 | UTF- Unicode | utf8mb4_general_ci | |

| cp1251 | Windows Cyrillic | cp1251_general_ci | |

| utf16 | UTF- Unicode | utf16_general_ci | |

| cp1256 | Windows Arabic | cp1256_general_ci | |

| cp1257 | Windows Baltic | cp1257_general_ci | |

| utf32 | UTF- Unicode | utf32_general_ci | |

| binary | Binary pseudo charset | binary | |

| geostd8 | GEOSTD8 Georgian | geostd8_general_ci | |

| cp932 | SJIS for Windows Japanese | cp932_japanese_ci | |

| eucjpms | UJIS for Windows Japanese | eucjpms_japanese_ci | |

+----------+-----------------------------+---------------------+--------+

rows in set (0.00 sec)

# Default character sets of the current database

MariaDB [(none)]> show variables like '%character_set%';

+--------------------------+----------------------------+

| Variable_name | Value |

+--------------------------+----------------------------+

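| character_set_client | utf8 | ## character set of data sent by the client

| character_set_connection | utf8 | ## connection-layer character set

| character_set_database | latin1 | ## default character set of the currently selected database

| character_set_filesystem | binary |

| character_set_results | utf8 | ## character set of returned query results

| character_set_server | latin1 | ## default internal character set (server/database side)

| character_set_system | utf8 | ## character set of system metadata (column names, etc.)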

| character_sets_dir | /usr/share/mysql/charsets/ |

+--------------------------+----------------------------+

rows in set (0.00 sec)

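Note: it is best not to change the database character set, because the hive database needs to use the latin1 character set when it is created.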

3.3. Creating the database and granting privileges

# The hive database is not created here; it can be created automatically when Hive starts

MariaDB [(none)]> show databases;

+--------------------+

| Database |

+--------------------+

| information_schema |

| mysql |

| performance_schema |

| test |

+--------------------+

rows in set (0.00 sec)

# Create a user and grant privileges for remote access. Connect with: mysql -hmini03 -uhive -phive or mysql -h10.0.0.13 -uhive -phive

MariaDB [(none)]> grant all on hive.* to hive@'%' identified by 'hive';

Query OK, rows affected (0.00 sec)

# Create a user and grant privileges for local access from mini03. Connect with: mysql -hmini03 -uhive -phive or mysql -h10.0.0.13 -uhive -phive

MariaDB [(none)]> grant all on hive.* to hive@'mini03' identified by 'hive';

Query OK, rows affected (0.00 sec)

# Refresh the privilege information

MariaDB [(none)]> flush privileges;

Query OK, rows affected (0.00 sec)

MariaDB [(none)]> show grants for hive@'%' ;

+-----------------------------------------------------------------------------------------------------+

| Grants for hive@% |

+-----------------------------------------------------------------------------------------------------+

| GRANT USAGE ON *.* TO 'hive'@'%' IDENTIFIED BY PASSWORD '*4DF1D66463C18D44E3B001A8FB1BBFBEA13E27FC' |

| GRANT ALL PRIVILEGES ON `hive`.* TO 'hive'@'%' |

+-----------------------------------------------------------------------------------------------------+

rows in set (0.00 sec)

# View the user table

MariaDB [(none)]> select user,host from mysql.user;

+------+-----------+

| user | host |

+------+-----------+

| hive | % |

| root | 127.0.0.1 |

| root | :: |

| | localhost |

| root | localhost |

| | mini03 |

| hive | mini03 |

| root | mini03 |

+------+-----------+

rows in set (0.00 sec)

4. Installing and configuring hive-1.2.1

4.1. Software installation

[yun@mini01 software]$ pwd

/app/software

[yun@mini01 software]$ ll

total

-rw-r--r-- yun yun May : apache-hive-1.2.1-bin.tar.gz

-rw-r--r-- yun yun Jun : CentOS7.4_hadoop-2.7.6.tar.gz

-rw-r--r-- yun yun Jul : mysql-connector-java-5.1..jar

drwxrwxr-x yun yun Jun : zhangliang

[yun@mini01 software]$

[yun@mini01 software]$ tar xf apache-hive-1.2.1-bin.tar.gz

[yun@mini01 software]$ mv apache-hive-1.2.1-bin /app/hive-1.2.1

[yun@mini01 software]$ cd /app/

[yun@mini01 ~]$ ln -s hive-1.2.1/ hive

[yun@mini01 ~]$ ll

total

drwxr-xr-x yun yun May : bigdata

lrwxrwxrwx yun yun Jun : hadoop -> hadoop-2.7.6/

drwxr-xr-x yun yun Jun : hadoop-2.7.6

lrwxrwxrwx yun yun Jul : hive -> hive-1.2.1/

drwxrwxr-x yun yun Jul : hive-1.2.1

lrwxrwxrwx yun yun May : jdk -> jdk1..0_112

drwxr-xr-x yun yun Sep jdk1..0_112

4.2. Environment variables

# Requires root privileges

[root@mini01 profile.d]# pwd

/etc/profile.d

[root@mini01 profile.d]# cat hive.sh

export HIVE_HOME="/app/hive"

export PATH=$HIVE_HOME/bin:$PATH

4.3. Configuration changes

[yun@mini01 conf]$ pwd

/app/hive/conf

[yun@mini01 conf]$ vim hive-site.xml # does not exist by default; create this configuration file

<configuration>

<property>

<name>javax.jdo.option.ConnectionURL</name>

<!-- Create the hive database if it does not exist -->

<value>jdbc:mysql://mini03:3306/hive?createDatabaseIfNotExist=true</value>

<description>JDBC connect string for a JDBC metastore</description>

</property>

<property>

<name>javax.jdo.option.ConnectionDriverName</name>

<value>com.mysql.jdbc.Driver</value>

<description>Driver class name for a JDBC metastore</description>

</property>

<property>

<name>javax.jdo.option.ConnectionUserName</name>

<value>hive</value>

<description>username to use against metastore database</description>

</property>

<property>

<name>javax.jdo.option.ConnectionPassword</name>

<value>hive</value>

<description>password to use against metastore database</description>

</property>

<!-- Show the current database in the prompt -->

<property>

<name>hive.cli.print.current.db</name>

<value>true</value>

<description>Whether to include the current database in the Hive prompt.</description>

</property>

</configuration>
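Before starting Hive it can help to confirm that the four metastore connection properties really made it into hive-site.xml. The sketch below is an assumption-laden convenience, not part of the original procedure: the function name missing_properties is hypothetical, and it uses a plain text search rather than an XML parser, so it catches omissions but not malformed XML.

```shell
#!/usr/bin/env bash
# Print the metastore connection properties that are absent from a hive-site.xml.
# This is a plain text search, not an XML parser, so it only catches omissions.
missing_properties() {
    local site_file="$1"
    local prop
    for prop in \
        javax.jdo.option.ConnectionURL \
        javax.jdo.option.ConnectionDriverName \
        javax.jdo.option.ConnectionUserName \
        javax.jdo.option.ConnectionPassword; do
        grep -qF "<name>${prop}</name>" "$site_file" || echo "$prop"
    done
}

# Example: check the configuration file if it exists on this machine.
site="${HIVE_HOME:-/app/hive}/conf/hive-site.xml"
if [ -r "$site" ]; then
    missing_properties "$site"
fi
```

No output means all four properties are present.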

4.4. Startup

# The environment variables have been added

[yun@mini01 ~]$ hive

Logging initialized using configuration in jar:file:/app/hive-1.2.1/lib/hive-common-1.2.1.jar!/hive-log4j.properties

Exception in thread "main" java.lang.RuntimeException: java.lang.RuntimeException: Unable to instantiate org.apache.hadoop.hive.ql.metadata.SessionHiveMetaStoreClient

at org.apache.hadoop.hive.ql.session.SessionState.start(SessionState.java:)

at org.apache.hadoop.hive.cli.CliDriver.run(CliDriver.java:)

at org.apache.hadoop.hive.cli.CliDriver.main(CliDriver.java:)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:)

at java.lang.reflect.Method.invoke(Method.java:)

at org.apache.hadoop.util.RunJar.run(RunJar.java:)

at org.apache.hadoop.util.RunJar.main(RunJar.java:)

Caused by: java.lang.RuntimeException: Unable to instantiate org.apache.hadoop.hive.ql.metadata.SessionHiveMetaStoreClient

at org.apache.hadoop.hive.metastore.MetaStoreUtils.newInstance(MetaStoreUtils.java:)

at org.apache.hadoop.hive.metastore.RetryingMetaStoreClient.<init>(RetryingMetaStoreClient.java:)

at org.apache.hadoop.hive.metastore.RetryingMetaStoreClient.getProxy(RetryingMetaStoreClient.java:)

at org.apache.hadoop.hive.metastore.RetryingMetaStoreClient.getProxy(RetryingMetaStoreClient.java:)

at org.apache.hadoop.hive.ql.metadata.Hive.createMetaStoreClient(Hive.java:)

at org.apache.hadoop.hive.ql.metadata.Hive.getMSC(Hive.java:)

at org.apache.hadoop.hive.ql.session.SessionState.start(SessionState.java:)

... more

Caused by: java.lang.reflect.InvocationTargetException

at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)

at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:)

at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:)

at java.lang.reflect.Constructor.newInstance(Constructor.java:)

at org.apache.hadoop.hive.metastore.MetaStoreUtils.newInstance(MetaStoreUtils.java:)

... more

Caused by: javax.jdo.JDOFatalInternalException: Error creating transactional connection factory

NestedThrowables:

java.lang.reflect.InvocationTargetException

at org.datanucleus.api.jdo.NucleusJDOHelper.getJDOExceptionForNucleusException(NucleusJDOHelper.java:)

at org.datanucleus.api.jdo.JDOPersistenceManagerFactory.freezeConfiguration(JDOPersistenceManagerFactory.java:)

at org.datanucleus.api.jdo.JDOPersistenceManagerFactory.createPersistenceManagerFactory(JDOPersistenceManagerFactory.java:)

at org.datanucleus.api.jdo.JDOPersistenceManagerFactory.getPersistenceManagerFactory(JDOPersistenceManagerFactory.java:)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:)

at java.lang.reflect.Method.invoke(Method.java:)

at javax.jdo.JDOHelper$.run(JDOHelper.java:)

at java.security.AccessController.doPrivileged(Native Method)

at javax.jdo.JDOHelper.invoke(JDOHelper.java:)

at javax.jdo.JDOHelper.invokeGetPersistenceManagerFactoryOnImplementation(JDOHelper.java:)

at javax.jdo.JDOHelper.getPersistenceManagerFactory(JDOHelper.java:)

at javax.jdo.JDOHelper.getPersistenceManagerFactory(JDOHelper.java:)

at org.apache.hadoop.hive.metastore.ObjectStore.getPMF(ObjectStore.java:)

at org.apache.hadoop.hive.metastore.ObjectStore.getPersistenceManager(ObjectStore.java:)

at org.apache.hadoop.hive.metastore.ObjectStore.initialize(ObjectStore.java:)

at org.apache.hadoop.hive.metastore.ObjectStore.setConf(ObjectStore.java:)

at org.apache.hadoop.util.ReflectionUtils.setConf(ReflectionUtils.java:)

at org.apache.hadoop.util.ReflectionUtils.newInstance(ReflectionUtils.java:)

at org.apache.hadoop.hive.metastore.RawStoreProxy.<init>(RawStoreProxy.java:)

at org.apache.hadoop.hive.metastore.RawStoreProxy.getProxy(RawStoreProxy.java:)

at org.apache.hadoop.hive.metastore.HiveMetaStore$HMSHandler.newRawStore(HiveMetaStore.java:)

at org.apache.hadoop.hive.metastore.HiveMetaStore$HMSHandler.getMS(HiveMetaStore.java:)

at org.apache.hadoop.hive.metastore.HiveMetaStore$HMSHandler.createDefaultDB(HiveMetaStore.java:)

at org.apache.hadoop.hive.metastore.HiveMetaStore$HMSHandler.init(HiveMetaStore.java:)

at org.apache.hadoop.hive.metastore.RetryingHMSHandler.<init>(RetryingHMSHandler.java:)

at org.apache.hadoop.hive.metastore.RetryingHMSHandler.getProxy(RetryingHMSHandler.java:)

at org.apache.hadoop.hive.metastore.HiveMetaStore.newRetryingHMSHandler(HiveMetaStore.java:)

at org.apache.hadoop.hive.metastore.HiveMetaStoreClient.<init>(HiveMetaStoreClient.java:)

at org.apache.hadoop.hive.ql.metadata.SessionHiveMetaStoreClient.<init>(SessionHiveMetaStoreClient.java:)

... more

Caused by: java.lang.reflect.InvocationTargetException

at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)

at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:)

at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:)

at java.lang.reflect.Constructor.newInstance(Constructor.java:)

at org.datanucleus.plugin.NonManagedPluginRegistry.createExecutableExtension(NonManagedPluginRegistry.java:)

at org.datanucleus.plugin.PluginManager.createExecutableExtension(PluginManager.java:)

at org.datanucleus.store.AbstractStoreManager.registerConnectionFactory(AbstractStoreManager.java:)

at org.datanucleus.store.AbstractStoreManager.<init>(AbstractStoreManager.java:)

at org.datanucleus.store.rdbms.RDBMSStoreManager.<init>(RDBMSStoreManager.java:)

at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)

at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:)

at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:)

at java.lang.reflect.Constructor.newInstance(Constructor.java:)

at org.datanucleus.plugin.NonManagedPluginRegistry.createExecutableExtension(NonManagedPluginRegistry.java:)

at org.datanucleus.plugin.PluginManager.createExecutableExtension(PluginManager.java:)

at org.datanucleus.NucleusContext.createStoreManagerForProperties(NucleusContext.java:)

at org.datanucleus.NucleusContext.initialise(NucleusContext.java:)

at org.datanucleus.api.jdo.JDOPersistenceManagerFactory.freezeConfiguration(JDOPersistenceManagerFactory.java:)

... more

Caused by: org.datanucleus.exceptions.NucleusException: Attempt to invoke the "BONECP" plugin to create a ConnectionPool gave an error : The specified datastore driver ("com.mysql.jdbc.Driver") was not found in the CLASSPATH. Please check your CLASSPATH specification, and the name of the driver.

at org.datanucleus.store.rdbms.ConnectionFactoryImpl.generateDataSources(ConnectionFactoryImpl.java:)

at org.datanucleus.store.rdbms.ConnectionFactoryImpl.initialiseDataSources(ConnectionFactoryImpl.java:)

at org.datanucleus.store.rdbms.ConnectionFactoryImpl.<init>(ConnectionFactoryImpl.java:)

... more

Caused by: org.datanucleus.store.rdbms.connectionpool.DatastoreDriverNotFoundException: The specified datastore driver ("com.mysql.jdbc.Driver") was not found in the CLASSPATH. Please check your CLASSPATH specification, and the name of the driver.

at org.datanucleus.store.rdbms.connectionpool.AbstractConnectionPoolFactory.loadDriver(AbstractConnectionPoolFactory.java:)

at org.datanucleus.store.rdbms.connectionpool.BoneCPConnectionPoolFactory.createConnectionPool(BoneCPConnectionPoolFactory.java:)

at org.datanucleus.store.rdbms.ConnectionFactoryImpl.generateDataSources(ConnectionFactoryImpl.java:)

... more

# Cause: the database connector package is missing; copy /app/software/mysql-connector-java-5.1..jar into Hive's lib directory

[yun@mini01 ~]$ cp -a /app/software/mysql-connector-java-5.1..jar /app/hive/lib/

[yun@mini01 ~]$ hive # start hive again

Logging initialized using configuration in jar:file:/app/hive-1.2.1/lib/hive-common-1.2.1.jar!/hive-log4j.properties

# Started successfully

hive> show databases;

OK

default

Time taken: 0.774 seconds, Fetched: row(s)
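The DatastoreDriverNotFoundException in the first startup attempt can be caught earlier with a small pre-flight check. This is a sketch under assumptions: the function name has_mysql_connector is hypothetical, and the check only looks for a file named mysql-connector-java-*.jar in the lib directory; it does not verify that the jar is intact or compatible.

```shell
#!/usr/bin/env bash
# Succeed if at least one MySQL connector jar is present in the given lib directory.
has_mysql_connector() {
    local lib_dir="$1"
    local jar
    for jar in "$lib_dir"/mysql-connector-java-*.jar; do
        # When nothing matches, the glob stays literal and the -e test fails.
        [ -e "$jar" ] && return 0
    done
    return 1
}

lib_dir="${HIVE_HOME:-/app/hive}/lib"
if has_mysql_connector "$lib_dir"; then
    echo "MySQL JDBC driver found in $lib_dir"
else
    echo "No mysql-connector-java-*.jar in $lib_dir; copy it there before starting hive" >&2
fi
```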

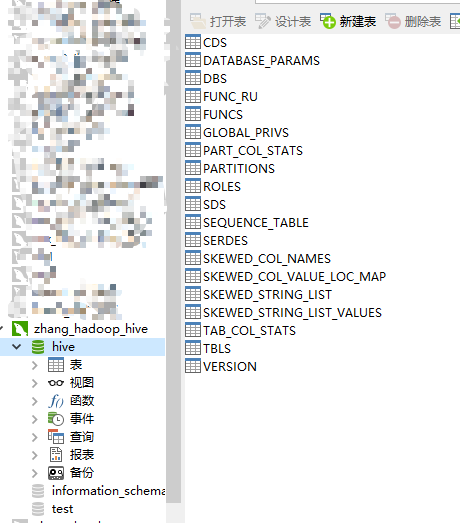

4.4.1. Viewed through Navicat: the hive database has been created

4.4.2. Viewing the MySQL database creation statement on mini03

[root@mini03 ~]# mysql

Welcome to the MariaDB monitor. Commands end with ; or \g.

Your MariaDB connection id is

Server version: 5.5.-MariaDB MariaDB Server

Copyright (c) , , Oracle, MariaDB Corporation Ab and others.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

MariaDB [(none)]>

MariaDB [(none)]> show databases;

+--------------------+

| Database |

+--------------------+

| information_schema |

| hive |

| mysql |

| performance_schema |

| test |

+--------------------+

rows in set (0.00 sec)

MariaDB [(none)]> show create database hive;

# Note that the character set is latin1; otherwise errors may occur during use ★★★

+----------+-----------------------------------------------------------------+

| Database | Create Database |

+----------+-----------------------------------------------------------------+

| hive | CREATE DATABASE `hive` /*!40100 DEFAULT CHARACTER SET latin1 */ |

+----------+-----------------------------------------------------------------+

row in set (0.00 sec)
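The character set check can also be scripted. The following sketch extracts the default character set from the text of a `SHOW CREATE DATABASE` result; the function name db_charset is an assumption made for this example, and the sample string simply repeats the statement from the output above rather than querying a live server.

```shell
#!/usr/bin/env bash
# Extract the default character set from `SHOW CREATE DATABASE` output on stdin.
db_charset() {
    sed -n 's/.*DEFAULT CHARACTER SET \([A-Za-z0-9_]*\).*/\1/p' | head -n 1
}

# Example with the statement from the output; on mini03 the input could instead come from
#   mysql -N -e 'show create database hive'
sample='CREATE DATABASE `hive` /*!40100 DEFAULT CHARACTER SET latin1 */'
charset="$(printf '%s\n' "$sample" | db_charset)"
if [ "$charset" = "latin1" ]; then
    echo "hive metastore database uses latin1"
else
    echo "unexpected character set: $charset" >&2
fi
```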
