6.Spark streaming技术内幕 : Job动态生成原理与源码解析

原创文章,转载请注明:转载自 周岳飞博客(http://www.cnblogs.com/zhouyf/)

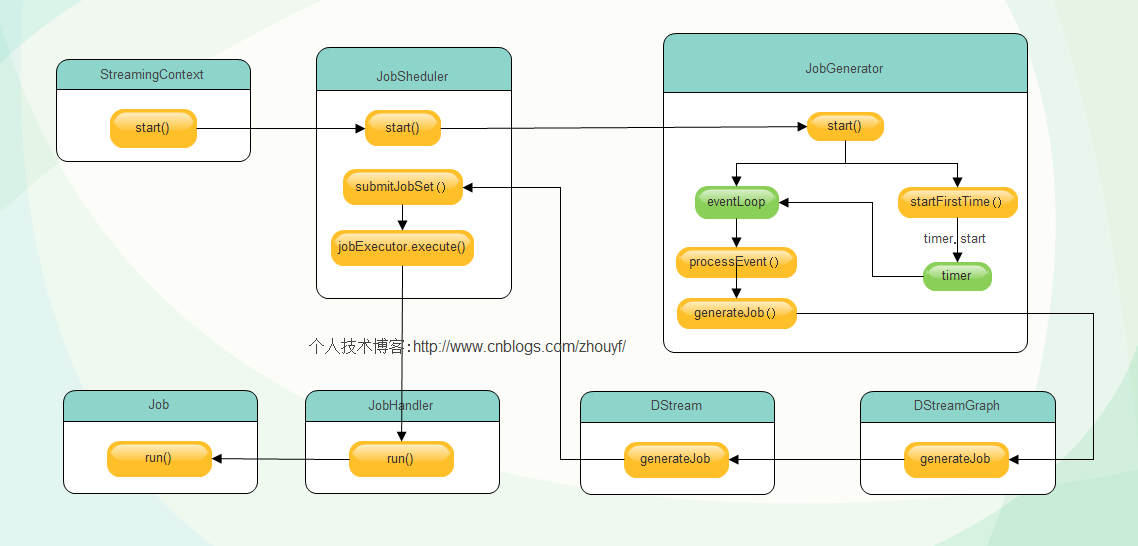

try {validate()// Start the streaming scheduler in a new thread, so that thread local properties// like call sites and job groups can be reset without affecting those of the// current thread.ThreadUtils.runInNewThread("streaming-start") {sparkContext.setCallSite(startSite.get)sparkContext.clearJobGroup()sparkContext.setLocalProperty(SparkContext.SPARK_JOB_INTERRUPT_ON_CANCEL, "false")savedProperties.set(SerializationUtils.clone(sparkContext.localProperties.get()).asInstanceOf[Properties])scheduler.start()}state = StreamingContextState.ACTIVE} catch {case NonFatal(e) =>logError("Error starting the context, marking it as stopped", e)scheduler.stop(false)state = StreamingContextState.STOPPEDthrow e}

eventLoop = new EventLoop[JobSchedulerEvent]("JobScheduler") {override protected def onReceive(event: JobSchedulerEvent): Unit = processEvent(event)override protected def onError(e: Throwable): Unit = reportError("Error in job scheduler", e)}eventLoop.start()// attach rate controllers of input streams to receive batch completion updatesfor {inputDStream <- ssc.graph.getInputStreamsrateController <- inputDStream.rateController} ssc.addStreamingListener(rateController)listenerBus.start()receiverTracker = new ReceiverTracker(ssc)inputInfoTracker = new InputInfoTracker(ssc)executorAllocationManager = ExecutorAllocationManager.createIfEnabled(ssc.sparkContext,receiverTracker,ssc.conf,ssc.graph.batchDuration.milliseconds,clock)executorAllocationManager.foreach(ssc.addStreamingListener)receiverTracker.start()jobGenerator.start()executorAllocationManager.foreach(_.start())logInfo("Started JobScheduler")

private def processEvent(event: JobSchedulerEvent) {try {event match {case JobStarted(job, startTime) => handleJobStart(job, startTime)case JobCompleted(job, completedTime) => handleJobCompletion(job, completedTime)case ErrorReported(m, e) => handleError(m, e)}} catch {case e: Throwable =>reportError("Error in job scheduler", e)}}

/** Start generation of jobs */

def start(): Unit = synchronized {

if (eventLoop != null) return // generator has already been started // Call checkpointWriter here to initialize it before eventLoop uses it to avoid a deadlock.

// See SPARK-10125

checkpointWriter eventLoop = new EventLoop[JobGeneratorEvent]("JobGenerator") {

override protected def onReceive(event: JobGeneratorEvent): Unit = processEvent(event) override protected def onError(e: Throwable): Unit = {

jobScheduler.reportError("Error in job generator", e)

}

}

eventLoop.start() if (ssc.isCheckpointPresent) {

restart()

} else {

startFirstTime()

}

}

/** Generate jobs and perform checkpoint for the given `time`. */private def generateJobs(time: Time) {// Checkpoint all RDDs marked for checkpointing to ensure their lineages are// truncated periodically. Otherwise, we may run into stack overflows (SPARK-6847).ssc.sparkContext.setLocalProperty(RDD.CHECKPOINT_ALL_MARKED_ANCESTORS, "true")Try {jobScheduler.receiverTracker.allocateBlocksToBatch(time) // allocate received blocks to batchgraph.generateJobs(time) // generate jobs using allocated block} match {case Success(jobs) =>val streamIdToInputInfos = jobScheduler.inputInfoTracker.getInfo(time)jobScheduler.submitJobSet(JobSet(time, jobs, streamIdToInputInfos))case Failure(e) =>jobScheduler.reportError("Error generating jobs for time " + time, e)}eventLoop.post(DoCheckpoint(time, clearCheckpointDataLater = false))}

def generateJobs(time: Time): Seq[Job] = {logDebug("Generating jobs for time " + time)val jobs = this.synchronized {outputStreams.flatMap { outputStream =>val jobOption = outputStream.generateJob(time)jobOption.foreach(_.setCallSite(outputStream.creationSite))jobOption}}logDebug("Generated " + jobs.length + " jobs for time " + time)jobs}

def submitJobSet(jobSet: JobSet) {if (jobSet.jobs.isEmpty) {logInfo("No jobs added for time " + jobSet.time)} else {listenerBus.post(StreamingListenerBatchSubmitted(jobSet.toBatchInfo))jobSets.put(jobSet.time, jobSet)jobSet.jobs.foreach(job => jobExecutor.execute(new JobHandler(job)))logInfo("Added jobs for time " + jobSet.time)}}

Attachment List

6.Spark streaming技术内幕 : Job动态生成原理与源码解析的更多相关文章

- Spark streaming技术内幕6 : Job动态生成原理与源码解析

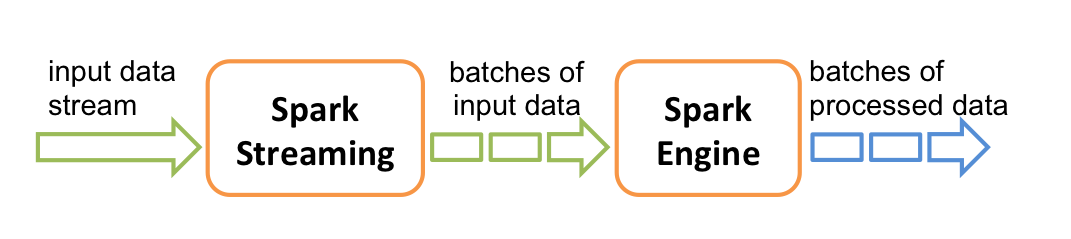

原创文章,转载请注明:转载自 周岳飞博客(http://www.cnblogs.com/zhouyf/) Spark streaming 程序的运行过程是将DStream的操作转化成RDD的操作,S ...

- Spark技术内幕: Task向Executor提交的源码解析

在上文<Spark技术内幕:Stage划分及提交源码分析>中,我们分析了Stage的生成和提交.但是Stage的提交,只是DAGScheduler完成了对DAG的划分,生成了一个计算拓扑, ...

- 7.spark Streaming 技术内幕 : 从DSteam到RDD全过程解析

原创文章,转载请注明:转载自 听风居士博客(http://www.cnblogs.com/zhouyf/) 上篇博客讨论了Spark Streaming 程序动态生成Job的过程,并留下一个疑问: ...

- Spark技术内幕:Stage划分及提交源码分析

http://blog.csdn.net/anzhsoft/article/details/39859463 当触发一个RDD的action后,以count为例,调用关系如下: org.apache. ...

- 9. Spark Streaming技术内幕 : Receiver在Driver的精妙实现全生命周期彻底研究和思考

原创文章,转载请注明:转载自 听风居士博客(http://www.cnblogs.com/zhouyf/) Spark streaming 程序需要不断接收新数据,然后进行业务逻辑 ...

- JDK1.8 动态代理机制及源码解析

动态代理 a) jdk 动态代理 Proxy, 核心思想:通过实现被代理类的所有接口,生成一个字节码文件后构造一个代理对象,通过持有反射构造被代理类的一个实例,再通过invoke反射调用被代理类实例的 ...

- Spark集群任务提交流程----2.1.0源码解析

Spark的应用程序是通过spark-submit提交到Spark集群上运行的,那么spark-submit到底提交了什么,集群是怎样调度运行的,下面一一详解. 0. spark-submit提交任务 ...

- 贯通Spark Streaming JobScheduler内幕实现和深入思考

本节主要内容: 一.SparkStreaming Job生成深度思考 二.SparkStreaming Job生成源码解析 JobScheduler的地位非常的重要,所有的关键都在JobSchedul ...

- Spark Streaming运行流程及源码解析(一)

本系列主要描述Spark Streaming的运行流程,然后对每个流程的源码分别进行解析 之前总听同事说Spark源码有多么棒,咱也不知道,就是疯狂点头.今天也来撸一下Spark源码. 对Spark的 ...

随机推荐

- mac之os x系统下搭建nodejs+express4.x+mongodb+gruntjs整套前端工程

第一次在Mac OS X上搭建前端开发环境,做一个小小记录,包括一些与windows系统的区别和常用快捷键 首先,在进行环境搭建之前先来看一下苹果系统的“cmd”,也就是Terminal(终端). 打 ...

- [LeetCode] 递推思想的美妙 Best Time to Buy and Sell Stock I, II, III O(n) 解法

题记:在求最大最小值的类似题目中,递推思想的奇妙之处,在于递推过程也就是比较求值的过程,从而做到一次遍历得到结果. LeetCode 上面的这三道题最能展现递推思想的美丽之处了. 题1 Best Ti ...

- 2017 济南综合班 Day 5

毕业考试 (exam.cpp/c/pas) (1s/256M) 问题描述 快毕业了,Barry希望能通过期末的N门考试来顺利毕业.如果他的N门考试平均分能够达到V分,则他能够成功毕业.现在已知每门的分 ...

- iOS 网络请求--- 配置info.plist文件

一.配置info.plist <key>NSAppTransportSecurity</key> <dict> <key>NSAllowsArbitra ...

- 【BZOJ4236】JOIOJI [DP]

JOIOJI Time Limit: 10 Sec Memory Limit: 256 MB[Submit][Status][Discuss] Description JOIOJI桑是JOI君的叔叔 ...

- Billboard HDU 2795 (线段树)

题目链接 Problem Description At the entrance to the university, there is a huge rectangular billboard of ...

- 纠结于arch+xfce还是xubuntu

现在用的是ubuntu gnome版 http://www.tuicool.com/articles/6r22eyU 现在纠结于arch+xfce还是xubuntu,因为不想在gnome下面搞什么美化 ...

- vim 实现括号以及引号的自动补全

编辑文件/etc/vim/vimrc sudo vim /etc/vim/vimrc 在最后添加 inoremap ( ()<ESC>i inoremap [ []<ESC>i ...

- libSVM笔记之(一)在matlab环境下安装配置libSVM

本文为原创作品,转载请注明出处 欢迎关注我的博客:http://blog.csdn.net/hit2015spring和http://www.cnblogs.com/xujianqing 台湾林智仁教 ...

- Vue-$emit的用法

1.父组件可以使用 props 把数据传给子组件.2.子组件可以使用 $emit 触发父组件的自定义事件. vm.$emit( event, arg ) //触发当前实例上的事件 vm.$on( ev ...