cloudera-hdfs 告警处理

2018-03-13 11:15:17,215 WARN [org.apache.flume.sink.hdfs.HDFSEventSink] - HDFS IO error

org.apache.hadoop.ipc.RemoteException(org.apache.hadoop.ipc.StandbyException): Operation category WRITE is not supported in state standby

at org.apache.hadoop.hdfs.server.namenode.ha.StandbyState.checkOperation(StandbyState.java:87)

at org.apache.hadoop.hdfs.server.namenode.NameNode$NameNodeHAContext.checkOperation(NameNode.java:1754)

at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.checkOperation(FSNamesystem.java:1337)

at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.startFileInt(FSNamesystem.java:2492)

at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.startFile(FSNamesystem.java:2446)

at org.apache.hadoop.hdfs.server.namenode.NameNodeRpcServer.create(NameNodeRpcServer.java:566)

at org.apache.hadoop.hdfs.server.namenode.AuthorizationProviderProxyClientProtocol.create(AuthorizationProviderProxyClientProtocol.java:108)

at org.apache.hadoop.hdfs.protocolPB.ClientNamenodeProtocolServerSideTranslatorPB.create(ClientNamenodeProtocolServerSideTranslatorPB.java:388)

at org.apache.hadoop.hdfs.protocol.proto.ClientNamenodeProtocolProtos$ClientNamenodeProtocol$2.callBlockingMethod(ClientNamenodeProtocolProtos.java)

at org.apache.hadoop.ipc.ProtobufRpcEngine$Server$ProtoBufRpcInvoker.call(ProtobufRpcEngine.java:587)

at org.apache.hadoop.ipc.RPC$Server.call(RPC.java:1026)

at org.apache.hadoop.ipc.Server$Handler$1.run(Server.java:2013)

at org.apache.hadoop.ipc.Server$Handler$1.run(Server.java:2009)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:415)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1678)

at org.apache.hadoop.ipc.Server$Handler.run(Server.java:2007) at org.apache.hadoop.ipc.Client.call(Client.java:1411)

at org.apache.hadoop.ipc.Client.call(Client.java:1364)

at org.apache.hadoop.ipc.ProtobufRpcEngine$Invoker.invoke(ProtobufRpcEngine.java:206)

at com.sun.proxy.$Proxy14.create(Unknown Source)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.hadoop.io.retry.RetryInvocationHandler.invokeMethod(RetryInvocationHandler.java:187)

at org.apache.hadoop.io.retry.RetryInvocationHandler.invoke(RetryInvocationHandler.java:102)

at com.sun.proxy.$Proxy14.create(Unknown Source)

at org.apache.hadoop.hdfs.protocolPB.ClientNamenodeProtocolTranslatorPB.create(ClientNamenodeProtocolTranslatorPB.java:264)

at org.apache.hadoop.hdfs.DFSOutputStream.newStreamForCreate(DFSOutputStream.java:1612)

at org.apache.hadoop.hdfs.DFSClient.create(DFSClient.java:1488)

at org.apache.hadoop.hdfs.DFSClient.create(DFSClient.java:1413)

at org.apache.hadoop.hdfs.DistributedFileSystem$6.doCall(DistributedFileSystem.java:387)

at org.apache.hadoop.hdfs.DistributedFileSystem$6.doCall(DistributedFileSystem.java:383)

at org.apache.hadoop.fs.FileSystemLinkResolver.resolve(FileSystemLinkResolver.java:81)

at org.apache.hadoop.hdfs.DistributedFileSystem.create(DistributedFileSystem.java:383)

at org.apache.hadoop.hdfs.DistributedFileSystem.create(DistributedFileSystem.java:327)

at org.apache.hadoop.fs.FileSystem.create(FileSystem.java:906)

at org.apache.hadoop.fs.FileSystem.create(FileSystem.java:887)

at org.apache.hadoop.fs.FileSystem.create(FileSystem.java:784)

at org.apache.hadoop.fs.FileSystem.create(FileSystem.java:773)

at org.apache.flume.sink.hdfs.HDFSDataStream.doOpen(HDFSDataStream.java:86)

at org.apache.flume.sink.hdfs.HDFSDataStream.open(HDFSDataStream.java:113)

at org.apache.flume.sink.hdfs.BucketWriter$1.call(BucketWriter.java:273)

at org.apache.flume.sink.hdfs.BucketWriter$1.call(BucketWriter.java:262)

at org.apache.flume.sink.hdfs.BucketWriter$9$1.run(BucketWriter.java:722)

at org.apache.flume.sink.hdfs.BucketWriter.runPrivileged(BucketWriter.java:183)

at org.apache.flume.sink.hdfs.BucketWriter.access$1700(BucketWriter.java:59)

at org.apache.flume.sink.hdfs.BucketWriter$9.call(BucketWriter.java:719)

at java.util.concurrent.FutureTask.run(FutureTask.java:266)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1142)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:617)

at java.lang.Thread.run(Thread.java:748)

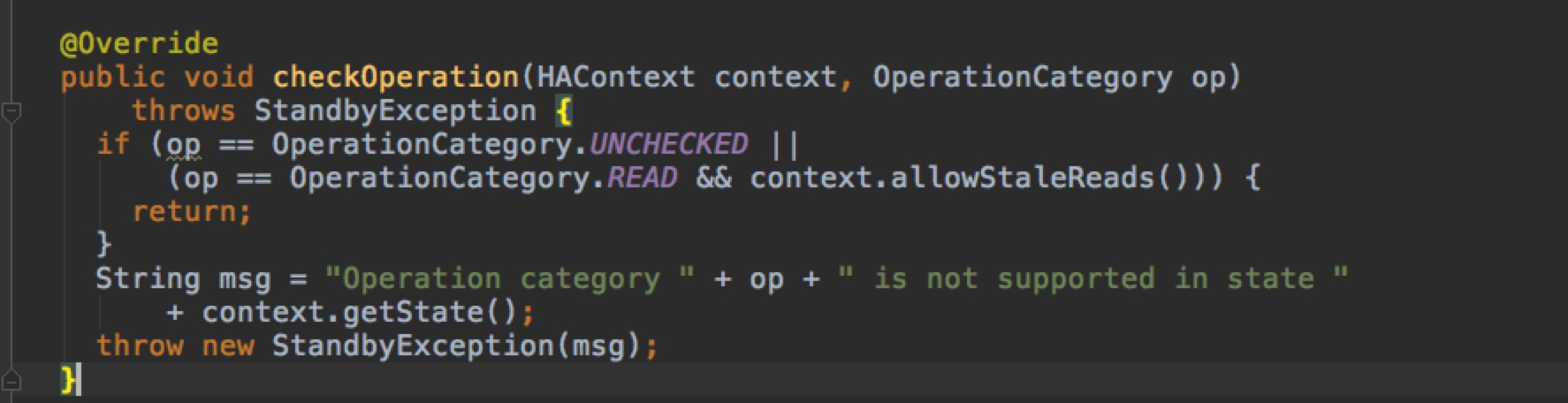

查看hadoop源码:

发些是写HDFS 无法写入权限,空间不足引起

参考:http://blog.csdn.net/xueyao0201/article/details/79530130

cloudera-hdfs 告警处理的更多相关文章

- Cloudera Certified Associate Administrator案例之Configure篇

Cloudera Certified Associate Administrator案例之Configure篇 作者:尹正杰 版权声明:原创作品,谢绝转载!否则将追究法律责任. 一.下载CDH集群中最 ...

- 大数据平台R语言web UI应用架构 设计与开发

1. 系统拓扑图 在日常业务分析中,R是非常常用的分析工具,而当数据量较大时,用R语言需要需用更多的时间来完成训练模型,spark作为大规模数据处理框架,采用内存计算,可以短时间内完成大量的数据的处理 ...

- namenode No valid image files

1,角色日志报错 Encountered exception loading fsimage java.io.FileNotFoundException: No valid image files f ...

- 即将上线的Spark服务器面临的一系列填坑笔记

即将上线的Spark服务器面临的一系列填坑笔记 作者:尹正杰 版权声明:原创作品,谢绝转载!否则将追究法律责任. 把kafka和flume倒腾玩了,以为可以轻松一段时间了,没想到使用CDH部署的spa ...

- Impala SQL 语言元素(翻译)[转载]

原 Impala SQL 语言元素(翻译) 本文来源于http://my.oschina.net/weiqingbin/blog/189413#OSC_h2_2 摘要 http://www.cloud ...

- Impala SQL 语言元素(翻译)

摘要: http://www.cloudera.com/content/cloudera-content/cloudera-docs/Impala/latest/Installing-and-Usin ...

- 报错:Error, CM server guid updated, expected xxxxx, received xxxxx (未解决)

报错背景: CDH断电重启后,cloudera-scm-server启动报错, cloudera-scm-server 已死,但 pid 文件仍存 由于没有成熟的解决方案,于是我就重新安装了MySQL ...

- 安装CDH5.11.2集群

master 192.168.1.30 saver1 192.168.1.40 saver2 192.168.1.50 首先,时间同步 然后,ssh互通 接下来开始: 1.安装MySQL5.6. ...

- 使用Cloudera Manager搭建HDFS完全分布式集群

使用Cloudera Manager搭建HDFS完全分布式集群 作者:尹正杰 版权声明:原创作品,谢绝转载!否则将追究法律责任. 关于Cloudera Manager的搭建我这里就不再赘述了,可以参考 ...

- Problem of Uninstall Cloudera: Can't Add Hdfs and Reported Cannot Find CDH's bigtop-detect-javahome

[toc] 1. Problem We wrote a shell script to uninstall Cloudera Manager(CM) that run in a cluster wit ...

随机推荐

- spring集成mybatis的mybatis参考配置

<?xml version="1.0" encoding="UTF-8"?><!DOCTYPE configuration PUBLIC &q ...

- 尚硅谷redis学习1-NOSQL简介

本系列是自己学习尚硅谷redis视频的记录,防止遗忘,供以后用到时快速回忆起来,照抄视频和资料而已,没什么技术含量,仅给自己入门了解,我是对着视频看一遍再写的,视频地址如下:尚硅谷Redis视频 背景 ...

- 单件模式——Head First

一.定义 单件模式(Singleton Pattern)确保一个类只有一个实例,并提供一个全局访问点. 二.适用性 1.当类只能有一个实例而且客户可以从一个众所周知的访问点访问它时. 2.当这个唯一实 ...

- python实现排序算法一:快速排序

##快速排序算法##基本思想:分治法,将数组分为大于和小于该值的两部分数据,然后在两部分数据中进行递归排序,直到只有一个数据结束## step1: 取数组第一个元素为key值,设置两个变量,i = 0 ...

- 封装jQuery下载文件组件

使用jQuery导出文档文件 jQuery添加download组件 jQuery.download = function(url, data, method){ if( url && ...

- js固定底部菜单

<!DOCTYPE HTML> <html> <head> <meta http-equiv="Content-Type" content ...

- linux移植常见问题

*************1.给板子添加新的驱动**************** 一. 驱动程序编译进内核的步骤在 linux 内核中增加程序需要完成以下三项工作:1. 将编写的源代码复制 ...

- NPM安装依赖速度慢问题

[NPM安装依赖速度慢问题] npm config set registry http://registry.npm.taobao.org 参考:http://blog.csdn.net/rongbo ...

- macaca自动化测试以及配置环境问题

macaca 测试和环境问题 标签(空格分隔): macaca自动化配置环境问题 macaca环境变量配置 基本环境需要准备的东西: JDK的安装及环境配置:(1.8) Node.js的安装及环境配置 ...

- pip安装离线包

离线包从pypi.org下载 pip download -r requirements.txt -d /tmp/paks/ 在linux下 1.下载指定的包到指定文件夹. ...