SciTech-Mathematics-Probability+Statistics-Comparison:Chance + Possibility + Likelihood + Probability

https://www.geeksforgeeks.org/what-is-the-difference-between-likelihood-and-probability/

1. Chance

2. Possibility

3. Likelihood

4. Probability

In summary:

- \(\large probability\) quantifies the \(\large likelihood\) of future events.

- while \(\large likelihood\) quantifies the \(\large probability\) of past observations given a specific model or hypothesis.

Understanding the distinction between these concepts is crucial for conducting \(\large statistical\ inference\) and interpreting the results of \(\large statistical\ analyses\) accurately.

Difference: "Likelihood" and "Probability"

Last Updated : 30 Jul, 2024

Answer: In statistics,

- "likelihood": refers to the chance of observing the data given a particular model or hypothesis.

- "probability": represents the chance of an event (an outcome of an experiment) occurring, assessed ahead of time.

By analogy with "Resolve" and "Determination": likelihood is similar to resolve, and probability is similar to determination.

Likelihood vs Probability: Comparison

| Feature | Likelihood | Probability |
|---|---|---|
| Definition | The probability of observing data given a specific model or hypothesis. | The measure of the likelihood that an event will occur before it happens. |
| Application | Used in statistical inference to assess the plausibility of different parameter values given observed data. | Used in probability theory to quantify uncertainty associated with the occurrence of future events. |
| Directionality | Backward-looking: concerns the probability of past observations given a model. | Forward-looking: concerns the likelihood of future events. |
| Parameterization | Associated with the likelihood of parameter values given observed data. | Associated with the likelihood of outcomes of random experiments or events. |
| Interpretation | Interpreted as a measure of support for different parameter values given observed data. | Interpreted as a measure of belief or uncertainty about future events. |
| Example | In \(\large linear\ regression\), the \(\large likelihood\ function\) measures the \(\large probability\) of observing the given set of data points under the assumption that they are generated from a linear relationship between the variables. | The \(\large probability\) of \(\large \text{rolling a six on a fair six-sided die}\) is \(\large 1/6\) because there is one favorable outcome (rolling a six) out of six equally likely possible outcomes. |

Similar Questions

Q1. What is the difference between likelihood and probability in statistical analysis?

Q2. How does likelihood relate to parameter estimation in statistical models?

Q3. Can you provide another example of how likelihood is used in statistical inference?

Q4. How is probability used to make predictions about future events?

Q5. What role does probability play in hypothesis testing?

Q6. How does Bayesian inference use the concept of likelihood?

Q7. What is the likelihood function in the context of maximum likelihood estimation?

Q8. How do likelihood and probability differ in their application to random experiments?

Q9. Can you explain the role of likelihood in model selection and comparison?

Q10. How does the concept of probability apply to everyday decision-making scenarios?

Difference: Likelihood vs. Probability

By Zach Bobbitt, posted on August 18, 2021

Two terms that students often confuse in statistics are likelihood and probability.

THREE STEPS TO SOLVE A PROBLEM WITH A STATISTICAL MODEL:

Modeling:

- Initialization: Select A Model from a list of available models.

- Conditions

- Parameterization: Parameters, Types, Ranges, Values, Limit of Permissible Variation.

Verification of Model:

- Observation: Draw a Sample of observations from the process described by the selected Model and Parameters.

- Verification: Try to determine, from the Sample Data we have observed, whether we can trust the Parameters and the Model.

- We can then identify the possible outcomes for the next step: Experiment and Event.

Observation and Verification establish which outcomes are possible. It would then be desirable to make a precise statement of the Likelihoods of the different possible counts of each outcome.

Probability+Statistics

- Experiment and Event

- Assign Probabilities to possible outcomes.

Here's the difference in a nutshell:

Probability refers to the chance that a particular outcome occurs based on the values of parameters in a model.

Likelihood refers to how well a sample of data provides support for particular values of a parameter in a model.

When calculating the probability of some outcome, we assume the parameters in a model are trustworthy.

However, when we calculate likelihood, we're trying to determine if we can trust the parameters in a model based on the sample data that we've observed.
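The distinction can also be written compactly. The notation here is an assumption added for illustration and does not appear in the source articles: \(x\) stands for the observed data and \(\theta\) for the model parameters.

```latex
% Probability: parameters \theta are held fixed, the outcome x varies.
\[
  x \;\mapsto\; P(x \mid \theta), \qquad \sum_{x} P(x \mid \theta) = 1
\]
% Likelihood: the observed data x are held fixed, the parameters \theta vary.
\[
  \theta \;\mapsto\; \mathcal{L}(\theta \mid x) = P(x \mid \theta)
\]
```

Both expressions use the same formula; what changes is which argument is treated as the variable. Unlike a probability distribution over outcomes, the likelihood does not have to sum (or integrate) to 1 over \(\theta\), so only comparisons between likelihood values are meaningful.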

The following examples illustrate the difference between probability and likelihood in various scenarios.

Example 1: Likelihood vs. Probability in Coin Tosses

Suppose (Modeling):

- we have a coin that is assumed to be fair.

- If we flip the coin one time, the probability that it will land on heads is 0.5.

Now suppose (Model Verification):

- We flip the coin 100 times and it only lands on heads 17 times.

- We would say that the likelihood that the coin is fair is quite low. If the coin were actually fair, we would expect it to land on heads much more often.

- When calculating the probability of a coin landing on heads, we simply assume that P(heads) = 0.5 on a given toss.

- When calculating the likelihood, we're trying to determine if the model parameter (p = 0.5) is actually correctly specified.

In the example above, a coin landing on heads only 17 out of 100 times makes us highly doubtful that the true probability of the coin landing on heads on a given toss is actually p = 0.5.
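The coin example can be made concrete with a short calculation. The sketch below is not from the original article; it assumes the flips are independent, so that the number of heads follows a binomial distribution. The function name `binomial_likelihood` is hypothetical.

```python
from math import comb


def binomial_likelihood(p: float, heads: int, flips: int) -> float:
    """Probability of exactly `heads` heads in `flips` independent flips,
    read as a function of the parameter p (the data are held fixed)."""
    return comb(flips, heads) * p**heads * (1 - p) ** (flips - heads)


heads, flips = 17, 100

# Likelihood of the "fair coin" parameter value given the observed data.
lik_fair = binomial_likelihood(0.5, heads, flips)

# Likelihood of the value that best matches the data (17/100 = 0.17).
lik_best = binomial_likelihood(heads / flips, heads, flips)

print(f"L(p = 0.50) = {lik_fair:.3e}")
print(f"L(p = 0.17) = {lik_best:.3e}")
print(f"ratio       = {lik_fair / lik_best:.3e}")
```

The ratio of the two likelihoods is many orders of magnitude below 1, which is the quantitative version of "the likelihood that the coin is fair is quite low".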

Example 2: Likelihood vs. Probability in Spinners

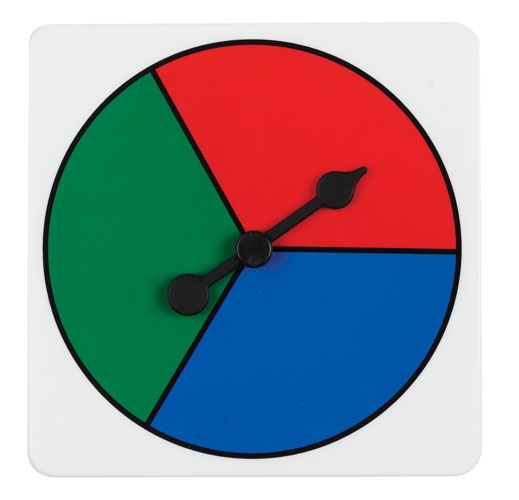

Suppose we have a spinner split into thirds with three colors on it: red, green, and blue.

Suppose we assume that it's equally likely for the spinner to land on any of the three colors.

If we spin it one time, the probability that it lands on red is 1/3.

Now suppose we spin it 100 times and it lands on red 2 times, green 90 times, and blue 8 times.

- We would say that the likelihood that "the spinner is actually equally likely to land on each color" is very low.

- When calculating the probability of the spinner landing on red, we simply assume that P(red) = 1/3 on a given spin.

- When calculating the likelihood we're trying to determine if the model parameters (P(red) = 1/3, P(green) = 1/3, P(blue) = 1/3) are actually correctly specified.

In the example above, the results of the 100 spins make us highly doubtful that each color is equally likely to occur.
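With three outcomes, the same idea can be sketched using a multinomial model. This is an illustration under the assumption that spins are independent; it is not part of the original article, and `multinomial_log_likelihood` is a hypothetical helper. Log-likelihoods are used to avoid very small numbers.

```python
from math import lgamma, log


def multinomial_log_likelihood(counts, probs):
    """Log of the multinomial probability of `counts` under `probs`,
    read as a function of `probs` (the counts are held fixed)."""
    n = sum(counts)
    # log of n! / (c1! * c2! * ...), using lgamma(k + 1) = log(k!)
    log_coef = lgamma(n + 1) - sum(lgamma(c + 1) for c in counts)
    return log_coef + sum(c * log(p) for c, p in zip(counts, probs) if c > 0)


counts = [2, 90, 8]  # red, green, blue out of 100 spins

# Assumed model: each color is equally likely.
uniform = [1 / 3, 1 / 3, 1 / 3]

# Parameter values that match the observed frequencies exactly.
observed = [c / sum(counts) for c in counts]

ll_uniform = multinomial_log_likelihood(counts, uniform)
ll_observed = multinomial_log_likelihood(counts, observed)

print(f"log L(uniform)  = {ll_uniform:.2f}")
print(f"log L(observed) = {ll_observed:.2f}")
print(f"difference      = {ll_observed - ll_uniform:.2f}")
```

A large gap between the two log-likelihoods indicates that the data give far less support to the equal-thirds model than to the observed frequencies.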

Example 3: Likelihood vs. Probability in Gambling

Suppose a casino claims that the probability of winning money on a certain slot machine is 40% for each turn.

If we take one turn, the probability that we will win money is 0.40.

Now suppose we take 100 turns and we win 42 times. We would conclude that the casino's claimed 40% probability of winning seems fair: the observed data give high likelihood to P(winning) = 0.40.

- When calculating the probability of winning on a given turn, we simply assume that P(winning) = 0.40 on a given turn.

- When calculating the likelihood we're trying to determine if the model parameter P(winning) = 0.40 is actually correctly specified.

In the example above, winning 42 times out of 100 makes us believe that a probability of winning 40% of the time seems reasonable. ( Believe? )
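One way to put a number on "seems reasonable" is the relative likelihood: the likelihood of the claimed value divided by the likelihood of the best-supported value. The sketch below is an illustration, not the article's method; it assumes independent turns and searches a coarse grid of candidate values for p. The function name `log_likelihood` is hypothetical.

```python
from math import exp, log


def log_likelihood(p: float, wins: int, turns: int) -> float:
    """Binomial log-likelihood of p, up to an additive constant.
    The binomial coefficient is omitted because it does not depend on p
    and cancels when two likelihoods are compared."""
    return wins * log(p) + (turns - wins) * log(1 - p)


wins, turns = 42, 100

# Candidate parameter values 0.01, 0.02, ..., 0.99.
grid = [i / 100 for i in range(1, 100)]

# Grid value with the highest likelihood given the observed data.
p_hat = max(grid, key=lambda p: log_likelihood(p, wins, turns))

# Likelihood of the casino's claim relative to the best grid value.
relative = exp(log_likelihood(0.40, wins, turns) - log_likelihood(p_hat, wins, turns))

print(f"best-supported p = {p_hat:.2f}")
print(f"relative likelihood of p = 0.40: {relative:.3f}")
```

A relative likelihood close to 1 means the data support the claimed value almost as well as the best-fitting one, in contrast to the coin and spinner examples.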

Additional Resources

The following tutorials provide additional information about probability:

What is a Probability Distribution Table?

What is the Law of Total Probability?

How to Find the Mean of a Probability Distribu
