ural1772 Ski-Trails for Robots

Ski-Trails for Robots

Memory limit: 64 MB

Input

Output

Sample

| input | output |
|---|---|
| 5 3 2 … | 6 |

Analysis: see http://blog.csdn.net/xcszbdnl/article/details/38494201.

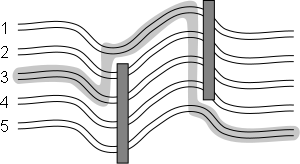

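For the current obstacle, a robot that is blocked by it only needs to move to a lane right beside the obstacle (lane a-1 or lane b+1). That is the cheapest lane it can reach, and any further sideways movement can be postponed until a later obstacle forces it. So for each obstacle it is enough to add those two lanes to the set of candidate lanes, relax them from their nearest candidates, and delete every candidate inside [a, b].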
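To sanity-check the claim above on small inputs, here is a minimal brute-force sketch that drops the candidate set and tracks every lane. The Input/Output text is missing, so the model is inferred from the program below: lanes 1..n, start lane s, an obstacle blocks lanes a..b, each one-lane sideways step costs 1, and the answer is the fewest total sideways steps. `bruteForce` is a hypothetical name. Before each obstacle it applies a two-sweep distance transform, dp[x] = min over y of dp[y] + |x - y|, and then forbids the blocked lanes. This takes O(n·k) time overall.

```cpp
#include <algorithm>
#include <cassert>
#include <climits>
#include <utility>
#include <vector>

// Hypothetical O(n*k) reference solver for the assumed model:
// lanes 1..n, start lane s, obstacle i blocks lanes [a_i, b_i],
// each one-lane sideways step costs 1.
// Returns the minimum total number of sideways steps, or -1 if some
// obstacle leaves no reachable lane.
long long bruteForce(int n, int s, const std::vector<std::pair<int, int>>& obstacles)
{
    assert(1 <= s && s <= n);
    const long long INF = LLONG_MAX / 4;      // large, but INF + 1 cannot overflow
    std::vector<long long> dp(n + 2, INF);    // dp[x]: cheapest way to be on lane x
    dp[s] = 0;
    for (const auto& ob : obstacles)
    {
        int a = ob.first, b = ob.second;
        // Distance transform: dp[x] = min over y of dp[y] + |x - y|, via two sweeps.
        for (int x = 2; x <= n; x++) dp[x] = std::min(dp[x], dp[x - 1] + 1);
        for (int x = n - 1; x >= 1; x--) dp[x] = std::min(dp[x], dp[x + 1] + 1);
        // Lanes covered by the obstacle cannot be occupied while passing it.
        for (int x = a; x <= b; x++) dp[x] = INF;
    }
    long long best = INF;
    for (int x = 1; x <= n; x++) best = std::min(best, dp[x]);
    return best == INF ? -1 : best;
}
```

For example, `bruteForce(5, 3, {{1, 3}})` returns 1, because the robot only has to step from lane 3 to lane 4. Comparing this function with the set-based program on small random inputs is a cheap way to test the neighbour argument.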

Code:

```cpp
#include <algorithm>
#include <cstdio>
#include <iterator>
#include <set>
using namespace std;

typedef long long ll;
const int maxn = 1e5 + 10;       // lanes 1..n plus sentinels 0 and n+1
const ll INF = 1e18;

int n, s, k;
set<int> p;                      // candidate lanes the robot may be on
ll dp[maxn];                     // dp[x]: fewest sideways steps to reach lane x

// Add lane x (right beside an obstacle) to the candidate set and relax it
// from its nearest candidates on the left (pr) and on the right (la).
// The sentinels 0 and n+1 guarantee that both neighbours exist.
void relax(int x)
{
    set<int>::iterator now = p.insert(x).first;
    set<int>::iterator pr = prev(now), la = next(now);
    dp[x] = min(dp[x], dp[*pr] + (x - *pr));
    dp[x] = min(dp[x], dp[*la] + (*la - x));
}

int main()
{
    scanf("%d%d%d", &n, &s, &k);
    for (int i = 0; i <= n + 1; i++) dp[i] = INF;
    p.insert(0), p.insert(n + 1), p.insert(s);   // two sentinels + start lane
    dp[s] = 0;
    while (k--)
    {
        int a, b;
        scanf("%d%d", &a, &b);          // this obstacle blocks lanes a..b
        if (a > 1) relax(a - 1);        // lane just left of the obstacle
        if (b < n) relax(b + 1);        // lane just right of the obstacle
        // Lanes inside [a, b] are blocked: drop them from the candidates.
        set<int>::iterator it = p.lower_bound(a);
        while (it != p.end() && *it <= b)
        {
            dp[*it] = INF;
            it = p.erase(it);           // C++11 erase returns the next iterator
        }
    }
    ll mi = INF;
    for (int i = 1; i <= n; i++) mi = min(mi, dp[i]);
    printf("%lld\n", mi);
    return 0;
}
```
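The program relaxes each new lane only from its two nearest candidates `pr` and `la`. Below is a short argument for why that is enough. It is a reconstruction, not taken from the referenced post, and it assumes that the candidate values stay 1-Lipschitz. Let $P$ be the set of candidate lanes, excluding the sentinels. The invariant is

$$dp[x] \le dp[y] + |x - y| \qquad \text{for all } x, y \in P.$$

The invariant holds at the start, when $P = \{s\}$. Now relax lane $z$, and let $\ell < z < r$ be its nearest neighbours in the set. For every $y \in P$ with $y > r$,

$$dp[r] + (r - z) \le dp[y] + (y - r) + (r - z) = dp[y] + (y - z),$$

and the case $y < \ell$ is symmetric. If $\ell$ or $r$ is a sentinel, its value is $\infty$ and no candidate lies beyond it. Hence the new value is the true cost of walking sideways to $z$:

$$dp[z] = \min_{y \in P} \bigl(dp[y] + |z - y|\bigr).$$

The invariant survives the update. Let $w$ attain the minimum. Then $dp[y] \le dp[w] + |y - w| \le dp[w] + |w - z| + |z - y| = dp[z] + |z - y|$ for every $y \in P$, and $dp[z] \le dp[y] + |z - y|$ holds by minimality. Deleting the lanes inside $[a, b]$ cannot break it either.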
