How Many Partitions Does An RDD Have

From https://databricks.gitbooks.io/databricks-spark-knowledge-base/content/performance_optimization/how_many_partitions_does_an_rdd_have.html

For tuning and troubleshooting, it's often necessary to know how many paritions an RDD represents. There are a few ways to find this information:

View Task Execution Against Partitions Using the UI

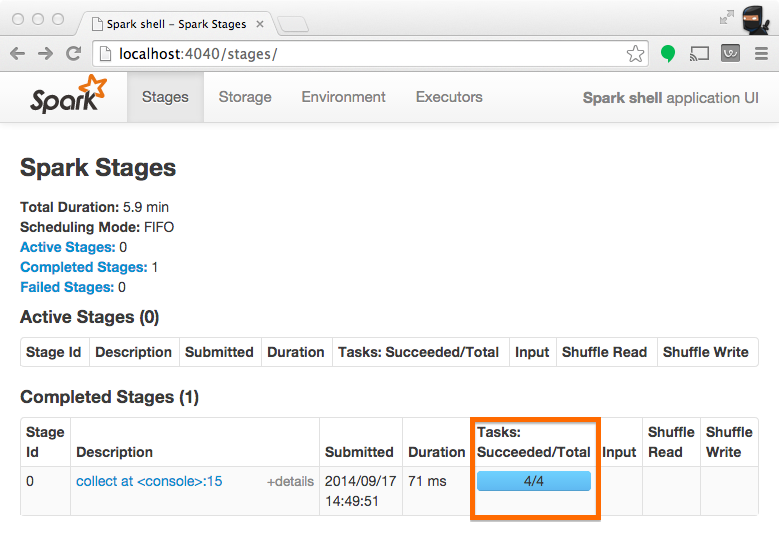

When a stage executes, you can see the number of partitions for a given stage in the Spark UI. For example, the following simple job creates an RDD of 100 elements across 4 partitions, then distributes a dummy map task before collecting the elements back to the driver program:

scala> val someRDD = sc.parallelize(1 to 100, 4)

someRDD: org.apache.spark.rdd.RDD[Int] = ParallelCollectionRDD[0] at parallelize at <console>:12

scala> someRDD.map(x => x).collect

res1: Array[Int] = Array(1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18, 19, 20, 21, 22, 23, 24, 25, 26, 27, 28, 29, 30, 31, 32, 33, 34, 35, 36, 37, 38, 39, 40, 41, 42, 43, 44, 45, 46, 47, 48, 49, 50, 51, 52, 53, 54, 55, 56, 57, 58, 59, 60, 61, 62, 63, 64, 65, 66, 67, 68, 69, 70, 71, 72, 73, 74, 75, 76, 77, 78, 79, 80, 81, 82, 83, 84, 85, 86, 87, 88, 89, 90, 91, 92, 93, 94, 95, 96, 97, 98, 99, 100)

In Spark's application UI, you can see from the following screenshot that the "Total Tasks" represents the number of partitions:

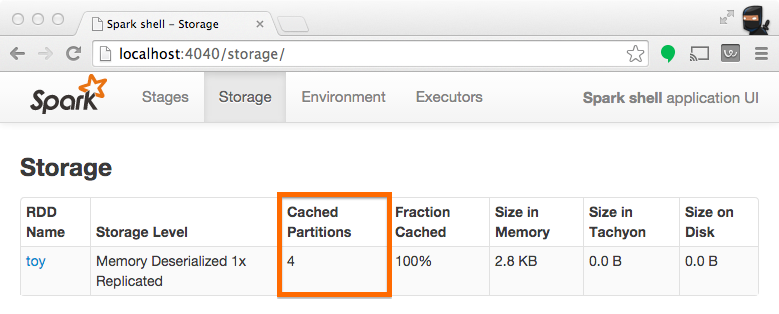

View Partition Caching Using the UI

When persisting (a.k.a. caching) RDDs, it's useful to understand how many partitions have been stored. The example below is identical to the one prior, except that we'll now cache the RDD prior to processing it. After this completes, we can use the UI to understand what has been stored from this operation.

scala> someRDD.setName("toy").cache

res2: someRDD.type = toy ParallelCollectionRDD[0] at parallelize at <console>:12

scala> someRDD.map(x => x).collect

res3: Array[Int] = Array(1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18, 19, 20, 21, 22, 23, 24, 25, 26, 27, 28, 29, 30, 31, 32, 33, 34, 35, 36, 37, 38, 39, 40, 41, 42, 43, 44, 45, 46, 47, 48, 49, 50, 51, 52, 53, 54, 55, 56, 57, 58, 59, 60, 61, 62, 63, 64, 65, 66, 67, 68, 69, 70, 71, 72, 73, 74, 75, 76, 77, 78, 79, 80, 81, 82, 83, 84, 85, 86, 87, 88, 89, 90, 91, 92, 93, 94, 95, 96, 97, 98, 99, 100)

Note from the screenshot that there are four partitions cached.

Inspect RDD Partitions Programatically

In the Scala API, an RDD holds a reference to it's Array of partitions, which you can use to find out how many partitions there are:

scala> val someRDD = sc.parallelize(1 to 100, 30)

someRDD: org.apache.spark.rdd.RDD[Int] = ParallelCollectionRDD[0] at parallelize at <console>:12

scala> someRDD.partitions.size

res0: Int = 30

In the python API, there is a method for explicitly listing the number of partitions:

In [1]: someRDD = sc.parallelize(range(101),30)

In [2]: someRDD.getNumPartitions()

Out[2]: 30

Note in the examples above, the number of partitions was intentionally set to 30 upon initialization.

How Many Partitions Does An RDD Have的更多相关文章

- Spark核心概念之RDD

RDD: Resilient Distributed Dataset RDD的特点: 1.A list of partitions 一系列的分片:比如说64M一片:类似于Hadoop中的s ...

- RDD的依赖关系

RDD的依赖关系 Rdd之间的依赖关系通过rdd中的getDependencies来进行表示, 在提交job后,会通过在DAGShuduler.submitStage-->getMissingP ...

- RDD.scala(源码)

---- map. --- flatMap.fliter.distinct.repartition.coalesce.sample.randomSplit.randomSampleWithRange. ...

- Spark函数详解系列之RDD基本转换

摘要: RDD:弹性分布式数据集,是一种特殊集合 ‚ 支持多种来源 ‚ 有容错机制 ‚ 可以被缓存 ‚ 支持并行操作,一个RDD代表一个分区里的数据集 RDD有两种操作算子: ...

- Spark编程模型及RDD操作

转载自:http://blog.csdn.net/liuwenbo0920/article/details/45243775 1. Spark中的基本概念 在Spark中,有下面的基本概念.Appli ...

- 【原创】大数据基础之Spark(4)RDD原理及代码解析

一 简介 spark核心是RDD,官方文档地址:https://spark.apache.org/docs/latest/rdd-programming-guide.html#resilient-di ...

- Spark源码系列:RDD repartition、coalesce 对比

在上一篇文章中 Spark源码系列:DataFrame repartition.coalesce 对比 对DataFrame的repartition.coalesce进行了对比,在这篇文章中,将会对R ...

- 【Spark-core学习之二】 RDD和算子

环境 虚拟机:VMware 10 Linux版本:CentOS-6.5-x86_64 客户端:Xshell4 FTP:Xftp4 jdk1.8 scala-2.10.4(依赖jdk1.8) spark ...

- spark 算子之RDD

map map(func) Return a new distributed dataset formed by passing each element of the source through ...

随机推荐

- 打包c++项目

InstallShield Limited Edition for Visual Studio 2013 图文教程(教你如何打包.NET程序) InstallShield 2015 Limited E ...

- Jmeter中使用CSV Data Set Config

A

- python中的全局变量、局部变量、实例变量

1.全局变量:在模块内,在所有函数.类外面. 2.局部变量:在函数内,在类方法内(未加self修饰的) 3.静态变量:在类内,但不在类方法内.[共同类所有,值改变后,之后所有的实例对象也改变] 4.实 ...

- 搞定PHP面试 - 变量知识点整理

一.变量的定义 1. 变量的命名规则 变量名可以包含字母.数字.下划线,不能以数字开头. $Var_1 = 'foo'; // 合法 $var1 = 'foo'; // 合法 $_var1 = 'fo ...

- TP框架 mysql子查询

一些比较复杂的业务关系,用子查询解决. 比循环便利要好的多哈. 比如下面这句 select 和where in 语句都用了子查询. 因为父查询在select里,所以用了select的字段当子查询的条件 ...

- MySQL Query Cache 相关的问题

最近经常有人问我 MySQL Query Cache 相关的问题,就整理一点 MySQL Query Cache 的内容,以供参考. 顾名思义,MySQL Query Cache 就是用来缓存和 Qu ...

- gcc 源代码分析-前端篇2

2. 对ID及保留字的处理 在c语言中,系统预留了非常多keyword.也被称为保留字,比方表示数据类型的int,short,char,控制分支运行的if,then等. 不论什么keyword, ...

- 重定向标准输出到socket的方法

- 小P寻宝记——好基友一起走

小P寻宝记--好基友一起走 Time Limit: 1000ms Memory limit: 65536K 有疑问?点这里^_^ 题目描写叙述 话说.上次小P到伊利哇呀国旅行得到了一批宝藏.他是 ...

- .NET 图片解密为BASE64

#region 图片加密 /// <summary> /// 加密本地文件 /// </summary> /// <param name="inputname& ...