Flink之Stateful Operators

Implementing Stateful Functions

source function的stateful看官网,要加lock

Declaring Keyed State at the RuntimeContext

state可通过 rich functions 、Listcheckpoint和CheckpointFunction获得。

在Flink中,当对某个数据进行处理时,从上下文中获取state时,只会获取该数据key对应的state。

四种Keyed State:

ValueState[T]:ValueState[T]holds a single value of typeT..value() 和 update(value: T)

ListState[T]:ListState[T]holds a list of elements of typeT..add(value: T) or .addAll(values: java.util.List[T])

.get() which returns an

Iterable[T]over all state elements..update(values: java.util.List[T]),没有删除

MapState[K, V]:MapState[K, V]holds a map of keys and values. The state primitive offers many methods of a regular Java Map such asget(key: K),put(key: K, value: V),contains(key: K),remove(key: K), and iterators over the contained entries, keys, and values.ReducingState[T]:ReducingState[T]offers the same methods asListState[T](except foraddAll()andupdate()) but instead of appending values to a list,ReducingState.add(value: T)immediately aggregatesvalueusing aReduceFunction. The iterator returned byget()returns anIterablewith a single entry, which is the reduced value.AggregatingState[I, O]:AggregatingState[I, O]it uses the more generalAggregateFunctionto aggregate values.

// 启动警报,如果两次测量的差距过大

val keyedData: KeyedStream[SensorReading, String] = sensorData

.keyBy(_.id)

.flatMap(new TemperatureAlertFunction(1.1))

// --------------------------------------------------------------

class TemperatureAlertFunction(val threshold: Double)

extends RichFlatMapFunction[SensorReading, (String, Double, Double)] {

// 定义state的类型

private var lastTempState: ValueState[Double] = _

override def open(parameters: Configuration): Unit = {

// create state descriptor,实例化state,需要state的名字和类

val lastTempDescriptor =

new ValueStateDescriptor[Double]("lastTemp", classOf[Double])

// obtain the state handle and register state for last temperature 登记时会检查state backend是否有这个函数的data、state with the given name and type,从checkpoint恢复有可能有,有就关联

lastTempState = getRuntimeContext

.getState[Double](lastTempDescriptor) // 只能取得当前数据的key相关的state。如果上面定义类ListState,那就getListState

}

override def flatMap(

in: SensorReading,

out: Collector[(String, Double, Double)]): Unit = {

// fetch the last temperature from state

val lastTemp = lastTempState.value()

// check if we need to emit an alert

if (lastTemp > 0d && (in.temperature / lastTemp) > threshold) {

// temperature increased by more than the threshold

out.collect((in.id, in.temperature, lastTemp))

}

// update lastTemp state

this.lastTempState.update(in.temperature)

}

}

// 简化

val alerts: DataStream[(String, Double, Double)] = keyedSensorData

.flatMapWithState[(String, Double, Double), Double] {

case (in: SensorReading, None) =>

// no previous temperature defined.

// Just update the last temperature

(List.empty, Some(in.temperature))

case (in: SensorReading, lastTemp: Some[Double]) =>

// compare temperature difference with threshold

if (lastTemp.get > 0 && (in.temperature / lastTemp.get) > 1.4) {

// threshold exceeded. Emit an alert and update the last temperature

(List((in.id, in.temperature, lastTemp.get)), Some(in.temperature))

} else {

// threshold not exceeded. Just update the last temperature

(List.empty, Some(in.temperature))

}

}

Implementing Operator List State with the ListCheckpointed Interface

函数需要实现ListCheckpointed接口才能处理list state。这个接口有两个方法:

- snapshotState:当Flink请求checkpoint时调用。方法要返回a list of serializable state objects

- restoreState:函数state初始化时调用,例如从checkpoint恢复。

上面两个方法在调整并发度时也要用到。

class HighTempCounter(val threshold: Double)

extends RichFlatMapFunction[SensorReading, (Int, Long)]

with ListCheckpointed[java.lang.Long] {

// index of the subtask

private lazy val subtaskIdx = getRuntimeContext

.getIndexOfThisSubtask

// local count variable

private var highTempCnt = 0L

override def flatMap(

in: SensorReading,

out: Collector[(Int, Long)]): Unit = {

if (in.temperature > threshold) {

// increment counter if threshold is exceeded

highTempCnt += 1

// emit update with subtask index and counter

out.collect((subtaskIdx, highTempCnt))

}

}

override def restoreState(

state: util.List[java.lang.Long]): Unit = {

highTempCnt = 0

// restore state by adding all longs of the list

for (cnt <- state.asScala) { // 因为 ListCheckpointed 是Java的

highTempCnt += cnt

}

}

override def snapshotState(

chkpntId: Long,

ts: Long): java.util.List[java.lang.Long] = {

// split count into ten partial counts,为了提高并发度时,state能够均匀分布,而非有些从0开始

val div = highTempCnt / 10

val mod = (highTempCnt % 10).toInt

// return count as ten parts

(List.fill(mod)(new java.lang.Long(div + 1)) ++

List.fill(10 - mod)(new java.lang.Long(div))).asJava

}

}

Using Connected Broadcast State

这个功能checkpoint时会保存多份相同的,为避免恢复时所有task都读取一个文件。但调整并法度时和理论一样,只传递一份。

步骤:

- 用DataStream.broadcast()得到BroadcastStream,其中参数为一或多个MapStateDescriptor对象,每个descriptor代表一个单独的broadcast state用于后续的操作。

- DataStream或KeyedStream.connect(BroadcastStream)

- 应用函数到connected stream,keyed或non-keyed的接口不同

// 下面例子是根据thresholds动态调整alert的阈值。

val thresholds: DataStream[ThresholdUpdate] = env.fromElements(

ThresholdUpdate("sensor_1", 5.0d),

ThresholdUpdate("sensor_2", 2.0d),

ThresholdUpdate("sensor_1", 1.2d))

val keyedSensorData: KeyedStream[SensorReading, String] =

sensorData.keyBy(_.id)

// the descriptor of the broadcast state

val broadcastStateDescriptor =

new MapStateDescriptor[String, Double](

"thresholds",

classOf[String],

classOf[Double])

// create a BroadcastStream

val broadcastThresholds: BroadcastStream[ThresholdUpdate] =

thresholds.broadcast(broadcastStateDescriptor)

// connect keyed sensor stream and broadcasted rules stream

val alerts: DataStream[(String, Double, Double)] = keyedSensorData

.connect(broadcastThresholds)

.process(new UpdatableTempAlertFunction(4.0d))

// --------------------------------------------------------------

// 如果非KeyedStream,用BroadcastProcessFunction,但它没有timer服务来登记和调用onTimer

class UpdatableTempAlertFunction(val defaultThreshold: Double)

extends KeyedBroadcastProcessFunction[String, SensorReading, ThresholdUpdate, (String, Double, Double)] {

// the descriptor of the broadcast state

private lazy val thresholdStateDescriptor =

new MapStateDescriptor[String, Double](

"thresholds",

classOf[String],

classOf[Double])

// the keyed state handle

private var lastTempState: ValueState[Double] = _

override def open(parameters: Configuration): Unit = {

// create keyed state descriptor

val lastTempDescriptor = new ValueStateDescriptor[Double](

"lastTemp",

classOf[Double])

// obtain the keyed state handle

lastTempState = getRuntimeContext

.getState[Double](lastTempDescriptor)

}

// 下面方法不能调用key state,因为broadcast不是key Stream。要对key state进行操作的话,用keyedCtx.applyToKeyedState(StateDescriptor, KeyedStateFunction)。

override def processBroadcastElement(

update: ThresholdUpdate,

keyedCtx: KeyedBroadcastProcessFunction[String, SensorReading, ThresholdUpdate, (String, Double, Double)]#KeyedContext,

out: Collector[(String, Double, Double)]): Unit = {

if (update.threshold >= 1.0d) {

// configure a new threshold of the sensor

thresholds.put(update.id, update.threshold)

} else {

// remove sensor specific threshold

thresholds.remove(update.id)

}

}

override def processElement(

reading: SensorReading,

keyedReadOnlyCtx: KeyedBroadcastProcessFunction[String, SensorReading, ThresholdUpdate, (String, Double, Double)]#KeyedReadOnlyContext,

out: Collector[(String, Double, Double)]): Unit = {

// get 只读 broadcast state

val thresholds: MapState[String, Double] = keyedReadOnlyCtx

.getBroadcastState(thresholdStateDescriptor)

// get threshold for sensor

val sensorThreshold: Double =

if (thresholds.contains(reading.id)) {

thresholds.get(reading.id)

} else {

defaultThreshold

}

// fetch the last temperature from keyed state

val lastTemp = lastTempState.value()

// check if we need to emit an alert

if (lastTemp > 0 &&

(reading.temperature / lastTemp) > sensorThreshold) {

// temperature increased by more than the threshold

out.collect((reading.id, reading.temperature, lastTemp))

}

// update lastTemp state

this.lastTempState.update(reading.temperature)

}

}

Using the CheckpointedFunction Interface

它是最底层的stateful functions接口,是唯一提供list union state的接口。它有两个方法:

initializeState():作业启动或重启时调用,包含了重启时的恢复逻辑。它的调用伴随FunctionInitializationContext,提供了OperatorStateStore和 KeyedStateStore的访问,它们都是用于登记state。snapshotState():在checkpoint时调用,接收FunctionSnapshotContext参数,用于访问checkpoint的创建时间、id等。这个方法是为了在checkpoint前完成对state的更新。和CheckpointListener接口结合,能写数据到外部存储时和checkpoint实现同步。

// 创建一个具有key和operator状态的函数,该函数按key和operator实例计算有多少传感器读数超过指定阈值。

class HighTempCounter(val threshold: Double)

extends FlatMapFunction[SensorReading, (String, Long, Long)]

with CheckpointedFunction {

// local variable for the operator high temperature cnt

var opHighTempCnt: Long = 0

var keyedCntState: ValueState[Long] = _

var opCntState: ListState[Long] = _

override def flatMap(

v: SensorReading,

out: Collector[(String, Long, Long)]): Unit = {

// check if temperature is high

if (v.temperature > threshold) {

// update local operator high temp counter

opHighTempCnt += 1

// update keyed high temp counter

val keyHighTempCnt = keyedCntState.value() + 1

keyedCntState.update(keyHighTempCnt)

// emit new counters

out.collect((v.id, keyHighTempCnt, opHighTempCnt))

}

}

override def initializeState(

initContext: FunctionInitializationContext): Unit = {

// initialize keyed state

val keyCntDescriptor = new ValueStateDescriptor[Long](

"keyedCnt",

createTypeInformation[Long])

keyedCntState = initContext.getKeyedStateStore

.getState(keyCntDescriptor)

// initialize operator state

val opCntDescriptor = new ListStateDescriptor[Long](

"opCnt",

createTypeInformation[Long])

opCntState = initContext.getOperatorStateStore

.getListState(opCntDescriptor)

// initialize local variable with state

opHighTempCnt = opCntState.get().asScala.sum

}

override def snapshotState(

snapshotContext: FunctionSnapshotContext): Unit = {

// update operator state with local state

opCntState.clear()

opCntState.add(opHighTempCnt)

}

}

// 对于sink function的例子

class BufferingSink(threshold: Int = 0)

extends SinkFunction[(String, Int)]

with CheckpointedFunction {

@transient

private var checkpointedState: ListState[(String, Int)] = _

private val bufferedElements = ListBuffer[(String, Int)]()

override def invoke(value: (String, Int)): Unit = {

bufferedElements += value

if (bufferedElements.size == threshold) {

for (element <- bufferedElements) {

// send it to the sink

}

bufferedElements.clear()

}

}

override def snapshotState(context: FunctionSnapshotContext): Unit = {

// checkpoint前清理旧的state

checkpointedState.clear()

for (element <- bufferedElements) {

checkpointedState.add(element)

}

}

override def initializeState(context: FunctionInitializationContext): Unit = {

val descriptor = new ListStateDescriptor[(String, Int)](

"buffered-elements",

TypeInformation.of(new TypeHint[(String, Int)]() {})

)

// 用UnionListState时,getUnionListState

checkpointedState = context.getOperatorStateStore.getListState(descriptor)

if(context.isRestored) {

for(element <- checkpointedState.get()) {

bufferedElements += element

}

}

}

}

Receiving Notifications about Completed Checkpoints

应用程序的状态永远不会处于一致状态,除非采用checkpoint的逻辑时间点。对于一些operator,知道checkpoint什么时候完成是重要的。例如,将数据写入具有一次性保证的外部系统的sink functions必须仅发出在成功checkpoint之前接收的记录,以确保在发生故障时不会重新计算接收到的数据。

CheckpointListener接口的notifyCheckpointComplete方法被JM调用,登记checkpoint完成,例如当所有operators成功将他们的state复制到远程。Flink不保证每个完成的checkpoint都调用这个方法,所以有可能有的task错失这个提醒。

Robustness and Performance of Stateful Applications

Flink有三种state backend: InMemoryStateBackend, FsStateBackend, and RocksDBStateBackend。也可以实现StateBackend 接口来自定义。IM和Fs将state作为一般的对象存储在TM的JVM进程。RDB则序列化所有state为RocksDB instance,当有大量state时适合使用。

val env = StreamExecutionEnvironment.getExecutionEnvironment

val checkpointPath: String = ???

val incrementalCheckpoints: Boolean = true

// configure path for checkpoints on the remote filesystem

val backend = new RocksDBStateBackend(

checkpointPath,

incrementalCheckpoints)

// configure path for local RocksDB instance on worker

backend.setDbStoragePath(dbPath)

// configure RocksDB options

backend.setOptions(optionsFactory)

// configure state backend

env.setStateBackend(backend)

Enabling Checkpointing

当开启后,JM会定期实例化checkpoint。更多调整看C9

val env = StreamExecutionEnvironment.getExecutionEnvironment

// set checkpointing interval to 10 seconds (10000 milliseconds)

env.enableCheckpointing(10000L).set.... // 其他选项

Updating Stateful Operators

这个功能的关键在于成功反序列化savepoint的数据和正确地映射数据到state。

- 正确映射

默认情况下,identifier 根据operator的属性及其前驱属性计算为唯一哈希值。 因此,如果operator或其前驱更改,那Flink将无法映射先前保存点的状态,则identifier 将不可避免地发生变化。为了能够删除或增加operator,必须手动分配唯一identifier

val alerts: DataStream[(String, Double, Double)] = sensorData

.keyBy(_.id)

// apply stateful FlatMap and set unique ID

.flatMap(new TemperatureAlertFunction(1.1)).uid("alertFunc")

默认情况下,从savepoint中恢复要读取所有保存的state,但更新后可能不需要,则可以做调整。

- 反序列化

序列化的兼容性和任何有state的 operator相关,如果输入和输出被改变,就会影响更新的兼容性。建议将带有支持版本控制的编码的数据类型用作内置DataStream operators with state的输入类型,这样改变就不会有问题。如果之前没有使用serializers with versioned encodings,Flink也能通过TypeSerializer 接口的两个方法实现。详细要另找资料。

Tuning the Performance of Stateful Applications

对于RocksDBStateBackend ,VS是的访问和更新完全反序列化和序列化的;LS访问时需要反序列化所有entries,更新时只需序列化更新的节点,所以如果经常添加state由很少访问,LS优于ValueState[List[X]];MS的访问和更新只涉及被访问的k-v的反序列化和序列化,遍历时,entries是从RocksDB预取的,只有实际访问key或v时才会反序列化。用MapState[X, Y] 优于ValueState[HashMap[X, Y]]

每次函数调用只更新一次状态?Since checkpoints are synchronized with function invocations, multiple state updates do not provide any benefits but can cause additional serialization overhead

Preventing Leaking State

清除无用的key state。这个问题涉及到自定义stateful function和一些内置DataStream API,例如aggregates on a KeyedStream,反正无法定期清理state的函数都要考虑。否则只有key有限或者state不会无限扩大时这些函数才能使用。 count-based的window也有同样问题,time-bassed就没有,因会定时触发清除state。

具有key state的函数只有在收到带有该键的记录时才能访问key state,由于不知道某条数据所否是该key最后一条数据,所以不能直接删除key state。但可以通过回调来进行key state的删除,即过了一段时间,没有该key的信息,就代表该key无用了。实现上通过登记一个timer来定时清除该key的state。目前支持登记timers的有Trigger interface for windows and the ProcessFunction.

// 启动警报,如果两次测量的差距过大,并且如果某个id如果在1小时内没有更新timer,就会触发onTime,并清除该key的state

class StateCleaningTemperatureAlertFunction(val threshold: Double)

extends ProcessFunction[SensorReading, (String, Double, Double)] {

// the keyed state handle for the last temperature

private var lastTempState: ValueState[Double] = _

// the keyed state handle for the last registered timer

private var lastTimerState: ValueState[Long] = _

override def open(parameters: Configuration): Unit = {

// register state for last temperature

val lastTempDescriptor =

new ValueStateDescriptor[Double]("lastTemp", classOf[Double])

// enable queryable state and set its external identifier

lastTempDescriptor.setQueryable("lastTemperature")

lastTempState = getRuntimeContext

.getState[Double](lastTempDescriptor)

// register state for last timer

val timerDescriptor: ValueStateDescriptor[Long] =

new ValueStateDescriptor[Long]("timerState", classOf[Long])

lastTimerState = getRuntimeContext

.getState(timerDescriptor)

}

override def processElement(

in: SensorReading,

ctx: ProcessFunction[SensorReading, (String, Double, Double)]#Context,

out: Collector[(String, Double, Double)]) = {

// get current watermark and add one hour

val checkTimestamp =

ctx.timerService().currentWatermark() + (3600 * 1000)

// register new timer.

// Only one timer per timestamp will be registered. Timers with identical timestamps are deduplicated. That is also the reason why we compute the clean-up time based on the watermark and not on the record timestamp.

ctx.timerService().registerEventTimeTimer(checkTimestamp)

// update timestamp of last timer

lastTimerState.update(checkTimestamp)

// fetch the last temperature from state

val lastTemp = lastTempState.value()

// check if we need to emit an alert

if (lastTemp > 0.0d && (in.temperature / lastTemp) > threshold) {

// temperature increased by more than the threshold

out.collect((in.id, in.temperature, lastTemp))

}

// update lastTemp state

this.lastTempState.update(in.temperature)

}

override def onTimer(

ts: Long,

ctx: ProcessFunction[SensorReading, (String, Double, Double)]#OnTimerContext,

out: Collector[(String, Double, Double)]): Unit = {

// get timestamp of last registered timer

val lastTimer = lastTimerState.value()

// check if the last registered timer fired 因为上面每个数据处理时timer不是被覆盖,而是登记了新的timer。执行期间,PF会保留a list of all registered timers

if (lastTimer != null.asInstanceOf[Long] && lastTimer == ts) {

// clear all state for the key

lastTempState.clear()

lastTimerState.clear()

}

}

}

State Time-To-Live (TTL)(v1.6了解,未完善)

TTL可以分配到key state,如果key state超过TTL设定的时间,而且被读取时.value(),会被清理。collection state能实现entries独立,即一个entries一个TTL。时间目前只支持process。增加这个功能意味着更多内存消耗。不会被checkpoint。

The map state with TTL currently supports null user values only if the user value serializer can handle null values. If the serializer does not support null values, it can be wrapped with NullableSerializer at the cost of an extra byte in the serialized form.

val ttlConfig = StateTtlConfig

.newBuilder(Time.seconds(1))

.setUpdateType(StateTtlConfig.UpdateType.OnCreateAndWrite)

// whether the expired value is returned on read access,其他选项StateTtlConfig.StateVisibility.ReturnExpiredIfNotCleanedUp / ReturnExpiredIfNotCleanedUp

.setStateVisibility(StateTtlConfig.StateVisibility.NeverReturnExpired)

.build

// 其他选项

// returned if still available

setStateVisibility()

val stateDescriptor = new ValueStateDescriptor[String]("text state", classOf[String])

stateDescriptor.enableTimeToLive(ttlConfig)

Queryable State

只支持key-point-queries

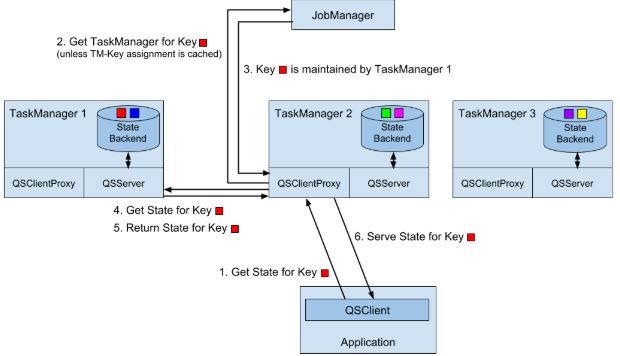

Architecture and Enabling Queryable State

为了开启这个功能,要添加flink-queryable-state-runtime JAR 到TM进程的classpath。This is done by copying it from the ./opt folder of you installation into the ./lib folder. 相关端口和参数设置在./conf/flink-conf.yaml。

Exposing Queryable State

方法1:看上面例子中的open()

方法2:

val tenSecsMaxTemps: DataStream[(String, Double)] = sensorData

// project to sensor id and temperature

.map(r => (r.id, r.temperature))

// compute every 10 seconds the max temperature per sensor

.keyBy(_._1)

.timeWindow(Time.seconds(10))

.max(1)

// store max temperature of the last 10 secs for each sensor in a queryable state.

tenSecsMaxTemps

// key by sensor id

.keyBy(_._1)

.asQueryableState("maxTemperature")

其他重载方法:

asQueryableState(id: String, stateDescriptor: ValueStateDescriptor[T])can be used to configure theValueStatein more detail, e.g., to configure a custom serializer.asQueryableState(id: String, stateDescriptor: ReducingStateDescriptor[T])configures aReducingStateinstead of aValueState. TheReducingStateis also updated for each incoming record. However, in contrast to theValueState, the new record does not replace the existing value but is instead combined with the previous version using the state’sReduceFunction.

Querying State from External Applications

任何JVM-based application可以查询queryable state通过使用QueryableStateClient。添加依赖即可flink-queryable-state-client-java_2.11

当获取到client后,调用getKvState(),参数为JobID of the running application(REST API, the web UI, or the log files), the state identifier, the key for which the state should be fetched, the TypeInformation for the key, and the StateDescriptorof the queried state. 返回结果是 CompletableFuture[S] where S is the type of the state, e.g., ValueState[_] or MapState[_, _]

object TemperatureDashboard {

// assume local setup and TM runs on same machine as client

val proxyHost = "127.0.0.1"

val proxyPort = 9069

// jobId of running QueryableStateJob. can be looked up in logs of running job or the web UI

val jobId = "d2447b1a5e0d952c372064c886d2220a"

// how many sensors to query

val numSensors = 5

// how often to query the state

val refreshInterval = 10000

def main(args: Array[String]): Unit = {

// configure client with host and port of queryable state proxy

val client = new QueryableStateClient(proxyHost, proxyPort)

val futures = new Array[

CompletableFuture[ValueState[(String, Double)]]](numSensors)

val results = new Array[Double](numSensors)

// print header line of dashboard table

val header =

(for (i <- 0 until numSensors) yield "sensor_" + (i + 1))

.mkString("\t| ")

println(header)

// loop forever

while (true) {

// send out async queries

for (i <- 0 until numSensors) {

futures(i) = queryState("sensor_" + (i + 1), client)

}

// wait for results

for (i <- 0 until numSensors) {

results(i) = futures(i).get().value()._2

}

// print result

val line = results.map(t => f"$t%1.3f").mkString("\t| ")

println(line)

// wait to send out next queries

Thread.sleep(refreshInterval)

}

client.shutdownAndWait()

}

def queryState(

key: String,

client: QueryableStateClient)

: CompletableFuture[ValueState[(String, Double)]] = {

client

.getKvState[String, ValueState[(String, Double)], (String, Double)](

JobID.fromHexString(jobId),

"maxTemperature",

key,

Types.STRING,

new ValueStateDescriptor[(String, Double)](

"", // state name not relevant here

createTypeInformation[(String, Double)]))

}

}

参考

Stream Processing with Apache Flink by Vasiliki Kalavri; Fabian Hueske

Flink之Stateful Operators的更多相关文章

- Flink学习笔记:Operators串烧

本文为<Flink大数据项目实战>学习笔记,想通过视频系统学习Flink这个最火爆的大数据计算框架的同学,推荐学习课程: Flink大数据项目实战:http://t.cn/EJtKhaz ...

- Flink - state管理

在Flink – Checkpoint 没有描述了整个checkpoint的流程,但是对于如何生成snapshot和恢复snapshot的过程,并没有详细描述,这里补充 StreamOperato ...

- Flink - Working with State

All transformations in Flink may look like functions (in the functional processing terminology), but ...

- Flink DataStream API Programming Guide

Example Program The following program is a complete, working example of streaming window word count ...

- 3 differences between Savepoints and Checkpoints in Apache Flink

https://mp.weixin.qq.com/s/nQOxsZUZSiPi7Sx40mgwsA 20181104 3 differences between Savepoints and Chec ...

- Flink架构及其工作原理

目录 System Architecture Data Transfer in Flink Event Time Processing State Management Checkpoints, Sa ...

- Flink系统之Table API 和 SQL

Flink提供了像表一样处理的API和像执行SQL语句一样把结果集进行执行.这样很方便的让大家进行数据处理了.比如执行一些查询,在无界数据和批处理的任务上,然后将这些按一定的格式进行输出,很方便的让大 ...

- 9、flink的状态与容错

1.理解State(状态) 1.1.State 对象的状态 Flink中的状态:一般指一个具体的task/operator某时刻在内存中的状态(例如某属性的值) 注意:State和Checkpoint ...

- Flink - DataStream

先看例子, final StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment(); D ...

随机推荐

- Python学习第二阶段,Day2,import导入模块方法和内部原理

怎样导入模块和导入包?? 1.模块定义:代码越来越多的时候,所有代码放在一个py文件无法维护.而将代码拆分成多个py文件,同一个名字的变量互不影响,模块本质上是一个.py文件或者".py&q ...

- linux命令 info

info命令是Linux下info格式的帮助指令. 就内容来说,info页面比man page编写得要更好.更容易理解,也更友好,但man page使用起来确实要更容易得多.一个man page只有一 ...

- plotting and saving over line in paraView

probe -- provides the field values in a particular location in space To save plotoverline to csv fil ...

- 洛谷 4251 [SCOI2015]小凸玩矩阵

[题解] 二分答案+二分图匹配. 先二分最小值Min,然后扫一遍这个矩阵,把满足a[i][j]<=Min的i,j连边,之后跑二分图匹配,如果最大匹配数大于等于n-k+1,当前的Min即是合法的. ...

- 使用lombok提高编码效率-----不用写get set

使用lombok提高编码效率-----不用写get set https://blog.csdn.net/v2sking/article/details/73431364

- BNUOJ 26224 Covered Walkway

Covered Walkway Time Limit: 10000ms Memory Limit: 131072KB This problem will be judged on HDU. Origi ...

- Cx的治疗

题目背景 「Cx的故事」众所周知,Cx是一个宇宙大犇.由于Cx在空中花园失足摔下,导致他那蕴含着无穷智慧的大脑受到了严重的损伤,许多的脑神经断裂.于是,Cx的wife(有么?)决定请巴比伦最好的医师治 ...

- PHP输出缓冲控制 - Output Control 函 应用详解

简介 说到输出缓冲,首先要说的是一个叫做缓冲器(buffer)的东西.举个简单的例子说明他的作用:我们在编辑一篇文档时,在我们没有保存之前,系统是不会向磁盘写入的,而是写到buffer中,当buffe ...

- 这段百度问答,对我相关有对啊!!!----如何获取Windows系统登陆用户名

如何获取Windows系统登陆用户名 http://zhidao.baidu.com/link?url=Hva9PkVwYZv8KSEWftSqTWe8fqM1dhoq59BurnfADmcOvFjF ...

- B. Code For 1 分治

time limit per test 2 seconds memory limit per test 256 megabytes input standard input output standa ...