【论文阅读】End to End Learning for Self-Driving Cars

前言引用

[1] End to End Learning for Self-Driving Cars从这里开始

[1.1] 这个是相关的博客:2016:DRL前沿之:End to End Learning for Self-Driving Cars

[1.2] 其中提到的视频:GTC 2016: Self-Driving Car Demo, Roborace and Wrapping Up (part 11)

摘要

万事从摘要开始:

We trained a convolutional neural network (CNN) to map raw pixels from a single front-facing camera directly to steering commands. This end-to-end approach proved surprisingly powerful. With minimum training data from humans the system learns to drive in traffic on local roads with or without lane markings and on highways. It also operates in areas with unclear visual guidance such as in parking lots and on unpaved roads.

The system automatically learns internal representations of the necessary processing steps such as detecting useful road features with only the human steering angle as the training signal. We never explicitly trained it to detect, for example, the outline of roads.

Compared to explicit decomposition of the problem, such as lane marking detection, path planning, and control, our end-to-end system optimizes all processing steps simultaneously. We argue that this will eventually lead to better performance and smaller systems. Better performance will result because the internal components self-optimize to maximize overall system performance, instead of optimizing human-selected intermediate criteria, e. g., lane detection. Such criteria understandably are selected for ease of human interpretation which doesn't automatically guarantee maximum system performance. Smaller networks are possible because the system learns to solve the problem with the minimal number of processing steps.

We used an NVIDIA DevBox and Torch 7 for training and an NVIDIA DRIVETM PX self-driving car computer also running Torch 7 for determining where to drive. The system operates at 30 frames per second (FPS).

碎碎念:这一篇不是专门的会议或者是期刊论文,所以我觉得看起来没啥难度?毕竟本科毕设的时候看了3、4篇关于slam的 一脸懵逼 真的是一脸懵逼; 这一篇呢,也是因为随意点,所以摘要比以往要长,做的事:直接拿CNN 卷积网络来走,测试场景有晴天、雨天、雾天、黑夜、白天等,一共72个小时的人开数据,论文中公式也不多,非常适合了解end-to-end的第一篇,(所以我其实是偷偷改了改顺序的,从简单的开始比较适合... 初入)

Purpose

1.The primary motivation for this work is to avoid the need to recognize specific human-designed features

2. avoid having to create a collection of "if, then, else" rules 这个好真实哦 hhh

Method

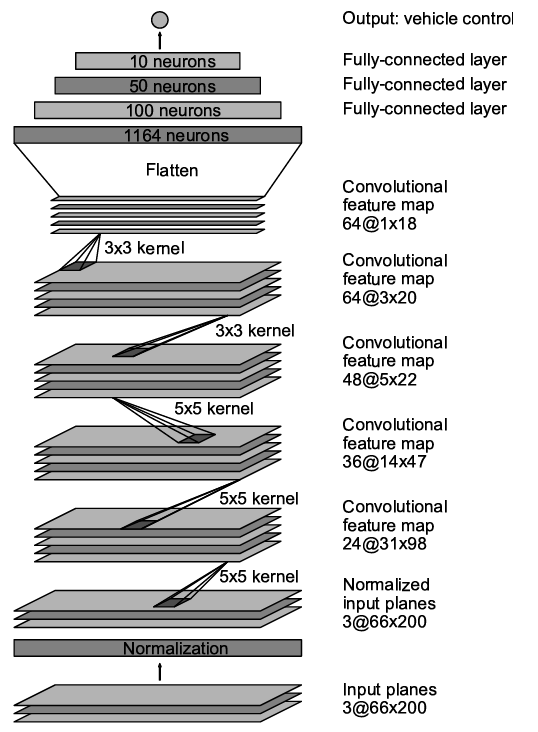

1.首先介绍了CNN -> pattern recognition;这是总图 其实神经网络和卷积学过可能都能看懂?然而我学卷积的时候太快了,基本忘完了 也不影响整体的阅读(但是复现要求肯定还是需要理论彻底)

2.一句话分析了89年的那一篇就是从0开始的那里提到的ALVINN【ALVINN used a fully-connected network which is tiny by today's standard】从这句话里听到了NVIDIA的设备高级感

3.steering command 在这里是以\(\frac{1}{r}\),\(r\)是转弯的半径 用分号的形式是为了避免singularity. 【其实我这里想问为什么嘛不直接以方向盘的旋转角度?】

4. We train the weights of our network to minimize the mean squared error between the steering command output by the network and the command of either the human driver, or the adjusted steering command for off-center and rotated images. 通过系统学习的和人开的图的中心偏移的方差误差进行学习调整steering command【这里我对于学习所获的图表示怀疑,就是系统学习输出的动作,作为图像的输入,怎么得出的动作后的图像从而进行对比? 这一点论文里没有提?在simulation 就说了一个词generates images emmm 这个我就很疑惑】

5.就是对autonomy 的定义公式:

\]

到这里就是最后一步了,都到评价了(人工介入的次数)

6. 是有趣的发现,发现第一二个特征识别的layers学习后的权重输出的特征图 恰巧就是路边边缘,也侧面证实了 确实是按着人的思路去开的(就是我们开车也是第一步识别路边缘,进行跟随路边缘行驶)

Limitation

1.It's not possible to make a clean break between which parts of the network function primarily as feature extractor and which serve as controller. 就是有点分不清哪里是决定特征的哪里是决定动作的(决定性输出 毕竟是端到端嘛)

2.more work is needed to improve the robustness of the network to find methods to verify the robustness, and to improve visualization of the network-internal processing steps. 系统的鲁棒性问题,不过这个点出来有点笼统

以上,遗留的两个问题看我后面能不能回来回答它们了,另外大概的顺序都是从以前的到现在的,这样也好看出一步步的进步。

自己的一些想法

1.和师兄讨论发现,这个点主要是缺乏可解释性,从而让很多学者不敢在实车上进行测试后,在实车上测试end2end都是一大群公司的工程师 - 比如这篇论文NVIDIA 我取的名字是暴力学习hhhh,而end2end这个方向也是一个很大的工程类方向,大是因为他不好划分,比如细致的划分(因为这样我们就又回到传统了)所以怎么把握这个点 emm

2.还有就是limitation提到的第一点,这也就是上一点,有重复之处,cpd哥后面指出了uber那边2020年的论文里有细分,但是还是在end2end 所以那么下一次见

【论文阅读】End to End Learning for Self-Driving Cars的更多相关文章

- 【论文阅读】Deep Mutual Learning

文章:Deep Mutual Learning 出自CVPR2017(18年最佳学生论文) 文章链接:https://arxiv.org/abs/1706.00384 代码链接:https://git ...

- 论文阅读 | Recurrent Attentional Reinforcement Learning for Multi-label Image Recognition

源地址 arXiv:1712.07465: Recurrent Attentional Reinforcement Learning for Multi-label Image Recognition ...

- 论文阅读 Dynamic Graph Representation Learning Via Self-Attention Networks

4 Dynamic Graph Representation Learning Via Self-Attention Networks link:https://arxiv.org/abs/1812. ...

- [论文阅读] A Discriminative Feature Learning Approach for Deep Face Recognition (Center Loss)

原文: A Discriminative Feature Learning Approach for Deep Face Recognition 用于人脸识别的center loss. 1)同时学习每 ...

- 论文阅读 | DeepDrawing: A Deep Learning Approach to Graph Drawing

作者:Yong Wang, Zhihua Jin, Qianwen Wang, Weiwei Cui, Tengfei Ma and Huamin Qu 本文发表于VIS2019, 来自于香港科技大学 ...

- Deep Reinforcement Learning for Dialogue Generation 论文阅读

本文来自李纪为博士的论文 Deep Reinforcement Learning for Dialogue Generation. 1,概述 当前在闲聊机器人中的主要技术框架都是seq2seq模型.但 ...

- 论文阅读笔记 Improved Word Representation Learning with Sememes

论文阅读笔记 Improved Word Representation Learning with Sememes 一句话概括本文工作 使用词汇资源--知网--来提升词嵌入的表征能力,并提出了三种基于 ...

- 论文阅读:Face Recognition: From Traditional to Deep Learning Methods 《人脸识别综述:从传统方法到深度学习》

论文阅读:Face Recognition: From Traditional to Deep Learning Methods <人脸识别综述:从传统方法到深度学习> 一.引 ...

- 【论文阅读】Learning Dual Convolutional Neural Networks for Low-Level Vision

论文阅读([CVPR2018]Jinshan Pan - Learning Dual Convolutional Neural Networks for Low-Level Vision) 本文针对低 ...

- [论文阅读笔记] metapath2vec: Scalable Representation Learning for Heterogeneous Networks

[论文阅读笔记] metapath2vec: Scalable Representation Learning for Heterogeneous Networks 本文结构 解决问题 主要贡献 算法 ...

随机推荐

- .Net RabbitMQ实战指南——进阶(一)

备份交换器 备份交换器,英文名称为Alternate Exchange,简称AE.通过在声明交换器(调用channel.ExchangeDeclare方法)时添加alternate-exchange参 ...

- vulhub-struct2-s2-005

0x00 漏洞原理 s2-005漏洞的起源源于S2-003(受影响版本: 低于Struts 2.0.12),struts2会将http的每个参数名解析为OGNL语句执行(可理解为java代码).O ...

- 四、SSL虚拟证书

沿用练习三,配置基于加密网站的虚拟主机,实现以下目标: 域名为www.c.com 该站点通过https访问 通过私钥.证书对该站点所有数据加密 4.2 方案 源码安装Nginx时必须使用--with- ...

- 彻底删除Docker

yum remove docker \ docker-client \ docker-client-latest \ docker-common \ docker-latest \ docker-la ...

- 谁能干掉了if else

很多人觉得自己写的是业务代码,按照逻辑写下去,再把公用的方法抽出来复用就可以了,设计模式根本就没必要用,更没必要学. 一开始的时候,我也是这么想,直到我遇到... 举个例子 我们先看一个普通的下单拦截 ...

- 【题解】Luogu p2016 战略游戏 (最小点覆盖)

题目描述 Bob喜欢玩电脑游戏,特别是战略游戏.但是他经常无法找到快速玩过游戏的办法.现在他有个问题. 他要建立一个古城堡,城堡中的路形成一棵树.他要在这棵树的结点上放置最少数目的士兵,使得这些士兵能 ...

- OO unit1 summary

Unit 1 summary 一.前言 三周左右的学习,OO第一单元顺利结束了,个人认为有必要写个blog来反思总结一下自己第一单元的学习情况,以便更好地进行后面的学习. 之前从来没有写blog的习惯 ...

- MySQL 为什么使用 B+ 树来作索引?

什么是索引? 所谓的索引,就是帮助 MySQL 高效获取数据的排好序的数据结构.因此,根据索引的定义,构建索引其实就是数据排序的过程. 平时常见的索引数据结构有: 二叉树 红黑树 哈希表 B Tree ...

- Unity il2cpp加密

防市面上百分之九十的破解者,下面是Mono改为il2cpp的面板 点击:File > BuildSettings > PlayerSettings > OtherSettings & ...

- Mac nasm 汇编入门

下载 brew install nasm code SECTION .data msg: db "hello world", 0x0a len: equ $-msg SECTION ...