Distributed Deep Learning

安利一下刘铁岩老师的《分布式机器学习》这本书

以及一个大神的blog:

https://zhuanlan.zhihu.com/p/29032307

https://zhuanlan.zhihu.com/p/30976469

分布式深度学习原理

在很多教程中都有介绍DL training的原理。我们来简单回顾一下:

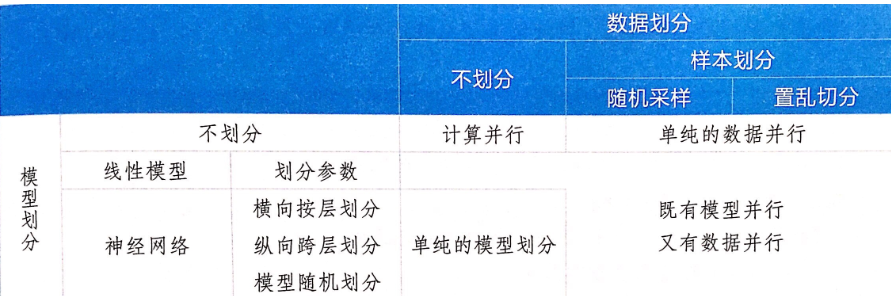

那么如果scale太大,需要分布式呢?分布式机器学习大致有以下几个思路:

- 对于计算量太大的场景(计算并行),可以多线程/多节点并行计算。常用的一个算法就是同步随机梯度下降(synchronous stochastic gradient descent),含义大致相当于K个(K是节点数)mini-batch SGD [ch6.2]

- 对于训练数据太多的场景(数据并行,也是最主要的场景),需要将数据划分到多个节点上训练。每个节点先用本地的数据先训练出一个子模型,同时和其他节点保持通信(比如更新参数)以保证最终可以有效整合来自各个节点的训练结果,并得到全局的ML模型。 [ch6.3]

- 对于模型太大的场景,需要把模型(例如NN中的不同层)划分到不同节点上进行训练。此时不同节点之间可能需要频繁的sync。 [ch6.4]

它们可以总结为下图:

以数据并行为例,整个pipeline如下:

- 划分数据到不同节点

- 每个节点单机训练

- 节点之间的通信以及整个拓扑结构设计 【ch7】

- 多个训练好的子模型的聚合 【ch8】

Distributed DL model

目前工业界常见的Distributed DL方法有以下三种:【ch7.3】

1. PyTorch: AllReduce Model

MPI is a common method of distributed computing framework to implement distributed machine learning system. The main idea is to use AllReduce API to synchronize message and it also supports operations which satisfy Reduce rules. The common polymerization method for machine learning models is addition and average, so AllReduce logic is suitable to deal with it. The standard API of AllReduce have various implemented methods.

AllReduce mode is simple and convenient which is beneficial for paralleling training in synchronization algorithm. Till now, there are many deep learning systems still use it to complete communication function in distributed training, such as gloo communication library from Caffe2, DeepSpeech system in Baidu and NCCL communication library in Nvidia.

However, AllReduce can only support synchronizing communication and the logic of all working nodes are same which means every working node should handle completed model. It is unsuitable for large scale model.

Limitation of AllReduce:

When working nodes in system is increasing and the computing is unbalance, the training speed is decided by the slowest node in this system; once a working node does not work, the whole system has to stop.

Also, when the number of parameters of models in machine learning task is too large, it will exceed the memory capacity of single machine.

2. MXNet: Parameter Server Model

In the parameter server framework, all nodes in system are divided into worker and server logically. The main task of each worker is to take charge of local training task and communicate with parameter server through server interface. In this way, they can obtain latest model parameters from parameter server or send latest local training model to parameter server. With this parameter server, machine learning can be synchronous or asynchronous, or even mixed.

3. TensorFlow: Dataflow Model

Computational graph model in TensorFlow: Computation is described as a directed acyclic data flow graph. The nodes in the figure represent compute nodes and the edges represent data flow.

Distributed machine learning system based on data flow draws on the flexibility of DAG-based big data processing system, it describes the computing task as a directed acyclic data flow graph. The nodes in the figure represent the operations on the data and the edges in the figure represent the dependencies of the operation.

The system automatically provides distributed execution of the dataflow graph, so the user cares about how to design the appropriate dataflow graph to represent the algorithmic logic that is to be executed.

Below, it will take a data flow diagram representing the data flow system in TensorFlow as an example to introduce a typical data flow diagram.

分布式机器学习算法

【ch9】

Distributed Deep Learning的更多相关文章

- (转)分布式深度学习系统构建 简介 Distributed Deep Learning

HOME ABOUT CONTACT SUBSCRIBE VIA RSS DEEP LEARNING FOR ENTERPRISE Distributed Deep Learning, Part ...

- 英特尔深度学习框架BigDL——a distributed deep learning library for Apache Spark

BigDL: Distributed Deep Learning on Apache Spark What is BigDL? BigDL is a distributed deep learning ...

- CoRR 2018 | Horovod: Fast and Easy Distributed Deep Learning in Tensorflow

将深度学习模型的训练从单GPU扩展到多GPU主要面临以下问题:(1)训练框架必须支持GPU间的通信,(2)用户必须更改大量代码以使用多GPU进行训练.为了克服这些问题,本文提出了Horovod,它通过 ...

- Install PaddlePaddle (Parallel Distributed Deep Learning)

Step 1: Install docker on your linux system (My linux is fedora) https://docs.docker.com/engine/inst ...

- NeurIPS 2017 | TernGrad: Ternary Gradients to Reduce Communication in Distributed Deep Learning

在深度神经网络的分布式训练中,梯度和参数同步时的网络开销是一个瓶颈.本文提出了一个名为TernGrad梯度量化的方法,通过将梯度三值化为\({-1, 0, 1}\)来减少通信量.此外,本文还使用逐层三 ...

- 【深度学习Deep Learning】资料大全

最近在学深度学习相关的东西,在网上搜集到了一些不错的资料,现在汇总一下: Free Online Books by Yoshua Bengio, Ian Goodfellow and Aaron C ...

- (转) Awesome Deep Learning

Awesome Deep Learning Table of Contents Free Online Books Courses Videos and Lectures Papers Tutori ...

- 机器学习(Machine Learning)&深度学习(Deep Learning)资料

<Brief History of Machine Learning> 介绍:这是一篇介绍机器学习历史的文章,介绍很全面,从感知机.神经网络.决策树.SVM.Adaboost到随机森林.D ...

- 机器学习(Machine Learning)&深入学习(Deep Learning)资料

<Brief History of Machine Learning> 介绍:这是一篇介绍机器学习历史的文章,介绍很全面,从感知机.神经网络.决策树.SVM.Adaboost 到随机森林. ...

随机推荐

- Python 爬虫十六式 - 第五式:BeautifulSoup-美味的汤

BeautifulSoup 美味的汤 学习一时爽,一直学习一直爽! Hello,大家好,我是Connor,一个从无到有的技术小白.上一次我们说到了 Xpath 的使用方法.Xpath 我觉得还是 ...

- C++ - 操作运算符

一.操作运算符 操作运算符:在C++中,编译器有能力将数据.对象和操作符共同组成表达式,解释为对全局或成员函数的调用 该全局或成员函数被称为操作符函数,程序员可以通过重定义函数操作符函数,来达到自己想 ...

- 以Emacs Org mode为核心的任务管理方案

前言 如今用于任务管理的方法与工具越来越多,如纸笔系统.日历与任务列表.Emacs Org mode系统,以及移动设备上的诸多应用.这些解决方案各具特色,在一定程度上能够形成互补作用.但是,它们彼此之 ...

- BZOJ 2716 [Violet 3]天使玩偶 (CDQ分治、树状数组)

题目链接: https://www.lydsy.com/JudgeOnline/problem.php?id=2716 怎么KD树跑得都那么快啊..我写的CDQ分治被暴虐 做四遍CDQ分治,每次求一个 ...

- EMC存储同时分配空间到两台LINUX服务器路径不一致导致双机盘符大小不一致

操作系统:Centos linux6.6 当我们从EMC存储上划分空间同时分配给两台或者多台服务器上时,有的时候会出现在服务器上所生成的磁盘路径是不一致的,这样就会导致盘符名称不一致或者是盘符对应的大 ...

- Selenium 上手:Selenium扫盲区

Selenium 自述Selenium 是由Jason Huggins软件工程师编写的一个开源的浏览器自动化测试框架.主要用于测试自动化Web UI应用程序. Selenium 工作原理通过编程语言( ...

- 什么是webpack以及为什么使用它

什么是webpack以及为什么使用它 新建 模板 小书匠 在ES6之前,我们要想进行模块化开发,就必须借助于其他的工具.因为开发时用的是高级语法开发,效率非常高,但很可惜的是,浏览器未必会支持或认识 ...

- 【web前端】面题整理(2)

今天是520,单身狗在这里祝各位520快乐! DOM节点统计 DOM 的体积过大会影响页面性能,假如你想在用户关闭页面时统计(计算并反馈给服务器)当前页面中元素节点的数量总和.元素节点的最大嵌套深度以 ...

- Hello World!----html

最近要做一个小网站,今晚想起来还是先看看前端终于抑制住惰性,开始看了. 看了一下html,写了个hello world.老实讲,我竟然还有些小激动 <html> <hea ...

- R-CNN, Fast R-CNN, Faster R-CNN, Mask R-CNN

最近在看 Mask R-CNN, 这个分割算法是基于 Faster R-CNN 的,决定看一下这个 R-CNN 系列论文,好好理一下 R-CNN 2014 1. 论文 Rich feature hie ...