Scraping Lianjia second-hand housing listings for Weifang with a Python crawler


Requirements analysis

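Scrape the second-hand housing listings shown on the pages for each county, county-level city and district of Weifang, extract the required fields, and save the results locally.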

Workflow design

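- Identify the target website URL (https://wf.lianjia.com/)

- Decide exactly which listing details to scrape (the field names)

- Core of the Python crawler: the requests and lxml libraries

- Store the scraped data in a CSV file or a database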
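As a small illustration of the first step, the list pages this project crawls follow the pattern `https://wf.lianjia.com/ershoufang/<district>/pg<page>`, and each district has its own number of pages. The helper `build_list_urls` below is hypothetical (not part of the original project); the district slugs and page counts come from the comments in the original crawler code and may no longer match the live site.

```python
from typing import Dict, List

# URL pattern of a Lianjia Weifang list page, as used by the crawler in this post
BASE = 'https://wf.lianjia.com/ershoufang/{slug}/pg{page}'


def build_list_urls(page_counts: Dict[str, int], page: int) -> List[str]:
    """Return the list-page URLs for `page` in every district that still has that many pages."""
    return [BASE.format(slug=slug, page=page)
            for slug, max_page in page_counts.items()
            if page <= max_page]


if __name__ == '__main__':
    # Hypothetical subset of districts; counts taken from the original code comments
    counts = {'qingzhoushi': 40, 'hantingqu': 76, 'weichengqu': 100}
    for url in build_list_urls(counts, 50):
        print(url)
```

Keeping the page counts in one dictionary means a district that runs out of pages simply drops out of the list, instead of needing a separate branch per page range.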

Implementation

Project directory

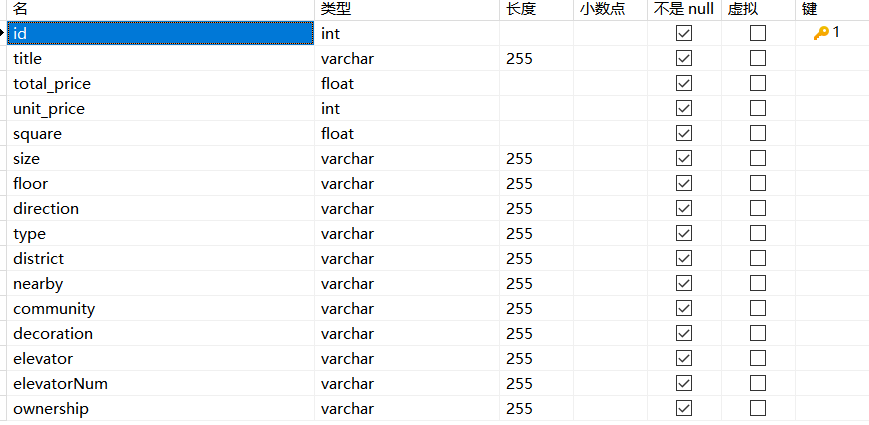

1. Create the data table in the database

I use MySQL 8.0 on my machine, with Navicat as the GUI client.

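Database field mapping:

- id: record number
- title: listing title
- total_price: total price
- unit_price: price per square metre
- square: floor area
- size: layout
- floor: floor
- direction: orientation
- type: building type
- BuildTime: holding period of the property
- district: district
- nearby: neighbourhood
- community: residential complex
- decoration: renovation
- elevator: whether there is an elevator
- elevatorNum: elevator-to-household ratio
- ownership: transaction ownership type

The figure shows the field names, data types, lengths and other details.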
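The table definition itself is only given as a screenshot. As a hedged sketch, the helper below generates a MySQL `CREATE TABLE` statement for the table `wf` that the insert code writes to. The column types and lengths (`VARCHAR(255)` for every scraped field, an `AUTO_INCREMENT` integer `id`) are assumptions, not the author's actual schema; the resulting string could be passed to `cursor.execute` on a pymysql connection.

```python
# Columns written by write_db, in the same order as its INSERT statement
COLUMNS = ['title', 'total_price', 'unit_price', 'square', 'size', 'floor', 'direction', 'type',
           'BuildTime', 'district', 'nearby', 'community', 'decoration', 'elevator',
           'elevatorNum', 'ownership']


def create_table_sql(table: str, columns: list, length: int = 255) -> str:
    """Build a MySQL CREATE TABLE statement; every column is an assumed VARCHAR(length)."""
    # Backticks guard names such as `type` that are also SQL keywords
    cols = ',\n'.join(f'  `{c}` VARCHAR({length}) NULL' for c in columns)
    return (f'CREATE TABLE IF NOT EXISTS `{table}` (\n'
            f'  `id` INT NOT NULL AUTO_INCREMENT,\n'
            f'{cols},\n'
            f'  PRIMARY KEY (`id`)\n'
            f') DEFAULT CHARSET=utf8mb4;')


if __name__ == '__main__':
    print(create_table_sql('wf', COLUMNS))
```

Storing prices and areas as text keeps the insert simple because the crawler scrapes them as strings; converting them to numbers can happen later during analysis.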

2. Custom data-storage functions

This code goes in Spider_wf.py.

The write_csv function appends a record to a CSV file, and the write_db function inserts a record into the database.


```python
import csv
import os

import pymysql

# Field order shared by the CSV file and the MySQL table `wf`
FIELDNAMES = ['title', 'total_price', 'unit_price', 'square', 'size', 'floor', 'direction', 'type',
              'BuildTime', 'district', 'nearby', 'community', 'decoration', 'elevator', 'elevatorNum', 'ownership']


# Write one record to the CSV file
def write_csv(example_1):
    filename = '二手房数据.csv'
    write_header = not os.path.exists(filename)  # add a header row only when the file is first created
    # `with` closes the file after each write; the original never closed it
    with open(filename, mode='a', encoding='utf-8', newline='') as csvfile:
        writer = csv.DictWriter(csvfile, fieldnames=FIELDNAMES)
        if write_header:
            writer.writeheader()
        writer.writerow(example_1)


# Write one record to the MySQL database
def write_db(example_2):
    conn = pymysql.connect(host='127.0.0.1', port=3306, user='changziru',
                           password='ru123321', database='secondhouse_wf', charset='utf8mb4')
    try:
        # Missing keys default to '' (total_price to '0'); keys present with value None are stored as NULL
        values = [example_2.get(name, '0' if name == 'total_price' else '') for name in FIELDNAMES]
        with conn.cursor() as cursor:
            cursor.execute('insert into wf (title, total_price, unit_price, square, size, floor, direction, type, '
                           'BuildTime, district, nearby, community, decoration, elevator, elevatorNum, ownership) '
                           'values (%s, %s, %s, %s, %s, %s, %s, %s, %s, %s, %s, %s, %s, %s, %s, %s)',
                           values)
        conn.commit()  # commit the insert
    finally:
        conn.close()  # close the connection even if the insert fails
```
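To try the insert logic without a running MySQL server, one option is to send the same parameterized `INSERT` to an in-memory SQLite database. This is a swapped-in technique for local testing only: SQLite uses `?` placeholders where pymysql uses `%s`, and the function name `write_db_sqlite` is hypothetical.

```python
import sqlite3

FIELDNAMES = ['title', 'total_price', 'unit_price', 'square', 'size', 'floor', 'direction', 'type',
              'BuildTime', 'district', 'nearby', 'community', 'decoration', 'elevator', 'elevatorNum', 'ownership']


def write_db_sqlite(conn: sqlite3.Connection, record: dict) -> None:
    """Insert one scraped record into table wf; missing keys become NULL."""
    placeholders = ', '.join('?' * len(FIELDNAMES))  # "?, ?, ..., ?" (16 placeholders)
    sql = f'INSERT INTO wf ({", ".join(FIELDNAMES)}) VALUES ({placeholders})'
    conn.execute(sql, [record.get(name) for name in FIELDNAMES])
    conn.commit()


if __name__ == '__main__':
    conn = sqlite3.connect(':memory:')
    # SQLite allows untyped columns, which is enough for a quick test
    conn.execute(f'CREATE TABLE wf (id INTEGER PRIMARY KEY AUTOINCREMENT, {", ".join(FIELDNAMES)})')
    write_db_sqlite(conn, {'title': 'sample listing', 'total_price': '120'})
    print(conn.execute('SELECT title, total_price, ownership FROM wf').fetchall())
```

Passing the connection in, rather than opening one per record as write_db does, would also let a single connection serve the whole crawl.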

3. Implementing the crawler

This code goes in lianjia_house.py and calls the write_csv and write_db functions from the project's Spider_wf.py.


```python
# Scrape the detail page of every Lianjia second-hand listing
import time
from random import choice, randint

import requests
from lxml import etree

from secondhouse_spider.Spider_wf import write_csv, write_db

# User-Agent strings that imitate real browsers
USER_AGENTS = [
    "Mozilla/4.0 (compatible; MSIE 6.0; Windows NT 5.1; SV1; AcooBrowser; .NET CLR 1.1.4322; .NET CLR 2.0.50727)",
    "Mozilla/4.0 (compatible; MSIE 7.0; Windows NT 6.0; Acoo Browser; SLCC1; .NET CLR 2.0.50727; Media Center PC 5.0; .NET CLR 3.0.04506)",
    "Mozilla/4.0 (compatible; MSIE 7.0; AOL 9.5; AOLBuild 4337.35; Windows NT 5.1; .NET CLR 1.1.4322; .NET CLR 2.0.50727)",
    "Mozilla/5.0 (Windows; U; MSIE 9.0; Windows NT 9.0; en-US)",
    "Mozilla/5.0 (compatible; MSIE 9.0; Windows NT 6.1; Win64; x64; Trident/5.0; .NET CLR 3.5.30729; .NET CLR 3.0.30729; .NET CLR 2.0.50727; Media Center PC 6.0)",
    "Mozilla/5.0 (compatible; MSIE 8.0; Windows NT 6.0; Trident/4.0; WOW64; Trident/4.0; SLCC2; .NET CLR 2.0.50727; .NET CLR 3.5.30729; .NET CLR 3.0.30729; .NET CLR 1.0.3705; .NET CLR 1.1.4322)",
    "Mozilla/4.0 (compatible; MSIE 7.0b; Windows NT 5.2; .NET CLR 1.1.4322; .NET CLR 2.0.50727; InfoPath.2; .NET CLR 3.0.04506.30)",
    "Mozilla/5.0 (Windows; U; Windows NT 5.1; zh-CN) AppleWebKit/523.15 (KHTML, like Gecko, Safari/419.3) Arora/0.3 (Change: 287 c9dfb30)",
    "Mozilla/5.0 (X11; U; Linux; en-US) AppleWebKit/527+ (KHTML, like Gecko, Safari/419.3) Arora/0.6",
    "Mozilla/5.0 (Windows; U; Windows NT 5.1; en-US; rv:1.8.1.2pre) Gecko/20070215 K-Ninja/2.1.1",
    "Mozilla/5.0 (Windows; U; Windows NT 5.1; zh-CN; rv:1.9) Gecko/20080705 Firefox/3.0 Kapiko/3.0",
    "Mozilla/5.0 (X11; Linux i686; U;) Gecko/20070322 Kazehakase/0.4.5",
    "Mozilla/5.0 (X11; U; Linux i686; en-US; rv:1.9.0.8) Gecko Fedora/1.9.0.8-1.fc10 Kazehakase/0.5.6",
    "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/535.11 (KHTML, like Gecko) Chrome/17.0.963.56 Safari/535.11",
    "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_7_3) AppleWebKit/535.20 (KHTML, like Gecko) Chrome/19.0.1036.7 Safari/535.20",
    "Opera/9.80 (Macintosh; Intel Mac OS X 10.6.8; U; fr) Presto/2.9.168 Version/11.52",
]

# XPath of each field on a listing's detail page (same expressions as before, one per field)
DETAIL_XPATHS = {
    'title': '//div[@class="title"]/h1/text()',  # 1 headline
    'total_price': '//span[@class="total"]/text()',  # 2 total price
    'unit_price': '//span[@class="unitPriceValue"]/text()',  # 3 unit price
    'square': '//div[@class="area"]/div[@class="mainInfo"]/text()',  # 4 floor area
    'size': '//div[@class="base"]/div[@class="content"]/ul/li[1]/text()',  # 5 layout
    'floor': '//div[@class="base"]/div[@class="content"]/ul/li[2]/text()',  # 6 floor
    'direction': '//div[@class="type"]/div[@class="mainInfo"]/text()',  # 7 orientation
    'type': '//div[@class="area"]/div[@class="subInfo"]/text()',  # 8 building type
    'BuildTime': '//div[@class="transaction"]/div[@class="content"]/ul/li[5]/span[2]/text()',  # 9 holding period
    'district': '//div[@class="areaName"]/span[@class="info"]/a[1]/text()',  # 10 district
    'nearby': '//div[@class="areaName"]/span[@class="info"]/a[2]/text()',  # 11 neighbourhood
    'community': '//div[@class="communityName"]/a[1]/text()',  # 12 residential complex
    'decoration': '//div[@class="base"]/div[@class="content"]/ul/li[9]/text()',  # 13 renovation
    'elevator': '//div[@class="base"]/div[@class="content"]/ul/li[11]/text()',  # 14 elevator
    'elevatorNum': '//div[@class="base"]/div[@class="content"]/ul/li[10]/text()',  # 15 elevator-to-household ratio
    'ownership': '//div[@class="transaction"]/div[@class="content"]/ul/li[2]/span[2]/text()',  # 16 transaction ownership
}


def random_headers():
    # Pick a random User-Agent per request; the original built a headers dict but never sent it
    return {'User-Agent': choice(USER_AGENTS), 'Connection': 'close'}


class SpiderFunc:
    def __init__(self):
        self.count = 0  # number of listings scraped so far

    def spider(self, url_list):
        for sh in url_list:
            response = requests.get(url=sh, params={'param': '1'}, headers=random_headers(), timeout=10).text
            tree = etree.HTML(response)
            li_list = tree.xpath('//ul[@class="sellListContent"]/li[@class="clear LOGVIEWDATA LOGCLICKDATA"]')
            for li in li_list:
                # URL of this listing's detail page
                href = li.xpath('.//div[@class="title"]/a/@href')
                if not href:
                    continue
                try:
                    # Request the detail page
                    detail_response = requests.get(url=href[0], headers=random_headers(), timeout=10).text
                except Exception as e:
                    time.sleep(randint(15, 30))  # random delay before moving on
                    print(repr(e))  # print the exception
                    continue
                detail_tree = etree.HTML(detail_response)
                item = {}
                for field, xpath in DETAIL_XPATHS.items():
                    values = detail_tree.xpath(xpath)
                    item[field] = values[0] if values else None  # None when the field is missing
                self.count += 1
                print(self.count, item['title'])
                write_csv(item)  # save the record to the CSV file
                write_db(item)  # save the record to MySQL


# Number of list pages per district (slug -> pages), from the original code; the loop stops at page 100
DISTRICT_PAGES = {
    'qingzhoushi': 40,  # Qingzhou City
    'hantingqu': 76,  # Hanting District
    'jingjijishukaifaqu2': 85,  # Economic and Technological Development Zone
    'shouguangshi': 95,  # Shouguang City
    'weichengqu': 100,  # Weicheng District
    'fangziqu': 100,  # Fangzi District
    'kuiwenqu': 100,  # Kuiwen District
    'gaoxinjishuchanyekaifaqu': 100,  # High-tech Industrial Development Zone
}

if __name__ == '__main__':
    spider = SpiderFunc()  # a single instance, so the counter is not reset on every page
    for page in range(1, 101):
        # Only visit districts that still have a page with this number
        list_wf = ['https://wf.lianjia.com/ershoufang/' + slug + '/pg' + str(page)
                   for slug, max_page in DISTRICT_PAGES.items() if page <= max_page]
        spider.spider(list_wf)
```
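When a detail request fails, the crawler sleeps 15 to 30 seconds and skips that listing. A hedged alternative is to retry a few times with an increasing delay before giving up. `fetch_with_retry` is a hypothetical helper, not part of the original project; `fetch` is any callable that takes a URL and returns page text, for example `lambda u: requests.get(u, headers=..., timeout=10).text`.

```python
import random
import time
from typing import Callable, Optional


def fetch_with_retry(fetch: Callable[[str], str], url: str, retries: int = 3,
                     base_delay: float = 15.0,
                     sleep: Callable[[float], None] = time.sleep) -> Optional[str]:
    """Call fetch(url), retrying with exponential backoff plus jitter; return None if every attempt fails."""
    for attempt in range(retries):
        try:
            return fetch(url)
        except Exception as e:  # catch everything, as the original crawler does
            print(f'attempt {attempt + 1} failed: {e!r}')
            if attempt < retries - 1:
                # The delay doubles after each failure (15 s, 30 s, ...) plus up to 50% random jitter
                delay = base_delay * (2 ** attempt)
                sleep(delay + random.uniform(0, delay / 2))
    return None
```

Inside `SpiderFunc.spider`, the `try/except` around the detail request could then become a call to this helper followed by `if detail_response is None: continue`. Injecting `sleep` keeps the helper testable without actually waiting.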

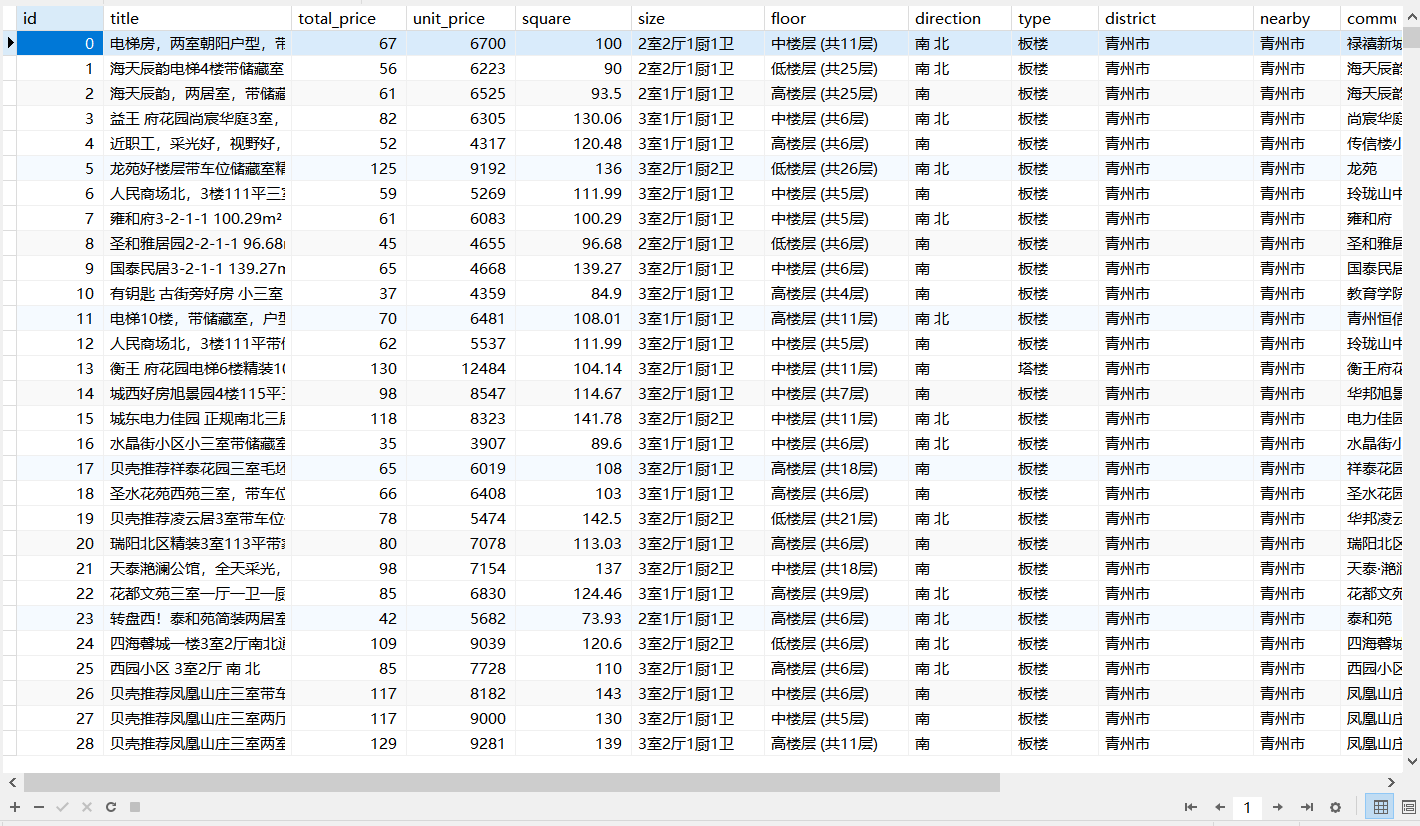

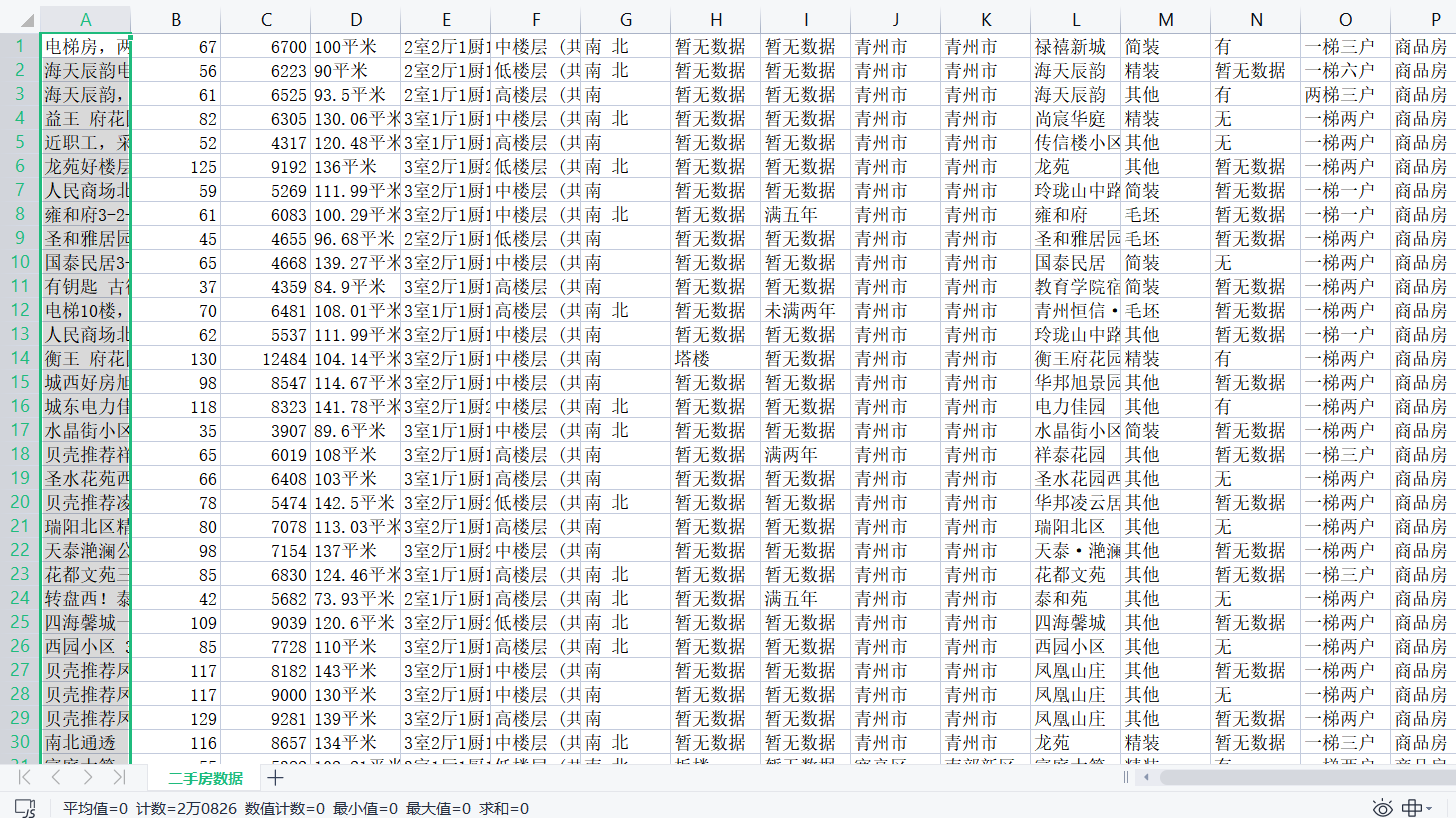

4. Results

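A total of 20,826 records were collected.

Because the data in my database was going to be used for analysis, I preprocessed it, which left 18,031 records.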
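The preprocessing steps that reduced 20,826 records to 18,031 are not described. Purely as an illustration, assuming the goal is to drop duplicate listings and rows without a total price and to turn text fields into numbers, a cleaning pass might look like this. The dedup key (`title` plus `community`) and the assumed raw formats (`'89.5平米'` for area, `'12,345'` for unit price) are assumptions to check against your own data.

```python
import re
from typing import Dict, Iterable, List, Optional

NUMBER = re.compile(r'\d+(?:\.\d+)?')


def to_number(text: Optional[str]) -> Optional[float]:
    """Extract the first number from a scraped string, e.g. '89.5平米' -> 89.5; None if there is none."""
    if not text:
        return None
    match = NUMBER.search(text.replace(',', ''))
    return float(match.group()) if match else None


def clean(rows: Iterable[Dict[str, str]]) -> List[Dict[str, object]]:
    """Drop rows without a total price and repeated (title, community) pairs; parse numeric fields."""
    seen = set()
    result = []
    for row in rows:
        key = (row.get('title'), row.get('community'))
        total = to_number(row.get('total_price'))
        if total is None or key in seen:
            continue
        seen.add(key)
        result.append({**row,
                       'total_price': total,
                       'unit_price': to_number(row.get('unit_price')),
                       'square': to_number(row.get('square'))})
    return result
```

Rows could come from `csv.DictReader` over `二手房数据.csv`; if the file has no header row, pass the field names through its `fieldnames=` argument.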
