Linear Decoders

Sparse Autoencoder Recap

In the sparse autoencoder, we had 3 layers of neurons: an input layer, a hidden layer and an output layer. In our previous description of autoencoders (and of neural networks), every neuron in the neural network used the same activation function. In these notes, we describe a modified version of the autoencoder in which some of the neurons use a different activation function. This will result in a model that is sometimes simpler to apply, and can also be more robust to variations in the parameters.

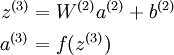

Recall that each neuron (in the output layer) computed the following:

where a(3) is the output. In the autoencoder, a(3) is our approximate reconstruction of the input x = a(1).

Because we used a sigmoid activation function for f(z(3)), we needed to constrain or scale the inputs to be in the range[0,1], since the sigmoid function outputs numbers in the range [0,1].

引入 —— 相同的activation function ,非线性映射会导致输入和输出不等

Linear Decoder

One easy fix for this problem is to set a(3) = z(3). Formally, this is achieved by having the output nodes use an activation function that's the identity function f(z) = z, so that a(3) = f(z(3)) = z(3). 输出结点不适用sigmoid 函数

This particular activation function

is called the linear activation function。Note however that in the hidden layer of the network, we still use a sigmoid (or tanh) activation function, so that the hidden unit activations are given by (say)

, where

is the sigmoid function, x is the input, and W(1) andb(1) are the weight and bias terms for the hidden units. It is only in the output layer that we use the linear activation function.

An autoencoder in this configuration--with a sigmoid (or tanh) hidden layer and a linear output layer--is called a linear decoder.

In this model, we have

. Because the output

is a now linear function of the hidden unit activations, by varying W(2), each output unit a(3) can be made to produce values greater than 1 or less than 0 as well. This allows us to train the sparse autoencoder real-valued inputs without needing to pre-scale every example to a specific range.

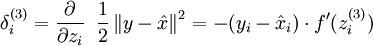

Since we have changed the activation function of the output units, the gradients of the output units also change. Recall that for each output unit, we had set set the error terms as follows:

where y = x is the desired output,

is the output of our autoencoder, and

is our activation function. Because in the output layer we now have f(z) = z, that implies f'(z) = 1 and thus the above now simplifies to:

output结点

Of course, when using backpropagation to compute the error terms for the hidden layer:

hidden结点

Because the hidden layer is using a sigmoid (or tanh) activation f, in the equation above

should still be the derivative of the sigmoid (or tanh) function.

Linear Decoders的更多相关文章

- (六)6.16 Neurons Networks linear decoders and its implements

Sparse AutoEncoder是一个三层结构的网络,分别为输入输出与隐层,前边自编码器的描述可知,神经网络中的神经元都采用相同的激励函数,Linear Decoders 修改了自编码器的定义,对 ...

- CS229 6.16 Neurons Networks linear decoders and its implements

Sparse AutoEncoder是一个三层结构的网络,分别为输入输出与隐层,前边自编码器的描述可知,神经网络中的神经元都采用相同的激励函数,Linear Decoders 修改了自编码器的定义,对 ...

- Unsupervised Feature Learning and Deep Learning(UFLDL) Exercise 总结

7.27 暑假开始后,稍有时间,“搞完”金融项目,便开始跑跑 Deep Learning的程序 Hinton 在Nature上文章的代码 跑了3天 也没跑完 后来Debug 把batch 从200改到 ...

- Deep learning:三十八(Stacked CNN简单介绍)

http://www.cnblogs.com/tornadomeet/archive/2013/05/05/3061457.html 前言: 本节主要是来简单介绍下stacked CNN(深度卷积网络 ...

- [UFLDL] Basic Concept

博客内容取材于:http://www.cnblogs.com/tornadomeet/archive/2012/06/24/2560261.html 参考资料: UFLDL wiki UFLDL St ...

- [UFLDL] Dimensionality Reduction

博客内容取材于:http://www.cnblogs.com/tornadomeet/archive/2012/06/24/2560261.html Deep learning:三十五(用NN实现数据 ...

- Deep Learning 教程翻译

Deep Learning 教程翻译 非常激动地宣告,Stanford 教授 Andrew Ng 的 Deep Learning 教程,于今日,2013年4月8日,全部翻译成中文.这是中国屌丝军团,从 ...

- ICLR 2013 International Conference on Learning Representations深度学习论文papers

ICLR 2013 International Conference on Learning Representations May 02 - 04, 2013, Scottsdale, Arizon ...

- Deep Learning基础--线性解码器、卷积、池化

本文主要是学习下Linear Decoder已经在大图片中经常采用的技术convolution和pooling,分别参考网页http://deeplearning.stanford.edu/wiki/ ...

随机推荐

- angular.js高级程序设计书本开头配置环境出错,谁能给解答一下

server.jsvar connect=require('connect');serveStatic=require('serve-static');var app=connect();app.us ...

- Linux环境下源码安装PostgreSQL

1.下载PostgreSQL源码包,并保存到Linux操作系统的一个目录下 2.解压PostgreSQL源码包 :tar zxvf postgresql-9.2.4.tar.gz 或 tar jxvf ...

- iview中 ...用法

1. 2. 3. 4.可以将divs转为数组解构 5. 解构 6.作为函数的参数 7.作为参数遍历

- datable

$("#table_d").append("<table id='dmglTable' class='table table-striped table-hover ...

- windos下安装多个mysql服务

最近需要使用Mysql制造大量数据,需要多个Mysql服务器.一开始的解决方案是使用多个windows机器.实体机不够,则用虚拟机来搞.但,,,,安装多个虚拟机…….好吧, 在网上查了下,有使用单个机 ...

- POJ2976 Dropping tests(01分数规划)

题意 给你n次测试的得分情况b[i]代表第i次测试的总分,a[i]代表实际得分. 你可以取消k次测试,得剩下的测试中的分数为 问分数的最大值为多少. 题解 裸的01规划. 然后ans没有清0坑我半天. ...

- IDEA使用GIT 上传到GitHub

1.下载Git https://www.git-scm.com/download/ 2.安装 3.IDEA配置Git(设置Git路径,点击Test),如下代表成功 4.创建仓库 5.add 6.pus ...

- 用eclipse 检索SVN 上 myEclipse 建的web项后,成java项目解决方法

用eclipse 检索SVN 上 myEclipse 建的web项后,成java项目解决方法 在网上找了非常多,都无论用. 说添加.project 文件几个属性.但我发现里面都有,在我这里无论什么用. ...

- 设计模式 - 组合模式(composite pattern) 迭代器(iterator) 具体解释

组合模式(composite pattern) 迭代器(iterator) 具体解释 本文地址: http://blog.csdn.net/caroline_wendy 參考组合模式(composit ...

- 网络芯片应用:GPS公交车行驶记录仪

项目描写叙述 佛罗里达大学学生 Miles Moody 使用WIZnet W5200以太网插板及Arduino Nano剖析了来自一个当地网页服务的HTML代码,并讲述了他每天带着公交车实时GPS坐标 ...