[CV Knowledge Study] Early stopping, regularization, fine-tuning, and some other tricks worth knowing

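Deep learning has quite a few tricks, and they are often genuinely useful, so it is worth knowing some of them. The normalization and initialization covered in the previous post are tricks of this kind; below I summarize some more that I picked up from the Deep Learning Summer School, Montreal 2016. Corrections from passing experts are welcome.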

Early stopping

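Early stopping is easy to understand: training is halted at the point where the validation error stops falling, before it starts to rise. Incidentally, splitting the dataset into train, validation, and test sets can be counted as a trick too. A single figure makes this clear.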
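The stopping rule can be sketched in a few lines. This is a minimal illustration, not taken from the summer school material: the `patience` parameter (how many non-improving epochs to tolerate) and the validation curve are assumptions made up for the example.

```python
def early_stopping_epoch(val_errors, patience=3):
    """Return (best_epoch, stop_epoch) for a sequence of per-epoch validation errors.

    Training stops once the validation error has failed to improve for
    `patience` consecutive epochs; `best_epoch` is the checkpoint to keep.
    """
    best_error = float("inf")
    best_epoch = -1
    epochs_without_improvement = 0
    for epoch, error in enumerate(val_errors):
        if error < best_error:
            best_error = error
            best_epoch = epoch
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                return best_epoch, epoch
    # The error never stalled for `patience` epochs: train to the end.
    return best_epoch, len(val_errors) - 1


# Hypothetical validation curve: it falls, then rises as the model overfits.
val_errors = [0.90, 0.70, 0.55, 0.48, 0.45, 0.47, 0.50, 0.56, 0.63]
best_epoch, stop_epoch = early_stopping_epoch(val_errors, patience=3)
print(best_epoch, stop_epoch)  # prints "4 7"
```

In practice the weights saved at `best_epoch` are the ones to restore, since the later epochs have already started to overfit.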

L1 and L2 regularization

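I first met regularization while studying the regression models in PRML. I did not fully understand it then, but now I can explain the underlying principle in detail. (The rest of this article is written in English.)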

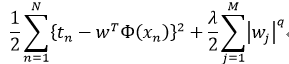

To begin, let us take a closer look at L1 and L2 regularization. Assume a loss function for a linear regression model. L1 and L2 regularization can be seen as introducing a prior distribution over the parameters: L1 regularization corresponds to a Laplace prior, and L2 regularization to a Gaussian prior. Both are used to reduce the complexity of a model.

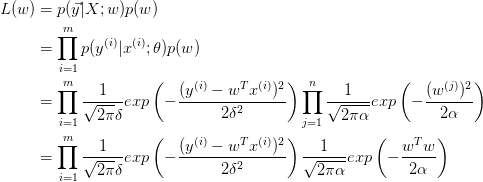

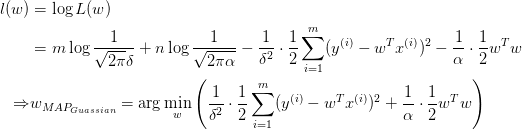

L2 regularization

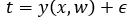

As before, we make a hypothesis that the target variable  is determined by the function

is determined by the function  with an additive guass noise

with an additive guass noise  , resulting in

, resulting in , where

, where  is a zero mean Guassian random variable with precision

is a zero mean Guassian random variable with precision  . Thus we know

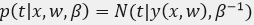

. Thus we know  and its loglikelihood function form can be wrote as

and its loglikelihood function form can be wrote as

Finding the optimal solution for this problem is equal to minimize the least squre function  . If a zero mean

. If a zero mean  variance Guass prior is introduced for

variance Guass prior is introduced for  , we get the posterior distribution for

, we get the posterior distribution for  from the function (need to know prior + likelihood = posterior)

from the function (need to know prior + likelihood = posterior)

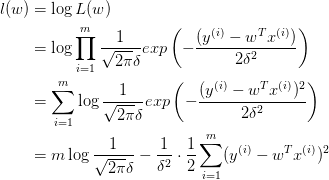

rewrite the equaltion in loglikelihood form, we will get

at the present, you see  . This derivation process accounts for why L2 regularization could be interpreted as Guass Prior.

. This derivation process accounts for why L2 regularization could be interpreted as Guass Prior.
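The equivalence can be checked numerically in the simplest possible case. The sketch below assumes a one-parameter model $y = wx$, a noise precision called `beta`, a prior precision called `alpha`, and a penalty strength `lam`; these names and the data are illustrative assumptions, not taken from the original figures.

```python
def ridge_weight(xs, ts, lam):
    """Minimizer of 0.5 * sum((t - w*x)^2) + 0.5 * lam * w^2 for a scalar w."""
    sxt = sum(x * t for x, t in zip(xs, ts))
    sxx = sum(x * x for x in xs)
    return sxt / (sxx + lam)


def map_weight(xs, ts, alpha, beta):
    """Maximizer of the log posterior
    -0.5 * beta * sum((t - w*x)^2) - 0.5 * alpha * w^2 (up to a constant)."""
    sxt = sum(x * t for x, t in zip(xs, ts))
    sxx = sum(x * x for x in xs)
    return beta * sxt / (beta * sxx + alpha)


# Hypothetical data, roughly t = 2x with a little noise.
xs = [1.0, 2.0, 3.0, 4.0]
ts = [2.1, 3.9, 6.2, 7.8]
alpha, beta = 2.0, 4.0

w_map = map_weight(xs, ts, alpha, beta)        # Gaussian prior on w
w_ridge = ridge_weight(xs, ts, alpha / beta)   # L2 penalty, lam = alpha / beta
w_ml = ridge_weight(xs, ts, 0.0)               # plain least squares

print(w_map, w_ridge, w_ml)
```

The first two numbers agree, and both are smaller in magnitude than the unregularized least-squares weight: the prior pulls the estimate toward zero.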

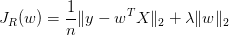

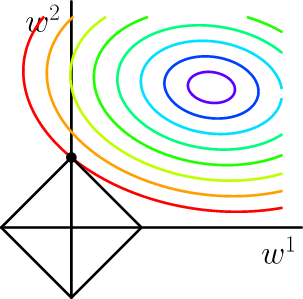

L1 regularization

Solving the L1-regularized problem is generally known as the LASSO problem; it forces some coefficients to zero and thus creates a sparse model. You can find a reference here.

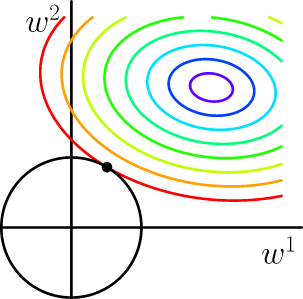

From the two-dimensional images above, we can see that for L1 regularization the intersection point tends to lie on an axis, which forces some of the variables to be zero. Additionally, we can see that L2 regularization does not produce a sparse model (the right-hand image).
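The same contrast shows up in closed form if we assume each weight can be optimized independently (true, for instance, when the features are orthonormal). Under that assumption the L1 solution is soft-thresholding and the L2 solution is a uniform rescaling. The function names and the example weights below are hypothetical.

```python
def soft_threshold(z, lam):
    """Minimizer of 0.5 * (w - z)^2 + lam * |w|: the L1 (lasso) shrinkage."""
    if z > lam:
        return z - lam
    if z < -lam:
        return z + lam
    return 0.0  # small weights are set exactly to zero


def l2_shrink(z, lam):
    """Minimizer of 0.5 * (w - z)^2 + 0.5 * lam * w^2: the L2 (ridge) shrinkage."""
    return z / (1.0 + lam)


# Hypothetical unregularized weights.
unregularized = [3.0, -0.4, 0.25, -2.0, 0.05]
lam = 0.5

l1_weights = [soft_threshold(z, lam) for z in unregularized]
l2_weights = [l2_shrink(z, lam) for z in unregularized]

print(l1_weights)  # prints "[2.5, 0.0, 0.0, -1.5, 0.0]"
print(l2_weights)
```

L1 zeroes every weight whose magnitude is below `lam`, while L2 only scales all weights down, so none of them becomes exactly zero.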

Fine tuning

Fine tuning is easy to understand: it can be interpreted as a way of initializing the weight parameters before proceeding with supervised learning. Parameter values can be transferred from a well-trained network, such as one pre-trained on ImageNet. Sometimes, however, you may need an autoencoder, an unsupervised learning method that does not seem very popular now. An autoencoder is trained so that its output reproduces its input, and in doing so it learns the latent structure of the data in its hidden layers.

In fact, the supervised learning stage of fine tuning simply trains the network in the usual way, as for any feed-forward network.
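A toy sketch of the idea, reduced to a single weight: the same gradient-descent loop is run twice, once starting from a weight assumed to come from a related, already-trained model and once starting from zero. The data, the value `pretrained_w`, and the learning rate are all made up for illustration.

```python
def mse(w, data):
    """Mean squared error of the model t = w * x on (x, t) pairs."""
    return sum((t - w * x) ** 2 for x, t in data) / len(data)


def train(w, data, lr=0.01, steps=5):
    """Plain gradient descent on the mean squared error, starting from w."""
    for _ in range(steps):
        grad = sum(-2.0 * x * (t - w * x) for x, t in data) / len(data)
        w -= lr * grad
    return w


# Hypothetical target task: t = 2x.
target_data = [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0)]

pretrained_w = 1.8                              # assumed to come from a related task
w_finetuned = train(pretrained_w, target_data)  # fine tuning: start from pretrained_w
w_scratch = train(0.0, target_data)             # same budget, starting from zero

print(mse(w_finetuned, target_data), mse(w_scratch, target_data))
```

With the same small training budget, the run that starts from the transferred weight ends with the lower error, which is the practical appeal of fine tuning.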

Here I want to show you an image of an autoencoder; you can also find more detailed information on this website.

Data augmentation

If the training set is not large enough, it is necessary to expand it by flipping, translating, or rotating the images to avoid overfitting. However, according to the paper "Visualizing and Understanding Convolutional Networks", a CNN is not equally stable under all transformations: its output is fairly stable under translation and scaling, but it is generally not invariant to rotation, except for objects with rotational symmetry.
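The three basic transformations can be sketched on a tiny image stored as a nested list. This is only an illustration with hypothetical helper names; real pipelines would typically use an image library and random transformation parameters.

```python
def flip_horizontal(image):
    """Mirror each row left to right."""
    return [row[::-1] for row in image]


def rotate_90(image):
    """Rotate the image clockwise by 90 degrees."""
    return [list(row) for row in zip(*image[::-1])]


def translate_right(image, shift, fill=0):
    """Shift the image right by `shift` pixels, padding the left edge with `fill`."""
    width = len(image[0])
    shift = min(shift, width)
    return [[fill] * shift + row[:width - shift] for row in image]


def augment(image):
    """Return the original image together with three transformed copies."""
    return [image, flip_horizontal(image), rotate_90(image), translate_right(image, 1)]


image = [[1, 2, 3],
         [4, 5, 6],
         [7, 8, 9]]

for variant in augment(image):
    print(variant)
```

Each training image yields four samples here, all sharing the original label.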

Pooling

I explained why pooling works well in an earlier article on this blog; if you are interested, you may need some patience to look through the catalogue. In the paper "Visualizing and Understanding Convolutional Networks", you will see that pooling is sometimes a good way to control invariance. I plan to write up my notes on this classic paper in the next article.
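A small one-dimensional sketch of how pooling buys invariance to small shifts. The signal and the helper names are made up for the example, and non-overlapping max pooling is assumed.

```python
def max_pool_1d(signal, size):
    """Non-overlapping max pooling with window length `size`."""
    return [max(signal[i:i + size]) for i in range(0, len(signal) - size + 1, size)]


def shift_right(signal, k):
    """Shift the signal right by k positions, padding with zeros."""
    return [0] * k + signal[:len(signal) - k]


signal = [0, 9, 0, 0, 0, 0, 5, 0]

print(max_pool_1d(signal, 4))                  # prints "[9, 5]"
print(max_pool_1d(shift_right(signal, 1), 4))  # prints "[9, 5]"
print(max_pool_1d(shift_right(signal, 3), 4))  # prints "[0, 9]"
```

A one-pixel shift keeps both peaks inside their pooling windows, so the pooled output is unchanged; a three-pixel shift moves them across window boundaries and the output changes. The window size therefore controls how much translation the representation tolerates.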

Dropout and Drop layer

Dropout, proposed by Hinton et al., has become a prevalent technique for preventing overfitting. During training it deactivates each node with a probability p that is fixed beforehand, which makes the network thinner. But why does it work? There may be two main reasons: (1) for a batch of input data, nodes can be trained better in a thinner network, because on average they are trained with more data; (2) dropout injects noise into the network, which helps.
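A minimal sketch of the mechanism on a plain list of activations. It uses the common "inverted dropout" variant, which rescales the surviving activations during training so that nothing needs to change at test time; that choice, and the function signature, are assumptions for illustration.

```python
import random


def dropout(activations, p, training=True, rng=random):
    """Inverted dropout: zero each unit with probability p during training
    and divide the survivors by (1 - p) so the expected value is unchanged.
    Requires 0 <= p < 1. At test time the input is returned unchanged."""
    if not training or p == 0.0:
        return list(activations)
    keep = 1.0 - p
    return [a / keep if rng.random() < keep else 0.0 for a in activations]


rng = random.Random(0)          # fixed seed so the run is repeatable
hidden = [1.0] * 10

print(dropout(hidden, p=0.5, rng=rng))         # a mix of 0.0 and 2.0
print(dropout(hidden, p=0.5, training=False))  # unchanged at test time
```

Because a fresh mask is drawn on every call, each training step effectively updates a different thinned sub-network.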

Inspired by dropout and ResNet, Huang et al. proposed drop layer (stochastic depth), which makes use of the shortcut connections in ResNet. With a probability p, just as in dropout, it blocks the normal path through a layer and forces the information to flow directly through the shortcut connection. This technique makes the network shorter during training and can be used to train deeper networks, even ones with more than 1000 layers.
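A scalar sketch of a residual block with a randomly dropped residual branch. Note that the code is parameterized by the survival probability (one minus the drop probability p in the text), and that a single constant survival probability is used for every block; both are simplifying assumptions, as are the names and the toy transform.

```python
import random


def residual_block(x, transform, survival_prob, training=True, rng=random):
    """One residual block with stochastic depth, on a scalar input."""
    if not training:
        # Test time: every block is kept, and the residual branch is
        # scaled by its survival probability.
        return x + survival_prob * transform(x)
    if rng.random() < survival_prob:
        return x + transform(x)  # block kept: shortcut plus residual branch
    return x                     # block dropped: only the shortcut is used


def forward(x, transforms, survival_prob, training=True, rng=random):
    """Pass x through a stack of residual blocks."""
    for transform in transforms:
        x = residual_block(x, transform, survival_prob, training, rng)
    return x


# Hypothetical 20-block network whose residual branch is a fixed scaling.
transforms = [lambda v: 0.1 * v] * 20

train_out = forward(1.0, transforms, survival_prob=0.8, rng=random.Random(0))
test_out = forward(1.0, transforms, survival_prob=0.8, training=False)
print(train_out, test_out)
```

During training only a random subset of the 20 blocks is active on each pass, so the effective network is shorter, while at test time the full depth is used.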

Depth is important

The chart below shows why depth is important: as the number of layers increases, the classification accuracy rises. When the features extracted by each layer are visualized with a deconvnet ("Visualizing and Understanding Convolutional Networks"), the deeper the layer, the more abstract the extracted features. Abstract features in turn strengthen robustness, i.e. invariance to transformations.
