How to Run a Spark Program

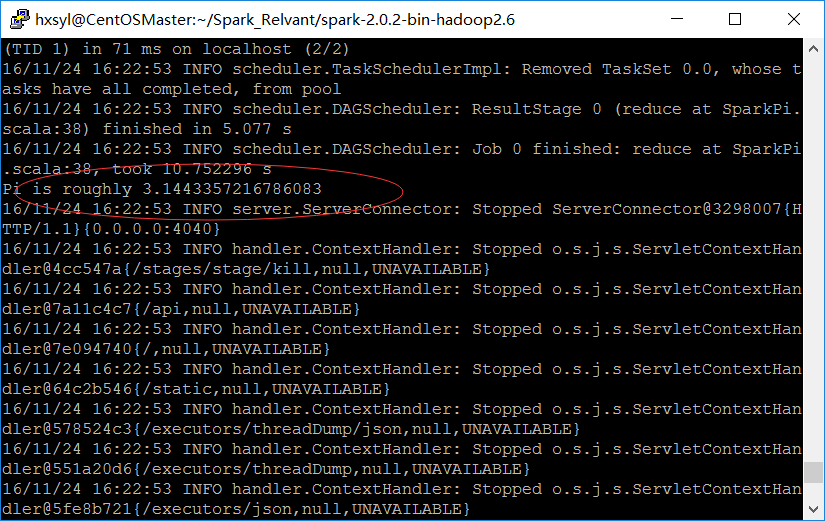

[hxsyl@CentOSMaster spark-2.0.2-bin-hadoop2.6]# ./bin/spark-submit --class org.apache.spark.examples.SparkPi --master local examples/jars/spark-examples_2.11-2.0.2.jar

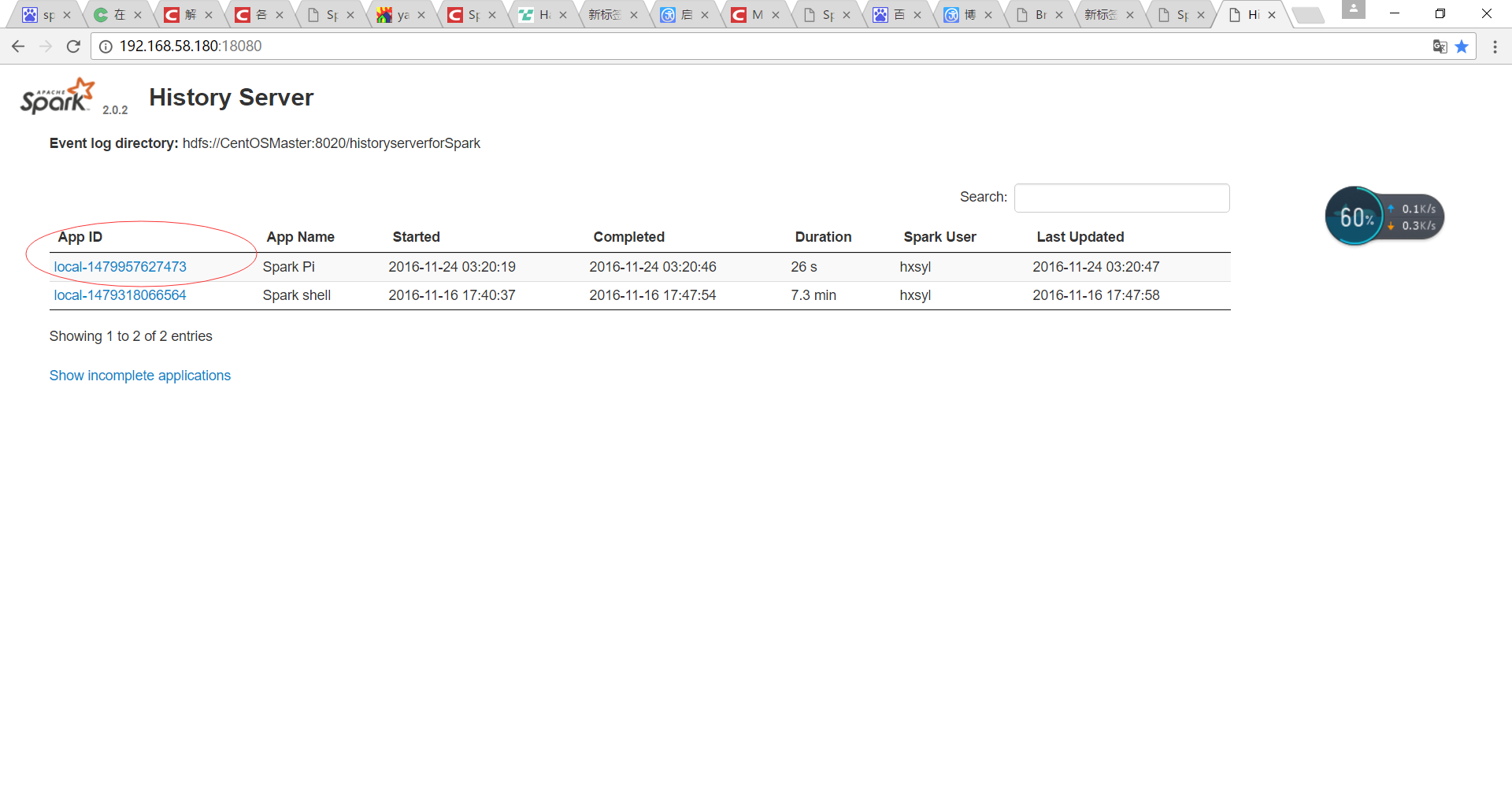

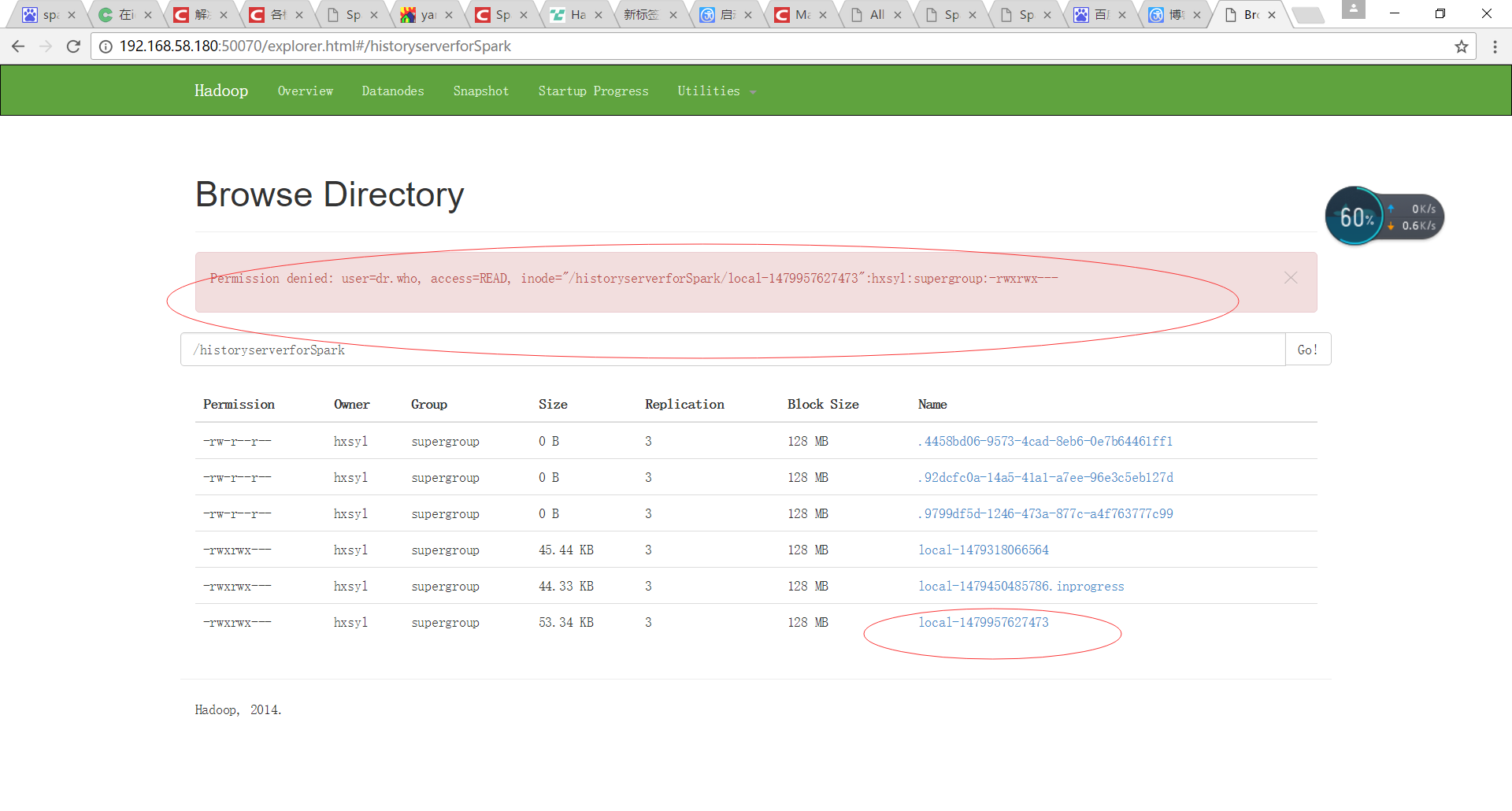
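SparkPi estimates pi by Monte Carlo sampling. It throws random points into the square [-1, 1] × [-1, 1] and counts how many land inside the unit circle. The fraction of hits approaches pi/4, so 4 × hits / samples approximates pi. The sketch below reproduces that idea in plain Python without Spark. It assumes two "slices" of 100,000 samples each, mirroring SparkPi's default partitioning. The slices run one after another here, so the loop only imitates Spark's map + reduce, it does not parallelize anything. The names `count_hits` and `estimate_pi` are hypothetical.

```python
import random


def count_hits(num_samples, seed):
    """Count random points in [-1, 1] x [-1, 1] that fall inside the unit circle."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(num_samples):
        x = rng.random() * 2 - 1
        y = rng.random() * 2 - 1
        if x * x + y * y <= 1:
            hits += 1
    return hits


def estimate_pi(slices=2, samples_per_slice=100_000, seed=42):
    """Sequential stand-in for SparkPi: one count_hits call per 'slice'
    (the 'map' step), then a sum over slices (the 'reduce' step)."""
    total = slices * samples_per_slice
    hits = sum(count_hits(samples_per_slice, seed + i) for i in range(slices))
    # circle area / square area = pi / 4, so pi is roughly 4 * hits / total
    return 4.0 * hits / total


if __name__ == "__main__":
    print("Pi is roughly", estimate_pi())
```

With 200,000 samples the estimate is usually accurate to about two decimal places. That is also why the "Pi is roughly ..." line printed by SparkPi changes slightly from run to run.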

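Note: run this as the hxsyl user. Running it as root fails with a permission error on historyserverforSpark on HDFS, which is really strange. Also, after the job finishes, browsing the results on HDFS shows the user as dr.who, who has no permission. Bizarre. The real question is: where does this dr.who come from?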
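historyserverforSpark is the HDFS directory set as `spark.eventLog.dir` (and `spark.history.fs.logDirectory`) in the Spark properties that appear in the log below. With `spark.eventLog.enabled` set to true, each run writes an event log there, one JSON event per line. The file is typically named after the application ID, which is `local-1479957627473` for this run. To inspect one of these files without going through the HDFS web UI, you can copy it locally (for example with `hdfs dfs -get`) and summarize it. The sketch below assumes a local copy. The helper `summarize_event_log` is hypothetical, and `SAMPLE` is an abbreviated excerpt of the log in this post, with most fields trimmed.

```python
import json
from collections import Counter


def summarize_event_log(lines):
    """Parse a Spark event log (one JSON object per line).

    Returns (event_counts, spark_properties). spark_properties is taken from
    the SparkListenerEnvironmentUpdate event, or {} if there is none.
    """
    counts = Counter()
    props = {}
    for line in lines:
        line = line.strip()
        if not line:
            continue  # tolerate blank lines
        event = json.loads(line)
        name = event.get("Event", "<unknown>")
        counts[name] += 1
        if name == "SparkListenerEnvironmentUpdate":
            props = event.get("Spark Properties", {})
    return counts, props


# Abbreviated excerpt based on the event log shown in this post.
SAMPLE = [
    '{"Event":"SparkListenerLogStart","Spark Version":"2.0.2"}',
    '{"Event":"SparkListenerExecutorAdded","Timestamp":1479957627709,"Executor ID":"driver"}',
    '{"Event":"SparkListenerEnvironmentUpdate","Spark Properties":'
    '{"spark.master":"local","spark.eventLog.dir":"hdfs://CentOSMaster:8020/historyserverforSpark"}}',
]

if __name__ == "__main__":
    counts, props = summarize_event_log(SAMPLE)
    print(dict(counts))
    print(props.get("spark.eventLog.dir"))
    # For a real file: with open("local-1479957627473") as f: summarize_event_log(f)
```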

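spark-examples is the examples jar that ships with Spark. In this 2.0.2 release it lives under examples/jars in the Spark installation directory, as the command above shows (Spark 1.x kept it under lib). Later on, Spark programs written in IntelliJ on Windows also have to be packaged into a jar, copied here, and submitted the same way.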
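Submitting your own jar follows the same pattern as the SparkPi command above. `--class` names the entry point, `--master` picks where to run, then comes the jar path, and anything after the jar is passed to the application's `main` method. The minimal sketch below just assembles that command line. The helper `build_submit_cmd`, the class `com.example.MyApp`, and `myapp.jar` are hypothetical stand-ins for whatever your IntelliJ build produces.

```python
import shlex


def build_submit_cmd(spark_home, main_class, jar_path, master="local", app_args=()):
    """Assemble a spark-submit argument list in the same order as the SparkPi example."""
    return [
        f"{spark_home}/bin/spark-submit",
        "--class", main_class,
        "--master", master,
        jar_path,
        *app_args,  # everything after the jar goes to the application itself
    ]


if __name__ == "__main__":
    # Hypothetical user jar copied from the Windows/IntelliJ machine.
    cmd = build_submit_cmd(".", "com.example.MyApp", "myapp.jar", app_args=["10"])
    print(shlex.join(cmd))
    # To actually submit: import subprocess; subprocess.run(cmd, check=True)
```

Passing a list instead of a single string keeps paths containing spaces intact. `shlex.join` (Python 3.8+) is only used to print a copy-pasteable version.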

But inside it we find:

{"Event":"SparkListenerLogStart","Spark Version":"2.0.2"}

{"Event":"SparkListenerExecutorAdded","Timestamp":1479957627709,"Executor ID":"driver","Executor Info":{"Host":"localhost","Total Cores":1,"Log Urls":{}}}

{"Event":"SparkListenerBlockManagerAdded","Block Manager ID":{"Executor ID":"driver","Host":"192.168.58.180","Port":48482},"Maximum Memory":434031820,"Timestamp":1479957627795}

{"Event":"SparkListenerEnvironmentUpdate","JVM Information":{"Java Home":"/home/hxsyl/Spark_Relvant/jdk1.8.0_91/jre","Java Version":"1.8.0_91 (Oracle Corporation)","Scala Version":"version 2.11.8"},"Spark Properties":{"spark.executor.extraJavaOptions":"-XX:+PrintGCDetails -DKey=value -Dnumbers=\"one two three\"","spark.driver.host":"192.168.58.180","spark.history.fs.logDirectory":"hdfs://CentOSMaster:8020/historyserverforSpark","spark.eventLog.enabled":"true","spark.driver.port":"45821","spark.jars":"file:/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/examples/jars/spark-examples_2.11-2.0.2.jar","spark.app.name":"Spark Pi","spark.scheduler.mode":"FIFO","spark.executor.id":"driver","spark.submit.deployMode":"client","spark.master":"local","spark.yarn.archive":"hdfs://CentOSMaster:8020/sparkJars","spark.eventLog.dir":"hdfs://CentOSMaster:8020/historyserverforSpark","spark.app.id":"local-1479957627473","spark.yarn.historySever.address":"CentOSMaster:18080"},"System Properties":{"java.io.tmpdir":"/tmp","line.separator":"\n","path.separator":":","sun.management.compiler":"HotSpot 64-Bit Tiered Compilers","SPARK_SUBMIT":"true","sun.cpu.endian":"little","java.specification.version":"1.8","java.vm.specification.name":"Java Virtual Machine Specification","java.vendor":"Oracle Corporation","java.vm.specification.version":"1.8","user.home":"/home/hxsyl","file.encoding.pkg":"sun.io","sun.nio.ch.bugLevel":"","sun.arch.data.model":"64","sun.boot.library.path":"/home/hxsyl/Spark_Relvant/jdk1.8.0_91/jre/lib/amd64","user.dir":"/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6","java.library.path":"/usr/java/packages/lib/amd64:/usr/lib64:/lib64:/lib:/usr/lib","sun.cpu.isalist":"","os.arch":"amd64","java.vm.version":"25.91-b14","java.endorsed.dirs":"/home/hxsyl/Spark_Relvant/jdk1.8.0_91/jre/lib/endorsed","java.runtime.version":"1.8.0_91-b14","java.vm.info":"mixed 
mode","java.ext.dirs":"/home/hxsyl/Spark_Relvant/jdk1.8.0_91/jre/lib/ext:/usr/java/packages/lib/ext","java.runtime.name":"Java(TM) SE Runtime Environment","file.separator":"/","java.class.version":"52.0","java.specification.name":"Java Platform API Specification","sun.boot.class.path":"/home/hxsyl/Spark_Relvant/jdk1.8.0_91/jre/lib/resources.jar:/home/hxsyl/Spark_Relvant/jdk1.8.0_91/jre/lib/rt.jar:/home/hxsyl/Spark_Relvant/jdk1.8.0_91/jre/lib/sunrsasign.jar:/home/hxsyl/Spark_Relvant/jdk1.8.0_91/jre/lib/jsse.jar:/home/hxsyl/Spark_Relvant/jdk1.8.0_91/jre/lib/jce.jar:/home/hxsyl/Spark_Relvant/jdk1.8.0_91/jre/lib/charsets.jar:/home/hxsyl/Spark_Relvant/jdk1.8.0_91/jre/lib/jfr.jar:/home/hxsyl/Spark_Relvant/jdk1.8.0_91/jre/classes","file.encoding":"UTF-8","user.timezone":"Asia/Hong_Kong","java.specification.vendor":"Oracle Corporation","sun.java.launcher":"SUN_STANDARD","os.version":"2.6.32-642.el6.x86_64","sun.os.patch.level":"unknown","java.vm.specification.vendor":"Oracle Corporation","user.country":"US","sun.jnu.encoding":"UTF-8","user.language":"en","java.vendor.url":"http://java.oracle.com/","java.awt.printerjob":"sun.print.PSPrinterJob","java.awt.graphicsenv":"sun.awt.X11GraphicsEnvironment","awt.toolkit":"sun.awt.X11.XToolkit","os.name":"Linux","java.vm.vendor":"Oracle Corporation","java.vendor.url.bug":"http://bugreport.sun.com/bugreport/","user.name":"hxsyl","java.vm.name":"Java HotSpot(TM) 64-Bit Server VM","sun.java.command":"org.apache.spark.deploy.SparkSubmit --master local --class org.apache.spark.examples.SparkPi examples/jars/spark-examples_2.11-2.0.2.jar","java.home":"/home/hxsyl/Spark_Relvant/jdk1.8.0_91/jre","java.version":"1.8.0_91","sun.io.unicode.encoding":"UnicodeLittle"},"Classpath Entries":{"/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/yarn/lib/commons-compress-1.4.1.jar":"System Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/common/lib/jetty-util-6.1.26.jar":"System 
Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/breeze-macros_2.11-0.11.2.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/spark-network-shuffle_2.11-2.0.2.jar":"System Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/common/lib/jackson-xc-1.9.13.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/metrics-jvm-3.1.2.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/javassist-3.18.1-GA.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/hadoop-annotations-2.6.4.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/jackson-jaxrs-1.9.13.jar":"System Classpath","spark://192.168.58.180:45821/jars/spark-examples_2.11-2.0.2.jar":"Added By User","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/conf/":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/libthrift-0.9.2.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/aopalliance-1.0.jar":"System Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/yarn/lib/commons-cli-1.2.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/commons-digester-1.8.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/derby-10.12.1.1.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/netty-all-4.0.29.Final.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/xbean-asm5-shaded-4.4.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/httpcore-4.4.4.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/scala-compiler-2.11.8.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/jetty-6.1.26.jar":"System 
Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/common/hadoop-nfs-2.6.4.jar":"System Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/mapreduce/lib/jackson-core-asl-1.9.13.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/hive-cli-1.2.1.spark2.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/xz-1.0.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/jul-to-slf4j-1.7.16.jar":"System Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/common/lib/xz-1.0.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/antlr-2.7.7.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/jta-1.1.jar":"System Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/mapreduce/lib/guice-servlet-3.0.jar":"System Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/hdfs/lib/jersey-server-1.9.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/univocity-parsers-2.1.1.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/hadoop-mapreduce-client-shuffle-2.6.4.jar":"System Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/common/lib/commons-digester-1.8.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/parquet-column-1.7.0.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/scalap-2.11.8.jar":"System Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/yarn/hadoop-yarn-server-resourcemanager-2.6.4.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/javax.annotation-api-1.2.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/jersey-server-2.22.2.jar":"System Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/yarn/lib/commons-httpclient-3.1.jar":"System 
Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/commons-codec-1.10.jar":"System Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/common/lib/jackson-mapper-asl-1.9.13.jar":"System Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/yarn/lib/jackson-jaxrs-1.9.13.jar":"System Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/common/lib/jettison-1.1.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/breeze_2.11-0.11.2.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/jackson-annotations-2.6.5.jar":"System Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/common/lib/commons-compress-1.4.1.jar":"System Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/hdfs/lib/commons-io-2.4.jar":"System Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/yarn/lib/jline-0.9.94.jar":"System Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/yarn/lib/jersey-client-1.9.jar":"System Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/hdfs/lib/xml-apis-1.3.04.jar":"System Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/yarn/lib/log4j-1.2.17.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/xercesImpl-2.9.1.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/jackson-xc-1.9.13.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/api-util-1.0.0-M20.jar":"System Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/mapreduce/lib/netty-3.6.2.Final.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/RoaringBitmap-0.5.11.jar":"System Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/common/lib/api-util-1.0.0-M20.jar":"System Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/yarn/hadoop-yarn-server-nodemanager-2.6.4.jar":"System 
Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/yarn/lib/jersey-server-1.9.jar":"System Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/common/lib/junit-4.11.jar":"System Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/hdfs/":"System Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/common/lib/commons-math3-3.1.1.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/spark-tags_2.11-2.0.2.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/hive-exec-1.2.1.spark2.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/jackson-mapper-asl-1.9.13.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/JavaEWAH-0.3.2.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/stax-api-1.0-2.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/py4j-0.10.3.jar":"System Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/hdfs/lib/servlet-api-2.5.jar":"System Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/common/lib/commons-logging-1.1.3.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/metrics-core-3.1.2.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/hadoop-mapreduce-client-app-2.6.4.jar":"System Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/common/hadoop-common-2.6.4-tests.jar":"System Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/common/lib/commons-httpclient-3.1.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/jpam-1.1.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/datanucleus-rdbms-3.2.9.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/avro-ipc-1.7.7.jar":"System 
Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/java-xmlbuilder-1.0.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/hadoop-auth-2.6.4.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/calcite-core-1.2.0-incubating.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/commons-beanutils-1.7.0.jar":"System Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/hdfs/lib/jersey-core-1.9.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/super-csv-2.2.0.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/commons-math3-3.4.1.jar":"System Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/yarn/hadoop-yarn-common-2.6.4.jar":"System Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/mapreduce/hadoop-mapreduce-examples-2.6.4.jar":"System Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/common/lib/jsch-0.1.42.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/activation-1.1.1.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/hive-jdbc-1.2.1.spark2.jar":"System Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/common/lib/slf4j-api-1.7.5.jar":"System Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/common/lib/slf4j-log4j12-1.7.5.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/jackson-core-2.6.5.jar":"System Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/yarn/hadoop-yarn-server-web-proxy-2.6.4.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/avro-mapred-1.7.7-hadoop2.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/hive-beeline-1.2.1.spark2.jar":"System 
Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/mapreduce/hadoop-mapreduce-client-hs-plugins-2.6.4.jar":"System Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/common/lib/curator-framework-2.6.0.jar":"System Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/yarn/hadoop-yarn-applications-unmanaged-am-launcher-2.6.4.jar":"System Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/common/lib/jasper-compiler-5.5.23.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/hadoop-yarn-server-common-2.6.4.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/commons-compress-1.4.1.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/antlr4-runtime-4.5.3.jar":"System Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/mapreduce/lib/javax.inject-1.jar":"System Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/mapreduce/lib/guice-3.0.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/scala-reflect-2.11.8.jar":"System Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/yarn/hadoop-yarn-api-2.6.4.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/hadoop-yarn-api-2.6.4.jar":"System Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/common/lib/commons-net-3.1.jar":"System Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/common/lib/apacheds-i18n-2.0.0-M15.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/mx4j-3.0.2.jar":"System Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/common/lib/paranamer-2.3.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/javax.inject-1.jar":"System Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/yarn/lib/javax.inject-1.jar":"System 
Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/jersey-common-2.22.2.jar":"System Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/yarn/lib/jaxb-impl-2.2.3-1.jar":"System Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/common/lib/hadoop-annotations-2.6.4.jar":"System Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/hdfs/lib/log4j-1.2.17.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/javax.ws.rs-api-2.0.1.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/commons-httpclient-3.1.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/snappy-0.2.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/apache-log4j-extras-1.2.17.jar":"System Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/yarn/lib/protobuf-java-2.5.0.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/pmml-model-1.2.15.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/jackson-module-scala_2.11-2.6.5.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/curator-client-2.6.0.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/pyrolite-4.13.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/opencsv-2.3.jar":"System Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/hdfs/lib/netty-3.6.2.Final.jar":"System Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/common/lib/asm-3.2.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/jackson-databind-2.6.5.jar":"System Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/yarn/hadoop-yarn-registry-2.6.4.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/commons-dbcp-1.4.jar":"System 
Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/bonecp-0.8.0.RELEASE.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/spark-unsafe_2.11-2.0.2.jar":"System Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/common/lib/jaxb-api-2.2.2.jar":"System Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/mapreduce/hadoop-mapreduce-client-shuffle-2.6.4.jar":"System Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/hdfs/lib/jsp-api-2.1.jar":"System Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/yarn/lib/jackson-core-asl-1.9.13.jar":"System Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/yarn/lib/commons-logging-1.1.3.jar":"System Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/yarn/lib/jackson-mapper-asl-1.9.13.jar":"System Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/yarn/lib/commons-collections-3.2.2.jar":"System Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/yarn/lib/jackson-xc-1.9.13.jar":"System Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/common/lib/gson-2.2.4.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/xmlenc-0.52.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/arpack_combined_all-0.1.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/mail-1.4.7.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/spark-launcher_2.11-2.0.2.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/objenesis-2.1.jar":"System Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/common/lib/jetty-6.1.26.jar":"System Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/yarn/lib/jaxb-api-2.2.2.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/spire-macros_2.11-0.7.4.jar":"System 
Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/spark-mllib_2.11-2.0.2.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/commons-collections-3.2.2.jar":"System Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/hdfs/hadoop-hdfs-2.6.4-tests.jar":"System Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/yarn/lib/aopalliance-1.0.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/avro-1.7.7.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/ST4-4.0.4.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/spark-yarn_2.11-2.0.2.jar":"System Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/yarn/lib/jersey-core-1.9.jar":"System Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/hdfs/lib/jackson-mapper-asl-1.9.13.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/jackson-core-asl-1.9.13.jar":"System Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/hdfs/hadoop-hdfs-2.6.4.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/spire_2.11-0.7.4.jar":"System Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/yarn/lib/jettison-1.1.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/metrics-json-3.1.2.jar":"System Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/mapreduce/lib/jersey-guice-1.9.jar":"System Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/mapreduce/lib/protobuf-java-2.5.0.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/htrace-core-3.0.4.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/antlr-runtime-3.4.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/commons-pool-1.5.4.jar":"System 
Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/scala-parser-combinators_2.11-1.0.4.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/protobuf-java-2.5.0.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/eigenbase-properties-1.1.5.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/parquet-encoding-1.7.0.jar":"System Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/yarn/lib/leveldbjni-all-1.8.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/hadoop-yarn-server-web-proxy-2.6.4.jar":"System Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/yarn/hadoop-yarn-server-tests-2.6.4.jar":"System Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/mapreduce/lib/hadoop-annotations-2.6.4.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/json4s-ast_2.11-3.2.11.jar":"System Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/hdfs/lib/protobuf-java-2.5.0.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/commons-beanutils-core-1.8.0.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/jsr305-1.3.9.jar":"System Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/hdfs/lib/htrace-core-3.0.4.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/curator-framework-2.6.0.jar":"System Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/mapreduce/lib/hamcrest-core-1.3.jar":"System Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/common/lib/activation-1.1.jar":"System Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/yarn/lib/jsr305-1.3.9.jar":"System Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/common/lib/jasper-runtime-5.5.23.jar":"System 
Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/hadoop-mapreduce-client-core-2.6.4.jar":"System Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/mapreduce/lib/jersey-core-1.9.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/netty-3.8.0.Final.jar":"System Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/yarn/lib/jetty-util-6.1.26.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/scala-xml_2.11-1.0.2.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/httpclient-4.5.2.jar":"System Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/mapreduce/hadoop-mapreduce-client-common-2.6.4.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/compress-lzf-1.0.3.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/stringtemplate-3.2.1.jar":"System Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/hdfs/lib/asm-3.2.jar":"System Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/common/lib/netty-3.6.2.Final.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/minlog-1.3.0.jar":"System Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/common/lib/protobuf-java-2.5.0.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/commons-compiler-2.7.6.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/spark-graphx_2.11-2.0.2.jar":"System Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/common/lib/xmlenc-0.52.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/jcl-over-slf4j-1.7.16.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/ivy-2.4.0.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/bcprov-jdk15on-1.51.jar":"System 
Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/common/lib/java-xmlbuilder-0.4.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/hk2-api-2.4.0-b34.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/leveldbjni-all-1.8.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/stream-2.7.0.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/jersey-guava-2.22.2.jar":"System Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/common/lib/curator-client-2.6.0.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/osgi-resource-locator-1.0.1.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/base64-2.3.8.jar":"System Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/hdfs/lib/jetty-util-6.1.26.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/guice-3.0.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/joda-time-2.9.3.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/hk2-locator-2.4.0-b34.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/javolution-5.5.1.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/commons-lang3-3.3.2.jar":"System Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/yarn/lib/jersey-json-1.9.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/snappy-java-1.1.2.6.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/kryo-shaded-3.0.3.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/commons-io-2.4.jar":"System Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/common/lib/guava-11.0.2.jar":"System 
Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/spark-network-common_2.11-2.0.2.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/hadoop-yarn-common-2.6.4.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/jtransforms-2.4.0.jar":"System Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/mapreduce/lib/jersey-server-1.9.jar":"System Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/common/lib/log4j-1.2.17.jar":"System Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/yarn/lib/commons-lang-2.6.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/commons-net-2.2.jar":"System Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/mapreduce/lib/junit-4.11.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/aopalliance-repackaged-2.4.0-b34.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/commons-logging-1.1.3.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/guava-14.0.1.jar":"System Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/common/lib/commons-beanutils-core-1.8.0.jar":"System Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/mapreduce/lib/leveldbjni-all-1.8.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/slf4j-api-1.7.16.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/jersey-container-servlet-core-2.22.2.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/datanucleus-core-3.2.10.jar":"System Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/yarn/lib/stax-api-1.0-2.jar":"System Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/mapreduce/hadoop-mapreduce-client-jobclient-2.6.4.jar":"System 
Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/common/lib/api-asn1-api-1.0.0-M20.jar":"System Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/common/lib/commons-io-2.4.jar":"System Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/mapreduce/lib/aopalliance-1.0.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/zookeeper-3.4.6.jar":"System Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/hdfs/lib/commons-cli-1.2.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/hadoop-client-2.6.4.jar":"System Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/hdfs/lib/xercesImpl-2.9.1.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/parquet-common-1.7.0.jar":"System Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/yarn/lib/guava-11.0.2.jar":"System Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/common/lib/httpcore-4.2.5.jar":"System Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/mapreduce/hadoop-mapreduce-client-app-2.6.4.jar":"System Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/common/hadoop-common-2.6.4.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/spark-catalyst_2.11-2.0.2.jar":"System Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/common/lib/commons-codec-1.4.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/javax.inject-2.4.0-b34.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/janino-2.7.8.jar":"System Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/yarn/lib/commons-codec-1.4.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/jdo-api-3.0.1.jar":"System Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/hdfs/lib/jasper-runtime-5.5.23.jar":"System 
Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/hdfs/lib/jackson-core-asl-1.9.13.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/hadoop-yarn-client-2.6.4.jar":"System Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/common/lib/commons-lang-2.6.jar":"System Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/mapreduce/hadoop-mapreduce-client-core-2.6.4.jar":"System Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/yarn/lib/netty-3.6.2.Final.jar":"System Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/mapreduce/lib/paranamer-2.3.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/hk2-utils-2.4.0-b34.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/parquet-hadoop-1.7.0.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/parquet-hadoop-bundle-1.6.0.jar":"System Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/mapreduce/lib/log4j-1.2.17.jar":"System Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/common/lib/jsr305-1.3.9.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/calcite-avatica-1.2.0-incubating.jar":"System Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/common/lib/jaxb-impl-2.2.3-1.jar":"System Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/common/lib/commons-cli-1.2.jar":"System Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/common/lib/jets3t-0.9.0.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/hadoop-hdfs-2.6.4.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/apacheds-kerberos-codec-2.0.0-M15.jar":"System Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/yarn/hadoop-yarn-server-common-2.6.4.jar":"System 
Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/json4s-jackson_2.11-3.2.11.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/spark-repl_2.11-2.0.2.jar":"System Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/common/lib/jersey-json-1.9.jar":"System Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/hdfs/lib/commons-codec-1.4.jar":"System Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/common/lib/commons-el-1.0.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/paranamer-2.3.jar":"System Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/mapreduce/lib/asm-3.2.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/hadoop-common-2.6.4.jar":"System Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/common/lib/hadoop-auth-2.6.4.jar":"System Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/common/lib/mockito-all-1.8.5.jar":"System Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/common/lib/jsp-api-2.1.jar":"System Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/hdfs/lib/commons-lang-2.6.jar":"System Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/mapreduce/lib/snappy-java-1.0.4.1.jar":"System Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/common/lib/avro-1.7.4.jar":"System Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/mapreduce/hadoop-mapreduce-client-hs-2.6.4.jar":"System Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/common/lib/jackson-jaxrs-1.9.13.jar":"System Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/common/lib/curator-recipes-2.6.0.jar":"System Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/yarn/lib/xz-1.0.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/jaxb-api-2.2.2.jar":"System 
Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/yarn/lib/asm-3.2.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/parquet-format-2.3.0-incubating.jar":"System Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/common/lib/commons-collections-3.2.2.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/chill_2.11-0.8.0.jar":"System Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/hdfs/lib/xmlenc-0.52.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/spark-hive-thriftserver_2.11-2.0.2.jar":"System Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/common/lib/jersey-core-1.9.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/spark-streaming_2.11-2.0.2.jar":"System Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/yarn/lib/jersey-guice-1.9.jar":"System Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/yarn/hadoop-yarn-server-applicationhistoryservice-2.6.4.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/hadoop-mapreduce-client-common-2.6.4.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/api-asn1-api-1.0.0-M20.jar":"System Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/yarn/hadoop-yarn-client-2.6.4.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/oro-2.0.8.jar":"System Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/hdfs/lib/jetty-6.1.26.jar":"System Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/common/lib/commons-beanutils-1.7.0.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/commons-configuration-1.6.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/stax-api-1.0.1.jar":"System 
Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/mapreduce/lib/commons-io-2.4.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/chill-java-0.8.0.jar":"System Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/contrib/capacity-scheduler/*.jar":"System Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/hdfs/lib/commons-el-1.0.jar":"System Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/common/lib/jackson-core-asl-1.9.13.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/jersey-container-servlet-2.22.2.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/calcite-linq4j-1.2.0-incubating.jar":"System Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/yarn/lib/zookeeper-3.4.6.jar":"System Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/yarn/hadoop-yarn-applications-distributedshell-2.6.4.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/log4j-1.2.17.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/jetty-util-6.1.26.jar":"System Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/hdfs/lib/jsr305-1.3.9.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/json4s-core_2.11-3.2.11.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/jets3t-0.9.3.jar":"System Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/mapreduce/hadoop-mapreduce-client-jobclient-2.6.4-tests.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/slf4j-log4j12-1.7.16.jar":"System Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/common/lib/hamcrest-core-1.3.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/datanucleus-api-jdo-3.2.6.jar":"System 
Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/hdfs/lib/guava-11.0.2.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/gson-2.2.4.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/jersey-media-jaxb-2.22.2.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/guice-servlet-3.0.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/spark-sql_2.11-2.0.2.jar":"System Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/common/lib/apacheds-kerberos-codec-2.0.0-M15.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/validation-api-1.1.0.Final.jar":"System Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/common/lib/htrace-core-3.0.4.jar":"System Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/mapreduce/lib/jackson-mapper-asl-1.9.13.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/spark-hive_2.11-2.0.2.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/jline-2.12.1.jar":"System Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/hdfs/lib/commons-logging-1.1.3.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/parquet-jackson-1.7.0.jar":"System Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/yarn/lib/servlet-api-2.5.jar":"System Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/common/lib/stax-api-1.0-2.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/json-20090211.jar":"System Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/yarn/lib/activation-1.1.jar":"System Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/yarn/lib/commons-io-2.4.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/libfb303-0.9.2.jar":"System 
Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/jersey-client-2.22.2.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/javax.servlet-api-3.1.0.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/jackson-module-paranamer-2.6.5.jar":"System Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/yarn/lib/guice-3.0.jar":"System Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/hdfs/hadoop-hdfs-nfs-2.6.4.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/parquet-generator-1.7.0.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/spark-core_2.11-2.0.2.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/commons-lang-2.6.jar":"System Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/common/lib/zookeeper-3.4.6.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/commons-cli-1.2.jar":"System Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/yarn/lib/guice-servlet-3.0.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/spark-sketch_2.11-2.0.2.jar":"System Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/common/lib/snappy-java-1.0.4.1.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/lz4-1.3.0.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/spark-mllib-local_2.11-2.0.2.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/scala-library-2.11.8.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/hive-metastore-1.2.1.spark2.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/metrics-graphite-3.1.2.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/pmml-schema-1.2.15.jar":"System 
Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/mesos-0.21.1-shaded-protobuf.jar":"System Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/mapreduce/lib/avro-1.7.4.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/jodd-core-3.5.2.jar":"System Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/mapreduce/lib/xz-1.0.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/curator-recipes-2.6.0.jar":"System Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/etc/hadoop/":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/hadoop-mapreduce-client-jobclient-2.6.4.jar":"System Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/common/lib/servlet-api-2.5.jar":"System Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/yarn/lib/jetty-6.1.26.jar":"System Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/common/lib/commons-configuration-1.6.jar":"System Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/common/lib/httpclient-4.2.5.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/apacheds-i18n-2.0.0-M15.jar":"System Classpath","/home/hxsyl/Spark_Relvant/spark-2.0.2-bin-hadoop2.6/jars/core-1.1.2.jar":"System Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/hdfs/lib/commons-daemon-1.0.13.jar":"System Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/mapreduce/lib/commons-compress-1.4.1.jar":"System Classpath","/home/hxsyl/Spark_Relvant/hadoop-2.6.4/share/hadoop/common/lib/jersey-server-1.9.jar":"System Classpath"}}

{"Event":"SparkListenerApplicationStart","App Name":"Spark Pi","App ID":"local-1479957627473","Timestamp":1479957619976,"User":"hxsyl"}

{"Event":"SparkListenerJobStart","Job ID":0,"Submission Time":1479957637781,"Stage Infos":[{"Stage ID":0,"Stage Attempt ID":0,"Stage Name":"reduce at SparkPi.scala:38","Number of Tasks":2,"RDD Info":[{"RDD ID":1,"Name":"MapPartitionsRDD","Scope":"{\"id\":\"1\",\"name\":\"map\"}","Callsite":"map at SparkPi.scala:34","Parent IDs":[0],"Storage Level":{"Use Disk":false,"Use Memory":false,"Deserialized":false,"Replication":1},"Number of Partitions":2,"Number of Cached Partitions":0,"Memory Size":0,"Disk Size":0},{"RDD ID":0,"Name":"ParallelCollectionRDD","Scope":"{\"id\":\"0\",\"name\":\"parallelize\"}","Callsite":"parallelize at SparkPi.scala:34","Parent IDs":[],"Storage Level":{"Use Disk":false,"Use Memory":false,"Deserialized":false,"Replication":1},"Number of Partitions":2,"Number of Cached Partitions":0,"Memory Size":0,"Disk Size":0}],"Parent IDs":[],"Details":"org.apache.spark.rdd.RDD.reduce(RDD.scala:984)\norg.apache.spark.examples.SparkPi$.main(SparkPi.scala:38)\norg.apache.spark.examples.SparkPi.main(SparkPi.scala)\nsun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)\nsun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)\nsun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)\njava.lang.reflect.Method.invoke(Method.java:498)\norg.apache.spark.deploy.SparkSubmit$.org$apache$spark$deploy$SparkSubmit$$runMain(SparkSubmit.scala:736)\norg.apache.spark.deploy.SparkSubmit$.doRunMain$1(SparkSubmit.scala:185)\norg.apache.spark.deploy.SparkSubmit$.submit(SparkSubmit.scala:210)\norg.apache.spark.deploy.SparkSubmit$.main(SparkSubmit.scala:124)\norg.apache.spark.deploy.SparkSubmit.main(SparkSubmit.scala)","Accumulables":[]}],"Stage IDs":[0],"Properties":{"spark.rdd.scope.noOverride":"true","spark.rdd.scope":"{\"id\":\"2\",\"name\":\"reduce\"}"}}

{"Event":"SparkListenerStageSubmitted","Stage Info":{"Stage ID":0,"Stage Attempt ID":0,"Stage Name":"reduce at SparkPi.scala:38","Number of Tasks":2,"RDD Info":[{"RDD ID":1,"Name":"MapPartitionsRDD","Scope":"{\"id\":\"1\",\"name\":\"map\"}","Callsite":"map at SparkPi.scala:34","Parent IDs":[0],"Storage Level":{"Use Disk":false,"Use Memory":false,"Deserialized":false,"Replication":1},"Number of Partitions":2,"Number of Cached Partitions":0,"Memory Size":0,"Disk Size":0},{"RDD ID":0,"Name":"ParallelCollectionRDD","Scope":"{\"id\":\"0\",\"name\":\"parallelize\"}","Callsite":"parallelize at SparkPi.scala:34","Parent IDs":[],"Storage Level":{"Use Disk":false,"Use Memory":false,"Deserialized":false,"Replication":1},"Number of Partitions":2,"Number of Cached Partitions":0,"Memory Size":0,"Disk Size":0}],"Parent IDs":[],"Details":"org.apache.spark.rdd.RDD.reduce(RDD.scala:984)\norg.apache.spark.examples.SparkPi$.main(SparkPi.scala:38)\norg.apache.spark.examples.SparkPi.main(SparkPi.scala)\nsun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)\nsun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)\nsun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)\njava.lang.reflect.Method.invoke(Method.java:498)\norg.apache.spark.deploy.SparkSubmit$.org$apache$spark$deploy$SparkSubmit$$runMain(SparkSubmit.scala:736)\norg.apache.spark.deploy.SparkSubmit$.doRunMain$1(SparkSubmit.scala:185)\norg.apache.spark.deploy.SparkSubmit$.submit(SparkSubmit.scala:210)\norg.apache.spark.deploy.SparkSubmit$.main(SparkSubmit.scala:124)\norg.apache.spark.deploy.SparkSubmit.main(SparkSubmit.scala)","Accumulables":[]},"Properties":{"spark.rdd.scope.noOverride":"true","spark.rdd.scope":"{\"id\":\"2\",\"name\":\"reduce\"}"}}

{"Event":"SparkListenerTaskStart","Stage ID":0,"Stage Attempt ID":0,"Task Info":{"Task ID":0,"Index":0,"Attempt":0,"Launch Time":1479957641443,"Executor ID":"driver","Host":"localhost","Locality":"PROCESS_LOCAL","Speculative":false,"Getting Result Time":0,"Finish Time":0,"Failed":false,"Accumulables":[]}}

{"Event":"SparkListenerTaskStart","Stage ID":0,"Stage Attempt ID":0,"Task Info":{"Task ID":1,"Index":1,"Attempt":0,"Launch Time":1479957645870,"Executor ID":"driver","Host":"localhost","Locality":"PROCESS_LOCAL","Speculative":false,"Getting Result Time":0,"Finish Time":1479957645996,"Failed":false,"Accumulables":[]}}

{"Event":"SparkListenerTaskEnd","Stage ID":0,"Stage Attempt ID":0,"Task Type":"ResultTask","Task End Reason":{"Reason":"Success"},"Task Info":{"Task ID":1,"Index":1,"Attempt":0,"Launch Time":1479957645870,"Executor ID":"driver","Host":"localhost","Locality":"PROCESS_LOCAL","Speculative":false,"Getting Result Time":0,"Finish Time":1479957645996,"Failed":false,"Accumulables":[{"ID":0,"Name":"internal.metrics.executorDeserializeTime","Update":45,"Value":45,"Internal":true,"Count Failed Values":true},{"ID":1,"Name":"internal.metrics.executorRunTime","Update":15,"Value":15,"Internal":true,"Count Failed Values":true},{"ID":2,"Name":"internal.metrics.resultSize","Update":945,"Value":945,"Internal":true,"Count Failed Values":true},{"ID":3,"Name":"internal.metrics.jvmGCTime","Update":4,"Value":4,"Internal":true,"Count Failed Values":true}]},"Task Metrics":{"Executor Deserialize Time":45,"Executor Run Time":15,"Result Size":945,"JVM GC Time":4,"Result Serialization Time":0,"Memory Bytes Spilled":0,"Disk Bytes Spilled":0,"Shuffle Read Metrics":{"Remote Blocks Fetched":0,"Local Blocks Fetched":0,"Fetch Wait Time":0,"Remote Bytes Read":0,"Local Bytes Read":0,"Total Records Read":0},"Shuffle Write Metrics":{"Shuffle Bytes Written":0,"Shuffle Write Time":0,"Shuffle Records Written":0},"Input Metrics":{"Bytes Read":0,"Records Read":0},"Output Metrics":{"Bytes Written":0,"Records Written":0},"Updated Blocks":[]}}

{"Event":"SparkListenerTaskEnd","Stage ID":0,"Stage Attempt ID":0,"Task Type":"ResultTask","Task End Reason":{"Reason":"Success"},"Task Info":{"Task ID":0,"Index":0,"Attempt":0,"Launch Time":1479957641443,"Executor ID":"driver","Host":"localhost","Locality":"PROCESS_LOCAL","Speculative":false,"Getting Result Time":0,"Finish Time":1479957646017,"Failed":false,"Accumulables":[{"ID":0,"Name":"internal.metrics.executorDeserializeTime","Update":3615,"Value":3660,"Internal":true,"Count Failed Values":true},{"ID":1,"Name":"internal.metrics.executorRunTime","Update":355,"Value":370,"Internal":true,"Count Failed Values":true},{"ID":2,"Name":"internal.metrics.resultSize","Update":1032,"Value":1977,"Internal":true,"Count Failed Values":true},{"ID":3,"Name":"internal.metrics.jvmGCTime","Update":184,"Value":188,"Internal":true,"Count Failed Values":true},{"ID":4,"Name":"internal.metrics.resultSerializationTime","Update":5,"Value":5,"Internal":true,"Count Failed Values":true}]},"Task Metrics":{"Executor Deserialize Time":3615,"Executor Run Time":355,"Result Size":1032,"JVM GC Time":184,"Result Serialization Time":5,"Memory Bytes Spilled":0,"Disk Bytes Spilled":0,"Shuffle Read Metrics":{"Remote Blocks Fetched":0,"Local Blocks Fetched":0,"Fetch Wait Time":0,"Remote Bytes Read":0,"Local Bytes Read":0,"Total Records Read":0},"Shuffle Write Metrics":{"Shuffle Bytes Written":0,"Shuffle Write Time":0,"Shuffle Records Written":0},"Input Metrics":{"Bytes Read":0,"Records Read":0},"Output Metrics":{"Bytes Written":0,"Records Written":0},"Updated Blocks":[]}}

{"Event":"SparkListenerStageCompleted","Stage Info":{"Stage ID":0,"Stage Attempt ID":0,"Stage Name":"reduce at SparkPi.scala:38","Number of Tasks":2,"RDD Info":[{"RDD ID":1,"Name":"MapPartitionsRDD","Scope":"{\"id\":\"1\",\"name\":\"map\"}","Callsite":"map at SparkPi.scala:34","Parent IDs":[0],"Storage Level":{"Use Disk":false,"Use Memory":false,"Deserialized":false,"Replication":1},"Number of Partitions":2,"Number of Cached Partitions":0,"Memory Size":0,"Disk Size":0},{"RDD ID":0,"Name":"ParallelCollectionRDD","Scope":"{\"id\":\"0\",\"name\":\"parallelize\"}","Callsite":"parallelize at SparkPi.scala:34","Parent IDs":[],"Storage Level":{"Use Disk":false,"Use Memory":false,"Deserialized":false,"Replication":1},"Number of Partitions":2,"Number of Cached Partitions":0,"Memory Size":0,"Disk Size":0}],"Parent IDs":[],"Details":"org.apache.spark.rdd.RDD.reduce(RDD.scala:984)\norg.apache.spark.examples.SparkPi$.main(SparkPi.scala:38)\norg.apache.spark.examples.SparkPi.main(SparkPi.scala)\nsun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)\nsun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)\nsun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)\njava.lang.reflect.Method.invoke(Method.java:498)\norg.apache.spark.deploy.SparkSubmit$.org$apache$spark$deploy$SparkSubmit$$runMain(SparkSubmit.scala:736)\norg.apache.spark.deploy.SparkSubmit$.doRunMain$1(SparkSubmit.scala:185)\norg.apache.spark.deploy.SparkSubmit$.submit(SparkSubmit.scala:210)\norg.apache.spark.deploy.SparkSubmit$.main(SparkSubmit.scala:124)\norg.apache.spark.deploy.SparkSubmit.main(SparkSubmit.scala)","Submission Time":1479957641244,"Completion Time":1479957646085,"Accumulables":[{"ID":2,"Name":"internal.metrics.resultSize","Value":1977,"Internal":true,"Count Failed Values":true},{"ID":4,"Name":"internal.metrics.resultSerializationTime","Value":5,"Internal":true,"Count Failed 
Values":true},{"ID":1,"Name":"internal.metrics.executorRunTime","Value":370,"Internal":true,"Count Failed Values":true},{"ID":3,"Name":"internal.metrics.jvmGCTime","Value":188,"Internal":true,"Count Failed Values":true},{"ID":0,"Name":"internal.metrics.executorDeserializeTime","Value":3660,"Internal":true,"Count Failed Values":true}]}}

{"Event":"SparkListenerJobEnd","Job ID":0,"Completion Time":1479957646131,"Job Result":{"Result":"JobSucceeded"}}

{"Event":"SparkListenerApplicationEnd","Timestamp":1479957646285}

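What on earth is all this? These lines are Spark's event log. Because `spark.eventLog.enabled` is `true`, every SparkListener event of the run is written as one JSON object per line to `spark.eventLog.dir`, which here is `hdfs://CentOSMaster:8020/historyserverforSpark`. That covers application start, job start, stage and task events, job end and application end. The history server reads these files from `spark.history.fs.logDirectory`, the same directory, to rebuild the web UI of finished applications.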
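To make the log a little less opaque, here is a minimal Python sketch that reads such a file line by line and works out how long the application, each job and each task took. It makes two assumptions. First, you have already copied the log out of HDFS, for example with `hdfs dfs -get`. Second, the file is named after the application ID (`local-1479957627473`). The function name `summarize_event_log` is purely illustrative. It only reads the `Timestamp`, `Submission Time`, `Completion Time`, `Launch Time` and `Finish Time` fields that appear in the events above.

```python
import json
from typing import Dict, Iterable, List, Optional, Tuple


def summarize_event_log(lines: Iterable[str]) -> Dict[str, object]:
    """Summarize a Spark event log given as an iterable of JSON lines.

    Hypothetical helper: only the timing fields used below are read,
    every other field of each event is ignored.
    """
    task_durations: List[Tuple[int, int]] = []  # (task id, duration in ms)
    job_submit: Dict[int, int] = {}             # job id -> submission time
    job_durations: Dict[int, int] = {}          # job id -> duration in ms
    app_start: Optional[int] = None
    app_end: Optional[int] = None

    for line in lines:
        line = line.strip()
        if not line:
            continue  # skip blank lines between events
        event = json.loads(line)
        kind = event["Event"]
        if kind == "SparkListenerApplicationStart":
            app_start = event["Timestamp"]
        elif kind == "SparkListenerApplicationEnd":
            app_end = event["Timestamp"]
        elif kind == "SparkListenerJobStart":
            job_submit[event["Job ID"]] = event["Submission Time"]
        elif kind == "SparkListenerJobEnd":
            job_id = event["Job ID"]
            job_durations[job_id] = event["Completion Time"] - job_submit[job_id]
        elif kind == "SparkListenerTaskEnd":
            info = event["Task Info"]
            task_durations.append(
                (info["Task ID"], info["Finish Time"] - info["Launch Time"])
            )

    app_ms = (
        app_end - app_start
        if app_start is not None and app_end is not None
        else None
    )
    return {"app_ms": app_ms, "job_ms": job_durations, "task_ms": sorted(task_durations)}
```

Usage: `with open("local-1479957627473", encoding="utf-8") as f: print(summarize_event_log(f))`. Applied to the events above, it should report 26309 ms for the whole application and 8350 ms for job 0. Task 0 takes 4574 ms and task 1 takes 126 ms. The log attributes most of task 0's time to `Executor Deserialize Time` (3615 ms).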

Result
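For reference, SparkPi estimates π by Monte Carlo sampling. It scatters random points over the square [-1, 1] × [-1, 1], counts how many land inside the unit circle, and multiplies the hit ratio by 4. The driver then prints a line starting with `Pi is roughly`. The event log has the same shape: a `parallelize` and a `map` (both at SparkPi.scala:34) feed a `reduce` (SparkPi.scala:38) over 2 partitions. Below is a plain-Python sketch of the same idea, not the Spark example itself. The function name `estimate_pi` and the defaults are illustrative assumptions: 2 slices, consistent with the 2 tasks in the log, and n = 100000 × slices.

```python
import random
from functools import reduce
from typing import List


def estimate_pi(n: int = 200_000, slices: int = 2, seed: int = 0) -> float:
    """Monte Carlo estimate of pi, split into `slices` partitions like SparkPi.

    Hypothetical plain-Python sketch; it runs sequentially, not on Spark.
    """
    rng = random.Random(seed)
    indices = range(1, n)  # n - 1 sample indices, like `1 until n` in Scala
    # Split the index range into `slices` roughly equal chunks ("partitions").
    chunk = -(-len(indices) // slices)  # ceiling division
    partitions: List[range] = [
        indices[i:i + chunk] for i in range(0, len(indices), chunk)
    ]

    def hit(_: int) -> int:
        # map step: draw a point in [-1, 1] x [-1, 1]; 1 if it is inside the unit circle
        x = rng.random() * 2 - 1
        y = rng.random() * 2 - 1
        return 1 if x * x + y * y <= 1 else 0

    # Each partition counts its own hits; the partial counts are then summed (reduce step).
    partial_counts = [sum(hit(i) for i in part) for part in partitions]
    count = reduce(lambda a, b: a + b, partial_counts)
    return 4.0 * count / (n - 1)
```

With a fixed seed, the result is reproducible and should land within a few thousandths of π. Increasing `n` tightens the estimate roughly in proportion to 1/√n, which is why the real example scales the sample count with the number of slices.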

2.
