python代码实现将PDF文件转为文本及其对应的音频

代码地址:

https://github.com/TiffinTech/python-pdf-audo

============================================

import pyttsx3,PyPDF2 #insert name of your pdf

pdfreader = PyPDF2.PdfReader(open('book.pdf', 'rb'))

speaker = pyttsx3.init() for page_num in range(len(pdfreader.pages)):

text = pdfreader.pages[page_num].extract_text()

clean_text = text.strip().replace('\n', ' ')

print(clean_text)

#name mp3 file whatever you would like

speaker.save_to_file(clean_text, 'story.mp3')

speaker.runAndWait() speaker.stop()

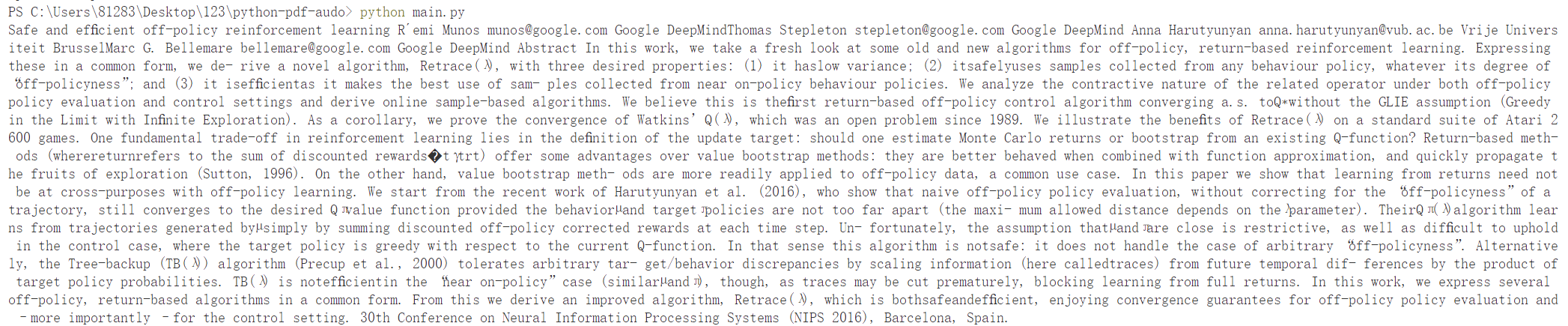

首先说下PDF文字提取的功能,大概还是可以凑合的,给出Demo:

提取的文字为:

Safe and efficient off-policy reinforcement learning R´emi Munos munos@google.com Google DeepMindThomas Stepleton stepleton@google.com Google DeepMind Anna Harutyunyan anna.harutyunyan@vub.ac.be Vrije Universiteit BrusselMarc G. Bellemare bellemare@google.com Google DeepMind Abstract In this work, we take a fresh look at some old and new algorithms for off-policy, return-based reinforcement learning. Expressing

these in a common form, we de- rive a novel algorithm, Retrace(λ), with three desired properties: (1) it haslow variance; (2) itsafelyuses samples collected from any behaviour policy, whatever its degree of

“off-policyness”; and (3) it isefficientas it makes the best use of sam- ples collected from near on-policy behaviour policies. We analyze the contractive nature of the related operator under both off-policy

policy evaluation and control settings and derive online sample-based algorithms. We believe this is thefirst return-based off-policy control algorithm converging a.s. toQ∗without the GLIE assumption (Greedy

in the Limit with Infinite Exploration). As a corollary, we prove the convergence of Watkins’ Q(λ), which was an open problem since 1989. We illustrate the benefits of Retrace(λ) on a standard suite of Atari 2600 games. One fundamental trade-off in reinforcement learning lies in the definition of the update target: should one estimate Monte Carlo returns or bootstrap from an existing Q-function? Return-based meth- ods (wherereturnrefers to the sum of discounted rewards� tγtrt) offer some advantages over value bootstrap methods: they are better behaved when combined with function approximation, and quickly propagate the fruits of exploration (Sutton, 1996). On the other hand, value bootstrap meth- ods are more readily applied to off-policy data, a common use case. In this paper we show that learning from returns need not be at cross-purposes with off-policy learning. We start from the recent work of Harutyunyan et al. (2016), who show that naive off-policy policy evaluation, without correcting for the “off-policyness” of a

trajectory, still converges to the desired Qπvalue function provided the behaviorµand targetπpolicies are not too far apart (the maxi- mum allowed distance depends on theλparameter). TheirQπ(λ)algorithm learns from trajectories generated byµsimply by summing discounted off-policy corrected rewards at each time step. Un- fortunately, the assumption thatµandπare close is restrictive, as well as difficult to uphold in the control case, where the target policy is greedy with respect to the current Q-function. In that sense this algorithm is notsafe: it does not handle the case of arbitrary “off-policyness”. Alternatively, the Tree-backup (TB(λ)) algorithm (Precup et al., 2000) tolerates arbitrary tar- get/behavior discrepancies by scaling information (here calledtraces) from future temporal dif- ferences by the product of target policy probabilities. TB(λ) is notefficientin the “near on-policy” case (similarµandπ), though, as traces may be cut prematurely, blocking learning from full returns. In this work, we express several

off-policy, return-based algorithms in a common form. From this we derive an improved algorithm, Retrace(λ), which is bothsafeandefficient, enjoying convergence guarantees for off-policy policy evaluation and – more importantly – for the control setting. 30th Conference on Neural Information Processing Systems (NIPS 2016), Barcelona, Spain.

上面这些这就是文字提取的效果,而对于音频转换这部分就效果实在是糟糕的很,转换的音频是无法贴合原文的,因此这里认为上面代码中这个PDF文字提取功能还是可以勉强用的,为以后项目需要做一定的技术积累,而这个音频转换就无法考虑使用了。

=============================================

对应的视频:

https://www.youtube.com/watch?v=LXsdt6RMNfY

python代码实现将PDF文件转为文本及其对应的音频的更多相关文章

- 深入学习Python解析并解密PDF文件内容的方法

前面学习了解析PDF文档,并写入文档的知识,那篇文章的名字为深入学习Python解析并读取PDF文件内容的方法. 链接如下:https://www.cnblogs.com/wj-1314/p/9429 ...

- 深入学习python解析并读取PDF文件内容的方法

这篇文章主要学习了python解析并读取PDF文件内容的方法,包括对学习库的应用,python2.7和python3.6中python解析PDF文件内容库的更新,包括对pdfminer库的详细解释和应 ...

- 将python代码打印成pdf

将python代码打印成pdf,打印出来很丑,完全不能看. mac下:pycharm 编辑器有print的功能,但是会提示: Error: No print service found. 所以需要一个 ...

- 利用Python将多个PDF文件合并

from PyPDF2 import PdfFileMerger import os files = os.listdir()#列出目录中的所有文件 merger = PdfFileMerger() ...

- 利用python第三方库提取PDF文件的表格内容

小爬最近接到一个棘手任务:需要提取手机话费电子发票PDF文件中的数据.接到这个任务的第一时间,小爬决定搜集各个地区各个时间段的电子发票文件,看看其中的差异点.粗略统计下来,PDF文件的表格框架是统一的 ...

- python从TXT创建PDF文件——reportlab

使用reportlab创建PDF文件电子书一般都是txt格式的,某些电子阅读器不能读取txt的文档,如DPT-RP1.因此本文从使用python实现txt到pdf的转换,并且支持生成目录,目录能够生成 ...

- 【转】Python编程: 多个PDF文件合并以及网页上自动下载PDF文件

1. 多个PDF文件合并1.1 需求描述有时候,我们下载了多个PDF文件, 但希望能把它们合并成一个PDF文件.例如:你下载的数个PDF文件资料或者电子发票,你可以使用python程序合并成一个PDF ...

- 【转】Python 深入浅出 - PyPDF2 处理 PDF 文件

实际应用中,可能会涉及处理 pdf 文件,PyPDF2 就是这样一个库,使用它可以轻松的处理 pdf 文件,它提供了读,割,合并,文件转换等多种操作. 文档地址:http://pythonhosted ...

- Python实现多个pdf文件合并

背景 由于工作原因,经常需要将多个pdf文件合并后打印,有时候上网找免费合并工具比较麻烦(公司内网不能访问公网),于是决定搞个小工具. 具体实现 需要安装 PyPDF2 pip install PyP ...

- 办公室文员必备python神器,将PDF文件表格转换成excel表格!

[阅读全文] 第三方库说明 # PDF读取第三方库 import pdfplumber # DataFrame 数据结果处理 import pandas as pd 初始化DataFrame数据对象 ...

随机推荐

- 什么Java注释

定义:用于解释说明程序的文字分类: 单行注释:格式: // 注释文字多行注释:格式: /* 注释文字 */ 文档注释:格式:/** 注释文字 */ 作用:在程序中,尤其是复杂的程序中,适当地加入注释可 ...

- 一个开源且全面的C#算法实战教程

前言 算法在计算机科学和程序设计中扮演着至关重要的角色,如在解决问题.优化效率.决策优化.实现计算机程序.提高可靠性以及促进科学融合等方面具有广泛而深远的影响.今天大姚给大家分享一个开源.免费.全面的 ...

- Docker PHP启用各种扩展笔记

注意 如果apt-get install命令无法安装依赖,请先执行apt update更新依赖信息 启用ZIP扩展 原作者地址:找不到了... # 安装依赖库 $ apt-get install -y ...

- golang执行命令 && 实时获取输出结果

背景 golang可以获取命令执行的输出结果,但要执行完才能够获取. 如果执行的命令是ssh,我们要实时获取,并执行相应的操作呢? 示例 func main() { user := "roo ...

- openEuler 20.04 TLS3 上的 Python3.11.9 源码一键构建安装

#! /bin/bash # filename: python-instaler.sh SOURCE_PATH=/usr/local/source # 下载源码包 mkdir -p $SOURCE_P ...

- 图片接口JWT鉴权实现

图片接口JWT鉴权实现 前言 之前做了个返回图片链接的接口,然后没做授权,然后今天键盘到了,也是用JWT来做接口的权限控制. 然后JTW网上已经有很多文章来说怎么用了,这里就不做多的解释了,如果不懂的 ...

- win11添加开机自启动

方法1 win + R 打开运行,输入 shell:startup 会打开一个文件夹 将想要启动的程序快捷方式放进文件夹 在设置里面搜索"启动",可以看到开机启动项,确认已经打开. ...

- 3568F-视频开发案例

- K210开发板学习笔记-点亮LED灯

1. 介绍 和 51 单片机非常像,实验的2个LED灯都是一头接了 +3.3v 电源,控制 LED灯亮的话需要 K210芯片 对应的管脚提供一个低电平. 管脚: 低电平-LED亮 高电平-LED灭 G ...

- yb课堂之用户注册登陆模块《六》

用户注册功能接口开发 注册接口开发 MD5加密工具类封装 UserMapper.xml <?xml version="1.0" encoding="UTF-8&qu ...