【转帖】You can now run a GPT-3-level AI model on your laptop, phone, and Raspberry Pi

https://arstechnica.com/information-technology/2023/03/you-can-now-run-a-gpt-3-level-ai-model-on-your-laptop-phone-and-raspberry-pi/

Things are moving at lightning speed in AI Land. On Friday, a software developer named Georgi Gerganov created a tool called "llama.cpp" that can run Meta's new GPT-3-class AI large language model, LLaMA, locally on a Mac laptop. Soon thereafter, people worked out how to run LLaMA on Windows as well. Then someone showed it running on a Pixel 6 phone, and next came a Raspberry Pi (albeit running very slowly).

If this keeps up, we may be looking at a pocket-sized ChatGPT competitor before we know it.

But let's back up a minute, because we're not quite there yet. (At least not today—as in literally today, March 13, 2023.) But what will arrive next week, no one knows.

Since ChatGPT launched, some people have been frustrated by the AI model's built-in limits that prevent it from discussing topics that OpenAI has deemed sensitive. Thus began the dream—in some quarters—of an open source large language model (LLM) that anyone could run locally without censorship and without paying API fees to OpenAI.

Open source solutions do exist (such as GPT-J), but they require a lot of GPU RAM and storage space. Other open source alternatives could not boast GPT-3-level performance on readily available consumer-level hardware.

Enter LLaMA, an LLM available in parameter sizes ranging from 7B to 65B (that's "B" as in "billion parameters," which are floating point numbers stored in matrices that represent what the model "knows"). LLaMA made a heady claim: that its smaller-sized models could match OpenAI's GPT-3, the foundational model that powers ChatGPT, in the quality and speed of its output. There was just one problem—Meta released the LLaMA code open source, but it held back the "weights" (the trained "knowledge" stored in a neural network) for qualified researchers only.

Advertisement

Flying at the speed of LLaMA

Meta's restrictions on LLaMA didn't last long, because on March 2, someone leaked the LLaMA weights on BitTorrent. Since then, there has been an explosion of development surrounding LLaMA. Independent AI researcher Simon Willison has compared this situation to the release of Stable Diffusion, an open source image synthesis model that launched last August. Here's what he wrote in a post on his blog:

It feels to me like that Stable Diffusion moment back in August kick-started the entire new wave of interest in generative AI—which was then pushed into over-drive by the release of ChatGPT at the end of November.

That Stable Diffusion moment is happening again right now, for large language models—the technology behind ChatGPT itself. This morning I ran a GPT-3 class language model on my own personal laptop for the first time!

AI stuff was weird already. It’s about to get a whole lot weirder.

Typically, running GPT-3 requires several datacenter-class A100 GPUs (also, the weights for GPT-3 are not public), but LLaMA made waves because it could run on a single beefy consumer GPU. And now, with optimizations that reduce the model size using a technique called quantization, LLaMA can run on an M1 Mac or a lesser Nvidia consumer GPU (although "llama.cpp" only runs on CPU at the moment—which is impressive and surprising in its own way).

Things are moving so quickly that it's sometimes difficult to keep up with the latest developments. (Regarding AI's rate of progress, a fellow AI reporter told Ars, "It's like those videos of dogs where you upend a crate of tennis balls on them. [They] don't know where to chase first and get lost in the confusion.")

For example, here's a list of notable LLaMA-related events based on a timeline Willison laid out in a Hacker News comment:

- February 24, 2023: Meta AI announces LLaMA.

- March 2, 2023: Someone leaks the LLaMA models via BitTorrent.

- March 10, 2023: Georgi Gerganov creates llama.cpp, which can run on an M1 Mac.

- March 11, 2023: Artem Andreenko runs LLaMA 7B (slowly) on a Raspberry Pi 4, 4GB RAM, 10 sec/token.

- March 12, 2023: LLaMA 7B running on NPX, a node.js execution tool.

- March 13, 2023: Someone gets llama.cpp running on a Pixel 6 phone, also very slowly.

- March 13, 2023, 2023: Stanford releases Alpaca 7B, an instruction-tuned version of LLaMA 7B that "behaves similarly to OpenAI's "text-davinci-003" but runs on much less powerful hardware.

Advertisement

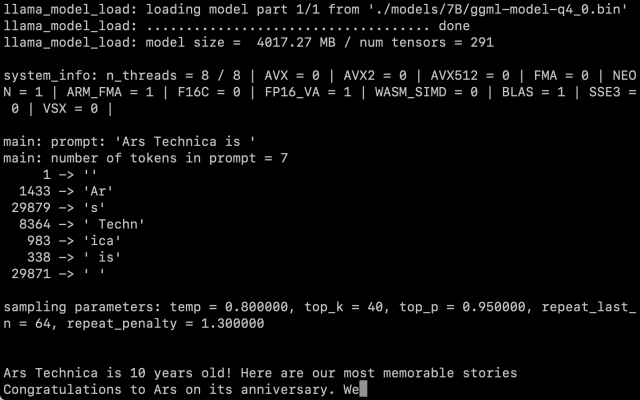

After obtaining the LLaMA weights ourselves, we followed Willison's instructions and got the 7B parameter version running on an M1 Macbook Air, and it runs at a reasonable rate of speed. You call it as a script on the command line with a prompt, and LLaMA does its best to complete it in a reasonable way.

There's still the question of how much the quantization affects the quality of the output. In our tests, LLaMA 7B trimmed down to 4-bit quantization was very impressive for running on a MacBook Air—but still not on par with what you might expect from ChatGPT. It's entirely possible that better prompting techniques might generate better results.

Also, optimizations and fine-tunings come quickly when everyone has their hands on the code and the weights—even though LLaMA is still saddled with some fairly restrictive terms of use. The release of Alpaca today by Stanford proves that fine tuning (additional training with a specific goal in mind) can improve performance, and it's still early days after LLaMA's release.

FURTHER READING

As of this writing, running LLaMA on a Mac remains a fairly technical exercise. You have to install Python and Xcode and be familiar with working on the command line. Willison has good step-by-step instructions for anyone who would like to attempt it. But that may soon change as developers continue to code away.

As for the implications of having this tech out in the wild—no one knows yet. While some worry about AI's impact as a tool for spam and misinformation, Willison says, "It’s not going to be un-invented, so I think our priority should be figuring out the most constructive possible ways to use it."

Right now, our only guarantee is that things will change rapidly.

【转帖】You can now run a GPT-3-level AI model on your laptop, phone, and Raspberry Pi的更多相关文章

- Raspberry Pi 3 --- Kernel Building and Run in A New Version Kernal

ABSTRACT There are two main methods for building the kernel. You can build locally on a Raspberry Pi ...

- OSMC Vs. OpenELEC Vs. LibreELEC – Kodi Operating System Comparison

Kodi's two slim-and-trim kid brothers LibreELEC and OpenELEC were once great solutions for getting t ...

- Jenkins报错Caused: java.io.IOException: Cannot run program "sh" (in directory "D:\Jenkins\Jenkins_home\workspace\jmeter_test"): CreateProcess error=2, 系统找不到指定的文件。

想在本地执行我的python文件,我本地搭建了一个Jenkins,使用了execute shell来运行我的脚本,发现报错 [jmeter_test] $ sh -xe D:\tomcat\apach ...

- FAT-fs (mmcblk0p1): Volume was not properly unmounted. Some data may be corrupt. Please run fsck.

/******************************************************************************** * FAT-fs (mmcblk0p ...

- (转载)如何创建一个以管理员权限运行exe 的快捷方式? How To Create a Shortcut That Lets a Standard User Run An Application as Administrator

How To Create a Shortcut That Lets a Standard User Run An Application as Administrator by Chris Hoff ...

- java.io.IOException: Cannot run program "/opt/jdk1.8.0_191/bin/java" (in directory "/var/lib/jenkins/workspace/xinguan"): error=2, No such file or directory

测试jenkins构建,报错如下 Parsing POMs Established TCP socket on 44463 [xinguan] $ /opt/jdk1.8.0_191/bin/java ...

- 【协作式原创】自己动手写docker之run代码解析

linux预备知识 urfave cli预备知识 准备工作 阿里云抢占式实例:centos7.4 每次实例释放后都要重新安装go wget https://dl.google.com/go/go1.1 ...

- FreeBSD 10 发布

发行注记:http://www.freebsd.org/releases/10.0R/relnotes.html 下文翻译中... 主要有安全问题修复.新的驱动与硬件支持.新的命名/选项.主要bug修 ...

- 【Xamarin挖墙脚系列:Mono项目的图标为啥叫Mono】

因为发起人大Boss :Miguel de lcaza 是西班牙人,喜欢猴子.................就跟Hadoop的创始人的闺女喜欢大象一样...................... 历 ...

- Win8.1重装win7或win10中途无法安装

一.有的是usb识别不了,因为新的机器可能都是USB3.0的,安装盘是Usb2.0的. F12更改系统BIOS设置,我改了三个地方: 1.设置启动顺序为U盘启动 2.关闭了USB3.0 control ...

随机推荐

- 温故而知新——MYSQL基本操作

相关连接: mysql和sqlserver的区别:https://www.cnblogs.com/vic-tory/p/12760197.html sqlserver基本操作:https://www. ...

- LeetCode 哈希表、映射、集合篇(242、49)

242. 有效的字母异位词 给定两个字符串 s 和 t ,编写一个函数来判断 t 是否是 s 的字母异位词. 示例 1: 输入: s = "anagram", t = " ...

- 华为云数据库GaussDB(for openGauss):初次见面,认识一下

摘要:本文从总体架构.主打场景.关键技术特性等方面进行介绍GaussDB(for openGauss). 1.背景介绍 3月16日,在华为云主办的GaussDB(for openGauss)系列技术第 ...

- 关于单元测试的那些事儿,Mockito 都能帮你解决

摘要:相信每一个程序猿在写Unit Test的时候都会碰到一些令人头疼的问题:如何测试一个rest接口:如何测试一个包含客户端调用服务端的复杂方法:如何测试一个包含从数据库读取数据的复杂方法...这些 ...

- 无法安装此app,因为无法验证其完整性 ,解决方案

最近有很多兄弟萌跟我反应"无法安装此app,因为无法验证其完整性 ",看来这个问题无法避免了,今天统一回复下,出现提示主要有以下几种可能 1.安装包不完整 首先申请我所有分享的破解 ...

- 火山引擎 DataLeap 通过中国信通院测评,数据管理能力获官方认可!

近日,火山引擎大数据研发治理套件 DataLeap 通过中国信通院第十五批"可信大数据"测评,在数据管理平台基础能力上获得认证. "可信大数据"产品能力 ...

- 基于rest_framework的ModelViewSet类编写登录视图和认证视图

背景:看了博主一抹浅笑的rest_framework认证模板,发现登录视图函数是基于APIView类封装. 优化:使用ModelViewSet类通过重写create方法编写登录函数. 环境:既然接触到 ...

- Python pydot与graphviz库在Anaconda环境的配置

本文介绍在Anaconda环境中,安装Python语言pydot与graphviz两个模块的方法. 最近进行随机森林(RF)的树的可视化操作,需要用到pydot与graphviz模块:因此记录 ...

- list求交集、并集、差集等//post或者get请求方法

package com.siebel.api.server.config.rest; import com.google.common.base.Joiner; import com.google.c ...

- scroll-view横向滚动的问题

最近在做一个小程序的项目,在写demo的时候,需要用到scroll-view来实现横向滚动的效果: 按照官方文档来写简直坑到家了,正确的写法如下: <scroll-view scroll-x=& ...