Data Replication in a Multi-Cloud Environment using Hadoop & Peer-to-Peer technologies

A few years ago, I started working on a project named Jxtadoop, which provides Hadoop Distributed File System (HDFS) capabilities on top of a peer-to-peer network. The initial goal was simply to load a file once into a Data Cloud that takes care of replication wherever the peers (data nodes) are deployed. I also wanted to avoid putting my data outside of my private network, to ensure complete data privacy.

After some time, it appeared that this solution is also a very good fit for data replication in a Multi-Cloud Environment. Any file (small or big) can be loaded into one cloud and is then automatically replicated to the other clouds. This makes multi-cloud Data Brokering easy and straightforward.

Hadoop is a very good candidate to provide those functionalities at the datacenter level. However, when moving to a multi-cloud environment, it is no longer viable unless a Virtual Private Data Network (VPDN) is built. This VPDN is created on top of a peer-to-peer network which provides redundancy, multi-path routing, privacy and encryption across all the clouds.

Concept

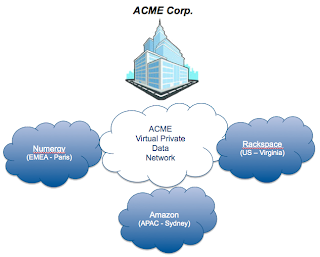

Let's assume we are in a true Multi-Cloud Broker environment. For this blog, I assume I have 3 clouds, each hosting multiple workloads (aka virtual servers). The picture depicts a classical configuration which will appear in the coming years, where businesses will source IT from different Cloud providers and consume IT in a true Service Broker model.

ACME Corp. has its headquarters based out of France and subsidiaries all over the world.

The new strategy is to source IT infrastructure from local service providers to deliver IT services directly to the local branches. There is no longer any intention to set up local IT.

Data replication, propagation and protection are a real issue in such a configuration, and reversibility also has to be configured.

Setting up this Virtual Private Data Network will support this Service Broker Strategy.

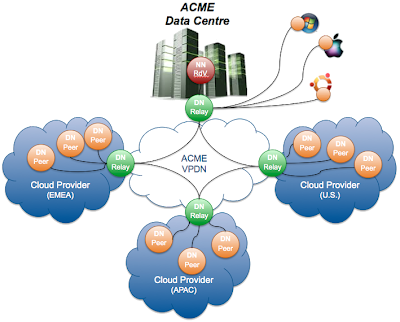

Conceptually, the solution is very simple. A master node (called Rendez-vous Namenode) is located in the HQ and is the brain of the VPDN. That's where all the logic is handled, such as data availability, multi-path data transfer and data placement. In each Cloud, there is a Relay Datanode which acts as the entry point for the Cloud. It plays a routing role, communicating directly with other Cloud relays, and also a buffering role for data transmission. To avoid any single point of failure (SPOF), all those peers can be deployed in a multi-instance mode.

Finally each workload instance (physical servers, virtual machines, containers...) hosts a Peer Datanode which is the actual endpoint for data storage and consumption.

Virtual Private Data Network Architecture

As explained in the previous section, the overall architecture relies on three main components.

- Namenode Rendez-Vous providing Data Transport Logic as well as Data placement and replication. It holds the overall view of the peer-to-peer network topology as well as the data cloud metadata. There is no data traffic going through this peer.

- Datanode Relay providing Data Storage as well as Data Transport. The local peers, which can communicate with each other through multicast, rely on the relays to communicate with remote peers located in other data clouds. A relay can also store data as a temporary buffer.

- Datanode Peer providing Data Storage to store data chunks on each server peer and even on remote desktop peers.
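The three roles above can be summarised in a small data model. This is only an illustrative sketch in Python, not Jxtadoop code: the `Role` and `Peer` names, the property names and the sample peer names are all hypothetical. The one rule it encodes comes from the description above: the Rendez-Vous Namenode carries no data traffic, while relays and peers both store data.

```python
from dataclasses import dataclass
from enum import Enum


class Role(Enum):
    """The three peer roles described in the architecture (names are illustrative)."""
    RENDEZVOUS_NAMENODE = "rendezvous-namenode"
    RELAY_DATANODE = "relay-datanode"
    PEER_DATANODE = "peer-datanode"


@dataclass(frozen=True)
class Peer:
    name: str
    role: Role
    cloud: str

    @property
    def stores_data(self) -> bool:
        # Relays (temporary buffer) and peers (data chunks) hold data;
        # the Rendez-Vous Namenode only holds topology and metadata.
        return self.role is not Role.RENDEZVOUS_NAMENODE

    @property
    def routes_between_clouds(self) -> bool:
        # Only relays act as the entry point of a cloud.
        return self.role is Role.RELAY_DATANODE


# Hypothetical deployment: one namenode in the HQ, one relay and one peer in a cloud.
hq_namenode = Peer("hq-rdv-1", Role.RENDEZVOUS_NAMENODE, "hq")
apac_relay = Peer("apac-relay-1", Role.RELAY_DATANODE, "apac")
apac_peer = Peer("apac-peer-1", Role.PEER_DATANODE, "apac")

for peer in (hq_namenode, apac_relay, apac_peer):
    print(peer.name, peer.stores_data, peer.routes_between_clouds)
```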

All the peers can be made redundant (multiple Rendez-Vous, multiple Relays ...) to increase the multi-path routing capability and avoid any SPOF. The data is then split into chunks of pre-defined size and dispatched across the Data Cloud. Data locality can be set to ensure there is one replica per Cloud or that replicas are limited to a Cloud (for example for data which must stay in a specific country).

All communications are multi-path, authenticated and encrypted. There is no need to set up VPNs between the Clouds, which could become contention points. Communication is either direct through multicast or goes through the best (shortest) routing path at the peer-to-peer layer.

There are 2 kinds of traffic flows: the RPC flow and the DATA flow.

- The RPC flow handles all the signaling required to operate the VPDN, such as routing, heartbeat, placement requests and updates. There is no business data on this flow, hence it is possible to have a set-up where data traffic is limited to a cloud or even a country while the commands are centrally managed.

- The DATA flow is the actual business data transferred over the wire. This flow can be local to a datacentre, using multicast wherever possible. It can also still be local but transit through the Cloud relay when multiple domains are involved. Finally, this flow can go through multiple relays. For example, a data block located on the Windows PC gets replicated to the APAC Cloud by going through 2 relays (the DC one + the APAC Cloud one).
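The multi-relay case can be illustrated with a small routing sketch. It assumes that "best (shortest)" means the fewest hops and uses a plain breadth-first search; the real routing happens inside the peer-to-peer layer and may weigh paths differently. The `shortest_route` function, the link list and the node names are hypothetical.

```python
from collections import deque


def shortest_route(links, source, target):
    """Breadth-first search: return the path with the fewest hops, or None."""
    graph = {}
    for a, b in links:  # links are bidirectional
        graph.setdefault(a, set()).add(b)
        graph.setdefault(b, set()).add(a)
    if source not in graph or target not in graph:
        return None
    previous = {source: None}
    queue = deque([source])
    while queue:
        node = queue.popleft()
        if node == target:
            path = []
            while node is not None:  # walk back to the source
                path.append(node)
                node = previous[node]
            return path[::-1]
        for neighbour in sorted(graph[node]):
            if neighbour not in previous:
                previous[neighbour] = node
                queue.append(neighbour)
    return None


# Hypothetical topology: the Rendez-Vous Namenode is deliberately absent,
# since no data traffic goes through it.
LINKS = [
    ("windows-pc", "dc-relay"),
    ("dc-relay", "emea-relay"),
    ("dc-relay", "apac-relay"),
    ("emea-relay", "apac-relay"),
    ("apac-relay", "apac-peer"),
]

route = shortest_route(LINKS, "windows-pc", "apac-peer")
relays_crossed = [hop for hop in route if hop.endswith("-relay")]
print(route, len(relays_crossed))
```

In this topology the block leaves the Windows PC, crosses the DC relay and the APAC relay, and lands on the APAC peer, which matches the two-relay example above.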

Benefits

This new approach brings many benefits for a multi-Cloud environment and for companies willing to operate their IT with an IT Service Broker model.

- Redundancy : the data is automatically replicated in the Clouds wherever needed ;

- Availability : the data is always available with the use of multiple replicas (3, 5, 7...) ;

- Efficiency : quick deployment, quick capacity expansion ;

- Simplicity : load once on a peer and automated replication ;

- Future-proof : leverage big data technologies ;

- Portable : can run on any server and desktop platforms supporting Java 7 ;

- Confidentiality : all data transfers are encrypted and authenticated ;

- Locality : data can be located in a specific Cloud and not leak outside ;
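To put a rough number on the availability benefit, here is a back-of-the-envelope sketch. It assumes that replicas fail independently and that each one is reachable with the same probability, which is a simplification (replicas in the same cloud tend to fail together). The `availability` function is illustrative and not part of Jxtadoop.

```python
def availability(per_replica: float, replicas: int) -> float:
    """Probability that at least one replica is reachable,
    assuming independent failures (a simplifying assumption)."""
    if not 0.0 <= per_replica <= 1.0:
        raise ValueError("per_replica must be between 0 and 1")
    if replicas < 1:
        raise ValueError("at least one replica is required")
    return 1.0 - (1.0 - per_replica) ** replicas


for count in (1, 3, 5, 7):
    print(count, availability(0.9, count))
```

Under these assumptions, replicas that are each reachable 90% of the time give about 99.9% availability with 3 copies, and every extra copy shrinks the remaining unavailability by another factor of ten.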

Setting up your own environment

The technology used to create this Virtual Private Data Network can be found here. The testing described above has been done using a physical environment from OVH in France to simulate the HQ. 3 clouds have been used: Numergy (EMEA - France), Rackspace (U.S. - Virginia) and Amazon (APAC - Australia).

The testing leveraged Docker to create multiple Datanode peers on a single VM with a complex network topology (see 1 & 2). The associated containers can be found on the Docker main repository :

- Namenode Rendez-Vous (jxtadoop/namenode)

- Datanode Relay (jxtadoop/relay)

- Datanode Peer (jxtadoop/datanode)

Desktop clients have been installed on Mac OS, Windows and Linux. Just ensure you use Windows 7.

Conclusion

This concludes my Jxtadoop project which will get released as version 1.0.0 later this month. I'll provide a SaaS set-up with a Rendez-vous Namenode and a Relay Peer for quick testing.

Next ideas :

- Roll-out StandaloneHDFSUI for Jxtadoop ;

- Release FileSharing capability based on Jxtadoop ;

- PaaS/SaaS set-up for Jxtadoop with Docker and CloudFoundry ;

- Think about magic combination of App Virtualization (Docker), Network Virtualization (Open vSwitch) and Data Virtualization (Jxtadoop) ;
