UNDERSTANDING THE GAUSSIAN DISTRIBUTION

Randomness is so present in our reality that we tend to take it for granted. Most of the phenomena that surround us are generated by random processes. As a result, our brain is very good at recognising random patterns, and even better at spotting phenomena that should be random but actually aren't. And this is when problems arise. Most game engines, such as Unity or GameMaker, simply lack the tools to generate realistic random numbers. This tutorial introduces the Gaussian distribution, which plays a fundamental role in statistics because it is at the heart of many random phenomena in our everyday life.

Introduction

Let's imagine you want to generate some random points on a plane. They can be enemies, trees, or any other entity you might think of. The easiest way to do it in Unity is:

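```csharp
// Assumes min and max are floats defined elsewhere (e.g. fields of this MonoBehaviour)
Vector3 position = new Vector3();
position.x = Random.Range(min, max); // uniformly distributed between min and max
position.y = Random.Range(min, max);
transform.position = position;
```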

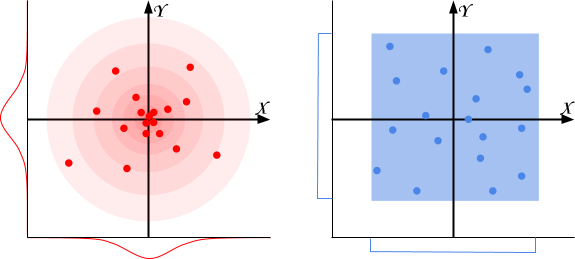

Using Random.Range will produce points distributed like those in the blue box of the figure. Some points might be closer together than others, but overall they are spread across the whole area with the same density: we find approximately as many points on the left as on the right.

Many natural behaviours don't follow this distribution. Instead, they look like the diagram on the left: these phenomena are Gaussian distributed. Rule of thumb: when a natural phenomenon tends to cluster around a certain value, the Gaussian distribution could be the way to go. For instance:

- Damage: the amount of damage an enemy or a weapon inflicts;

- Particle density: the number of particles (sparkles, dust, …) around a particular object;

- Grass and trees: how grass and trees are distributed in a biome; for instance, the position of plants near a lake, or the scatter of rocks around a mountain;

- Enemy generation: if you want to generate enemies with random stats, you can design an “average” enemy and use the Gaussian distribution to get natural variations out of it.
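As a rough illustration of the enemy-generation idea, here is a minimal sketch in Python (used instead of Unity C# so it runs standalone). It relies on the standard library's `random.Random.gauss(mu, sigma)`; the `AVERAGE_ENEMY` table, its stat names and their spreads are hypothetical values chosen only for this example, and flooring each stat is just one possible way to deal with the unbounded tails of a Gaussian.

```python
import random

# Hypothetical "average" enemy: each stat maps to (mean, standard deviation).
# These numbers are illustrative assumptions, not values from the original post.
AVERAGE_ENEMY = {
    "health": (100.0, 15.0),
    "speed": (5.0, 0.5),
    "damage": (12.0, 2.0),
}


def spawn_enemy(rng: random.Random) -> dict:
    """Draw one enemy whose stats vary normally around the average enemy."""
    enemy = {}
    for stat, (mean, std_dev) in AVERAGE_ENEMY.items():
        value = rng.gauss(mean, std_dev)
        # A Gaussian sample is unbounded, so floor it at 10% of the mean
        # to make sure no stat can ever be zero or negative.
        enemy[stat] = max(value, 0.1 * mean)
    return enemy


rng = random.Random(42)  # fixed seed so the example is reproducible
enemies = [spawn_enemy(rng) for _ in range(10_000)]
avg_health = sum(e["health"] for e in enemies) / len(enemies)
print(f"average health over {len(enemies)} enemies: {avg_health:.1f}")
```

Most generated enemies end up close to the average one, while very strong or very weak enemies are rare.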

This tutorial will explain what exactly a Gaussian distribution is, and why it appears in all the phenomena mentioned above.

Understanding uniform distributions

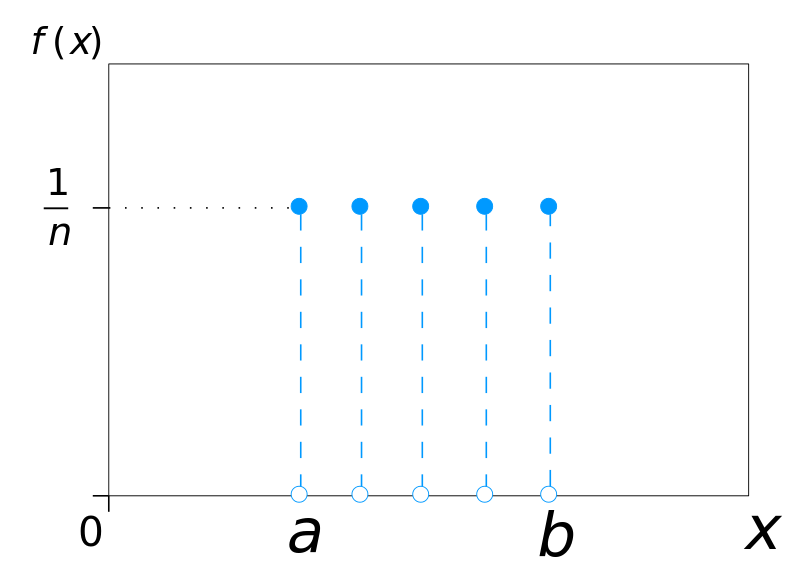

When you throw a die, there is one chance out of six of getting a 6. In fact, every face of the die has the same chance. Statistically speaking, throwing a die samples from a discrete uniform distribution (left). Every discrete uniform distribution can be intuitively represented as a die with $n$ faces, each of which has the same probability $\frac{1}{n}$ of being chosen. A function such as Random.Range, instead, returns values which are continuously uniformly distributed (right) over a particular range (for instance, between 0 and 1).


In many cases, uniform distributions are a good choice. Choosing a random card from a deck, for instance, can be modelled perfectly with Random.Range.
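The following Python sketch, standing in for Unity's `Random.Range`, samples from both kinds of uniform distribution. It assumes Python's `random` module: `randint(1, 6)` includes both ends, `randrange(52)` excludes 52 (much like Unity's integer overload of `Random.Range`, whose maximum is exclusive), and `random()` returns floats in $[0, 1)$.

```python
import random
from collections import Counter

rng = random.Random(0)  # fixed seed for reproducibility
ROLLS = 60_000

# Discrete uniform: a fair six-sided die, each face with probability 1/6.
counts = Counter(rng.randint(1, 6) for _ in range(ROLLS))
for face in range(1, 7):
    print(f"face {face}: {counts[face] / ROLLS:.3f}")  # each close to 1/6

# Discrete uniform: drawing one card (index 0..51) from a 52-card deck.
card = rng.randrange(52)

# Continuous uniform on [0, 1): intervals of equal width are equally likely.
samples = [rng.random() for _ in range(ROLLS)]
below_half = sum(1 for s in samples if s < 0.5)
print(f"fraction below 0.5: {below_half / ROLLS:.3f}")  # close to 0.5
```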

What is a Gaussian distribution

There are other phenomena in the natural domain which don't follow a uniform distribution. If you measure the height of all the people in a room, you'll find that certain ranges occur more often than others. Most people will have a similar height, while extremely tall or short people are rare. If you randomly choose a person from that room, their height is likely to be close to the average height. These phenomena typically follow a distribution called the Gaussian (or normal) distribution. In a Gaussian distribution, the probability density of a value $x$ is given by:

$$f(x) = \frac{1}{\sigma\sqrt{2\pi}}\, e^{-\frac{(x-\mu)^2}{2\sigma^2}}$$

If a discrete uniform distribution is fully defined by its parameter $n$, a Gaussian distribution is defined by two parameters, $\mu$ and $\sigma$: the mean and the standard deviation (its square, $\sigma^2$, is the variance). The mean translates the curve left or right, centring it on the value which is expected to occur most frequently. The standard deviation, as the name suggests, indicates how far values typically deviate from the mean: a larger $\sigma$ gives a wider, flatter bell.

When a variable $X$ is generated by a phenomenon which is Gaussian distributed, it is usually indicated as:

$$X \sim \mathcal{N}(\mu, \sigma^2)$$
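To get a feel for the two parameters, here is a small Python sketch that evaluates the Gaussian density $f(x) = \frac{1}{\sigma\sqrt{2\pi}} e^{-\frac{(x-\mu)^2}{2\sigma^2}}$ directly. The function name `gaussian_pdf` is hypothetical, and the integration check uses a simple midpoint rule over $\mu \pm 10\sigma$, an arbitrary but generous cut-off.

```python
import math


def gaussian_pdf(x: float, mu: float, sigma: float) -> float:
    """Probability density of N(mu, sigma^2) at x."""
    coeff = 1.0 / (sigma * math.sqrt(2.0 * math.pi))
    exponent = -((x - mu) ** 2) / (2.0 * sigma ** 2)
    return coeff * math.exp(exponent)


# The mean moves the peak; a larger standard deviation lowers and widens it.
for mu, sigma in [(0.0, 1.0), (2.0, 1.0), (0.0, 2.0)]:
    peak = gaussian_pdf(mu, mu, sigma)
    print(f"mu={mu}, sigma={sigma}: peak density {peak:.4f}")

# Numerically check that the density integrates to about 1.
mu, sigma, steps = 0.0, 1.0, 10_000
lower = mu - 10.0 * sigma
width = 20.0 * sigma / steps
area = width * sum(
    gaussian_pdf(lower + (i + 0.5) * width, mu, sigma) for i in range(steps)
)
print(f"area under the curve: {area:.6f}")
```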

Converging to a Gaussian distribution

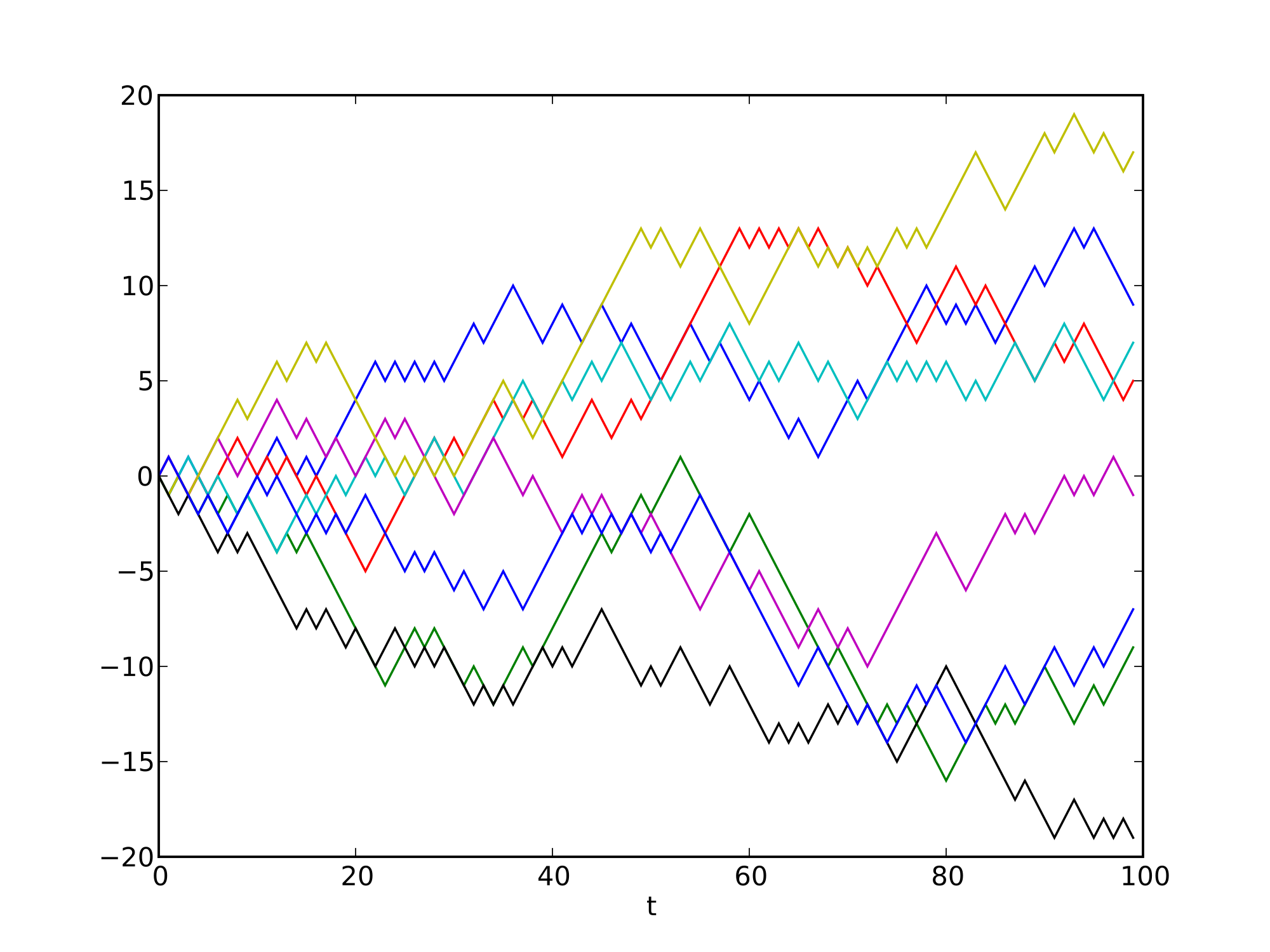

Surprisingly enough, the equation for a Gaussian distribution can be derived from a uniform distribution. Despite looking quite different, they are deeply connected. Let's imagine a scenario in which a drunk man has to walk along a straight line. At every step, he has a 50% chance of moving left and a 50% chance of moving right. Where is the drunk man most likely to be after 5 steps? And after 100?

Since every step has the same probability, all of the paths shown above are equally likely to occur: always going left is as likely as alternating left and right the entire time. However, there is only one path which leads to his extreme left, while there are many more paths leading to the centre (more details here). For this reason, the drunk man is expected to stay closer to the centre. Given enough drunk men and enough steps, their final positions approximate a Gaussian curve.
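A minimal Python simulation of the drunk men, assuming each step moves exactly one unit left ($-1$) or right ($+1$) with equal probability. For such a walk, the final position after $n$ steps has mean $0$ and variance $n$ (a standard result, not derived in this post), so the simulated values should land close to those. The number of walkers and the seed are arbitrary.

```python
import random
from collections import Counter


def walk(steps: int, rng: random.Random) -> int:
    """Final position of one drunk man: each step is -1 (left) or +1 (right), 50/50."""
    return sum(rng.choice((-1, 1)) for _ in range(steps))


rng = random.Random(1)  # fixed seed so runs are reproducible
STEPS, WALKERS = 100, 10_000
finals = [walk(STEPS, rng) for _ in range(WALKERS)]

mean = sum(finals) / WALKERS
variance = sum((x - mean) ** 2 for x in finals) / WALKERS
print(f"mean final position: {mean:.2f} (expected about 0)")
print(f"variance: {variance:.1f} (expected about {STEPS})")

# Crude text histogram: positions near 0 are far more common than the extremes.
# With an even number of steps, every final position is an even number.
histogram = Counter(finals)
for position in range(-30, 31, 10):
    print(f"{position:+4d} {'#' * (histogram[position] // 20)}")
```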

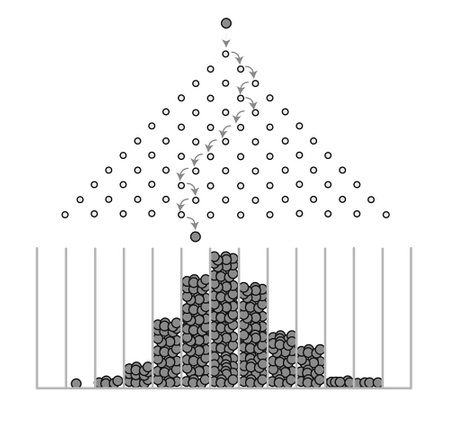

This concept can be explored without using actual drunk men. In the 19th century, Francis Galton came up with a device called the bean machine: an old-fashioned pachinko in which balls naturally arrange themselves into the typical Gaussian bell.

This is related to the idea behind the central limit theorem: the sum (or average) of a sufficiently large number of independent, identically distributed random variables with finite variance approximately follows a Gaussian distribution, regardless of the distribution of each individual variable.
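A quick Python sketch of the theorem at work, starting from a distribution that is clearly not Gaussian: each sample is the sum of 12 independent uniform numbers in $[0, 1)$. The choice of 12 terms is an assumption made for convenience, since each term has variance $\frac{1}{12}$ and the sum therefore has mean 6 and variance 1. If the sums are close to Gaussian, about 68.3% of them should fall within one standard deviation of the mean and about 95.4% within two.

```python
import random
import statistics

rng = random.Random(7)  # fixed seed for reproducibility
TERMS, SAMPLES = 12, 50_000

# Each sample adds 12 uniform numbers from [0, 1): a flat, clearly non-Gaussian source.
sums = [sum(rng.random() for _ in range(TERMS)) for _ in range(SAMPLES)]
mu = statistics.fmean(sums)
sigma = statistics.pstdev(sums)

# Fraction of sums within one and two standard deviations of their mean.
within_1 = sum(1 for s in sums if abs(s - mu) < sigma) / SAMPLES
within_2 = sum(1 for s in sums if abs(s - mu) < 2 * sigma) / SAMPLES
print(f"mean {mu:.3f} (expected 6), std dev {sigma:.3f} (expected 1)")
print(f"within 1 sigma: {within_1:.3f}, within 2 sigma: {within_2:.3f}")
```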

Deriving the Gaussian distribution

If we look back at the bean machine, we can ask a very simple question: what is the probability for a ball to end up in a certain column? The answer depends on the number of right (or left) turns the ball makes. It is important to notice that the order doesn't really matter: both (left, left, right) and (right, left, left) lead to the same column. So the question becomes: how many left turns $k$ does the ball make over $n$ iterations? Calling $p$ the chance of turning left at each turn ($p = \frac{1}{2}$ for the bean machine, since there is a 50% chance of going left or right), this can be calculated by combining the chance of turning left $k$ times with the chance of turning right $n-k$ times: $p^k (1-p)^{n-k}$. This form, however, accounts for only a single path: the one with $k$ left turns followed by $n-k$ right turns. We need to take into account all the possible orderings, since they all lead to the same result. Without going too much into detail, the number of such orderings is described by the binomial coefficient $\binom{n}{k}$:

$$P(k) = \binom{n}{k} p^k (1-p)^{n-k}$$

This is known as the binomial distribution, and it answers the question of how likely it is to obtain $k$ successes out of $n$ independent experiments, each with the same probability $p$ of success.
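The binomial distribution $P(k) = \binom{n}{k} p^k (1-p)^{n-k}$ can be checked against a simulated bean machine. In this Python sketch, `binomial_pmf` and `drop_ball` are hypothetical helper names, a ball is assumed to turn left with probability 0.5 at each of 5 rows, and $k$ counts its left turns (so $k$ also identifies the column it lands in).

```python
import math
import random
from collections import Counter


def binomial_pmf(k: int, n: int, p: float) -> float:
    """Probability of exactly k successes (left turns) in n independent trials."""
    return math.comb(n, k) * p ** k * (1 - p) ** (n - k)


def drop_ball(rows: int, rng: random.Random) -> int:
    """Simulate one ball in a bean machine and return how many left turns it took."""
    return sum(1 for _ in range(rows) if rng.random() < 0.5)


ROWS, BALLS = 5, 100_000
rng = random.Random(3)  # fixed seed for reproducibility
columns = Counter(drop_ball(ROWS, rng) for _ in range(BALLS))

for k in range(ROWS + 1):
    simulated = columns[k] / BALLS
    exact = binomial_pmf(k, ROWS, 0.5)
    print(f"k={k}: simulated {simulated:.4f}, binomial {exact:.4f}")
```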

Even so, it still doesn't look very Gaussian at all. The idea is to let $n$ tend to infinity, switching from a discrete to a continuous distribution. In order to do that, we first need to expand the binomial coefficient using its factorial form:

$$\binom{n}{k} = \frac{n!}{k!\,(n-k)!}$$

then approximate the factorial terms using Stirling's formula:

$$n! \approx \sqrt{2\pi n}\left(\frac{n}{e}\right)^n$$
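How good is Stirling's formula, $n! \approx \sqrt{2\pi n}\,(n/e)^n$? This short Python sketch compares it with the exact factorial. The ratio between the two slowly approaches 1 as $n$ grows (the relative error shrinks roughly like $\frac{1}{12n}$). The helper name `stirling` is hypothetical.

```python
import math


def stirling(n: int) -> float:
    """Stirling's approximation of n!: sqrt(2*pi*n) * (n/e)**n."""
    return math.sqrt(2 * math.pi * n) * (n / math.e) ** n


for n in (1, 5, 10, 50, 100):
    ratio = math.factorial(n) / stirling(n)
    print(f"n={n:3d}: exact / approx = {ratio:.6f}")
```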

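The rest of the derivation is mostly mechanical and incredibly tedious; if you are interested, you can find it here. As a result, for large $n$ we obtain:

$$\binom{n}{k} p^k (1-p)^{n-k} \approx \frac{1}{\sigma\sqrt{2\pi}}\, e^{-\frac{(k-\mu)^2}{2\sigma^2}}$$

with $\mu = np$ and $\sigma^2 = np(1-p)$.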
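The approximation can also be checked numerically. This Python sketch compares the binomial probabilities with the Gaussian density evaluated at the same integer $k$, using $\mu = np$ and $\sigma^2 = np(1-p)$, and reports the largest gap for increasing $n$. The helper names are hypothetical, and $n$ is kept at 1000 or below because `math.comb` returns exact integers that must still fit in a float when multiplied by $p^k$.

```python
import math


def binomial_pmf(k: int, n: int, p: float) -> float:
    """Exact binomial probability of k successes in n trials."""
    return math.comb(n, k) * p ** k * (1 - p) ** (n - k)


def gaussian_pdf(x: float, mu: float, sigma: float) -> float:
    """Gaussian density N(mu, sigma^2) at x."""
    return math.exp(-((x - mu) ** 2) / (2 * sigma ** 2)) / (sigma * math.sqrt(2 * math.pi))


def max_error(n: int, p: float) -> float:
    """Largest gap between the binomial pmf and its Gaussian approximation."""
    mu = n * p
    sigma = math.sqrt(n * p * (1 - p))
    return max(abs(binomial_pmf(k, n, p) - gaussian_pdf(k, mu, sigma)) for k in range(n + 1))


for n in (10, 100, 1000):
    print(f"n={n:4d}: max |binomial - gaussian| = {max_error(n, 0.5):.2e}")
```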

Conclusion

This loosely explains why so many recurring, independent “natural” phenomena are, indeed, normally distributed. We are so surrounded by this distribution that our brain is incredibly good at recognising patterns which don't follow it. This is why, especially in games, it is important to understand that some aspects must follow a normal distribution in order to be believable.

In the next post I’ll explore how to generate Gaussian distributed numbers, and how they can be used safely in your game.

- Part 1: Understanding the Gaussian distribution

- Part 2: How to generate Gaussian distributed numbers

