BatchNorm caffe源码

1、计算的均值和方差是channel的

2、test/predict 或者use_global_stats的时候,直接使用moving average

use_global_stats 表示是否使用全部数据的统计值(该数据实在train 阶段通过moving average 方法计算得到)训练阶段设置为 fasle, 表示通过当前的minibatch 数据计算得到, test/predict 阶段使用 通过全部数据计算得到的统计值

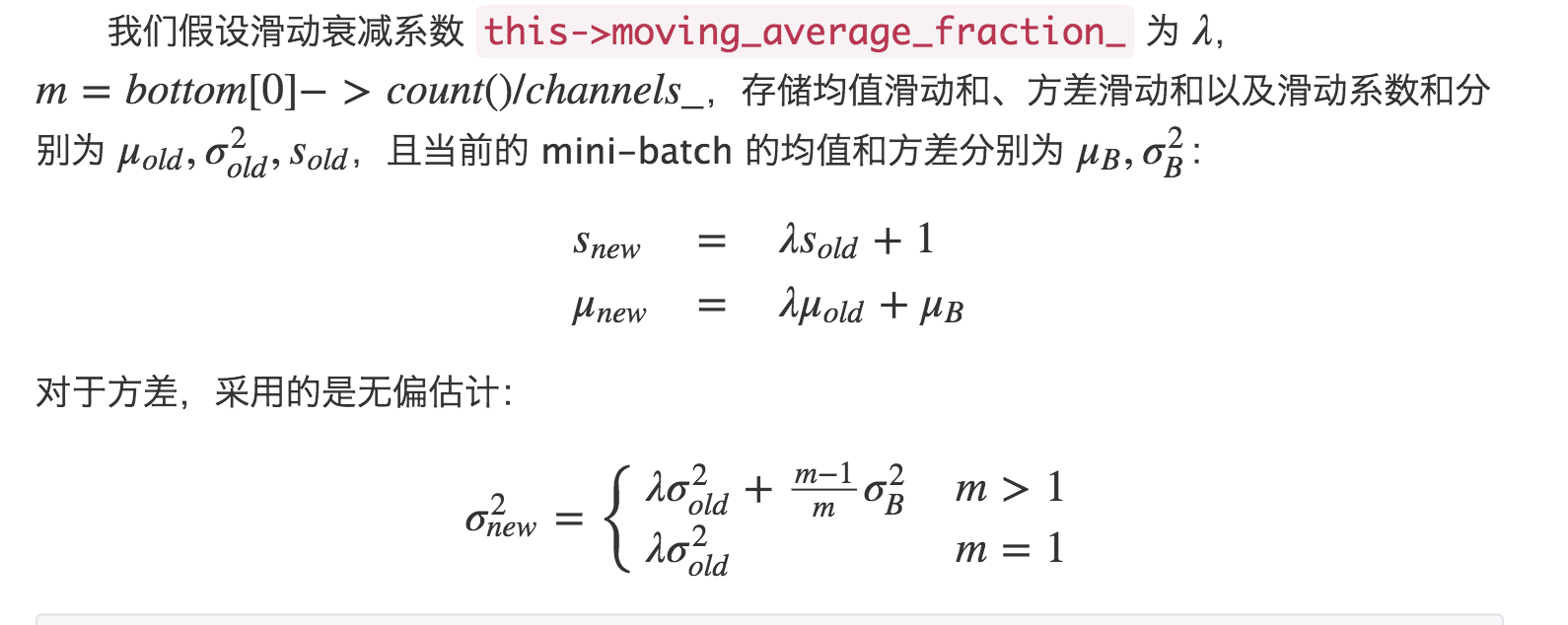

那什么是 moving average 呢:

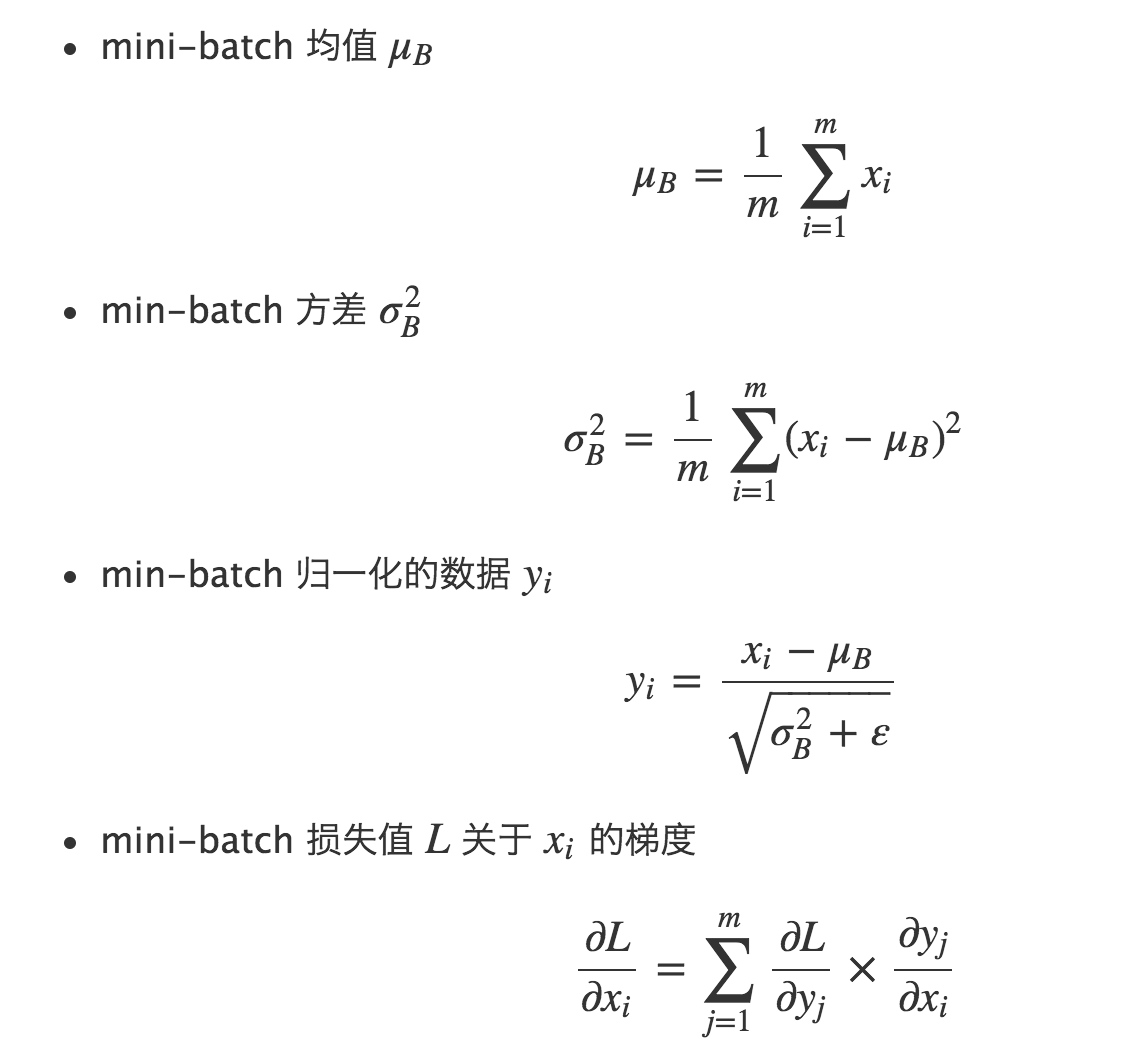

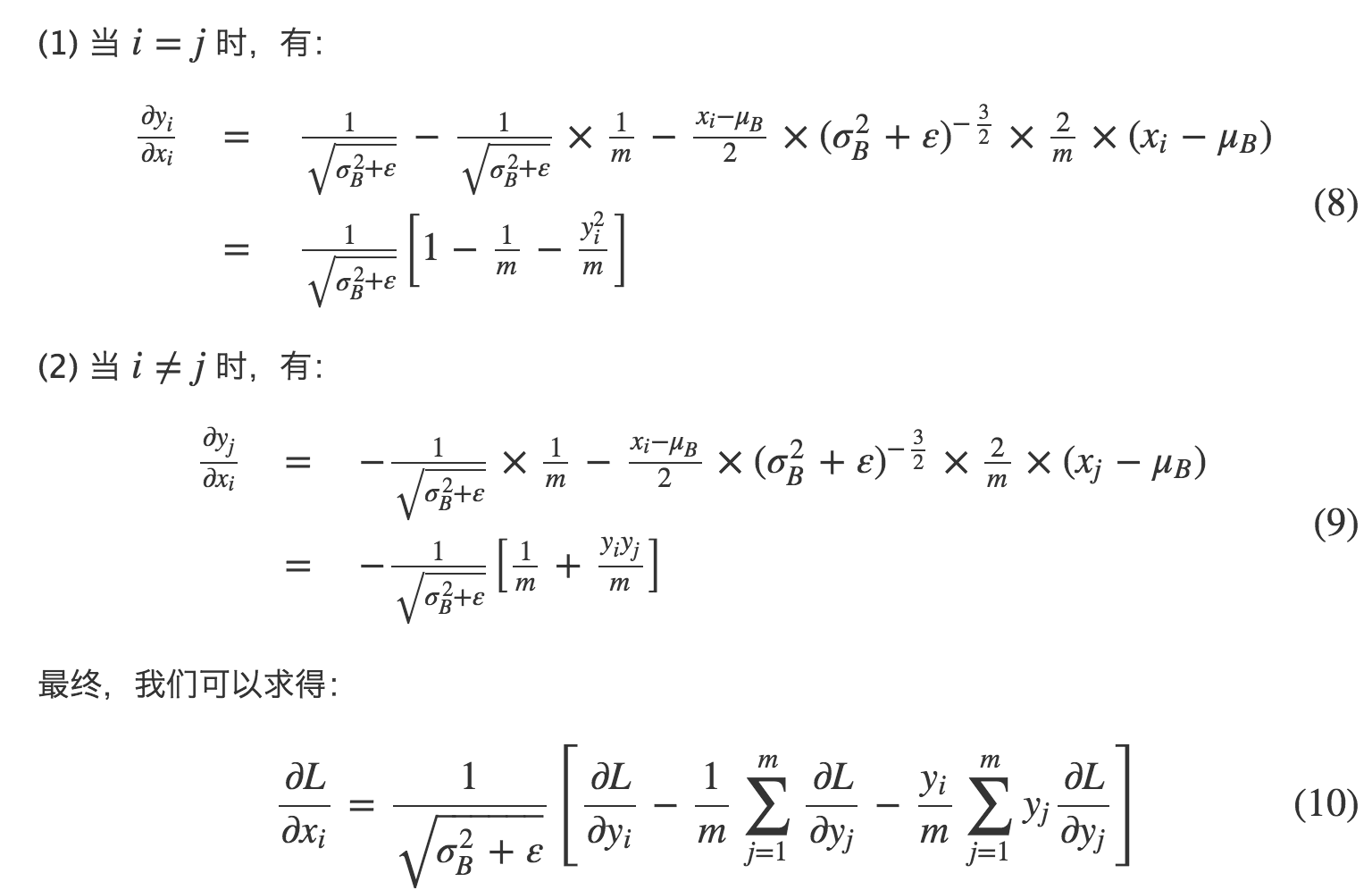

反向传播:

源码:(注:caffe_cpu_scale 是y=alpha*x ,这里面求滑动均值时候,alpha是滑动系数和的倒数,x是滑动均值和

template <typename Dtype>

void BatchNormLayer<Dtype>::Forward_cpu(const vector<Blob<Dtype>*>& bottom,

const vector<Blob<Dtype>*>& top) {

const Dtype* bottom_data = bottom[0]->cpu_data();

Dtype* top_data = top[0]->mutable_cpu_data();

int num = bottom[0]->shape(0);

int spatial_dim = bottom[0]->count()/(bottom[0]->shape(0)*channels_); if (bottom[0] != top[0]) {

caffe_copy(bottom[0]->count(), bottom_data, top_data);

} if (use_global_stats_) {

// use the stored mean/variance estimates.

const Dtype scale_factor = this->blobs_[2]->cpu_data()[0] == 0 ?

0 : 1 / this->blobs_[2]->cpu_data()[0];

caffe_cpu_scale(variance_.count(), scale_factor,

this->blobs_[0]->cpu_data(), mean_.mutable_cpu_data());

caffe_cpu_scale(variance_.count(), scale_factor,

this->blobs_[1]->cpu_data(), variance_.mutable_cpu_data());

} else {

// compute mean

caffe_cpu_gemv<Dtype>(CblasNoTrans, channels_ * num, spatial_dim,

1. / (num * spatial_dim), bottom_data,

spatial_sum_multiplier_.cpu_data(), 0.,

num_by_chans_.mutable_cpu_data());

caffe_cpu_gemv<Dtype>(CblasTrans, num, channels_, 1.,

num_by_chans_.cpu_data(), batch_sum_multiplier_.cpu_data(), 0.,

mean_.mutable_cpu_data());

} // subtract mean

caffe_cpu_gemm<Dtype>(CblasNoTrans, CblasNoTrans, num, channels_, 1, 1,

batch_sum_multiplier_.cpu_data(), mean_.cpu_data(), 0.,

num_by_chans_.mutable_cpu_data());

caffe_cpu_gemm<Dtype>(CblasNoTrans, CblasNoTrans, channels_ * num,

spatial_dim, 1, -1, num_by_chans_.cpu_data(),

spatial_sum_multiplier_.cpu_data(), 1., top_data); if (!use_global_stats_) {

// compute variance using var(X) = E((X-EX)^2)

caffe_powx(top[0]->count(), top_data, Dtype(2),

temp_.mutable_cpu_data()); // (X-EX)^2

caffe_cpu_gemv<Dtype>(CblasNoTrans, channels_ * num, spatial_dim,

1. / (num * spatial_dim), temp_.cpu_data(),

spatial_sum_multiplier_.cpu_data(), 0.,

num_by_chans_.mutable_cpu_data());

caffe_cpu_gemv<Dtype>(CblasTrans, num, channels_, 1.,

num_by_chans_.cpu_data(), batch_sum_multiplier_.cpu_data(), 0.,

variance_.mutable_cpu_data()); // E((X_EX)^2) // compute and save moving average

this->blobs_[2]->mutable_cpu_data()[0] *= moving_average_fraction_;

this->blobs_[2]->mutable_cpu_data()[0] += 1;

caffe_cpu_axpby(mean_.count(), Dtype(1), mean_.cpu_data(),

moving_average_fraction_, this->blobs_[0]->mutable_cpu_data());

int m = bottom[0]->count()/channels_;

Dtype bias_correction_factor = m > 1 ? Dtype(m)/(m-1) : 1;

caffe_cpu_axpby(variance_.count(), bias_correction_factor,

variance_.cpu_data(), moving_average_fraction_,

this->blobs_[1]->mutable_cpu_data());

} // normalize variance

caffe_add_scalar(variance_.count(), eps_, variance_.mutable_cpu_data());

caffe_powx(variance_.count(), variance_.cpu_data(), Dtype(0.5),

variance_.mutable_cpu_data()); // replicate variance to input size

caffe_cpu_gemm<Dtype>(CblasNoTrans, CblasNoTrans, num, channels_, 1, 1,

batch_sum_multiplier_.cpu_data(), variance_.cpu_data(), 0.,

num_by_chans_.mutable_cpu_data());

caffe_cpu_gemm<Dtype>(CblasNoTrans, CblasNoTrans, channels_ * num,

spatial_dim, 1, 1., num_by_chans_.cpu_data(),

spatial_sum_multiplier_.cpu_data(), 0., temp_.mutable_cpu_data());

caffe_div(temp_.count(), top_data, temp_.cpu_data(), top_data);

// TODO(cdoersch): The caching is only needed because later in-place layers

// might clobber the data. Can we skip this if they won't?

caffe_copy(x_norm_.count(), top_data,

x_norm_.mutable_cpu_data());

} template <typename Dtype>

void BatchNormLayer<Dtype>::Backward_cpu(const vector<Blob<Dtype>*>& top,

const vector<bool>& propagate_down,

const vector<Blob<Dtype>*>& bottom) {

const Dtype* top_diff;

if (bottom[0] != top[0]) {

top_diff = top[0]->cpu_diff();

} else {

caffe_copy(x_norm_.count(), top[0]->cpu_diff(), x_norm_.mutable_cpu_diff());

top_diff = x_norm_.cpu_diff();

}

Dtype* bottom_diff = bottom[0]->mutable_cpu_diff();

if (use_global_stats_) {

caffe_div(temp_.count(), top_diff, temp_.cpu_data(), bottom_diff);

return;

}

const Dtype* top_data = x_norm_.cpu_data();

int num = bottom[0]->shape()[0];

int spatial_dim = bottom[0]->count()/(bottom[0]->shape(0)*channels_);

// if Y = (X-mean(X))/(sqrt(var(X)+eps)), then

//

// dE(Y)/dX =

// (dE/dY - mean(dE/dY) - mean(dE/dY \cdot Y) \cdot Y)

// ./ sqrt(var(X) + eps)

//

// where \cdot and ./ are hadamard product and elementwise division,

// respectively, dE/dY is the top diff, and mean/var/sum are all computed

// along all dimensions except the channels dimension. In the above

// equation, the operations allow for expansion (i.e. broadcast) along all

// dimensions except the channels dimension where required. // sum(dE/dY \cdot Y)

caffe_mul(temp_.count(), top_data, top_diff, bottom_diff);

caffe_cpu_gemv<Dtype>(CblasNoTrans, channels_ * num, spatial_dim, 1.,

bottom_diff, spatial_sum_multiplier_.cpu_data(), 0.,

num_by_chans_.mutable_cpu_data());

caffe_cpu_gemv<Dtype>(CblasTrans, num, channels_, 1.,

num_by_chans_.cpu_data(), batch_sum_multiplier_.cpu_data(), 0.,

mean_.mutable_cpu_data()); // reshape (broadcast) the above

caffe_cpu_gemm<Dtype>(CblasNoTrans, CblasNoTrans, num, channels_, 1, 1,

batch_sum_multiplier_.cpu_data(), mean_.cpu_data(), 0.,

num_by_chans_.mutable_cpu_data());

caffe_cpu_gemm<Dtype>(CblasNoTrans, CblasNoTrans, channels_ * num,

spatial_dim, 1, 1., num_by_chans_.cpu_data(),

spatial_sum_multiplier_.cpu_data(), 0., bottom_diff); // sum(dE/dY \cdot Y) \cdot Y

caffe_mul(temp_.count(), top_data, bottom_diff, bottom_diff); // sum(dE/dY)-sum(dE/dY \cdot Y) \cdot Y

caffe_cpu_gemv<Dtype>(CblasNoTrans, channels_ * num, spatial_dim, 1.,

top_diff, spatial_sum_multiplier_.cpu_data(), 0.,

num_by_chans_.mutable_cpu_data());

caffe_cpu_gemv<Dtype>(CblasTrans, num, channels_, 1.,

num_by_chans_.cpu_data(), batch_sum_multiplier_.cpu_data(), 0.,

mean_.mutable_cpu_data());

// reshape (broadcast) the above to make

// sum(dE/dY)-sum(dE/dY \cdot Y) \cdot Y

caffe_cpu_gemm<Dtype>(CblasNoTrans, CblasNoTrans, num, channels_, 1, 1,

batch_sum_multiplier_.cpu_data(), mean_.cpu_data(), 0.,

num_by_chans_.mutable_cpu_data());

caffe_cpu_gemm<Dtype>(CblasNoTrans, CblasNoTrans, num * channels_,

spatial_dim, 1, 1., num_by_chans_.cpu_data(),

spatial_sum_multiplier_.cpu_data(), 1., bottom_diff); // dE/dY - mean(dE/dY)-mean(dE/dY \cdot Y) \cdot Y

caffe_cpu_axpby(temp_.count(), Dtype(1), top_diff,

Dtype(-1. / (num * spatial_dim)), bottom_diff); // note: temp_ still contains sqrt(var(X)+eps), computed during the forward

// pass.

caffe_div(temp_.count(), bottom_diff, temp_.cpu_data(), bottom_diff);

} #ifdef CPU_ONLY

STUB_GPU(BatchNormLayer);

#endif INSTANTIATE_CLASS(BatchNormLayer);

REGISTER_LAYER_CLASS(BatchNorm);

} // namespace caffe

BatchNorm caffe源码的更多相关文章

- caffe源码学习之Proto数据格式【1】

前言: 由于业务需要,接触caffe已经有接近半年,一直忙着阅读各种论文,重现大大小小的模型. 期间也总结过一些caffe源码学习笔记,断断续续,这次打算系统的记录一下caffe源码学习笔记,巩固一下 ...

- Caffe源码理解2:SyncedMemory CPU和GPU间的数据同步

目录 写在前面 成员变量的含义及作用 构造与析构 内存同步管理 参考 博客:blog.shinelee.me | 博客园 | CSDN 写在前面 在Caffe源码理解1中介绍了Blob类,其中的数据成 ...

- caffe源码阅读

参考网址:https://www.cnblogs.com/louyihang-loves-baiyan/p/5149628.html 1.caffe代码层次熟悉blob,layer,net,solve ...

- Caffe源码中syncedmem文件分析

Caffe源码(caffe version:09868ac , date: 2015.08.15)中有一些重要文件,这里介绍下syncedmem文件. 1. include文件: (1).& ...

- Caffe源码中math_functions文件分析

Caffe源码(caffe version:09868ac , date: 2015.08.15)中有一些重要文件,这里介绍下math_functions文件. 1. include文件: ...

- Caffe源码中caffe.proto文件分析

Caffe源码(caffe version:09868ac , date: 2015.08.15)中有一些重要文件,这里介绍下caffe.proto文件. 在src/caffe/proto目录下有一个 ...

- Caffe源码阅读(1) 全连接层

Caffe源码阅读(1) 全连接层 发表于 2014-09-15 | 今天看全连接层的实现.主要看的是https://github.com/BVLC/caffe/blob/master/src ...

- vscode下调试caffe源码

caffe目录: ├── build -> .build_release // make生成目录,生成各种可执行bin文件,直接调用入口: ├── cmake ├── CMakeLists.tx ...

- Caffe源码中common文件分析

Caffe源码(caffe version:09868ac , date: 2015.08.15)中的一些重要头文件如caffe.hpp.blob.hpp等或者外部调用Caffe库使用时,一般都会in ...

随机推荐

- linux内核设计与实现一书阅读整理 之第三章

chapter 3 进程管理 3.1 进程 进程就是处于执行期的程序. 进程就是正在执行的程序代码的实时结果. 内核调度的对象是线程而并非进程. 在现代操作系统中,进程提供两种虚拟机制: 虚拟处理器 ...

- bzoj2141: 排队(分块+树状数组)

块套树为什么会这么快.. 先跑出原序列逆序对. 显然交换两个位置$l,r$,对$[1,l),(r,n]$里的数没有影响,所以只需要考虑$[l,r]$内的数. 设$(l,r)$内的数$a_i$,则按以下 ...

- [HAOI2011]防线修建

题目描述 近来A国和B国的矛盾激化,为了预防不测,A国准备修建一条长长的防线,当然修建防线的话,肯定要把需要保护的城市修在防线内部了.可是A国上层现在还犹豫不决,到底该把哪些城市作为保护对象呢?又由于 ...

- POI导入excel文件2

POI上传到服务器读取excel文件1中已经介绍了上传文件和导入excel所有的内容http://www.cnblogs.com/fxwl/p/5896893.html , 本文中只是单单读取本地文件 ...

- Qt error ------ no matching function for call to QObject::connect(QSpinBox*&, <unresolved overloaded function type>, QSlider*&, void (QAbstractSlider::*)(int))

connect(ui->spinBox_luminosity,&QSpinBox::valueChanged, ui->horizontalSlider_luminosity, & ...

- 理解jquery的$.extend()、$.fn和$.fn.extend()的区别及用法

viajQuery为开发插件提拱了两个方法,分别是: jQuery.fn.extend(); jQuery.extend(); jQuery.fn jQuery.fn = jQuery.prototy ...

- Openssh版本升级(Centos6.7)

实现前提公司服务器需要进行安全测评,扫描漏洞的设备扫出了关于 openssh 漏洞,主要是因为 openssh的当前版本为5.3,版本低了,而yum最新的openssh也只是5.3,没办法只能到 rp ...

- IOC轻量级框架之Unity

任何事物的出现,总有它独特的原因,Unity也是如此,在Unity产生之前,我们是这么做的 我们需要在一个类A中引用另一个类B的时候,总是将类B的实例放置到类A的构造函数中,以便在初始化类A的时候,得 ...

- 美轮美奂!9款设计独特的jQuery/CSS3全新应用插件(下拉菜单、动画、图表、导航等)

今天要为大家分享9款设计非常独特的jQuery/CSS3全新应用插件,插件包含菜单.jQuery焦点图.jQuery表单.jQuery图片特效等.下面大家一起来看看吧. 1.jQuery水晶样式下拉导 ...

- bzoj 1564 [NOI2009]二叉查找树(树形DP)

[题目链接] http://www.lydsy.com/JudgeOnline/problem.php?id=1564 [题意] 给定一个Treap,总代价为深度*距离之和.可以每次以K的代价修改权值 ...