MapReduce Programming Series, Part 5: Single-Table Join

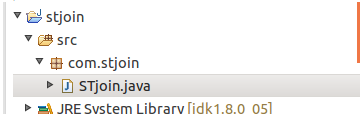

1. Project name:

Input data (child-parent table; the first line is a header row):

child parent

Tom Lucy

Tom Jack

Jone Lucy

Jone Jack

Lucy Mary

Lucy Ben

Jack Alice

Jack Jesse

Terry Alice

Terry Jesse

Philip Terry

Philip Alima

Mark Terry

Mark Alma

2. Program code:

Version 1 (detailed):

package com.stjoin;

import java.io.IOException;

import java.util.Iterator;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.fs.Path;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Job;

import org.apache.hadoop.mapreduce.Mapper;

import org.apache.hadoop.mapreduce.Reducer;

import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;

import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

import org.apache.hadoop.util.GenericOptionsParser;

public class STjoin {

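// static flag so the header row is written only once; this only works while a single reduce task runs in one JVM, as in the local runner used here

public static int time = 0;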

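// map splits each line into child and parent, emits the pair once in order (key = child) as the right table

// and once reversed (key = parent) as the left table; each output value must carry a flag marking which table it belongs to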

public static class Map extends Mapper<Object, Text, Text, Text>{

public void map(Object key,Text value,Context context)throws IOException,InterruptedException{

String childname = new String();

String parentname = new String();

String relationtype = new String();

String line = value.toString();

System.out.println("mapper...............");

int i = 0;

while(line.charAt(i) != ' '){

i++;

}

String[] values = {line.substring(0, i),line.substring(i+1)};

System.out.println("child:"+values[0]+" parent:"+values[1]);

if(values[0].compareTo("child") != 0){// skip the header row: compareTo returns 0 only when values[0] equals "child"

childname=values[0];

parentname=values[1];

// left table: key = parent, value = "1+child+parent"

relationtype="1";

context.write(new Text(values[1]),new Text(relationtype+"+"+childname+"+"+parentname));

System.out.println("key:"+values[1]+" value: "+relationtype+"+"+childname+"+"+parentname);

// right table: key = child, value = "2+child+parent"

relationtype = "2";

context.write(new Text(values[0]), new Text(relationtype+"+"+childname+"+"+parentname));

System.out.println("key:"+values[0]+" value: "+relationtype+"+"+childname+"+"+parentname);

}

}

}

public static class Reduce extends Reducer<Text, Text, Text, Text>{

public void reduce(Text key,Iterable<Text> values,Context context) throws IOException, InterruptedException{

System.out.println("reduce.....................");

System.out.println("key:"+key+" values:"+values);

// write the header row only on the first reduce call

if(time==0){

context.write(new Text("grandchild"), new Text("grandparent"));

time++;

}

int grandchildnum = 0;

String grandchild[] = new String[10];

int grandparentnum = 0;

String grandparent[] = new String[10];

Iterator<Text> ite = values.iterator();

while(ite.hasNext()){

String record = ite.next().toString();

System.out.println("record: "+record);

int len = record.length();

int i = 2; // skip the "1+" or "2+" tag

if(len==0) continue;

char relationtype = record.charAt(0);

String childname = new String();

String parentname = new String();

// extract the child name: characters from index 2 up to the next '+'

while(record.charAt(i)!='+'){

childname = childname + record.charAt(i);

i++;

}

System.out.println("childname: "+childname);

i=i+1;

// extract the parent name: the rest of the record

while(i<len){

parentname=parentname+record.charAt(i);

i++;

}

System.out.println("parentname: "+parentname);

// left table: the child is a grandchild candidate, store it in grandchild[]

if (relationtype=='1') {

grandchild[grandchildnum] = childname;

grandchildnum++;

}

// right table: the parent is a grandparent candidate, store it in grandparent[]

else{

grandparent[grandparentnum]=parentname;

grandparentnum++;

}

}

// Cartesian product of grandchild[] and grandparent[], emitted only when both are non-empty

if(grandparentnum!=0&&grandchildnum!=0){

for(int m = 0 ; m < grandchildnum ; m++){

for(int n = 0 ; n < grandparentnum; n++){

context.write(new Text(grandchild[m]), new Text(grandparent[n]));

System.out.println("grandchild: "+grandchild[m]+" grandparent: "+grandparent[n]);

}

}

}

}

}

public static void main(String [] args)throws Exception{

Configuration conf = new Configuration();

String otherArgs[] = new GenericOptionsParser(conf,args).getRemainingArgs();

if(otherArgs.length != 2){

System.err.println("Usage: STjoin <in> <out>");

System.exit(2);

}

Job job = new Job(conf,"single table join");

job.setJarByClass(STjoin.class);

job.setMapperClass(Map.class);

job.setReducerClass(Reduce.class);

job.setOutputKeyClass(Text.class);

job.setOutputValueClass(Text.class);

FileInputFormat.addInputPath(job, new Path(otherArgs[0]));

FileOutputFormat.setOutputPath(job,new Path(otherArgs[1]));

System.exit(job.waitForCompletion(true)? 0 : 1);

}

}
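Version 1 stores a key's values in fixed `String[10]` arrays and parses each `type+child+parent` record with `charAt()` loops. As a result, a person with more than ten children or parents would cause an `ArrayIndexOutOfBoundsException`. Below is a minimal sketch of the same per-key reduce logic using `String.split` and `ArrayList`. It assumes names never contain `+`, and it works on plain strings rather than Hadoop `Text` objects. `TaggedRecordJoin` and `joinOneKey` are hypothetical names, not part of the original project.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Hypothetical helper mirroring the version-1 reduce step for a single key.
// Each record has the form "<tag>+<child>+<parent>", as written by the version-1 mapper.
class TaggedRecordJoin {

    static List<String> joinOneKey(Iterable<String> records) {
        List<String> grandchildren = new ArrayList<>(); // grows as needed, unlike String[10]
        List<String> grandparents = new ArrayList<>();
        for (String record : records) {
            // limit 3: tag, child, parent (assumes names contain no '+')
            String[] parts = record.split("\\+", 3);
            if (parts.length != 3) {
                continue; // skip empty or malformed records
            }
            if (parts[0].equals("1")) {
                grandchildren.add(parts[1]); // left table: a child of the current key
            } else {
                grandparents.add(parts[2]);  // right table: a parent of the current key
            }
        }
        // Cartesian product; empty if either side is empty
        List<String> pairs = new ArrayList<>();
        for (String gc : grandchildren) {
            for (String gp : grandparents) {
                pairs.add(gc + "\t" + gp);
            }
        }
        return pairs;
    }

    public static void main(String[] args) {
        // The four values the reducer received for key "Jack" in the version-1 run log
        List<String> jack = Arrays.asList("2+Jack+Alice", "2+Jack+Jesse", "1+Tom+Jack", "1+Jone+Jack");
        for (String pair : joinOneKey(jack)) {
            System.out.println(pair);
        }
    }
}
```

Fed the values for key `Jack`, it prints the same four pairs as the version-1 log (`Tom Alice`, `Tom Jesse`, `Jone Alice`, `Jone Jesse`), tab-separated.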

Version 2 (simplified):

package com.stjoin;

import java.io.IOException;

import java.util.Iterator;

import java.util.StringTokenizer;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.fs.Path;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Job;

import org.apache.hadoop.mapreduce.Mapper;

import org.apache.hadoop.mapreduce.Reducer;

import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;

import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

import org.apache.hadoop.util.GenericOptionsParser;

public class STjoin {

public static int time = 0;

public static class Map extends Mapper<Object, Text, Text, Text>{

public void map(Object key,Text value,Context context)throws IOException,InterruptedException{

String relationtype = new String();

String line = value.toString();

System.out.println("mapper...............");

int i = 0;

// Method 1: scan character by character to find the split point

/* while(line.charAt(i) != ' '){

i++;

}

String[] values = {line.substring(0, i),line.substring(i+1)};

*/

// Method 2: use a StringTokenizer to pull out child and parent

String[] values = new String[10];

StringTokenizer itr = new StringTokenizer(line);

while(itr.hasMoreTokens()){

values[i] = itr.nextToken();

i = i+1;

}

System.out.println("child:"+values[0]+" parent:"+values[1]);

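if(values[0].compareTo("child") != 0){// skip the header row: compareTo returns 0 only when values[0] equals "child"

// left table: key = parent, value = "1+child"

relationtype="1";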

context.write(new Text(values[1]),new Text(relationtype+"+"+values[0]));

System.out.println("key:"+values[1]+" value: "+relationtype+"+"+values[0]);

// right table: key = child, value = "2+parent"

relationtype = "2";

context.write(new Text(values[0]), new Text(relationtype+"+"+values[1]));

System.out.println("key:"+values[0]+" value: "+relationtype+"+"+values[1]);

}

}

}

public static class Reduce extends Reducer<Text, Text, Text, Text>{

public void reduce(Text key,Iterable<Text> values,Context context) throws IOException, InterruptedException{

System.out.println("reduce.....................");

System.out.println("key:"+key+" values:"+values);

if(time==0){

context.write(new Text("grandchild"), new Text("grandparent"));

time++;

}

int grandchildnum = 0;

String grandchild[] = new String[10];

int grandparentnum = 0;

String grandparent[] = new String[10];

String name = new String();

// Method 1: use an explicit iterator

// Iterator ite = values.iterator();

// while(ite.hasNext()){

// Method 2: use an enhanced for loop

for(Text val : values){

// String record = ite.next().toString();

String record = val.toString();

System.out.println("record: "+record);

int i = 2;

char relationtype = record.charAt(0);

name = record.substring(i);

System.out.println("name: "+name);

if (relationtype=='1') {

grandchild[grandchildnum] = name;

grandchildnum++;

}

else{

grandparent[grandparentnum]=name;

grandparentnum++;

}

}

// Method 3: the character-by-character charAt() scan used in the detailed version

if(grandparentnum!=0&&grandchildnum!=0){

for(int m = 0 ; m < grandchildnum ; m++){

for(int n = 0 ; n < grandparentnum; n++){

context.write(new Text(grandchild[m]), new Text(grandparent[n]));

System.out.println("grandchild: "+grandchild[m]+" grandparent: "+grandparent[n]);

}

}

}

}

}

public static void main(String [] args)throws Exception{

Configuration conf = new Configuration();

String otherArgs[] = new GenericOptionsParser(conf,args).getRemainingArgs();

if(otherArgs.length != 2){

System.err.println("Usage: STjoin <in> <out>");

System.exit(2);

}

Job job = new Job(conf,"single table join");

job.setJarByClass(STjoin.class);

job.setMapperClass(Map.class);

job.setReducerClass(Reduce.class);

job.setOutputKeyClass(Text.class);

job.setOutputValueClass(Text.class);

FileInputFormat.addInputPath(job, new Path(otherArgs[0]));

FileOutputFormat.setOutputPath(job,new Path(otherArgs[1]));

System.exit(job.waitForCompletion(true)? 0 : 1);

}

}
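The two versions split each input line differently: version 1 scans with `charAt()`, and version 2 uses `StringTokenizer`. The sketch below places both side by side with `String.split("\\s+")`, which the post does not use. It shows that the `charAt()` scan only recognizes a single space. With two spaces, the parent keeps a leading space. With a tab, the scan runs off the end of the string. Class and method names here are hypothetical.

```java
import java.util.Arrays;
import java.util.StringTokenizer;

// Hypothetical side-by-side comparison of the line-splitting approaches.
class SplitComparison {

    // Version 1 approach: find the first ' ' with charAt().
    // Throws StringIndexOutOfBoundsException if the line contains no space.
    static String[] byCharScan(String line) {
        int i = 0;
        while (line.charAt(i) != ' ') {
            i++;
        }
        return new String[] {line.substring(0, i), line.substring(i + 1)};
    }

    // Version 2 approach: StringTokenizer with its default delimiters " \t\n\r\f".
    static String[] byTokenizer(String line) {
        StringTokenizer itr = new StringTokenizer(line);
        String[] fields = new String[itr.countTokens()];
        for (int i = 0; itr.hasMoreTokens(); i++) {
            fields[i] = itr.nextToken();
        }
        return fields;
    }

    // Alternative not used in the post: split on runs of whitespace.
    static String[] byRegexSplit(String line) {
        return line.trim().split("\\s+");
    }

    public static void main(String[] args) {
        String doubleSpace = "Tom  Lucy"; // two spaces between the names
        System.out.println(Arrays.toString(byCharScan(doubleSpace)));
        System.out.println(Arrays.toString(byTokenizer(doubleSpace)));
        System.out.println(Arrays.toString(byRegexSplit(doubleSpace)));
    }
}
```

Running `main` prints `[Tom,  Lucy]` (note the leading space kept in the parent), then `[Tom, Lucy]` twice.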

Run log, version 1:

14/09/22 20:31:48 WARN mapred.JobClient: No job jar file set. User classes may not be found. See JobConf(Class) or JobConf#setJar(String).

14/09/22 20:31:48 INFO input.FileInputFormat: Total input paths to process : 1

14/09/22 20:31:48 WARN snappy.LoadSnappy: Snappy native library not loaded

14/09/22 20:31:48 INFO mapred.JobClient: Running job: job_local_0001

14/09/22 20:31:48 INFO util.ProcessTree: setsid exited with exit code 0

14/09/22 20:31:48 INFO mapred.Task: Using ResourceCalculatorPlugin : org.apache.hadoop.util.LinuxResourceCalculatorPlugin@1a430c37

14/09/22 20:31:48 INFO mapred.MapTask: io.sort.mb = 100

14/09/22 20:31:48 INFO mapred.MapTask: data buffer = 79691776/99614720

14/09/22 20:31:48 INFO mapred.MapTask: record buffer = 262144/327680

mapper...............

child:child parent:parent

mapper...............

child:Tom parent:Lucy

key:Lucy value: 1+Tom+Lucy

key:Tom value: 2+Tom+Lucy

mapper...............

child:Tom parent:Jack

key:Jack value: 1+Tom+Jack

key:Tom value: 2+Tom+Jack

mapper...............

child:Jone parent:Lucy

key:Lucy value: 1+Jone+Lucy

key:Jone value: 2+Jone+Lucy

mapper...............

child:Jone parent:Jack

key:Jack value: 1+Jone+Jack

key:Jone value: 2+Jone+Jack

mapper...............

child:Lucy parent:Mary

key:Mary value: 1+Lucy+Mary

key:Lucy value: 2+Lucy+Mary

mapper...............

child:Lucy parent:Ben

key:Ben value: 1+Lucy+Ben

key:Lucy value: 2+Lucy+Ben

mapper...............

child:Jack parent:Alice

key:Alice value: 1+Jack+Alice

14/09/22 20:31:49 INFO mapred.MapTask: Starting flush of map output

key:Jack value: 2+Jack+Alice

mapper...............

child:Jack parent:Jesse

key:Jesse value: 1+Jack+Jesse

key:Jack value: 2+Jack+Jesse

mapper...............

child:Terry parent:Alice

key:Alice value: 1+Terry+Alice

key:Terry value: 2+Terry+Alice

mapper...............

child:Terry parent:Jesse

key:Jesse value: 1+Terry+Jesse

key:Terry value: 2+Terry+Jesse

mapper...............

child:Philip parent:Terry

key:Terry value: 1+Philip+Terry

key:Philip value: 2+Philip+Terry

mapper...............

child:Philip parent:Alima

key:Alima value: 1+Philip+Alima

key:Philip value: 2+Philip+Alima

mapper...............

child:Mark parent:Terry

key:Terry value: 1+Mark+Terry

key:Mark value: 2+Mark+Terry

mapper...............

child:Mark parent:Alma

key:Alma value: 1+Mark+Alma

key:Mark value: 2+Mark+Alma

14/09/22 20:31:49 INFO mapred.MapTask: Finished spill 0

14/09/22 20:31:49 INFO mapred.Task: Task:attempt_local_0001_m_000000_0 is done. And is in the process of commiting

14/09/22 20:31:49 INFO mapred.JobClient: map 0% reduce 0%

14/09/22 20:31:51 INFO mapred.LocalJobRunner:

14/09/22 20:31:51 INFO mapred.Task: Task 'attempt_local_0001_m_000000_0' done.

14/09/22 20:31:51 INFO mapred.Task: Using ResourceCalculatorPlugin : org.apache.hadoop.util.LinuxResourceCalculatorPlugin@5c448d98

14/09/22 20:31:51 INFO mapred.LocalJobRunner:

14/09/22 20:31:51 INFO mapred.Merger: Merging 1 sorted segments

14/09/22 20:31:51 INFO mapred.Merger: Down to the last merge-pass, with 1 segments left of total size: 564 bytes

14/09/22 20:31:51 INFO mapred.LocalJobRunner:

reduce.....................

key:Alice values:org.apache.hadoop.mapreduce.ReduceContext$ValueIterable@f67d4d0

record: 1+Jack+Alice

childname: Jack

parentname: Alice

record: 1+Terry+Alice

childname: Terry

parentname: Alice

reduce.....................

key:Alima values:org.apache.hadoop.mapreduce.ReduceContext$ValueIterable@f67d4d0

record: 1+Philip+Alima

childname: Philip

parentname: Alima

reduce.....................

key:Alma values:org.apache.hadoop.mapreduce.ReduceContext$ValueIterable@f67d4d0

record: 1+Mark+Alma

childname: Mark

parentname: Alma

reduce.....................

key:Ben values:org.apache.hadoop.mapreduce.ReduceContext$ValueIterable@f67d4d0

record: 1+Lucy+Ben

childname: Lucy

parentname: Ben

reduce.....................

key:Jack values:org.apache.hadoop.mapreduce.ReduceContext$ValueIterable@f67d4d0

record: 2+Jack+Alice

childname: Jack

parentname: Alice

record: 2+Jack+Jesse

childname: Jack

parentname: Jesse

record: 1+Tom+Jack

childname: Tom

parentname: Jack

record: 1+Jone+Jack

childname: Jone

parentname: Jack

grandchild: Tom grandparent: Alice

grandchild: Tom grandparent: Jesse

grandchild: Jone grandparent: Alice

grandchild: Jone grandparent: Jesse

reduce.....................

key:Jesse values:org.apache.hadoop.mapreduce.ReduceContext$ValueIterable@f67d4d0

record: 1+Jack+Jesse

childname: Jack

parentname: Jesse

record: 1+Terry+Jesse

childname: Terry

parentname: Jesse

reduce.....................

key:Jone values:org.apache.hadoop.mapreduce.ReduceContext$ValueIterable@f67d4d0

record: 2+Jone+Lucy

childname: Jone

parentname: Lucy

record: 2+Jone+Jack

childname: Jone

parentname: Jack

reduce.....................

key:Lucy values:org.apache.hadoop.mapreduce.ReduceContext$ValueIterable@f67d4d0

record: 1+Tom+Lucy

childname: Tom

parentname: Lucy

record: 1+Jone+Lucy

childname: Jone

parentname: Lucy

record: 2+Lucy+Mary

childname: Lucy

parentname: Mary

record: 2+Lucy+Ben

childname: Lucy

parentname: Ben

grandchild: Tom grandparent: Mary

grandchild: Tom grandparent: Ben

grandchild: Jone grandparent: Mary

grandchild: Jone grandparent: Ben

reduce.....................

key:Mark values:org.apache.hadoop.mapreduce.ReduceContext$ValueIterable@f67d4d0

record: 2+Mark+Terry

childname: Mark

parentname: Terry

record: 2+Mark+Alma

childname: Mark

parentname: Alma

reduce.....................

key:Mary values:org.apache.hadoop.mapreduce.ReduceContext$ValueIterable@f67d4d0

record: 1+Lucy+Mary

childname: Lucy

parentname: Mary

reduce.....................

key:Philip values:org.apache.hadoop.mapreduce.ReduceContext$ValueIterable@f67d4d0

record: 2+Philip+Terry

childname: Philip

parentname: Terry

record: 2+Philip+Alima

childname: Philip

parentname: Alima

reduce.....................

key:Terry values:org.apache.hadoop.mapreduce.ReduceContext$ValueIterable@f67d4d0

record: 2+Terry+Alice

childname: Terry

parentname: Alice

record: 2+Terry+Jesse

childname: Terry

parentname: Jesse

record: 1+Philip+Terry

childname: Philip

parentname: Terry

record: 1+Mark+Terry

childname: Mark

parentname: Terry

grandchild: Philip grandparent: Alice

grandchild: Philip grandparent: Jesse

grandchild: Mark grandparent: Alice

grandchild: Mark grandparent: Jesse

reduce.....................

key:Tom values:org.apache.hadoop.mapreduce.ReduceContext$ValueIterable@f67d4d0

record: 2+Tom+Jack

childname: Tom

parentname: Jack

record: 2+Tom+Lucy

childname: Tom

parentname: Lucy

14/09/22 20:31:52 INFO mapred.Task: Task:attempt_local_0001_r_000000_0 is done. And is in the process of commiting

14/09/22 20:31:52 INFO mapred.LocalJobRunner:

14/09/22 20:31:52 INFO mapred.Task: Task attempt_local_0001_r_000000_0 is allowed to commit now

14/09/22 20:31:52 INFO output.FileOutputCommitter: Saved output of task 'attempt_local_0001_r_000000_0' to hdfs://localhost:9000/user/hadoop/stjoin_output07

14/09/22 20:31:52 INFO mapred.JobClient: map 100% reduce 0%

14/09/22 20:31:54 INFO mapred.LocalJobRunner: reduce > reduce

14/09/22 20:31:54 INFO mapred.Task: Task 'attempt_local_0001_r_000000_0' done.

14/09/22 20:31:55 INFO mapred.JobClient: map 100% reduce 100%

14/09/22 20:31:55 INFO mapred.JobClient: Job complete: job_local_0001

14/09/22 20:31:55 INFO mapred.JobClient: Counters: 22

14/09/22 20:31:55 INFO mapred.JobClient: Map-Reduce Framework

14/09/22 20:31:55 INFO mapred.JobClient: Spilled Records=56

14/09/22 20:31:55 INFO mapred.JobClient: Map output materialized bytes=568

14/09/22 20:31:55 INFO mapred.JobClient: Reduce input records=28

14/09/22 20:31:55 INFO mapred.JobClient: Virtual memory (bytes) snapshot=0

14/09/22 20:31:55 INFO mapred.JobClient: Map input records=15

14/09/22 20:31:55 INFO mapred.JobClient: SPLIT_RAW_BYTES=117

14/09/22 20:31:55 INFO mapred.JobClient: Map output bytes=506

14/09/22 20:31:55 INFO mapred.JobClient: Reduce shuffle bytes=0

14/09/22 20:31:55 INFO mapred.JobClient: Physical memory (bytes) snapshot=0

14/09/22 20:31:55 INFO mapred.JobClient: Reduce input groups=13

14/09/22 20:31:55 INFO mapred.JobClient: Combine output records=0

14/09/22 20:31:55 INFO mapred.JobClient: Reduce output records=13

14/09/22 20:31:55 INFO mapred.JobClient: Map output records=28

14/09/22 20:31:55 INFO mapred.JobClient: Combine input records=0

14/09/22 20:31:55 INFO mapred.JobClient: CPU time spent (ms)=0

14/09/22 20:31:55 INFO mapred.JobClient: Total committed heap usage (bytes)=408420352

14/09/22 20:31:55 INFO mapred.JobClient: File Input Format Counters

14/09/22 20:31:55 INFO mapred.JobClient: Bytes Read=163

14/09/22 20:31:55 INFO mapred.JobClient: FileSystemCounters

14/09/22 20:31:55 INFO mapred.JobClient: HDFS_BYTES_READ=326

14/09/22 20:31:55 INFO mapred.JobClient: FILE_BYTES_WRITTEN=81802

14/09/22 20:31:55 INFO mapred.JobClient: FILE_BYTES_READ=912

14/09/22 20:31:55 INFO mapred.JobClient: HDFS_BYTES_WRITTEN=149

14/09/22 20:31:55 INFO mapred.JobClient: File Output Format Counters

14/09/22 20:31:55 INFO mapred.JobClient: Bytes Written=149
Run log, version 2:

14/09/22 20:26:02 WARN mapred.JobClient: No job jar file set. User classes may not be found. See JobConf(Class) or JobConf#setJar(String).

14/09/22 20:26:02 INFO input.FileInputFormat: Total input paths to process : 1

14/09/22 20:26:02 WARN snappy.LoadSnappy: Snappy native library not loaded

14/09/22 20:26:03 INFO mapred.JobClient: Running job: job_local_0001

14/09/22 20:26:03 INFO util.ProcessTree: setsid exited with exit code 0

14/09/22 20:26:03 INFO mapred.Task: Using ResourceCalculatorPlugin : org.apache.hadoop.util.LinuxResourceCalculatorPlugin@3b8c40d0

14/09/22 20:26:03 INFO mapred.MapTask: io.sort.mb = 100

14/09/22 20:26:03 INFO mapred.MapTask: data buffer = 79691776/99614720

14/09/22 20:26:03 INFO mapred.MapTask: record buffer = 262144/327680

mapper...............

child:child parent:parent

mapper...............

child:Tom parent:Lucy

key:Lucy value: 1+Tom

key:Tom value: 2+Lucy

mapper...............

child:Tom parent:Jack

key:Jack value: 1+Tom

key:Tom value: 2+Jack

mapper...............

child:Jone parent:Lucy

key:Lucy value: 1+Jone

key:Jone value: 2+Lucy

mapper...............

child:Jone parent:Jack

key:Jack value: 1+Jone

key:Jone value: 2+Jack

mapper...............

child:Lucy parent:Mary

key:Mary value: 1+Lucy

key:Lucy value: 2+Mary

mapper...............

child:Lucy parent:Ben

key:Ben value: 1+Lucy

key:Lucy value: 2+Ben

mapper...............

child:Jack parent:Alice

key:Alice value: 1+Jack

key:Jack value: 2+Alice

mapper...............

child:Jack parent:Jesse

key:Jesse value: 1+Jack

key:Jack value: 2+Jesse

mapper...............

child:Terry parent:Alice

key:Alice value: 1+Terry

key:Terry value: 2+Alice

mapper...............

child:Terry parent:Jesse

key:Jesse value: 1+Terry

key:Terry value: 2+Jesse

mapper...............

child:Philip parent:Terry

key:Terry value: 1+Philip

key:Philip value: 2+Terry

mapper...............

child:Philip parent:Alima

key:Alima value: 1+Philip

key:Philip value: 2+Alima

mapper...............

child:Mark parent:Terry

key:Terry value: 1+Mark

key:Mark value: 2+Terry

mapper...............

child:Mark parent:Alma

key:Alma value: 1+Mark

key:Mark value: 2+Alma

14/09/22 20:26:03 INFO mapred.MapTask: Starting flush of map output

14/09/22 20:26:03 INFO mapred.MapTask: Finished spill 0

14/09/22 20:26:03 INFO mapred.Task: Task:attempt_local_0001_m_000000_0 is done. And is in the process of commiting

14/09/22 20:26:04 INFO mapred.JobClient: map 0% reduce 0%

14/09/22 20:26:06 INFO mapred.LocalJobRunner:

14/09/22 20:26:06 INFO mapred.Task: Task 'attempt_local_0001_m_000000_0' done.

14/09/22 20:26:06 INFO mapred.Task: Using ResourceCalculatorPlugin : org.apache.hadoop.util.LinuxResourceCalculatorPlugin@4eba27a5

14/09/22 20:26:06 INFO mapred.LocalJobRunner:

14/09/22 20:26:06 INFO mapred.Merger: Merging 1 sorted segments

14/09/22 20:26:06 INFO mapred.Merger: Down to the last merge-pass, with 1 segments left of total size: 414 bytes

14/09/22 20:26:06 INFO mapred.LocalJobRunner:

reduce.....................

key:Alice values:org.apache.hadoop.mapreduce.ReduceContext$ValueIterable@5914dda1

record: 1+Jack

name: Jack

record: 1+Terry

name: Terry

reduce.....................

key:Alima values:org.apache.hadoop.mapreduce.ReduceContext$ValueIterable@5914dda1

record: 1+Philip

name: Philip

reduce.....................

key:Alma values:org.apache.hadoop.mapreduce.ReduceContext$ValueIterable@5914dda1

record: 1+Mark

name: Mark

reduce.....................

key:Ben values:org.apache.hadoop.mapreduce.ReduceContext$ValueIterable@5914dda1

record: 1+Lucy

name: Lucy

reduce.....................

key:Jack values:org.apache.hadoop.mapreduce.ReduceContext$ValueIterable@5914dda1

record: 2+Alice

name: Alice

record: 2+Jesse

name: Jesse

record: 1+Tom

name: Tom

record: 1+Jone

name: Jone

grandchild: Tom grandparent: Alice

grandchild: Tom grandparent: Jesse

grandchild: Jone grandparent: Alice

grandchild: Jone grandparent: Jesse

reduce.....................

key:Jesse values:org.apache.hadoop.mapreduce.ReduceContext$ValueIterable@5914dda1

record: 1+Jack

name: Jack

record: 1+Terry

name: Terry

reduce.....................

key:Jone values:org.apache.hadoop.mapreduce.ReduceContext$ValueIterable@5914dda1

record: 2+Lucy

name: Lucy

record: 2+Jack

name: Jack

reduce.....................

key:Lucy values:org.apache.hadoop.mapreduce.ReduceContext$ValueIterable@5914dda1

record: 1+Tom

name: Tom

record: 1+Jone

name: Jone

record: 2+Mary

name: Mary

record: 2+Ben

name: Ben

grandchild: Tom grandparent: Mary

grandchild: Tom grandparent: Ben

grandchild: Jone grandparent: Mary

grandchild: Jone grandparent: Ben

reduce.....................

key:Mark values:org.apache.hadoop.mapreduce.ReduceContext$ValueIterable@5914dda1

record: 2+Terry

name: Terry

record: 2+Alma

name: Alma

reduce.....................

key:Mary values:org.apache.hadoop.mapreduce.ReduceContext$ValueIterable@5914dda1

record: 1+Lucy

name: Lucy

reduce.....................

key:Philip values:org.apache.hadoop.mapreduce.ReduceContext$ValueIterable@5914dda1

record: 2+Terry

name: Terry

record: 2+Alima

name: Alima

reduce.....................

key:Terry values:org.apache.hadoop.mapreduce.ReduceContext$ValueIterable@5914dda1

record: 2+Alice

name: Alice

record: 2+Jesse

name: Jesse

record: 1+Philip

name: Philip

record: 1+Mark

name: Mark

grandchild: Philip grandparent: Alice

grandchild: Philip grandparent: Jesse

grandchild: Mark grandparent: Alice

grandchild: Mark grandparent: Jesse

reduce.....................

key:Tom values:org.apache.hadoop.mapreduce.ReduceContext$ValueIterable@5914dda1

record: 2+Jack

name: Jack

record: 2+Lucy

name: Lucy

14/09/22 20:26:06 INFO mapred.Task: Task:attempt_local_0001_r_000000_0 is done. And is in the process of commiting

14/09/22 20:26:06 INFO mapred.LocalJobRunner:

14/09/22 20:26:06 INFO mapred.Task: Task attempt_local_0001_r_000000_0 is allowed to commit now

14/09/22 20:26:06 INFO output.FileOutputCommitter: Saved output of task 'attempt_local_0001_r_000000_0' to hdfs://localhost:9000/user/hadoop/stjoin_output06

14/09/22 20:26:07 INFO mapred.JobClient: map 100% reduce 0%

14/09/22 20:26:09 INFO mapred.LocalJobRunner: reduce > reduce

14/09/22 20:26:09 INFO mapred.Task: Task 'attempt_local_0001_r_000000_0' done.

14/09/22 20:26:10 INFO mapred.JobClient: map 100% reduce 100%

14/09/22 20:26:10 INFO mapred.JobClient: Job complete: job_local_0001

14/09/22 20:26:10 INFO mapred.JobClient: Counters: 22

14/09/22 20:26:10 INFO mapred.JobClient: Map-Reduce Framework

14/09/22 20:26:10 INFO mapred.JobClient: Spilled Records=56

14/09/22 20:26:10 INFO mapred.JobClient: Map output materialized bytes=418

14/09/22 20:26:10 INFO mapred.JobClient: Reduce input records=28

14/09/22 20:26:10 INFO mapred.JobClient: Virtual memory (bytes) snapshot=0

14/09/22 20:26:10 INFO mapred.JobClient: Map input records=15

14/09/22 20:26:10 INFO mapred.JobClient: SPLIT_RAW_BYTES=117

14/09/22 20:26:10 INFO mapred.JobClient: Map output bytes=356

14/09/22 20:26:10 INFO mapred.JobClient: Reduce shuffle bytes=0

14/09/22 20:26:10 INFO mapred.JobClient: Physical memory (bytes) snapshot=0

14/09/22 20:26:10 INFO mapred.JobClient: Reduce input groups=13

14/09/22 20:26:10 INFO mapred.JobClient: Combine output records=0

14/09/22 20:26:10 INFO mapred.JobClient: Reduce output records=13

14/09/22 20:26:10 INFO mapred.JobClient: Map output records=28

14/09/22 20:26:10 INFO mapred.JobClient: Combine input records=0

14/09/22 20:26:10 INFO mapred.JobClient: CPU time spent (ms)=0

14/09/22 20:26:10 INFO mapred.JobClient: Total committed heap usage (bytes)=406847488

14/09/22 20:26:10 INFO mapred.JobClient: File Input Format Counters

14/09/22 20:26:10 INFO mapred.JobClient: Bytes Read=163

14/09/22 20:26:10 INFO mapred.JobClient: FileSystemCounters

14/09/22 20:26:10 INFO mapred.JobClient: HDFS_BYTES_READ=326

14/09/22 20:26:10 INFO mapred.JobClient: FILE_BYTES_WRITTEN=81502

14/09/22 20:26:10 INFO mapred.JobClient: FILE_BYTES_READ=762

14/09/22 20:26:10 INFO mapred.JobClient: HDFS_BYTES_WRITTEN=149

14/09/22 20:26:10 INFO mapred.JobClient: File Output Format Counters

14/09/22 20:26:10 INFO mapred.JobClient: Bytes Written=149
Output (both versions produce the same result):

grandchild grandparent

Tom Alice

Tom Jesse

Jone Alice

Jone Jesse

Tom Mary

Tom Ben

Jone Mary

Jone Ben

Philip Alice

Philip Jesse

Mark Alice

Mark Jesse
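The result can be reproduced without a cluster. The sketch below uses plain Java collections in place of Hadoop; the class name `SelfJoinSketch` is hypothetical. It emits each pair twice with the `1`/`2` tags that version 2 uses and groups values by key in a `TreeMap`, which stands in for the sorted shuffle. It then takes the Cartesian product for each key. Hadoop does not guarantee the order of values within a key, but this sketch keeps input order, so its output order is deterministic.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Hypothetical in-memory simulation of the single-table join (no Hadoop involved).
class SelfJoinSketch {

    static List<String> join(List<String> lines) {
        // "Shuffle": key -> tagged values; TreeMap keeps keys sorted, like the sort phase
        Map<String, List<String>> groups = new TreeMap<>();
        for (String line : lines) {
            String[] f = line.trim().split("\\s+");
            if (f.length != 2 || f[0].equals("child")) {
                continue; // skip the header row and malformed lines
            }
            String child = f[0];
            String parent = f[1];
            // "Map", left table: key = parent, tag 1 carries the child (grandchild candidate)
            groups.computeIfAbsent(parent, k -> new ArrayList<>()).add("1+" + child);
            // "Map", right table: key = child, tag 2 carries the parent (grandparent candidate)
            groups.computeIfAbsent(child, k -> new ArrayList<>()).add("2+" + parent);
        }

        // "Reduce": for each person, pair every child with every parent of that person
        List<String> out = new ArrayList<>();
        for (List<String> values : groups.values()) {
            List<String> grandchildren = new ArrayList<>();
            List<String> grandparents = new ArrayList<>();
            for (String v : values) {
                String name = v.substring(2); // drop the "1+" / "2+" tag
                if (v.charAt(0) == '1') {
                    grandchildren.add(name);
                } else {
                    grandparents.add(name);
                }
            }
            for (String gc : grandchildren) {
                for (String gp : grandparents) {
                    out.add(gc + "\t" + gp);
                }
            }
        }
        return out;
    }

    public static void main(String[] args) {
        List<String> input = Arrays.asList(
                "child parent",
                "Tom Lucy", "Tom Jack", "Jone Lucy", "Jone Jack",
                "Lucy Mary", "Lucy Ben", "Jack Alice", "Jack Jesse",
                "Terry Alice", "Terry Jesse", "Philip Terry", "Philip Alima",
                "Mark Terry", "Mark Alma");
        for (String pair : join(input)) {
            System.out.println(pair);
        }
    }
}
```

With the input data above, `main` prints the same 12 grandchild-grandparent pairs in the same order as the job output, without the header row.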
