[转]Deep Reinforcement Learning Based Trading Application at JP Morgan Chase

Deep Reinforcement Learning Based Trading Application at JP Morgan Chase

https://medium.com/@ranko.mosic/reinforcement-learning-based-trading-application-at-jp-morgan-chase-f829b8ec54f2

FT released a story today about the new application that will optimize JP Morgan Chase trade execution ( Business Insider article on the same topic for readers that do not have FT subscription ). The intent is to reduce market impact and provide best trade execution results for large orders.

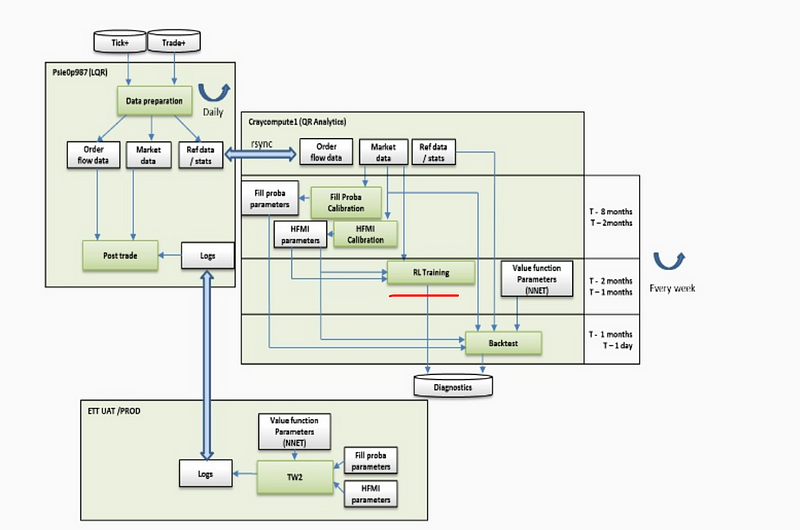

It is a complex application with many moving parts:

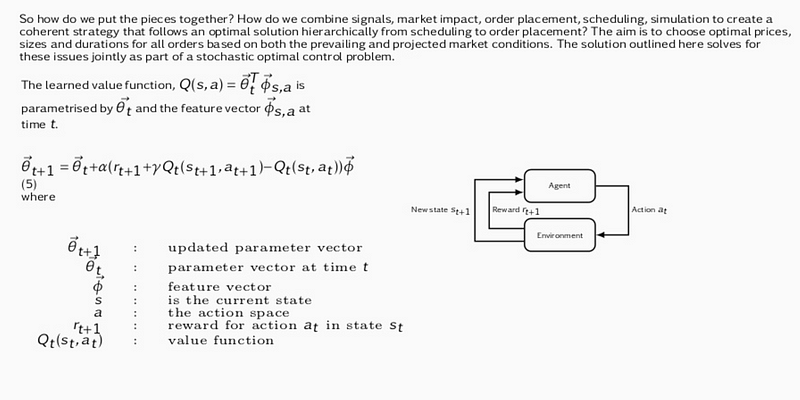

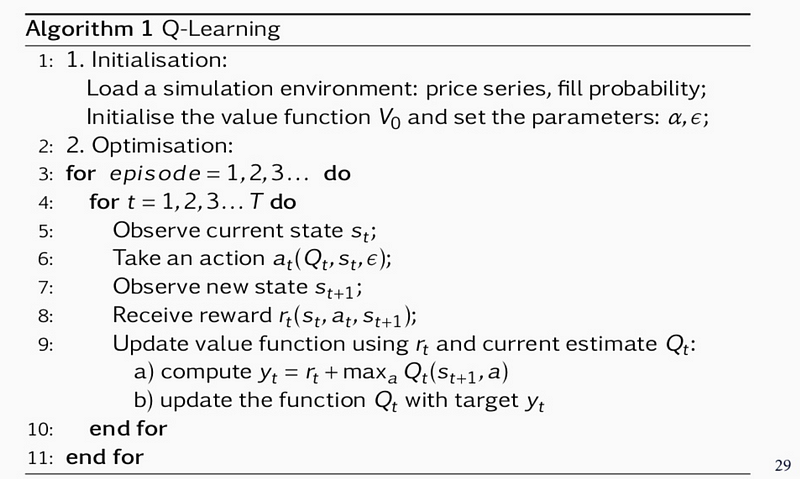

Its core is an RL algorithm that learns to perform the best action ( choose optimal price, duration and order size ) based on market conditions. It is not clear if it is Sarsa ( On-Policy TD Control) or Q-learning (Off-Policy Temporal Difference Control Algorithm ) as both algorithms are present in JP Morgan slides:

Sarsa

Q-learning

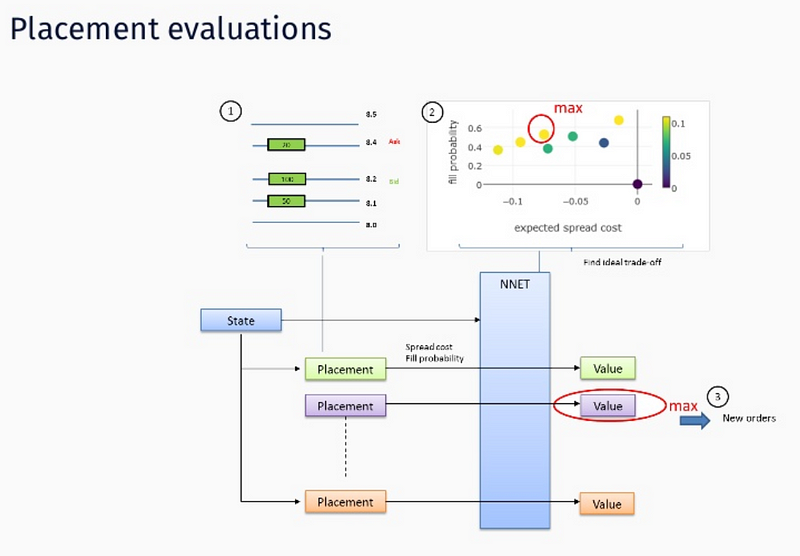

State consists of price series, expected spread cost, fill probability, size placed, as well as elapsed time, %progress, etc. Rewards are immediate rewards ( price spread ) and terminal ( end of episode ) rewards like completion, order duration and market penalties ( obviously those are negative rewards that punish the agent along these dimensions ).

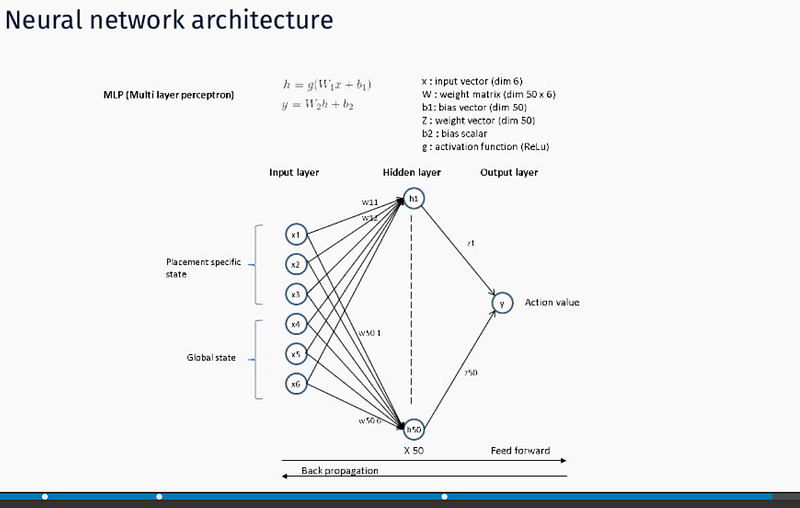

Actions are memorized as weights of a Deep Neural Network — function approximation via NN is used since state, action space is too big to be handled in tabular form. We assume stochastic gradient descent is used for both feed forward and backprop operation operation ( hence Deep designation ):

JP Morgan is convinced this is the very first real time trading AI/ML application on Wall Street. We are assuming this is not true i.e. there are surely other players operating in this space as RL implementation to order execution is known for quite a while now ( Kearns and Nevmyvaka 2006 ).

The latest LOXM developmentswill be presented at QuantMinds Conference in Lisbon (May of 2018).

Instinet is also using Q-learning, probably for the same purpose ( market impact reduction ).

[转]Deep Reinforcement Learning Based Trading Application at JP Morgan Chase的更多相关文章

- 【资料总结】| Deep Reinforcement Learning 深度强化学习

在机器学习中,我们经常会分类为有监督学习和无监督学习,但是尝尝会忽略一个重要的分支,强化学习.有监督学习和无监督学习非常好去区分,学习的目标,有无标签等都是区分标准.如果说监督学习的目标是预测,那么强 ...

- (转) Deep Reinforcement Learning: Playing a Racing Game

Byte Tank Posts Archive Deep Reinforcement Learning: Playing a Racing Game OCT 6TH, 2016 Agent playi ...

- (转) Deep Reinforcement Learning: Pong from Pixels

Andrej Karpathy blog About Hacker's guide to Neural Networks Deep Reinforcement Learning: Pong from ...

- (转) Playing FPS games with deep reinforcement learning

Playing FPS games with deep reinforcement learning 博文转自:https://blog.acolyer.org/2016/11/23/playing- ...

- (zhuan) Deep Reinforcement Learning Papers

Deep Reinforcement Learning Papers A list of recent papers regarding deep reinforcement learning. Th ...

- 论文笔记之:Asynchronous Methods for Deep Reinforcement Learning

Asynchronous Methods for Deep Reinforcement Learning ICML 2016 深度强化学习最近被人发现貌似不太稳定,有人提出很多改善的方法,这些方法有很 ...

- [DQN] What is Deep Reinforcement Learning

已经成为DL中专门的一派,高大上的样子 Intro: MIT 6.S191 Lecture 6: Deep Reinforcement Learning Course: CS 294: Deep Re ...

- 论文笔记:Learning how to Active Learn: A Deep Reinforcement Learning Approach

Learning how to Active Learn: A Deep Reinforcement Learning Approach 2018-03-11 12:56:04 1. Introduc ...

- 18 Issues in Current Deep Reinforcement Learning from ZhiHu

深度强化学习的18个关键问题 from: https://zhuanlan.zhihu.com/p/32153603 85 人赞了该文章 深度强化学习的问题在哪里?未来怎么走?哪些方面可以突破? 这两 ...

随机推荐

- HDFS shell操作及HDFS Java API编程

HDFS shell操作及HDFS Java API编程 1.熟悉Hadoop文件结构. 2.进行HDFS shell操作. 3.掌握通过Hadoop Java API对HDFS操作. 4.了解Had ...

- Async:简洁优雅的异步之道

前言 在异步处理方案中,目前最为简洁优雅的便是 async函数(以下简称A函数).经过必要的分块包装后,A函数能使多个相关的异步操作如同同步操作一样聚合起来,使其相互间的关系更为清晰.过程更为简洁.调 ...

- nginx支持HTTP2的配置过程

一.获取安装包 http://zlib.net/zlib-1.2.11.tar.gz https://www.openssl.org/source/openssl-1.0.2e.tar.gz (ope ...

- APK骨架分析

APK反编译的一般步骤是: 使用apktool将apk文件解压(后辍apk改为rar用winrar也可解压但这样不能解密res/value目录下的各文件),厉害的可以直接静态分析smali文件(ida ...

- android开发环境搭建教程

首先安装jdk,然后下载android studio,双击安装即可. 官网:http://www.android-studio.org/ 直接下载链接:https://dl.google.com/dl ...

- vue中alert toast confirm loading 公用

import Vue from 'vue' import { ToastPlugin, AlertPlugin, ConfirmPlugin, LoadingPlugin } from 'vux' / ...

- mac nginx+php-fpm配置(安装过后nginx后访问php文件下载,访问php文件请求200显示空白页面)

访问php文件下载是因为没配置php-fpm 两个问题主要都是nginx.conf配置的问题: /usr/local/etc/nginx/nginx.conf server { listen 8 ...

- mysql 如何在访问某张数据表按照某个字段分类输出

也许大家有时候会遇到需要将把数据库中的某张表的数据按照该表的某个字段分类输出,比如一张数据表area如下 我们需要将里面的area按照serialize字段进行分类输出,比如这种形式: areas ...

- U启动安装原版Win7系统教程

1.制作u启动u盘启动盘2.下载原版win7系统镜像并存入u盘启动盘3.硬盘模式更改为ahci模式 第一步: 将准备好的u启动u盘启动盘插在电脑usb接口上,然后重启电脑,在出现开机画面时通过u盘启动 ...

- day16-python常用的内置模块2

logging模块的使用 一:日志是我们排查问题的关键利器,写好日志记录,当我们发生问题时,可以快速定位代码范围进行修改.Python有给我们开发者们提供好的日志模块,下面我们就来介绍一下loggin ...