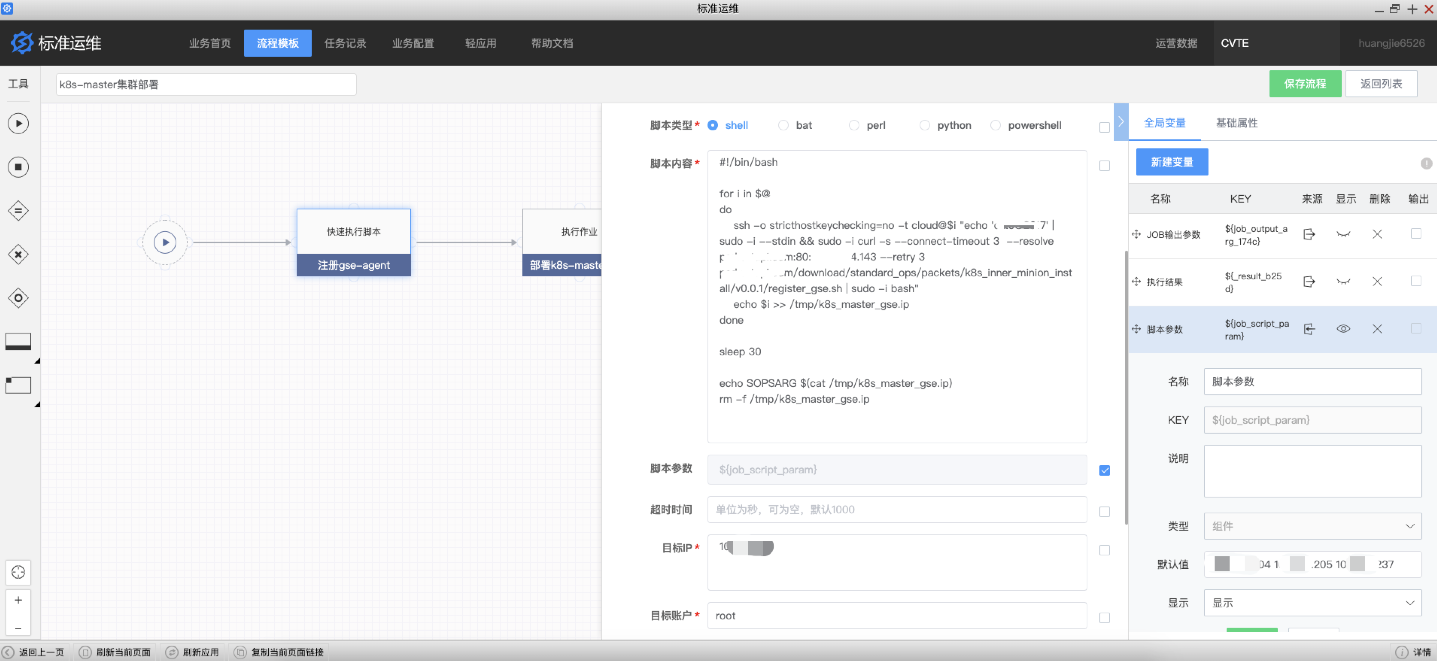

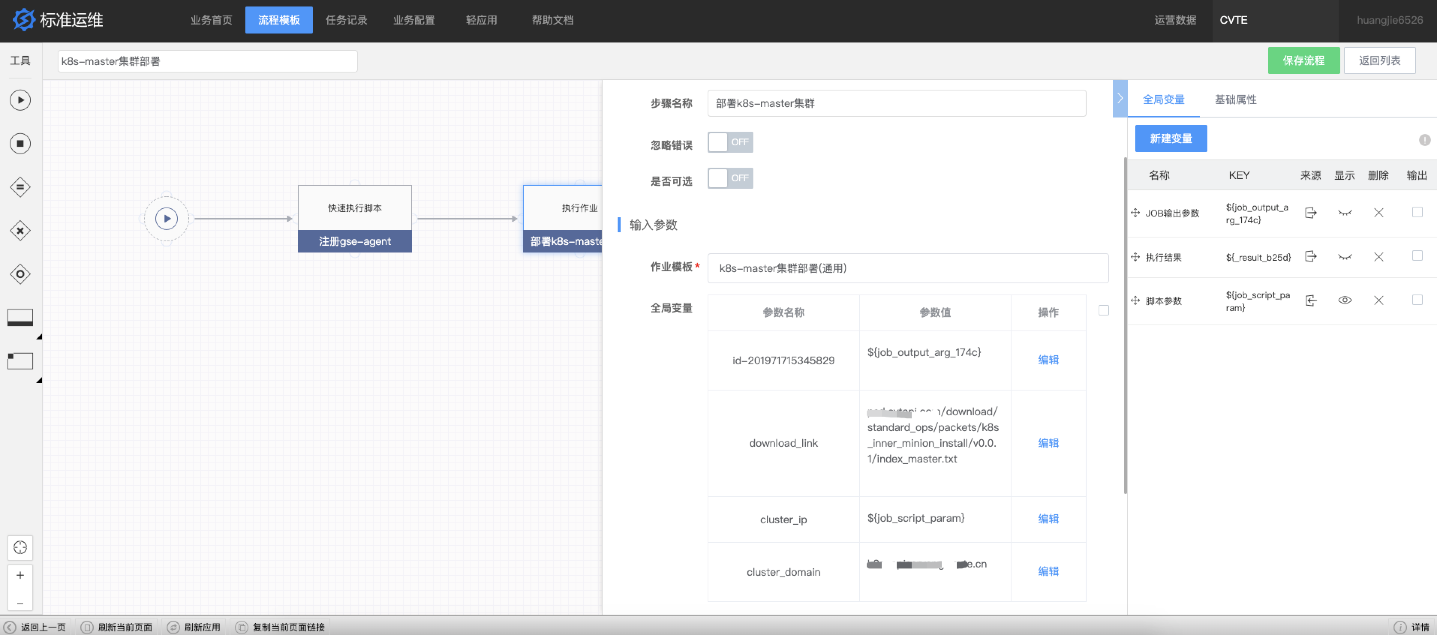

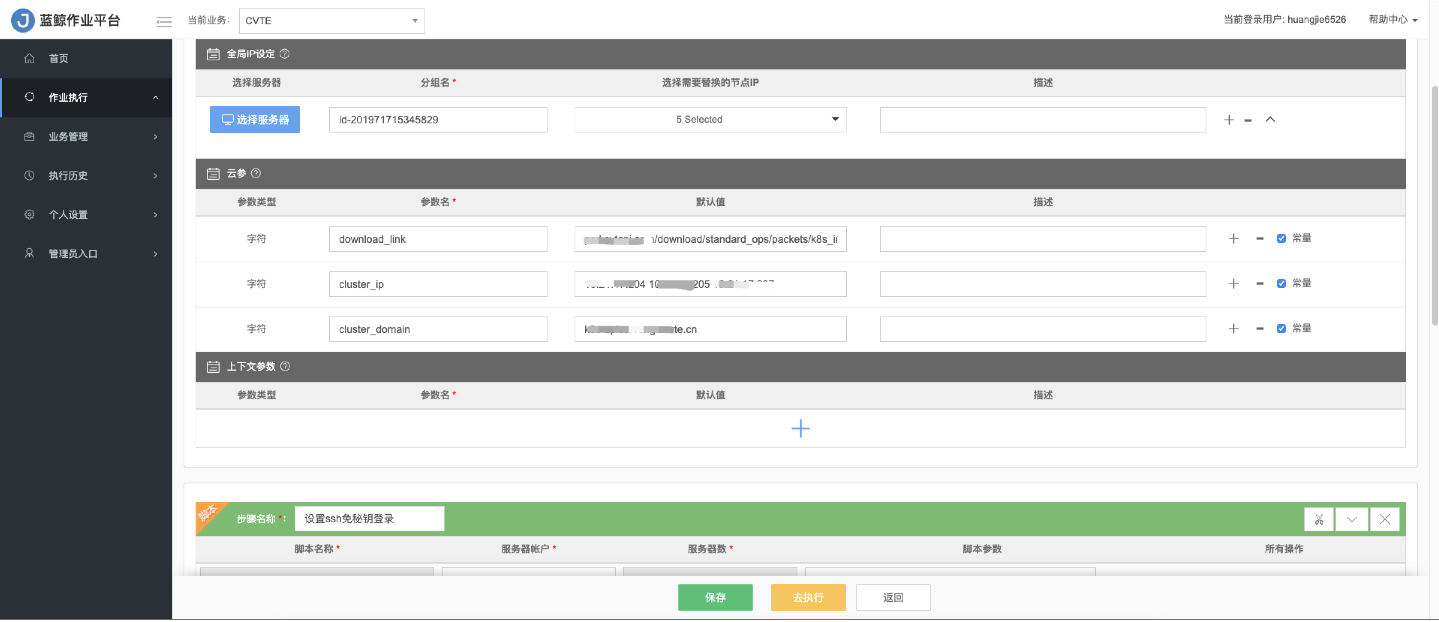

[Original] Automated Deployment of a K8S (v1.10.11) Cluster

```bash
#!/bin/bash
anynowtime="date +'%Y-%m-%d %H:%M:%S'"
NOW="echo [\`$anynowtime\`][PID:$$]"

##### Call when the script starts: prints the current timestamp and PID.
function job_start
{
    echo "`eval $NOW` job_start"
}

##### Call in the success branch: prints the current timestamp and PID, then exits 0.
function job_success
{
    MSG="$*"
    echo "`eval $NOW` job_success:[$MSG]"
    exit 0
}

##### Call in the failure branch: prints the current timestamp and PID, then exits 1.
function job_fail
{
    MSG="$*"
    echo "`eval $NOW` job_fail:[$MSG]"
    exit 1
}

job_start

# Install a shared root key pair so the masters can scp certificates from each other,
# and allow root login over SSH.
function set_ssh_login()
{
    mkdir -p /root/.ssh/
    # ">" rather than ">>": appending to an existing id_rsa would corrupt the key file.
    echo "-----BEGIN RSA PRIVATE KEY-----
MIIEowIBAAKCAQEAs889Icr6U1aCTohdxQL+e/L5jIqmEBHzlTqTGb57b4vthxuT
n/GP3ccvBHwvdssdvsdvJwgWGZqHVeDOgzzkFbdl42wE2jW8A8lIhOS+xE
66w1VaO0Ii/+EwS7+FQHDyidYnlJ5dAPA/Uq+QIDAQABAoIBAD2BvHWcyzhKtVRL
zVehCJA5sydiHiANI/d+C+eYgvzLLrsysbLanM3OXsT3+IMrmWGLOYqAvCtsKCAx
fb1UW1N7uF4HO2FYrQsvdeVwew2FqMKNUQqvTlo+m3vKaxmeEOwg19aPXM
dGQGfKGyk5qpK+YOkrjvpgBMLmg8kOLRgePj20TgF/7MSLkZAknm/qZOKRBc1Eyc
jSQePCkCgYEA4m3/NfWvNqX9MckAssTqgGhKyVl6VB5h1rGexmOnZRUNT7YGaS1F
IeY/wfM/K+t0oNL+lMsdv5dr4KWT56uW/v7hhLSCPLZgh65poBjjVudANxdHTvsd
/gmBvNCkA7gokoiA+PdCBZKntm/YnLzGNu6WkXzAQ7WfvNJntCOhvO/K/pyNIH+Q
dhHcik03nrJgeYTOGyxUG1R45CgurRdmh70iH+8CgYBgI3gCvyf/ugaBBukG/lja
4G0whI9N/ABqmcviTBmc741RVOXv7kq2E/7qKI+f5D8Gsc9p9TtEgAUeO2lQ6kMi
wGGcXQpVzoaEE0tYcLRj+UxeNvsz1oFDpItMs25Dk97tDbNzDWyJ15K9kMGKkC
/pwHId7SI0rkJ5ysx/qn4dSGB/G1ocFxrDqfH/3NisxaFxGtJyNodG2TNMJvl0Ir
hL0GfMKF5Bq2CcS2++x9WkJJ4sR4Xi52MxLYCZhx7a3BTX9VY01HhjOpRZrzz02w
mBSfAoGBAKmDfycTkiV1cddx5AVej8cHHhNcLenPlxqwFCZumij6RWTZaTMWdpdQ
g1NE8KnNBYXwj2hSqdpDAO/rdif6ReNsQsYhL24OA0sBk7sS/SSBT8JvbvvF0jYq
cOxPIozavG58534jcEVDaWnGwuqNa7RgxOKsCAVRSdt2YWKPwNHJ
-----END RSA PRIVATE KEY-----" > /root/.ssh/id_rsa
    echo "ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAABAQCzzz0hyvpTVoJOiF3FAv578vmMiqYQEfOVOpMZvntvi+2HG5Of8Y/dxy8EfB92SIrTxKeE+HiLgMhkPC3wLH9Y5oh1emi+y7/PV0OoXpf/A/pBr032BAFXMVOn5F8OgEuIQpN3J9o63zH0x7hIZGOGHNfp083Y1+/olbGBkggpyGeXEdwNtFh/acnCBYZmodV4M6DPOQVt2XjbATaNbwDyUiE5L7ETrrDVVo7QiL/4TBLv4VAcPKJ1ieUnl0A8D9Sr5 root@k8s" >> /root/.ssh/authorized_keys
    chmod 700 /root/.ssh
    chmod 600 /root/.ssh/id_rsa
    chmod 600 /root/.ssh/authorized_keys
    # The sed expression must be quoted; unquoted, the shell splits it at the spaces.
    sed -i 's/PermitRootLogin no/PermitRootLogin yes/g' /etc/ssh/sshd_config
    systemctl restart sshd
}

set_ssh_login
```
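Because `set_ssh_login` appends to `authorized_keys` with `>>`, running it a second time leaves a duplicate key line. A minimal sketch of an idempotent append, assuming a `grep` that supports `-q`, `-x` and `-F` (GNU and BSD both do); `append_once` is a hypothetical helper, not part of the original script:

```bash
#!/bin/bash
# Hypothetical helper: append LINE to FILE only if an identical line is not already present.
append_once() {
    local line=$1 file=$2
    touch "$file"
    # -q: quiet, -x: match the whole line, -F: treat the pattern as a fixed string
    grep -qxF -- "$line" "$file" || printf '%s\n' "$line" >> "$file"
}

# Example (inside set_ssh_login):
#   append_once "ssh-rsa AAAA... root@k8s" /root/.ssh/authorized_keys
```

Matching whole fixed-string lines avoids treating the `+` and `/` characters of a base64 key as regex syntax.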

```bash
#!/bin/bash
anynowtime="date +'%Y-%m-%d %H:%M:%S'"
NOW="echo [\`$anynowtime\`][PID:$$]"

##### Call when the script starts: prints the current timestamp and PID.
function job_start
{
    echo "`eval $NOW` job_start"
}

##### Call in the success branch: prints the current timestamp and PID, then exits 0.
function job_success
{
    MSG="$*"
    echo "`eval $NOW` job_success:[$MSG]"
    exit 0
}

##### Call in the failure branch: prints the current timestamp and PID, then exits 1.
function job_fail
{
    MSG="$*"
    echo "`eval $NOW` job_fail:[$MSG]"
    exit 1
}

job_start

# Usage: $0 MASTER1_IP MASTER2_IP MASTER3_IP
master1=$1
master2=$2
master3=$3

# Disable firewalld and SELinux enforcement, and let bridged traffic pass through iptables.
systemctl stop firewalld
systemctl disable firewalld
setenforce 0

cat <<EOF > /etc/sysctl.d/k8s.conf
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
net.ipv4.ip_forward=1
EOF

sysctl --system
iptables -P FORWARD ACCEPT

# Wait for an IPv4 address: eth0 first, otherwise the interface of the default route.
while true
do
    tmp_ip=$(ip a | grep eth0 -C 0 | grep inet | awk '{print $2}' | awk -F "/" '{print $1}')
    if [[ ${tmp_ip} == "" ]]; then
        ip=$(ip a | grep $(route -n | grep UG | awk '{print $NF}') -C 0 | grep inet | awk '{print $2}' | awk -F "/" '{print $1}')
    else
        ip=${tmp_ip}
    fi

    if [[ "x$ip" == "x" ]]; then
        sleep 5
        echo "waiting ip..."
    else
        break
    fi
done

# Host names are derived from IPs, e.g. 10.0.0.1 -> k8s-10-0-0-1.
hostname=k8s-${ip//./-}
hostnamectl set-hostname --static $hostname

hostname1=k8s-${master1//./-}
hostname2=k8s-${master2//./-}
hostname3=k8s-${master3//./-}

echo "$master1 $hostname1" >> /etc/hosts
echo "$master2 $hostname2" >> /etc/hosts
echo "$master3 $hostname3" >> /etc/hosts

yum -y install etcd

# The member name must match this host's entry in ETCD_INITIAL_CLUSTER.
if [[ $ip == $master1 ]]; then
    etcd_name="etcd1"
elif [[ $ip == $master2 ]]; then
    etcd_name="etcd2"
else
    etcd_name="etcd3"
fi

cat << EOF > /etc/etcd/etcd.conf
ETCD_NAME=${etcd_name}
ETCD_DATA_DIR="/var/lib/etcd/default.etcd"
ETCD_LISTEN_PEER_URLS="http://0.0.0.0:2380"
ETCD_LISTEN_CLIENT_URLS="http://0.0.0.0:2379"
ETCD_INITIAL_ADVERTISE_PEER_URLS="http://${hostname}:2380"
ETCD_INITIAL_CLUSTER="etcd1=http://${hostname1}:2380,etcd2=http://${hostname2}:2380,etcd3=http://${hostname3}:2380"
ETCD_INITIAL_CLUSTER_STATE="new"
ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"
ETCD_ADVERTISE_CLIENT_URLS="http://${hostname}:2379"
EOF

# Unquoted EOF: $(nproc) is expanded now, while \${...} is written as ${...} for systemd.
cat << EOF > /usr/lib/systemd/system/etcd.service
[Unit]
Description=Etcd Server
After=network.target
After=network-online.target
Wants=network-online.target

[Service]
Type=notify
WorkingDirectory=/var/lib/etcd/
EnvironmentFile=-/etc/etcd/etcd.conf
User=etcd
# set GOMAXPROCS to number of processors
ExecStart=/bin/bash -c "GOMAXPROCS=$(nproc) /usr/bin/etcd --name=\"\${ETCD_NAME}\" --data-dir=\"\${ETCD_DATA_DIR}\" --listen-client-urls=\"\${ETCD_LISTEN_CLIENT_URLS}\""
Restart=on-failure
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target
EOF

systemctl daemon-reload
systemctl enable etcd
systemctl start etcd
systemctl status etcd
sleep 10
etcdctl cluster-health
etcdctl member list

# flannel network config (etcd v2 API): 172.30.0.0/16 pod network, one /24 per node, vxlan backend.
etcdctl mkdir /k8s
etcdctl set /k8s/network/config '{ "Network": "172.30.0.0/16", "SubnetLen":24,"Backend": { "Type": "vxlan" } }'
```
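The etcd script depends on three names agreeing: the host name derived from an IP, the `ETCD_NAME` chosen by comparing IPs, and the entries in `ETCD_INITIAL_CLUSTER`. A minimal sketch of that naming logic as pure functions, so the generated values can be checked before touching a machine; `host_for_ip`, `etcd_name_for` and `initial_cluster` are hypothetical helper names:

```bash
#!/bin/bash
# Hypothetical helper: host name the scripts derive from an IP (dots become dashes).
host_for_ip() {
    echo "k8s-${1//./-}"
}

# Hypothetical helper: member name for THIS_IP, using the same rule as the script:
# master1 -> etcd1, master2 -> etcd2, anything else -> etcd3.
etcd_name_for() {
    local ip=$1 m1=$2 m2=$3
    if [[ $ip == "$m1" ]]; then
        echo etcd1
    elif [[ $ip == "$m2" ]]; then
        echo etcd2
    else
        echo etcd3
    fi
}

# Hypothetical helper: build the ETCD_INITIAL_CLUSTER value from the master IPs, in order.
initial_cluster() {
    local i=1 out="" m
    for m in "$@"; do
        # ${out:+,} adds a comma separator only after the first entry
        out+="${out:+,}etcd${i}=http://$(host_for_ip "$m"):2380"
        i=$((i + 1))
    done
    echo "$out"
}
```

Note that the `else` branch maps any host that is not master1 or master2 to `etcd3`, so a mistyped third IP produces a member whose peer URL does not match its entry in `ETCD_INITIAL_CLUSTER`.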

```bash
#!/bin/bash
anynowtime="date +'%Y-%m-%d %H:%M:%S'"
NOW="echo [\`$anynowtime\`][PID:$$]"

##### Call when the script starts: prints the current timestamp and PID.
function job_start
{
    echo "`eval $NOW` job_start"
}

##### Call in the success branch: prints the current timestamp and PID, then exits 0.
function job_success
{
    MSG="$*"
    echo "`eval $NOW` job_success:[$MSG]"
    exit 0
}

##### Call in the failure branch: prints the current timestamp and PID, then exits 1.
function job_fail
{
    MSG="$*"
    echo "`eval $NOW` job_fail:[$MSG]"
    exit 1
}

job_start

# Usage: $0 MASTER1_IP
# master1 generates the CA and the API server certificate; the other masters copy them.
mkdir -p /root/pki
mkdir -p /etc/kubernetes/pki
mkdir -p /etc/kubernetes/auth

# Wait for an IPv4 address: eth0 first, otherwise the interface of the default route.
while true
do
    tmp_ip=$(ip a | grep eth0 -C 0 | grep inet | awk '{print $2}' | awk -F "/" '{print $1}')
    if [[ ${tmp_ip} == "" ]]; then
        ip=$(ip a | grep $(route -n | grep UG | awk '{print $NF}') -C 0 | grep inet | awk '{print $2}' | awk -F "/" '{print $1}')
    else
        ip=${tmp_ip}
    fi

    if [[ "x$ip" == "x" ]]; then
        sleep 5
        echo "waiting ip..."
    else
        break
    fi
done

if [[ $ip == $1 ]]; then
    cd /root/pki
    cat << EOF > csr.conf
[ req ]
default_bits = 2048
prompt = no
default_md = sha256
req_extensions = req_ext
distinguished_name = dn

[ dn ]
C = cn
ST = cn
L = cn
O = gz
OU = gz
CN = $1

[ req_ext ]
subjectAltName = IP:10.96.0.1

[ alt_names ]
DNS.1 = kubernetes
DNS.2 = kubernetes.default
DNS.3 = kubernetes.default.svc
DNS.4 = kubernetes.default.svc.cluster
IP.1 = $1
IP.2 = 10.96.0.1

[ v3_ext ]
authorityKeyIdentifier=keyid,issuer:always
basicConstraints=CA:FALSE
keyUsage=keyEncipherment,dataEncipherment
extendedKeyUsage=serverAuth,clientAuth
subjectAltName=@alt_names
EOF

    # Self-signed CA
    openssl genrsa -out ca.key 2048
    openssl req -x509 -new -nodes -key ca.key -config csr.conf -out ca.crt -days 10000

    # API server certificate signed by the CA. -extensions v3_ext is required: without it,
    # -extfile takes extensions from the (empty) default section and the cert gets no SANs.
    openssl genrsa -out server.key 2048
    openssl req -new -key server.key -config csr.conf -out server.csr
    openssl x509 -req -in server.csr -CA ca.crt -CAkey ca.key -CAcreateserial -days 10000 -extensions v3_ext -extfile csr.conf -out server.crt

    cp /root/pki/* /etc/kubernetes/pki/
    chmod 600 /etc/kubernetes/pki/*

    # Static credentials for --basic-auth-file and --token-auth-file (format: secret,user,uid).
    echo "*******,abc,1" > /etc/kubernetes/auth/auth.csv
    echo "*******,abc,1" > /etc/kubernetes/auth/token.csv
else
    scp -o StrictHostKeyChecking=no $1:/root/pki/* /etc/kubernetes/pki/
    scp -o StrictHostKeyChecking=no $1:/etc/kubernetes/auth/* /etc/kubernetes/auth/
fi
```
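Each script repeats the same IP-detection loop built on `ip a | grep ... | awk`. A sketch of the parsing step as a reusable function, assuming the one-line output of iproute2's `ip -o -4 addr show`, in which the fourth field is `ADDRESS/PREFIX`; `first_ipv4` and `detect_ip` are hypothetical names:

```bash
#!/bin/bash
# Hypothetical helper: print the first IPv4 address found in `ip -o -4 addr show` output on stdin.
# Reading stdin keeps the parsing testable without real interfaces.
first_ipv4() {
    # One-line format: "2: eth0    inet 10.0.0.5/24 brd ... scope global eth0"
    awk '$3 == "inet" { split($4, a, "/"); print a[1]; exit }'
}

# Hypothetical helper: eth0 first, otherwise the interface that carries the default route.
detect_ip() {
    local addr dev
    addr=$(ip -o -4 addr show dev eth0 2>/dev/null | first_ipv4)
    if [[ -z $addr ]]; then
        # "default via 10.0.0.1 dev eth1 ..." -> print the word after "dev"
        dev=$(ip route show default 2>/dev/null | awk '{ for (i = 1; i < NF; i++) if ($i == "dev") { print $(i + 1); exit } }')
        [[ -n $dev ]] && addr=$(ip -o -4 addr show dev "$dev" | first_ipv4)
    fi
    echo "$addr"
}
```

In the scripts, the `while true` loop could then call `ip=$(detect_ip)` and keep its existing wait-and-retry branch.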

```bash
#!/bin/bash
anynowtime="date +'%Y-%m-%d %H:%M:%S'"
NOW="echo [\`$anynowtime\`][PID:$$]"

##### Call when the script starts: prints the current timestamp and PID.
function job_start
{
    echo "`eval $NOW` job_start"
}

##### Call in the success branch: prints the current timestamp and PID, then exits 0.
function job_success
{
    MSG="$*"
    echo "`eval $NOW` job_success:[$MSG]"
    exit 0
}

##### Call in the failure branch: prints the current timestamp and PID, then exits 1.
function job_fail
{
    MSG="$*"
    echo "`eval $NOW` job_fail:[$MSG]"
    exit 1
}

job_start

# Usage: $0 MASTER1_IP MASTER2_IP MASTER3_IP CLUSTER_DOMAIN
master1=$1
master2=$2
master3=$3
cluster_domain=$4

# Wait for an IPv4 address: eth0 first, otherwise the interface of the default route.
while true
do
    tmp_ip=$(ip a | grep eth0 -C 0 | grep inet | awk '{print $2}' | awk -F "/" '{print $1}')
    if [[ ${tmp_ip} == "" ]]; then
        ip=$(ip a | grep $(route -n | grep UG | awk '{print $NF}') -C 0 | grep inet | awk '{print $2}' | awk -F "/" '{print $1}')
    else
        ip=${tmp_ip}
    fi

    if [[ "x$ip" == "x" ]]; then
        sleep 5
        echo "waiting ip..."
    else
        break
    fi
done

# Print the first reachable download server (the addresses are placeholders).
function get_download_host_resolve()
{
    local host
    iplist=("xxx.xxx.xxx.xxx" "xxx.xxx.xxx.xxx")
    for host in ${iplist[@]}; do
        ping -c 1 -W 3 $host > /dev/null 2>&1
        if [[ $? == 0 ]]; then
            echo $host
            break
        fi
    done
}

download_host_ip=$(get_download_host_resolve)
echo "use download_host_ip:$download_host_ip"

# "domain" stands for the download host name, pinned to $download_host_ip via --resolve.
# $download_link (the URL of the file index) is not set in this script and must be provided.
curl_option="-s --connect-timeout 3 --resolve domain:80:$download_host_ip --retry 3"
index_list=`curl $curl_option $download_link`
[[ $? != 0 ]] && job_fail "download index_list fail"

echo "================= index list start ====================="
echo $index_list
echo "================= index list end ======================="

cd /root/
mkdir -p docker
mkdir -p kubernetes

for i in ${index_list[@]}; do
    echo "downloading... $i"
    curl -O $curl_option $i
    [[ $? != 0 ]] && job_fail "download file fail"
done

mv kube* /root/kubernetes/
cd /root/kubernetes

chmod 744 kube-controller-manager
chmod 744 kube-scheduler
chmod 744 kube-apiserver
chmod 744 kube-proxy
chmod 744 kubelet
chmod 744 kubectl

cp kube-controller-manager kube-scheduler kube-apiserver kubectl /usr/bin/
cp kube-controller-manager kube-scheduler kube-apiserver kubectl /bin/
cp kube-*.service /usr/lib/systemd/system/
systemctl daemon-reload

mkdir -p /var/log/kubernetes/kube-proxy
mkdir -p /var/log/kubernetes/kube-scheduler
mkdir -p /var/log/kubernetes/kubelet
mkdir -p /var/log/kubernetes/kube-controller-manager
mkdir -p /var/log/kubernetes/kube-apiserver

# --insecure-bind-address=0.0.0.0 serves the unauthenticated insecure port (8080 by default)
# on every interface. 10.96.0.1, the first address of the service range, is the kubernetes
# Service IP included in the certificate SANs.
cat << EOF > /etc/kubernetes/kube-apiserver
KUBE_OTHER_OPTIONS="--kubelet-preferred-address-types=InternalIP"
KUBE_LOGTOSTDERR="--logtostderr=false"
KUBE_LOG_DIR="--log-dir=/var/log/kubernetes/kube-apiserver"
KUBE_LOG_LEVEL="--v=5"
KUBE_ETCD_SERVERS="--etcd-servers=http://${master1}:2379,http://${master2}:2379,http://${master3}:2379"
KUBE_API_ADDRESS="--insecure-bind-address=0.0.0.0"
KUBE_API_PORT="--secure-port=6443"
KUBE_ADVERTISE_ADDR="--advertise-address=${ip}"
KUBE_ALLOW_PRIV="--allow-privileged=true"
KUBE_SERVICE_ADDRESSES="--service-cluster-ip-range=10.96.0.0/16"
KUBE_ADMISSION_CONTROL="--admission-control=Initializers,NamespaceLifecycle,ServiceAccount,DefaultTolerationSeconds,ResourceQuota"
KUBE_API_TLS_CERT_FILE="--tls-cert-file=/etc/kubernetes/pki/server.crt"
KUBE_API_TLS_PRIVATE_KEY_FILE="--tls-private-key-file=/etc/kubernetes/pki/server.key"
KUBE_API_TOKEN_AUTH_ARGS="--token-auth-file=/etc/kubernetes/auth/token.csv"
KUBE_API_BASIC_AUTH_ARGS="--basic-auth-file=/etc/kubernetes/auth/auth.csv"
KUBE_NEW_FEATURES="--feature-gates=CustomPodDNS=true"
KUBE_APISERVER_COUNT="--apiserver-count=3"
EOF

cat << EOF > /etc/kubernetes/kube-controller-manager
KUBE_MASTER="--master=https://${cluster_domain}"
KUBE_LOGTOSTDERR="--logtostderr=false"
KUBE_LOG_LEVEL="--v=5"
KUBE_LOG_DIR="--log-dir=/var/log/kubernetes/kube-controller-manager"
KUBE_CONFIG="--kubeconfig=/etc/kubernetes/kubelet.kubeconfig"
KUBE_CONTROLLER_MANAGER_ROOT_CA_FILE="--root-ca-file=/etc/kubernetes/pki/ca.crt"
KUBE_CONTROLLER_MANAGER_SERVICE_ACCOUNT_PRIVATE_KEY_FILE="--service-account-private-key-file=/etc/kubernetes/pki/server.key"
KUBE_LEADER_ELECT="--leader-elect=true"
KUBE_CONTROLLER_OTHER_OPTIONS="--terminated-pod-gc-threshold=1"
KUBE_NEW_FEATURES="--feature-gates=CustomPodDNS=true"
EOF

cat << EOF > /etc/kubernetes/kube-scheduler
KUBE_LOGTOSTDERR="--logtostderr=false"
KUBE_MASTER="--master=https://${cluster_domain}"
KUBE_LOG_LEVEL="--v=5"
KUBE_LOG_DIR="--log-dir=/var/log/kubernetes/kube-scheduler"
KUBE_CONFIG="--kubeconfig=/etc/kubernetes/kubelet.kubeconfig"
KUBE_LEADER_ELECT="--leader-elect=true"
KUBE_SCHEDULER_ARGS=""
KUBE_NEW_FEATURES="--feature-gates=CustomPodDNS=true"
EOF

# kubeconfig referenced by KUBE_CONFIG above (controller manager and scheduler).
cat << EOF > /etc/kubernetes/kubelet.kubeconfig
apiVersion: v1
kind: Config
clusters:
- cluster:
    server: https://${cluster_domain}
    insecure-skip-tls-verify: true
  name: master-server
contexts:
- context:
    cluster: master-server
    user: remote-api-auth
  name: default
current-context: default
users:
- name: remote-api-auth
  user:
    password: *******
    username: abc
EOF

systemctl enable kube-apiserver kube-controller-manager kube-scheduler
systemctl start kube-apiserver kube-controller-manager kube-scheduler
systemctl status kube-apiserver kube-controller-manager kube-scheduler
sleep 10
```
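The master scripts read their settings from positional parameters (`$1` to `$4`) without checking them, so a wrong IP only surfaces later as an etcd or certificate failure. A minimal sketch of up-front validation; `is_ipv4` and `validate_args` are hypothetical helpers, and the IP check is a plain dotted-quad test:

```bash
#!/bin/bash
# Hypothetical helper: succeed if $1 is a dotted-quad IPv4 address with octets 0-255.
is_ipv4() {
    local re='^([0-9]{1,3})\.([0-9]{1,3})\.([0-9]{1,3})\.([0-9]{1,3})$'
    [[ $1 =~ $re ]] || return 1
    local octet
    for octet in "${BASH_REMATCH[@]:1}"; do
        # 10# forces base 10 so an octet such as "08" is not read as octal
        (( 10#$octet <= 255 )) || return 1
    done
    return 0
}

# Hypothetical helper: expect MASTER1 MASTER2 MASTER3 CLUSTER_DOMAIN.
validate_args() {
    [[ $# -eq 4 ]] || { echo "usage: $0 MASTER1 MASTER2 MASTER3 CLUSTER_DOMAIN" >&2; return 1; }
    local m
    for m in "$1" "$2" "$3"; do
        is_ipv4 "$m" || { echo "not an IPv4 address: $m" >&2; return 1; }
    done
    [[ -n $4 ]] || { echo "cluster domain is empty" >&2; return 1; }
}
```

Placed right after `job_start`, a call such as `validate_args "$@" || job_fail "bad arguments"` reports the problem through the same logging helpers the scripts already use.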

```bash
#!/bin/bash
anynowtime="date +'%Y-%m-%d %H:%M:%S'"
NOW="echo [\`$anynowtime\`][PID:$$]"

##### Call when the script starts: prints the current timestamp and PID.
function job_start
{
    echo "`eval $NOW` job_start"
}

##### Call in the success branch: prints the current timestamp and PID, then exits 0.
function job_success
{
    MSG="$*"
    echo "`eval $NOW` job_success:[$MSG]"
    exit 0
}

##### Call in the failure branch: prints the current timestamp and PID, then exits 1.
function job_fail
{
    MSG="$*"
    echo "`eval $NOW` job_fail:[$MSG]"
    exit 1
}

job_start

# Usage: $0 MASTER1_IP MASTER2_IP MASTER3_IP CLUSTER_DOMAIN
master1=$1
master2=$2
master3=$3
cluster_domain=$4

# The masters also run the node components (flannel, docker, kubelet, kube-proxy).
cd /root/
tar -zxvf master_install_scripts.tar.gz

# Unquoted EOF: ${cluster_domain} and ${masterN} are filled in now; \${...} is left for install_node.sh.
cat << EOF > install_node.sh
#!/bin/bash
K8S_API_URL="https://${cluster_domain}"
ETCD_URL="http://${master1}:2379,http://${master2}:2379,http://${master3}:2379"
POD_BASE_IMAGE="harbor-domain/library/pod-infrastructure:latest"

yum install -y wget telnet net-tools
# make the unpacked step scripts executable
chmod +x *.sh

./1.yum.sh
./2.flannel.sh "\${ETCD_URL}"
./3.docker.sh
./4.install-kube-proxy.sh
./5.kubelet.sh "\${K8S_API_URL}" "\${POD_BASE_IMAGE}"
./6.config-kube-proxy.sh
./7.firewalld.sh
./8.restart-service.sh
./9.nfs.sh
EOF

chmod +x install_node.sh
bash install_node.sh
bash register_k8s_inner.sh
bash 8.restart-service.sh
docker version
```
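`install_node.sh` is generated with an unquoted heredoc, so it mixes two kinds of references: `${cluster_domain}` and `${masterN}` are substituted while the file is written, whereas the escaped `\${ETCD_URL}`, `\${K8S_API_URL}` and `\${POD_BASE_IMAGE}` are written literally and resolved only when `install_node.sh` runs. A small self-contained demonstration; the domain value and the temporary file are examples, not values from the article:

```bash
#!/bin/bash
# Demonstrates expansion in an unquoted heredoc, mirroring how install_node.sh is written.
# cluster_domain is an example value; out_file is a throwaway temporary file.
cluster_domain="k8s.example.com"
out_file=$(mktemp)

cat << EOF > "$out_file"
K8S_API_URL="https://${cluster_domain}"
./5.kubelet.sh "\${K8S_API_URL}"
EOF

# Line 1 has the domain substituted; line 2 still contains the literal ${K8S_API_URL}.
cat "$out_file"
```

This prints `K8S_API_URL="https://k8s.example.com"` followed by `./5.kubelet.sh "${K8S_API_URL}"`. Quoting the delimiter (`<< 'EOF'`) would disable all expansion, which is why the generator escapes only the variables meant for later.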

```ini
# cat kube-apiserver.service
[Unit]
Description=Kubernetes API Server
Documentation=https://github.com/kubernetes/kubernetes

[Service]
EnvironmentFile=-/etc/kubernetes/kube-apiserver
ExecStart=/usr/bin/kube-apiserver ${KUBE_LOGTOSTDERR} \
    ${KUBE_LOG_LEVEL} \
    ${KUBE_LOG_DIR} \
    ${KUBE_OTHER_OPTIONS} \
    ${KUBE_ETCD_SERVERS} \
    ${KUBE_ETCD_CAFILE} \
    ${KUBE_ETCD_CERTFILE} \
    ${KUBE_ETCD_KEYFILE} \
    ${KUBE_API_ADDRESS} \
    ${KUBE_API_PORT} \
    ${NODE_PORT} \
    ${KUBE_ADVERTISE_ADDR} \
    ${KUBE_ALLOW_PRIV} \
    ${KUBE_SERVICE_ADDRESSES} \
    ${KUBE_ADMISSION_CONTROL} \
    ${KUBE_API_CLIENT_CA_FILE} \
    ${KUBE_API_TLS_CERT_FILE} \
    ${KUBE_API_TLS_PRIVATE_KEY_FILE} \
    ${KUBE_API_TOKEN_AUTH_ARGS} \
    ${KUBE_NEW_FEATURES} \
    ${KUBE_API_BASIC_AUTH_ARGS} \
    ${KUBE_APISERVER_COUNT}
Restart=on-failure
LimitCORE=infinity
LimitNOFILE=65536
LimitNPROC=65536

[Install]
WantedBy=multi-user.target
```
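Several variables referenced by this unit (`KUBE_ETCD_CAFILE`, `KUBE_ETCD_CERTFILE`, `KUBE_ETCD_KEYFILE`, `NODE_PORT`, `KUBE_API_CLIENT_CA_FILE`) are never assigned in the `/etc/kubernetes/kube-apiserver` file that the master script writes. A sketch of a check that lists such variables by comparing a unit file with its EnvironmentFile; `unset_unit_vars` is a hypothetical helper and only recognises assignments of the form `NAME=` at the start of a line:

```bash
#!/bin/bash
# Hypothetical helper: print variables referenced as ${NAME} or $NAME in UNIT_FILE
# that ENV_FILE never assigns.
unset_unit_vars() {
    local unit=$1 envfile=$2 var
    # Extract every $NAME / ${NAME} token, strip "$", "{" and "}", then de-duplicate.
    grep -oE '\$\{?[A-Za-z_][A-Za-z0-9_]*\}?' "$unit" | tr -d '${}' | sort -u |
    while read -r var; do
        grep -qE "^${var}=" "$envfile" || echo "$var"
    done
}

# Example on a master:
#   unset_unit_vars /usr/lib/systemd/system/kube-apiserver.service /etc/kubernetes/kube-apiserver
```

Run against the article's files, it should report exactly the five variables listed above; whether to define them or delete them from `ExecStart` is a matter of taste, but deleting keeps the unit and its EnvironmentFile in sync.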

```ini
# cat kube-controller-manager.service
[Unit]
Description=Kubernetes Controller Manager
Documentation=https://github.com/kubernetes/kubernetes

[Service]
EnvironmentFile=-/etc/kubernetes/kube-controller-manager
ExecStart=/usr/bin/kube-controller-manager ${KUBE_LOGTOSTDERR} \
    ${KUBE_LOG_LEVEL} \
    ${KUBE_CONTROLLER_OTHER_OPTIONS} \
    ${KUBE_LOG_DIR} \
    ${KUBE_MASTER} \
    ${KUBE_CONFIG} \
    ${KUBE_CONTROLLER_MANAGER_ROOT_CA_FILE} \
    ${KUBE_CONTROLLER_MANAGER_SERVICE_ACCOUNT_PRIVATE_KEY_FILE} \
    ${KUBE_NEW_FEATURES} \
    ${KUBE_LEADER_ELECT}
Restart=on-failure
LimitCORE=infinity
LimitNOFILE=65536
LimitNPROC=65536

[Install]
WantedBy=multi-user.target
```

```ini
# cat kube-scheduler.service
[Unit]
Description=Kubernetes Scheduler
Documentation=https://github.com/kubernetes/kubernetes

[Service]
EnvironmentFile=-/etc/kubernetes/kube-scheduler
ExecStart=/usr/bin/kube-scheduler ${KUBE_LOGTOSTDERR} \
    ${KUBE_LOG_LEVEL} \
    ${KUBE_LOG_DIR} \
    ${KUBE_MASTER} \
    ${KUBE_CONFIG} \
    ${KUBE_LEADER_ELECT} \
    ${KUBE_NEW_FEATURES} \
    $KUBE_SCHEDULER_ARGS
Restart=on-failure
LimitCORE=infinity
LimitNOFILE=65536
LimitNPROC=65536

[Install]
WantedBy=multi-user.target
```
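Both kube-controller-manager and kube-scheduler run with `--leader-elect=true` on all three masters, so only one instance of each is active at a time. Assuming the 1.10-era behaviour where the lock is an Endpoints object in `kube-system` whose `control-plane.alpha.kubernetes.io/leader` annotation holds a JSON record (verify this on your cluster), a sketch that extracts the current holder; `leader_of` is a hypothetical helper:

```bash
#!/bin/bash
# Hypothetical helper: print holderIdentity from a leader-election record read on stdin,
# e.g. {"holderIdentity":"k8s-10-0-0-1_<uuid>","leaseDurationSeconds":15,...}
leader_of() {
    sed -n 's/.*"holderIdentity":"\([^"]*\)".*/\1/p'
}

# Example on a master (kubectl reaches the local insecure port by default):
#   kubectl -n kube-system get endpoints kube-scheduler \
#     -o jsonpath='{.metadata.annotations.control-plane\.alpha\.kubernetes\.io/leader}' | leader_of
```

Since the host names are derived from IPs (`k8s-10-0-0-1`), the holder identity tells you directly which master is currently active.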

```yaml
# kubectl create namespace huangjie
# cat app_nginx.yaml
apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  name: app-nginx
  namespace: huangjie
spec:
  replicas: 5
  template:
    metadata:
      labels:
        app: app-nginx
    spec:
      containers:
      - name: app-nginx
        image: harbor-domain/hj-demo/nginx:latest
        ports:
        - containerPort: 80
        lifecycle:
          preStop:
            exec:
              command: ["sleep","5"]
```

```yaml
# cat app_nginx-svc.yaml
apiVersion: v1
kind: Service
metadata:
  name: app-nginx
  namespace: huangjie
spec:
  clusterIP: None
  ports:
  - name: web
    port: 8090
    protocol: TCP
    targetPort: 80
  selector:
    app: app-nginx
  sessionAffinity: None
  type: ClusterIP
status:
  loadBalancer: {}
```

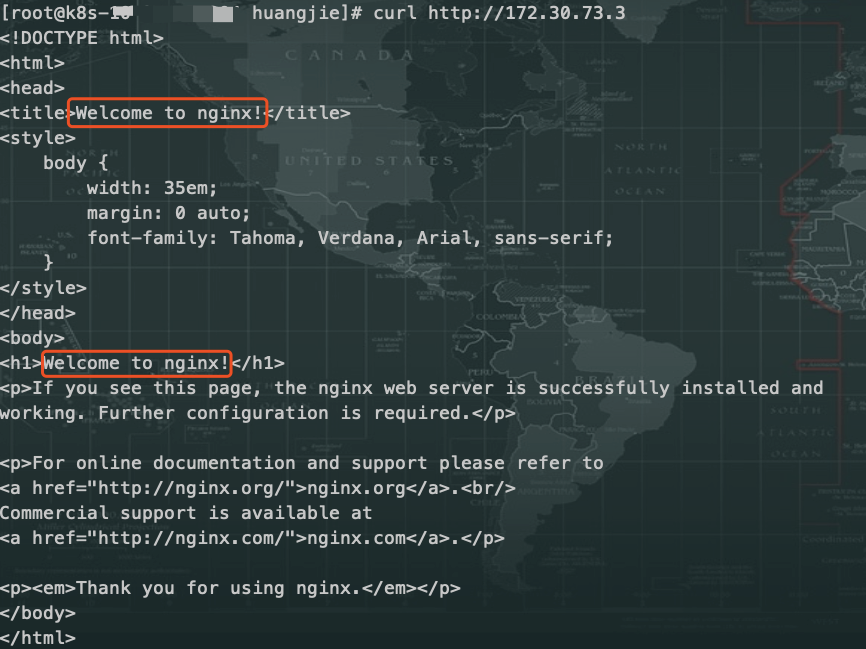

Testing access from inside the cluster:
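Because `app-nginx` is a headless Service (`clusterIP: None`), cluster DNS returns the pod IPs directly and kube-proxy does no port translation, so a request from inside the cluster has to use the `targetPort` (80) rather than the Service `port` (8090). A minimal sketch that picks the right URL, assuming a cluster DNS add-on is running (the article does not show one being installed); `service_test_url` is a hypothetical helper:

```bash
#!/bin/bash
# Hypothetical helper: URL for testing a Service from inside the cluster.
# Arguments: NAME NAMESPACE CLUSTER_IP PORT TARGET_PORT
service_test_url() {
    local name=$1 namespace=$2 cluster_ip=$3 port=$4 target_port=$5
    if [[ $cluster_ip == "None" ]]; then
        # Headless: DNS resolves to pod IPs, so connect to the container port directly.
        echo "http://${name}.${namespace}:${target_port}/"
    else
        # Regular ClusterIP Service: kube-proxy maps the Service port to the targetPort.
        echo "http://${name}.${namespace}:${port}/"
    fi
}

# Example, from any pod that has curl (the pod name is a placeholder):
#   kubectl -n huangjie exec <pod> -- curl -s "$(service_test_url app-nginx huangjie None 8090 80)"
```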
