Spark Source Code Study: withScope

以前的sparkUI中只有stage的执行情况,也就是说我们不可以看到上个RDD到下个RDD的具体信息。于是为了在

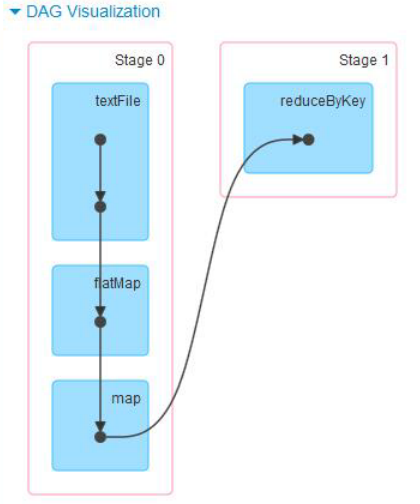

sparkUI中能展示更多的信息。所以把所有创建的RDD的方法都包裹起来,同时用RDDOperationScope 记录 RDD 的操作历史和关联,就能达成目标。下面就是一张WordCount的DAG visualization on SparkUI
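The wrapping idea can be illustrated with a minimal, Spark-free sketch. Everything in it (`ScopeSketch`, `ToyRDD`, the thread-local `current`, and the explicit scope names) is a hypothetical stand-in chosen for illustration, not Spark's actual implementation; it only shows how wrapping each RDD-creating method lets every new RDD remember the operation that produced it.

```scala
// Toy stand-ins for illustration only; none of these names are Spark classes.
object ScopeSketch {
  // Name of the scope currently active on this thread, if any.
  private val current = new ThreadLocal[Option[String]] {
    override def initialValue(): Option[String] = None
  }

  // A minimal "RDD" that only remembers the scope it was created in.
  final case class ToyRDD(scope: Option[String])

  // Run `body` with `name` as the active scope, then restore the previous scope.
  def withScope[T](name: String)(body: => T): T = {
    val previous = current.get()
    current.set(Some(name))
    try body finally current.set(previous)
  }

  // Every RDD-creating method wraps its body, so each new RDD is tagged.
  def textFile(path: String): ToyRDD = withScope("textFile") { ToyRDD(current.get()) }
  def map(parent: ToyRDD): ToyRDD = withScope("map") { ToyRDD(current.get()) }
}
```

With this sketch, `ScopeSketch.map(ScopeSketch.textFile("input.txt")).scope` evaluates to `Some("map")`, while the intermediate RDD returned by `textFile` carries `Some("textFile")`. A UI could group RDDs by these tags to draw one box per operation.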

The source of RDDOperationScope, which records these relationships, is as follows:

```scala
package org.apache.spark.rdd

import java.util.concurrent.atomic.AtomicInteger

import com.fasterxml.jackson.annotation.{JsonIgnore, JsonInclude, JsonPropertyOrder}
import com.fasterxml.jackson.annotation.JsonInclude.Include
import com.fasterxml.jackson.databind.ObjectMapper
import com.fasterxml.jackson.module.scala.DefaultScalaModule
import com.google.common.base.Objects

// Logging lives in org.apache.spark in Spark 1.x; in Spark 2.x and later it is
// org.apache.spark.internal.Logging.
import org.apache.spark.{Logging, SparkContext}

/**
 * A general, named code block representing an operation that instantiates RDDs.
 *
 * All RDDs instantiated in the corresponding code block will store a pointer to this object.
 * Examples include, but will not be limited to, existing RDD operations, such as textFile,
 * reduceByKey, and treeAggregate.
 *
 * An operation scope may be nested in other scopes. For instance, a SQL query may enclose
 * scopes associated with the public RDD APIs it uses under the hood.
 *
 * There is no particular relationship between an operation scope and a stage or a job.
 * A scope may live inside one stage (e.g. map) or span across multiple jobs (e.g. take).
 */
@JsonInclude(Include.NON_NULL)
@JsonPropertyOrder(Array("id", "name", "parent"))
private[spark] class RDDOperationScope(
    val name: String,
    val parent: Option[RDDOperationScope] = None,
    val id: String = RDDOperationScope.nextScopeId().toString) {

  def toJson: String = {
    RDDOperationScope.jsonMapper.writeValueAsString(this)
  }

  /**
   * Return a list of scopes that this scope is a part of, including this scope itself.
   * The result is ordered from the outermost scope (eldest ancestor) to this scope.
   */
  @JsonIgnore
  def getAllScopes: Seq[RDDOperationScope] = {
    parent.map(_.getAllScopes).getOrElse(Seq.empty) ++ Seq(this)
  }

  override def equals(other: Any): Boolean = {
    other match {
      case s: RDDOperationScope =>
        id == s.id && name == s.name && parent == s.parent
      case _ => false
    }
  }

  override def hashCode(): Int = Objects.hashCode(id, name, parent)

  override def toString: String = toJson
}

/**
 * A collection of utility methods to construct a hierarchical representation of RDD scopes.
 * An RDD scope tracks the series of operations that created a given RDD.
 */
private[spark] object RDDOperationScope extends Logging {
  private val jsonMapper = new ObjectMapper().registerModule(DefaultScalaModule)
  private val scopeCounter = new AtomicInteger()

  def fromJson(s: String): RDDOperationScope = {
    jsonMapper.readValue(s, classOf[RDDOperationScope])
  }

  /** Return a globally unique operation scope ID. */
  def nextScopeId(): Int = scopeCounter.getAndIncrement

  /**
   * Execute the given body such that all RDDs created in this body will have the same scope.
   * The name of the scope will be the first method name in the stack trace that is not the
   * same as this method's.
   *
   * Note: Return statements are NOT allowed in body.
   */
  private[spark] def withScope[T](
      sc: SparkContext,
      allowNesting: Boolean = false)(body: => T): T = {
    val ourMethodName = "withScope"
    val callerMethodName = Thread.currentThread.getStackTrace()
      .dropWhile(_.getMethodName != ourMethodName)
      .find(_.getMethodName != ourMethodName)
      .map(_.getMethodName)
      .getOrElse {
        // Log a warning just in case, but this should almost certainly never happen
        logWarning("No valid method name for this RDD operation scope!")
        "N/A"
      }
    withScope[T](sc, callerMethodName, allowNesting, ignoreParent = false)(body)
  }

  /**
   * Execute the given body such that all RDDs created in this body will have the same scope.
   *
   * If nesting is allowed, any subsequent calls to this method in the given body will instantiate
   * child scopes that are nested within our scope. Otherwise, these calls will take no effect.
   *
   * Additionally, the caller of this method may optionally ignore the configurations and scopes
   * set by the higher level caller. In this case, this method will ignore the parent caller's
   * intention to disallow nesting, and the new scope instantiated will not have a parent. This
   * is useful for scoping physical operations in Spark SQL, for instance.
   *
   * Note: Return statements are NOT allowed in body.
   */
  private[spark] def withScope[T](
      sc: SparkContext,
      name: String,
      allowNesting: Boolean,
      ignoreParent: Boolean)(body: => T): T = {
    // Save the old scope to restore it later
    val scopeKey = SparkContext.RDD_SCOPE_KEY
    val noOverrideKey = SparkContext.RDD_SCOPE_NO_OVERRIDE_KEY
    val oldScopeJson = sc.getLocalProperty(scopeKey)
    val oldScope = Option(oldScopeJson).map(RDDOperationScope.fromJson)
    val oldNoOverride = sc.getLocalProperty(noOverrideKey)
    try {
      if (ignoreParent) {
        // Ignore all parent settings and scopes and start afresh with our own root scope
        sc.setLocalProperty(scopeKey, new RDDOperationScope(name).toJson)
      } else if (sc.getLocalProperty(noOverrideKey) == null) {
        // Otherwise, set the scope only if the higher level caller allows us to do so
        sc.setLocalProperty(scopeKey, new RDDOperationScope(name, oldScope).toJson)
      }
      // Optionally disallow the child body to override our scope
      if (!allowNesting) {
        sc.setLocalProperty(noOverrideKey, "true")
        // Debug logging added by the author of this post while experimenting;
        // these lines are not part of the upstream Spark source.
        log.info("this is textFile1")
        log.info("this is textFile2")
        // println("this is textFile3")
        log.error("this is textFile4err")
        log.warn("this is textFile5WARN")
        log.debug("this is textFile6debug")
      }
      body
    } finally {
      // Remember to restore any state that was modified before exiting
      sc.setLocalProperty(scopeKey, oldScopeJson)
      sc.setLocalProperty(noOverrideKey, oldNoOverride)
    }
  }
}
```
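The save, set, and restore logic of the four-argument `withScope` is easier to follow in isolation. The sketch below is a simplified model under stated assumptions: `NestingSketch`, `ToyContext`, `ToyScope`, and `currentPath` are hypothetical names, and two plain fields stand in for the `RDD_SCOPE_KEY` and `RDD_SCOPE_NO_OVERRIDE_KEY` local properties. It has no JSON serialization and no stack-trace lookup, so it is not a substitute for the Spark code above.

```scala
// Simplified model of the scoping logic; all names here are hypothetical.
object NestingSketch {
  final case class ToyScope(name: String, parent: Option[ToyScope] = None) {
    // Outermost ancestor first, this scope last (same ordering as getAllScopes).
    def path: Seq[String] = parent.map(_.path).getOrElse(Seq.empty) :+ name
  }

  final class ToyContext {
    var scope: Option[ToyScope] = None // stands in for RDD_SCOPE_KEY
    var noOverride: Boolean = false    // stands in for RDD_SCOPE_NO_OVERRIDE_KEY
  }

  def withScope[T](
      ctx: ToyContext,
      name: String,
      allowNesting: Boolean = false,
      ignoreParent: Boolean = false)(body: => T): T = {
    // Save the old state so it can be restored afterwards.
    val oldScope = ctx.scope
    val oldNoOverride = ctx.noOverride
    try {
      if (ignoreParent) {
        // Start afresh with a root scope, ignoring the caller's settings.
        ctx.scope = Some(ToyScope(name))
      } else if (!ctx.noOverride) {
        // Nest under the current scope only if the caller allows it.
        ctx.scope = Some(ToyScope(name, oldScope))
      }
      // Unless nesting is allowed, inner calls must not override this scope.
      if (!allowNesting) ctx.noOverride = true
      body
    } finally {
      ctx.scope = oldScope
      ctx.noOverride = oldNoOverride
    }
  }

  // Scope path visible at the point of the call, outermost first.
  def currentPath(ctx: ToyContext): Seq[String] =
    ctx.scope.map(_.path).getOrElse(Seq.empty)
}
```

A short usage example shows the effect of `allowNesting`:

```scala
import NestingSketch._

val ctx = new ToyContext
// The outer scope allows nesting, so the inner call becomes a child scope.
val nested = withScope(ctx, "sql", allowNesting = true) {
  withScope(ctx, "map") { currentPath(ctx) }
}
// The outer scope disallows nesting, so the inner call takes no effect.
val flat = withScope(ctx, "reduceByKey") {
  withScope(ctx, "map") { currentPath(ctx) }
}
```

Here `nested` is `Seq("sql", "map")` and `flat` is `Seq("reduceByKey")`. After both calls the context is back to its initial state, because the `finally` block restores the saved values.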
