Hadoop Pseudo-Distributed Cluster Deployment

Environment preparation

[root@jiagoushi ~]# yum -y install lrzsz

Loaded plugins: fastestmirror

Repository 'saltstack-repo': Error parsing config: Error parsing "gpgkey = 'https\xef\xbc\x9a// repo.saltstack.com/yum/redhat/7/x86_64/latest/SALTSTACK-GPG-KEY.pub'": URL must be http, ftp, file or https not ""

Determining fastest mirrors

* base: centos.ustc.edu.cn

* extras: centos.ustc.edu.cn

* updates: centos.ustc.edu.cn

base | 3.6 kB 00:00:00

epel | 3.2 kB 00:00:00

extras | 3.4 kB 00:00:00

updates | 3.4 kB 00:00:00

(1/7): epel/x86_64/group_gz | 88 kB 00:00:05

(2/7): base/7/x86_64/group_gz | 166 kB 00:00:06

(3/7): epel/x86_64/updateinfo | 950 kB 00:00:06

(4/7): epel/x86_64/primary | 3.6 MB 00:00:01

(5/7): extras/7/x86_64/primary_db | 156 kB 00:00:06

(6/7): base/7/x86_64/primary_db | 6.0 MB 00:00:22

(7/7): updates/7/x86_64/primary_db | 1.3 MB 00:00:20

epel 12851/12851

Resolving Dependencies

--> Running transaction check

---> Package lrzsz.x86_64 0:0.12.20-36.el7 will be installed

--> Finished Dependency Resolution

Dependencies Resolved

====================================================================================================

Package Arch Version Repository Size

====================================================================================================

Installing:

lrzsz x86_64 0.12.20-36.el7 base 78 k

Transaction Summary

====================================================================================================

Install 1 Package

Total download size: 78 k

Installed size: 181 k

Downloading packages:

lrzsz-0.12.20-36.el7.x86_64.rpm | 78 kB 00:00:05

Running transaction check

Running transaction test

Transaction test succeeded

Running transaction

Installing : lrzsz-0.12.20-36.el7.x86_64 1/1

Verifying : lrzsz-0.12.20-36.el7.x86_64 1/1

Installed:

lrzsz.x86_64 0:0.12.20-36.el7

Complete!

[root@jiagoushi ~]# yum -y install vim wget

Loaded plugins: fastestmirror

Repository 'saltstack-repo': Error parsing config: Error parsing "gpgkey = 'https\xef\xbc\x9a// repo.saltstack.com/yum/redhat/7/x86_64/latest/SALTSTACK-GPG-KEY.pub'": URL must be http, ftp, file or https not ""

Loading mirror speeds from cached hostfile

* base: centos.ustc.edu.cn

* extras: centos.ustc.edu.cn

* updates: centos.ustc.edu.cn

Resolving Dependencies

--> Running transaction check

---> Package vim-enhanced.x86_64 2:7.4.160-4.el7 will be updated

---> Package vim-enhanced.x86_64 2:7.4.160-5.el7 will be an update

--> Processing Dependency: vim-common = 2:7.4.160-5.el7 for package: 2:vim-enhanced-7.4.160-5.el7.x86_64

---> Package wget.x86_64 0:1.14-15.el7_4.1 will be updated

---> Package wget.x86_64 0:1.14-18.el7 will be an update

--> Running transaction check

---> Package vim-common.x86_64 2:7.4.160-4.el7 will be updated

---> Package vim-common.x86_64 2:7.4.160-5.el7 will be an update

--> Finished Dependency Resolution

Dependencies Resolved

====================================================================================================

Package Arch Version Repository Size

====================================================================================================

Updating:

vim-enhanced x86_64 2:7.4.160-5.el7 base 1.0 M

wget x86_64 1.14-18.el7 base 547 k

Updating for dependencies:

vim-common x86_64 2:7.4.160-5.el7 base 5.9 M

Transaction Summary

====================================================================================================

Upgrade 2 Packages (+1 Dependent package)

Total download size: 7.5 M

Downloading packages:

Delta RPMs disabled because /usr/bin/applydeltarpm not installed.

(1/3): vim-common-7.4.160-5.el7.x86_64.rpm | 5.9 MB 00:00:07

(2/3): vim-enhanced-7.4.160-5.el7.x86_64.rpm | 1.0 MB 00:00:08

(3/3): wget-1.14-18.el7.x86_64.rpm | 547 kB 00:00:08

-----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------

Total 859 kB/s | 7.5 MB 00:00:08

Running transaction check

Running transaction test

Transaction test succeeded

Running transaction

Updating : 2:vim-common-7.4.160-5.el7.x86_64 1/6

Updating : 2:vim-enhanced-7.4.160-5.el7.x86_64 2/6

Updating : wget-1.14-18.el7.x86_64 3/6

Cleanup : 2:vim-enhanced-7.4.160-4.el7.x86_64 4/6

Cleanup : 2:vim-common-7.4.160-4.el7.x86_64 5/6

Cleanup : wget-1.14-15.el7_4.1.x86_64 6/6

Verifying : 2:vim-enhanced-7.4.160-5.el7.x86_64 1/6

Verifying : wget-1.14-18.el7.x86_64 2/6

Verifying : 2:vim-common-7.4.160-5.el7.x86_64 3/6

Verifying : wget-1.14-15.el7_4.1.x86_64 4/6

Verifying : 2:vim-enhanced-7.4.160-4.el7.x86_64 5/6

Verifying : 2:vim-common-7.4.160-4.el7.x86_64 6/6

Updated:

vim-enhanced.x86_64 2:7.4.160-5.el7 wget.x86_64 0:1.14-18.el7

Dependency Updated:

vim-common.x86_64 2:7.4.160-5.el7

Complete!
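The repeated "Repository 'saltstack-repo': Error parsing config" line comes from a gpgkey URL typed with a full-width colon "：" (the \xef\xbc\x9a bytes shown in the error) plus a stray space after "//". yum skips that repo and carries on, which is why the installs above still complete, but the warning appears on every run. Below is a minimal sketch for repairing such a file, assuming GNU sed (the CentOS 7 default); `fix_fullwidth_url` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Sketch: repair a yum .repo file whose URL was typed with a full-width colon
# (U+FF1A, UTF-8 bytes EF BC 9A) and a stray space, e.g. "https：// host/path".
# Assumes GNU sed for -i; the helper name is hypothetical.

fix_fullwidth_url() {
  local repo_file=$1
  local fw_colon
  fw_colon=$(printf '\357\274\232')   # U+FF1A FULLWIDTH COLON as raw bytes
  # LC_ALL=C makes sed match the multibyte sequence byte by byte;
  # "http(s)：// host" becomes "http(s)://host".
  LC_ALL=C sed -i "s#\(https\{0,1\}\)${fw_colon}//[[:space:]]*#\1://#g" "$repo_file"
}
```

Usage: locate the file with `grep -l saltstack-repo /etc/yum.repos.d/*.repo`, pass it to `fix_fullwidth_url`, then run `yum makecache` to confirm the warning is gone.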

[root@jiagoushi ~]# cd /usr/local/

[root@jiagoushi local]# ls

bin etc games include lib lib64 libexec sbin share src

[root@jiagoushi local]# ls

bin etc games hadoop-2.6.4.tar.gz include jdk-7u80-linux-x64.tar.gz lib lib64 libexec sbin share src

Install the JDK and set environment variables

[root@jiagoushi local]# tar xf jdk-7u80-linux-x64.tar.gz

[root@jiagoushi local]# ln -s /usr/local/jdk1.7.0_80 /usr/local/jdk

[root@jiagoushi local]# vim /etc/profile.d/jdk.sh

export JAVA_HOME=/usr/local/jdk

export PATH=$JAVA_HOME/bin:$PATH

[root@jiagoushi local]# . /etc/profile.d/jdk.sh

[root@jiagoushi local]# java -version

java version "1.7.0_80"

Java(TM) SE Runtime Environment (build 1.7.0_80-b15)

Java HotSpot(TM) 64-Bit Server VM (build 24.80-b11, mixed mode)
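The two lines typed into /etc/profile.d/jdk.sh with vim can also be written non-interactively, which is handy when the same setup is repeated on several machines. This is a sketch, assuming the /usr/local/jdk symlink created above. `write_jdk_profile` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Sketch: create the JDK profile script without opening an editor.
# write_jdk_profile is hypothetical; defaults match the symlink made above.

write_jdk_profile() {
  local target=${1:-/etc/profile.d/jdk.sh}
  local jdk_dir=${2:-/usr/local/jdk}
  # Unquoted EOF: ${jdk_dir} expands now, while \$ keeps $JAVA_HOME and
  # $PATH literal so they are evaluated each time a login shell starts.
  cat > "$target" <<EOF
export JAVA_HOME=${jdk_dir}
export PATH=\$JAVA_HOME/bin:\$PATH
EOF
}
```

Usage: `write_jdk_profile && . /etc/profile.d/jdk.sh && java -version` should print the same 1.7.0_80 banner as above.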

Install Hadoop

[root@jiagoushi local]# mkdir hadoop

[root@jiagoushi local]# tar xf hadoop-2.6.4.tar.gz -C hadoop

[root@jiagoushi local]# ssh-keygen -t dsa -P '' -f ~/.ssh/id_dsa

Generating public/private dsa key pair.

Created directory '/root/.ssh'.

Your identification has been saved in /root/.ssh/id_dsa.

Your public key has been saved in /root/.ssh/id_dsa.pub.

The key fingerprint is:

SHA256:IR6QuB5NAjRUx/6kEwDGEInPdGzxT5LLkV6UC1Ru3og root@jiagoushi

The key's randomart image is:

+---[DSA 1024]----+

|XXo++=..o. |

|+.=.B+.=. |

| + Bo O.*. |

| = .* #.+ |

| . . E S . |

| . o . |

| . |

| |

| |

+----[SHA256]-----+

[root@jiagoushi local]# cat ~/.ssh/id_dsa.pub >> ~/.ssh/authorized_keys

[root@jiagoushi local]# ssh 192.168.10.5

Last login: Mon Jan 7 12:12:19 2019 from jiagoushi
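The Hadoop start scripts log in to each node over SSH, so a passwordless login to this machine is required. The DSA key used above worked here. However, OpenSSH 7.0 and later disable DSA (ssh-dss) keys by default, so an RSA key is the safer choice on newer systems. sshd's StrictModes check also ignores an authorized_keys file that is writable by group or others. Below is a sketch, assuming an RSA key and the default ~/.ssh location. `make_key` and `authorize_key` are hypothetical helpers.

```shell
#!/usr/bin/env bash
# Sketch: passwordless SSH to the local node for the Hadoop start scripts.
# Assumption: RSA instead of DSA, since OpenSSH >= 7.0 rejects DSA by default.

make_key() {
  local key=${1:-$HOME/.ssh/id_rsa}
  mkdir -p "$(dirname "$key")"
  # -N '' = empty passphrase; keep an existing key instead of overwriting it
  [ -f "$key" ] || ssh-keygen -t rsa -b 2048 -N '' -f "$key"
}

authorize_key() {
  local pub=$1 ssh_dir=${2:-$HOME/.ssh}
  local auth="$ssh_dir/authorized_keys"
  mkdir -p "$ssh_dir"
  chmod 700 "$ssh_dir"
  touch "$auth"
  # Append the public key only once, so re-running does not duplicate it
  grep -qxF "$(cat "$pub")" "$auth" || cat "$pub" >> "$auth"
  # With StrictModes, sshd ignores authorized_keys writable by group/others
  chmod 600 "$auth"
}
```

Usage: `make_key && authorize_key ~/.ssh/id_rsa.pub`, then log in once to each name the scripts use (master, localhost, 0.0.0.0) to accept the host keys.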

[root@jiagoushi ~]# hostnamectl set-hostname hadoop

[root@jiagoushi ~]# exec bash

[root@hadoop ~]# hostnamectl set-hostname Master && exec bash

[root@master ~]# vim /etc/hosts

#127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

#::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.10.5 master

[root@master ~]# mkdir /usr/local/hadoop/tmp

[root@master ~]# mkdir /usr/local/hadoop/hdfs/name -p

[root@master ~]# mkdir /usr/local/hadoop/hdfs/data
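The hosts entry and the three directories above can be scripted so that re-running them is harmless. The name in fs.defaultFS must resolve to this machine, and the directories must match hadoop.tmp.dir, dfs.namenode.name.dir and dfs.datanode.data.dir in the site files. This is a sketch only. `ensure_hosts_entry` and `make_hadoop_dirs` are hypothetical helper names.

```shell
#!/usr/bin/env bash
# Sketch: idempotent host mapping and HDFS directory creation.
# Helper names are hypothetical; defaults match the paths used in this guide.

ensure_hosts_entry() {
  local ip=$1 name=$2 hosts=${3:-/etc/hosts}
  # Append "IP NAME" only if NAME is not already mapped on an uncommented line
  if ! awk -v n="$name" '$1 !~ /^#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
                         END { exit !found }' "$hosts"; then
    printf '%s %s\n' "$ip" "$name" >> "$hosts"
  fi
}

make_hadoop_dirs() {
  local base=${1:-/usr/local/hadoop}
  # hadoop.tmp.dir, dfs.namenode.name.dir, dfs.datanode.data.dir
  mkdir -p "$base/tmp" "$base/hdfs/name" "$base/hdfs/data"
}
```

Usage: `ensure_hosts_entry 192.168.10.5 master && make_hadoop_dirs`.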

[root@master ~]# vim ~/.bash_profile

# .bash_profile

# Get the aliases and functions

if [ -f ~/.bashrc ]; then

. ~/.bashrc

fi

# User specific environment and startup programs

PATH=$PATH:$HOME/bin

export PATH

export HADOOP_HOME=/usr/local/hadoop/hadoop-2.6.4

export PATH=$PATH:$HADOOP_HOME/bin
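The profile lines can also be appended by a script that checks for an existing HADOOP_HOME first. One change is an assumption not made in the original: it also adds $HADOOP_HOME/sbin to PATH, so start-dfs.sh and start-yarn.sh can be run from any directory. `add_hadoop_env` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Sketch: append the Hadoop variables to a profile file exactly once.
# Assumption: sbin is added to PATH as well (the original adds only bin).

add_hadoop_env() {
  local profile=${1:-$HOME/.bash_profile}
  local hadoop_home=${2:-/usr/local/hadoop/hadoop-2.6.4}
  # Already configured: leave the file alone
  grep -q '^export HADOOP_HOME=' "$profile" 2>/dev/null && return 0
  # ${hadoop_home} expands now; \$ keeps the rest for login time
  cat >> "$profile" <<EOF
export HADOOP_HOME=${hadoop_home}
export PATH=\$PATH:\$HADOOP_HOME/bin:\$HADOOP_HOME/sbin
EOF
}
```

Usage: `add_hadoop_env && . ~/.bash_profile && hadoop version`.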

Configure and start Hadoop

[root@master ~]# cd /usr/local/hadoop/hadoop-2.6.4/

[root@master hadoop-2.6.4]# cd etc/

[root@master etc]# ls

hadoop

[root@master etc]# cd hadoop/

[root@master hadoop]# ls

capacity-scheduler.xml httpfs-env.sh mapred-env.sh

configuration.xsl httpfs-log4j.properties mapred-queues.xml.template

container-executor.cfg httpfs-signature.secret mapred-site.xml.template

core-site.xml httpfs-site.xml slaves

hadoop-env.cmd kms-acls.xml ssl-client.xml.example

hadoop-env.sh kms-env.sh ssl-server.xml.example

hadoop-metrics2.properties kms-log4j.properties yarn-env.cmd

hadoop-metrics.properties kms-site.xml yarn-env.sh

hadoop-policy.xml log4j.properties yarn-site.xml

hdfs-site.xml mapred-env.cmd

[root@master hadoop]# vim hadoop-env.sh

# Set Hadoop-specific environment variables here.

# The only required environment variable is JAVA_HOME. All others are

# optional. When running a distributed configuration it is best to

# set JAVA_HOME in this file, so that it is correctly defined on

# remote nodes.

# The java implementation to use.

#export JAVA_HOME=${JAVA_HOME}

export JAVA_HOME=/usr/local/jdk    # set the Java path explicitly

[root@master hadoop]# vim yarn-env.sh

# User for YARN daemons

export HADOOP_YARN_USER=${HADOOP_YARN_USER:-yarn}

# resolve links - $0 may be a softlink

export YARN_CONF_DIR="${YARN_CONF_DIR:-$HADOOP_YARN_HOME/conf}"

# some Java parameters

# export JAVA_HOME=/home/y/libexec/jdk1.6.0/

export JAVA_HOME=/usr/local/jdk
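The same JAVA_HOME line goes into both hadoop-env.sh and yarn-env.sh. As the hadoop-env.sh comment says, defining it in the file, rather than relying on the login shell, is what makes it visible to daemons started over SSH. Below is a sketch of the edit without an editor, assuming GNU sed. `set_java_home` is a hypothetical helper. It replaces the active assignment where one exists, otherwise it turns the first commented template line into an active one. The second case matters for yarn-env.sh, because that file checks JAVA_HOME a few lines later and appending at the end would come too late.

```shell
#!/usr/bin/env bash
# Sketch: set JAVA_HOME in hadoop-env.sh / yarn-env.sh non-interactively.
# Assumes GNU sed (-i and the 0,/re/ address); the helper is hypothetical.

set_java_home() {
  local file=$1 jdk=${2:-/usr/local/jdk}
  if grep -q '^export JAVA_HOME=' "$file"; then
    # Active line exists (stock hadoop-env.sh: export JAVA_HOME=${JAVA_HOME})
    sed -i "s|^export JAVA_HOME=.*|export JAVA_HOME=${jdk}|" "$file"
  elif grep -q '^# *export JAVA_HOME=' "$file"; then
    # Only a commented template (stock yarn-env.sh): activate the first one in place
    sed -i "0,/^# *export JAVA_HOME=/s|^# *export JAVA_HOME=.*|export JAVA_HOME=${jdk}|" "$file"
  else
    # Fallback for files with neither form
    printf 'export JAVA_HOME=%s\n' "$jdk" >> "$file"
  fi
}
```

Usage, from etc/hadoop: `for f in hadoop-env.sh yarn-env.sh; do set_java_home "$f"; done`.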

[root@master hadoop]# vim core-site.xml

<?xml version="1.0" encoding="UTF-8"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<!-- Put site-specific property overrides in this file. -->

<configuration>

<property>

<name>fs.defaultFS</name>

<value>hdfs://Master:9000</value>

</property>

<property>

<name>hadoop.tmp.dir</name>

<value>/usr/local/hadoop/tmp</value>

</property>

</configuration>

[root@master hadoop]# cp -a mapred-site.xml.template mapred-site.xml

[root@master hadoop]# vim hdfs-site.xml

<!-- Put site-specific property overrides in this file. -->

<configuration>

<property>

<name>dfs.namenode.name.dir</name>

<value>file:/usr/local/hadoop/hdfs/name</value>

</property>

<property>

<name>dfs.datanode.data.dir</name>

<value>file:/usr/local/hadoop/hdfs/data</value>

</property>

<property>

<name>dfs.replication</name>

<value>1</value>

</property>

</configuration>

[root@master hadoop]# vim mapred-site.xml

<!-- Put site-specific property overrides in this file. -->

<configuration>

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

</configuration>
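Each setting in a Hadoop site file has to sit inside its own <property> element, and the key is spelled mapreduce.framework.name. Hadoop 2.x logs "bad conf file: element not <property>" for anything else directly under <configuration> and skips it, which would leave MapReduce on its default local runner instead of YARN. Below is a sketch that writes the file in one step, assuming the conf directory of this install. `write_mapred_site` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Sketch: write mapred-site.xml with the setting wrapped in <property>.
# The default conf directory matches this install; the helper is hypothetical.

write_mapred_site() {
  local conf_dir=${1:-/usr/local/hadoop/hadoop-2.6.4/etc/hadoop}
  # Quoted 'EOF': the XML is written verbatim, with no shell expansion
  cat > "$conf_dir/mapred-site.xml" <<'EOF'
<?xml version="1.0"?>
<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>
<configuration>
  <property>
    <!-- run MapReduce jobs on YARN instead of the default local runner -->
    <name>mapreduce.framework.name</name>
    <value>yarn</value>
  </property>
</configuration>
EOF
}
```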

[root@master hadoop]# vim yarn-site.xml

<configuration>

<!-- Site specific YARN configuration properties -->

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

</configuration>
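Before formatting, it helps to sanity-check the four site files. A missing </configuration> or a bare <name>/<value> outside <property> is easy to miss when editing by hand. The sketch below is a rough text check, not an XML parser. It follows the configuration/property/name/value tags in document order and would be fooled by such tags inside comments. Where libxml2 is installed, `xmllint --noout FILE` gives a stricter well-formedness check. `check_site_xml` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Sketch: rough structural check of a Hadoop *-site.xml file.
# Not a real XML parser; only tracks the four tag types below, in order.

check_site_xml() {
  local file=$1
  # grep -o prints every matching tag on its own line, in document order
  grep -oE '</?(configuration|property|name|value)>' "$file" | awk -v f="$file" '
    $0 == "<configuration>"  { n_open++ }
    $0 == "</configuration>" { n_close++ }
    $0 == "<property>"       { inprop = 1 }
    $0 == "</property>"      { inprop = 0 }
    ($0 == "<name>" || $0 == "<value>") && !inprop { bad++ }
    END {
      if (n_open != 1 || n_close != 1) {
        print f ": <configuration> not opened and closed exactly once"; err = 1
      }
      if (bad > 0) { print f ": " bad " <name>/<value> tag(s) outside <property>"; err = 1 }
      exit err
    }'
}
```

Usage, from etc/hadoop: `for f in core-site.xml hdfs-site.xml mapred-site.xml yarn-site.xml; do check_site_xml "$f"; done`.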

[root@master hadoop]# cd ../../bin/

[root@master bin]# ./hdfs namenode -format    # format the HDFS file system

19/01/07 13:23:56 INFO namenode.NameNode: STARTUP_MSG:

/************************************************************

STARTUP_MSG: Starting NameNode

STARTUP_MSG: host = master/192.168.10.5

STARTUP_MSG: args = [-format]

STARTUP_MSG: version = 2.6.4

STARTUP_MSG: classpath = /usr/local/hadoop/hadoop-2.6.4/etc/hadoop:/usr/local/hadoop/hadoop-2.6.4/share/hadoop/common/lib/jaxb-impl-2.2.3-1.jar:...(remaining jars under share/hadoop/common, hdfs, yarn and mapreduce omitted)...:/contrib/capacity-scheduler/*.jar

STARTUP_MSG: build = Unknown -r Unknown; compiled by 'hadoop' on 2016-03-08T03:44Z

STARTUP_MSG: java = 1.7.0_80

************************************************************/

19/01/07 13:23:56 INFO namenode.NameNode: registered UNIX signal handlers for [TERM, HUP, INT]

19/01/07 13:23:56 INFO namenode.NameNode: createNameNode [-format]

19/01/07 13:23:58 WARN conf.Configuration: bad conf file: element not <property>

19/01/07 13:23:58 WARN conf.Configuration: bad conf file: element not <property>

19/01/07 13:23:58 WARN conf.Configuration: bad conf file: element not <property>

19/01/07 13:23:58 WARN conf.Configuration: bad conf file: element not <property>

Formatting using clusterid: CID-4b99d05c-bbea-448b-a7dc-4e7a839b4cc3

19/01/07 13:23:58 INFO namenode.FSNamesystem: No KeyProvider found.

19/01/07 13:23:59 INFO namenode.FSNamesystem: fsLock is fair:true

19/01/07 13:23:59 INFO blockmanagement.DatanodeManager: dfs.block.invalidate.limit=1000

19/01/07 13:23:59 INFO blockmanagement.DatanodeManager: dfs.namenode.datanode.registration.ip-hostname-check=true

19/01/07 13:23:59 INFO blockmanagement.BlockManager: dfs.namenode.startup.delay.block.deletion.sec is set to 000:00:00:00.000

19/01/07 13:23:59 INFO blockmanagement.BlockManager: The block deletion will start around 2019 Jan 07 13:23:59

19/01/07 13:23:59 INFO util.GSet: Computing capacity for map BlocksMap

19/01/07 13:23:59 INFO util.GSet: VM type = 64-bit

19/01/07 13:23:59 INFO util.GSet: 2.0% max memory 889 MB = 17.8 MB

19/01/07 13:23:59 INFO util.GSet: capacity = 2^21 = 2097152 entries

19/01/07 13:23:59 INFO blockmanagement.BlockManager: dfs.block.access.token.enable=false

19/01/07 13:23:59 INFO blockmanagement.BlockManager: defaultReplication = 1

19/01/07 13:23:59 INFO blockmanagement.BlockManager: maxReplication = 512

19/01/07 13:23:59 INFO blockmanagement.BlockManager: minReplication = 1

19/01/07 13:23:59 INFO blockmanagement.BlockManager: maxReplicationStreams = 2

19/01/07 13:23:59 INFO blockmanagement.BlockManager: replicationRecheckInterval = 3000

19/01/07 13:23:59 INFO blockmanagement.BlockManager: encryptDataTransfer = false

19/01/07 13:23:59 INFO blockmanagement.BlockManager: maxNumBlocksToLog = 1000

19/01/07 13:23:59 INFO namenode.FSNamesystem: fsOwner = root (auth:SIMPLE)

19/01/07 13:23:59 INFO namenode.FSNamesystem: supergroup = supergroup

19/01/07 13:23:59 INFO namenode.FSNamesystem: isPermissionEnabled = true

19/01/07 13:23:59 INFO namenode.FSNamesystem: HA Enabled: false

19/01/07 13:23:59 INFO namenode.FSNamesystem: Append Enabled: true

19/01/07 13:23:59 INFO util.GSet: Computing capacity for map INodeMap

19/01/07 13:23:59 INFO util.GSet: VM type = 64-bit

19/01/07 13:23:59 INFO util.GSet: 1.0% max memory 889 MB = 8.9 MB

19/01/07 13:23:59 INFO util.GSet: capacity = 2^20 = 1048576 entries

19/01/07 13:23:59 INFO namenode.NameNode: Caching file names occuring more than 10 times

19/01/07 13:23:59 INFO util.GSet: Computing capacity for map cachedBlocks

19/01/07 13:23:59 INFO util.GSet: VM type = 64-bit

19/01/07 13:23:59 INFO util.GSet: 0.25% max memory 889 MB = 2.2 MB

19/01/07 13:23:59 INFO util.GSet: capacity = 2^18 = 262144 entries

19/01/07 13:23:59 INFO namenode.FSNamesystem: dfs.namenode.safemode.threshold-pct = 0.9990000128746033

19/01/07 13:23:59 INFO namenode.FSNamesystem: dfs.namenode.safemode.min.datanodes = 0

19/01/07 13:23:59 INFO namenode.FSNamesystem: dfs.namenode.safemode.extension = 30000

19/01/07 13:23:59 INFO namenode.FSNamesystem: Retry cache on namenode is enabled

19/01/07 13:23:59 INFO namenode.FSNamesystem: Retry cache will use 0.03 of total heap and retry cache entry expiry time is 600000 millis

19/01/07 13:23:59 INFO util.GSet: Computing capacity for map NameNodeRetryCache

19/01/07 13:23:59 INFO util.GSet: VM type = 64-bit

19/01/07 13:23:59 INFO util.GSet: 0.029999999329447746% max memory 889 MB = 273.1 KB

19/01/07 13:23:59 INFO util.GSet: capacity = 2^15 = 32768 entries

19/01/07 13:23:59 INFO namenode.NNConf: ACLs enabled? false

19/01/07 13:23:59 INFO namenode.NNConf: XAttrs enabled? true

19/01/07 13:23:59 INFO namenode.NNConf: Maximum size of an xattr: 16384

19/01/07 13:23:59 WARN conf.Configuration: bad conf file: element not <property>

19/01/07 13:23:59 WARN conf.Configuration: bad conf file: element not <property>

19/01/07 13:23:59 INFO namenode.FSImage: Allocated new BlockPoolId: BP-587833549-192.168.10.5-1546838639745

19/01/07 13:23:59 INFO common.Storage: Storage directory /usr/local/hadoop/hdfs/name has been successfully formatted.

19/01/07 13:24:00 INFO namenode.NNStorageRetentionManager: Going to retain 1 images with txid >= 0

19/01/07 13:24:00 INFO util.ExitUtil: Exiting with status 0

19/01/07 13:24:00 INFO namenode.NameNode: SHUTDOWN_MSG:

/************************************************************

SHUTDOWN_MSG: Shutting down NameNode at master/192.168.10.5
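After a successful format, the name directory contains `current/VERSION`. This is a small Java-properties-style file, and its `blockpoolID` should match the `BlockPoolId` allocated in the log above. The sketch below reads it. It assumes the name directory is `/usr/local/hadoop/hdfs/name`, taken from the "successfully formatted" log line, and it uses a simplified parser that ignores properties-file escaping. The same file is the first place to look if a DataNode later refuses to start after the NameNode has been re-formatted, because every format assigns a new `clusterID`.

```python
from pathlib import Path

# Assumption: name directory taken from the "Storage directory ... successfully formatted" line.
NAME_DIR = Path("/usr/local/hadoop/hdfs/name")


def parse_version(text):
    """Parse a Hadoop storage VERSION file: key=value lines, '#' lines are comments.

    Simplified: Java properties escaping and line continuations are not handled.
    """
    props = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue  # skip blanks and the timestamp comment at the top
        key, sep, value = line.partition("=")
        if sep:
            props[key.strip()] = value.strip()
    return props


if __name__ == "__main__":
    props = parse_version((NAME_DIR / "current" / "VERSION").read_text())
    for key in ("storageType", "clusterID", "namespaceID", "blockpoolID"):
        print(f"{key} = {props.get(key)}")
```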

[root@master bin]# cd ../sbin/

[root@master sbin]# ./start-all.sh

This script is Deprecated. Instead use start-dfs.sh and start-yarn.sh

19/01/07 13:32:29 WARN conf.Configuration: bad conf file: element not <property>

19/01/07 13:32:29 WARN conf.Configuration: bad conf file: element not <property>

19/01/07 13:32:29 WARN conf.Configuration: bad conf file: element not <property>

19/01/07 13:32:29 WARN conf.Configuration: bad conf file: element not <property>

Starting namenodes on [Master]

Master: namenode running as process 15561. Stop it first.

localhost: datanode running as process 15675. Stop it first.

Starting secondary namenodes [0.0.0.0]

0.0.0.0: secondarynamenode running as process 15823. Stop it first.

19/01/07 13:32:55 WARN conf.Configuration: bad conf file: element not <property>

19/01/07 13:32:55 WARN conf.Configuration: bad conf file: element not <property>

19/01/07 13:32:55 WARN conf.Configuration: bad conf file: element not <property>

19/01/07 13:32:55 WARN conf.Configuration: bad conf file: element not <property>

starting yarn daemons

starting resourcemanager, logging to /usr/local/hadoop/hadoop-2.6.4/logs/yarn-root-resourcemanager-jiagoushi.out

localhost: starting nodemanager, logging to /usr/local/hadoop/hadoop-2.6.4/logs/yarn-root-nodemanager-master.out
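The repeated `WARN conf.Configuration: bad conf file: element not <property>` lines come from Hadoop's configuration loader. It emits this warning when a child of `<configuration>` in one of the `*-site.xml` files is not a `<property>` element, which is often a misspelled tag. The warning is not fatal here: the format exited with status 0 and the daemons started. It is still worth fixing. The sketch below locates the offending element and roughly mirrors that check. It assumes the config files are in `/usr/local/hadoop/hadoop-2.6.4/etc/hadoop`, inferred from the log paths and the standard Hadoop 2.x layout; pass another directory as the first argument if yours differs.

```python
import sys
import xml.etree.ElementTree as ET
from pathlib import Path

# Assumption: HADOOP_HOME is /usr/local/hadoop/hadoop-2.6.4 (inferred from the log paths),
# so the *-site.xml files live in etc/hadoop below it. Pass another directory as argv[1].
CONF_DIR = Path("/usr/local/hadoop/hadoop-2.6.4/etc/hadoop")


def find_bad_elements(parent):
    """Return tags of child elements that are neither <property> nor a nested <configuration>."""
    bad = []
    for child in parent:
        if not isinstance(child.tag, str):
            continue  # comments / processing instructions, if the parser kept any
        if child.tag == "configuration":
            bad.extend(find_bad_elements(child))  # nested blocks are scanned recursively
        elif child.tag != "property":
            bad.append(child.tag)
    return bad


def check_root(root):
    """Return human-readable problems for an already-parsed config document root."""
    if root.tag != "configuration":
        return [f"top-level element is <{root.tag}>, expected <configuration>"]
    return [f"unexpected element <{tag}>, expected <property>" for tag in find_bad_elements(root)]


def check_file(path):
    """Parse one *-site.xml file and return its problems (empty list if it looks fine)."""
    try:
        return check_root(ET.parse(path).getroot())
    except ET.ParseError as exc:
        return [f"XML parse error: {exc}"]


if __name__ == "__main__":
    conf_dir = Path(sys.argv[1]) if len(sys.argv) > 1 else CONF_DIR
    for xml_file in sorted(conf_dir.glob("*-site.xml")):
        for problem in check_file(xml_file):
            print(f"{xml_file.name}: {problem}")
```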

[root@master sbin]# jps

15675 DataNode

17407 Jps

17247 NodeManager

16973 ResourceManager

15823 SecondaryNameNode

15561 NameNode
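A pseudo-distributed Hadoop 2.x node should show five daemons in `jps`, matching the output above. HDFS runs NameNode, DataNode and SecondaryNameNode, and YARN runs ResourceManager and NodeManager. The sketch below runs `jps` and reports which of these are missing. It assumes the JDK's `jps` is on `PATH`.

```python
import shutil
import subprocess

# Daemons expected on a single-node (pseudo-distributed) Hadoop 2.x setup.
EXPECTED = {"NameNode", "DataNode", "SecondaryNameNode", "ResourceManager", "NodeManager"}


def running_daemons(jps_output):
    """Parse `jps` output ("<pid> <name>" per line) into a {name: pid} dict."""
    daemons = {}
    for line in jps_output.splitlines():
        parts = line.split()
        if len(parts) == 2 and parts[0].isdigit():
            daemons[parts[1]] = int(parts[0])
    return daemons


def missing_daemons(jps_output):
    """Return the expected daemons that do not appear in the `jps` output, sorted by name."""
    return sorted(EXPECTED - set(running_daemons(jps_output)))


if __name__ == "__main__":
    if shutil.which("jps") is None:
        raise SystemExit("jps not found on PATH (it ships with the JDK)")
    out = subprocess.run(["jps"], capture_output=True, text=True, check=True).stdout
    missing = missing_daemons(out)
    print("all daemons running" if not missing else "missing: " + ", ".join(missing))
```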

[root@master sbin]# ss -lntp | grep java

LISTEN 0 128 *:50020 *:* users:(("java",pid=15675,fd=202))

LISTEN 0 128 192.168.10.5:9000 *:* users:(("java",pid=15561,fd=202))

LISTEN 0 128 *:50090 *:* users:(("java",pid=15823,fd=196))

LISTEN 0 128 *:50070 *:* users:(("java",pid=15561,fd=189))

LISTEN 0 50 *:50010 *:* users:(("java",pid=15675,fd=189))

LISTEN 0 128 *:50075 *:* users:(("java",pid=15675,fd=193))

LISTEN 0 128 :::8030 :::* users:(("java",pid=16973,fd=205))

LISTEN 0 128 :::8031 :::* users:(("java",pid=16973,fd=194))

LISTEN 0 128 :::8032 :::* users:(("java",pid=16973,fd=215))

LISTEN 0 128 :::8033 :::* users:(("java",pid=16973,fd=229))

LISTEN 0 128 :::8040 :::* users:(("java",pid=17247,fd=232))

LISTEN 0 128 :::8042 :::* users:(("java",pid=17247,fd=243))

LISTEN 0 128 :::35564 :::* users:(("java",pid=17247,fd=221))

LISTEN 0 128 :::8088 :::* users:(("java",pid=16973,fd=225))

LISTEN 0 50 :::13562 :::* users:(("java",pid=17247,fd=242))
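The listening ports above match the Hadoop 2.x defaults, with one exception. Port 9000 is the NameNode RPC port set through `fs.defaultFS` in this deployment; the NameNode's own default RPC port is 8020. The high port 35564 is most likely the NodeManager's container-manager port, which is randomly assigned by default. The sketch below probes the fixed ports over TCP. The host `192.168.10.5` comes from the output above. The port-to-service table summarizes the Hadoop 2.x default configuration and is not listed in the original post.

```python
import socket

HOST = "192.168.10.5"  # NameNode/ResourceManager host from the ss output above

# Hadoop 2.x ports seen in the ss output; 9000 comes from this deployment's fs.defaultFS.
PORTS = {
    9000: "NameNode RPC (fs.defaultFS)",
    50070: "NameNode web UI",
    50090: "SecondaryNameNode web UI",
    50010: "DataNode data transfer",
    50020: "DataNode IPC",
    50075: "DataNode web UI",
    8030: "ResourceManager scheduler",
    8031: "ResourceManager resource tracker",
    8032: "ResourceManager client RPC",
    8033: "ResourceManager admin",
    8088: "ResourceManager web UI",
    8040: "NodeManager localizer",
    8042: "NodeManager web UI",
    13562: "MapReduce shuffle handler",
}


def port_open(host, port, timeout=2.0):
    """Return True if a TCP connection to host:port succeeds within timeout seconds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, unreachable or timed out
        return False


def check_ports(host, ports):
    """Return {port: is_open} for each port in the given iterable."""
    return {port: port_open(host, port) for port in ports}


if __name__ == "__main__":
    for port, ok in sorted(check_ports(HOST, PORTS).items()):
        print(f"{port:>5}  {'open  ' if ok else 'CLOSED'}  {PORTS[port]}")
```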

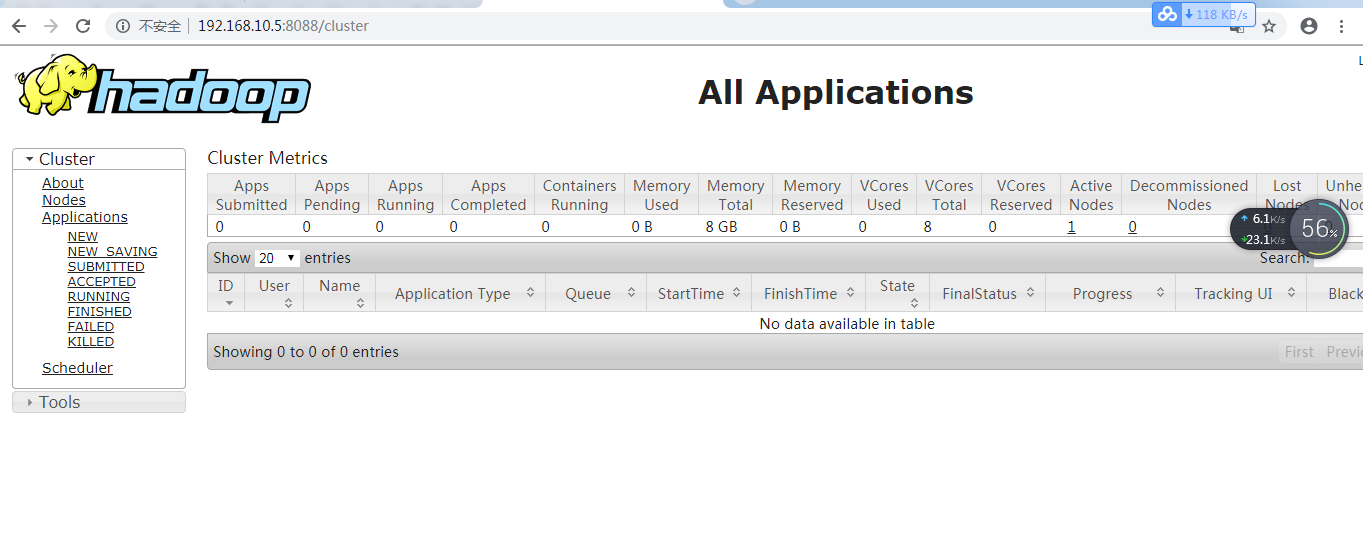

Open http://192.168.10.5:8088 in a browser to reach the YARN ResourceManager web UI.

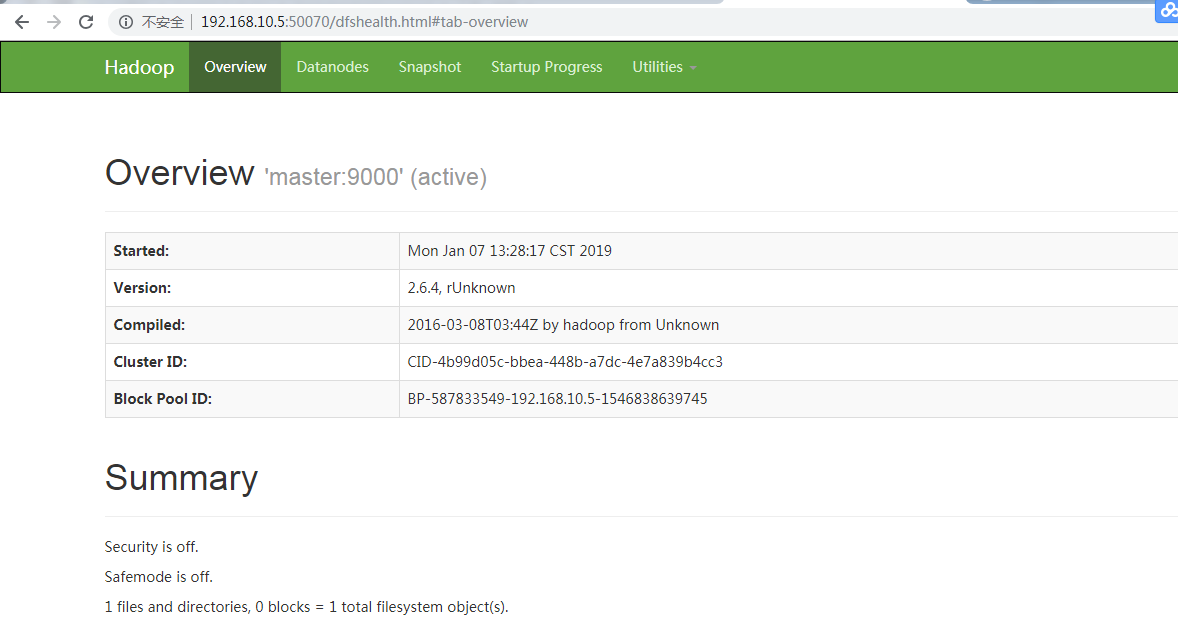

HDFS storage web UI (NameNode): http://192.168.10.5:50070
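Besides the web pages, both daemons expose JSON endpoints that suit scripted health checks: the ResourceManager REST API at `/ws/v1/cluster/info` and the NameNode JMX servlet at `/jmx`. The sketch below uses only the Python standard library. The host and ports come from the URLs above. The choice of the `FSNamesystemState` bean and of the fields read from the two responses (`state`, `NumLiveDataNodes`) is an assumption about what is worth checking.

```python
import json
import urllib.request

HOST = "192.168.10.5"  # taken from the URLs above
RM_INFO_URL = f"http://{HOST}:8088/ws/v1/cluster/info"
# Assumption: query only the FSNamesystemState bean, which carries the live DataNode count.
NN_JMX_URL = f"http://{HOST}:50070/jmx?qry=Hadoop:service=NameNode,name=FSNamesystemState"


def fetch_json(url, timeout=5):
    """GET a URL and decode the JSON body."""
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))


def rm_state(info):
    """Extract the ResourceManager state (e.g. "STARTED") from a /ws/v1/cluster/info response."""
    return info["clusterInfo"]["state"]


def live_datanodes(jmx):
    """Extract NumLiveDataNodes from the FSNamesystemState bean of a /jmx response, or None."""
    for bean in jmx.get("beans", []):
        if bean.get("name") == "Hadoop:service=NameNode,name=FSNamesystemState":
            return bean.get("NumLiveDataNodes")
    return None


if __name__ == "__main__":
    print("ResourceManager state:", rm_state(fetch_json(RM_INFO_URL)))
    print("Live DataNodes:", live_datanodes(fetch_json(NN_JMX_URL)))
```

On this single-node setup, a healthy cluster should report one live DataNode.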
