HiBench成长笔记——(3) HiBench测试Spark

很多内容之前的博客已经提过,这里不再赘述,详细内容参照本系列前面的博客:https://www.cnblogs.com/ratels/p/10970905.html

创建并修改配置文件conf/spark.conf

cp conf/spark.conf.template conf/spark.conf

参考:https://github.com/Intel-bigdata/HiBench/blob/master/docs/run-sparkbench.md,设置属性为下列值

# Spark home hibench.spark.home /opt/cloudera/parcels/CDH--.cdh5./lib/spark # Spark master # standalone mode: spark://xxx:7077 # YARN mode: yarn-client hibench.spark.master yarn-client

执行脚本

bin/workloads/micro/wordcount/prepare/prepare.sh

返回信息

[root@node1 prepare]# ./prepare.sh patching args= Parsing conf: /home/cf/app/HiBench-master/conf/hadoop.conf Parsing conf: /home/cf/app/HiBench-master/conf/hibench.conf Parsing conf: /home/cf/app/HiBench-master/conf/spark.conf Parsing conf: /home/cf/app/HiBench-master/conf/workloads/micro/wordcount.conf probe -.cdh5./lib/hadoop/../../jars/hadoop-mapreduce-client-jobclient--cdh5.14.2-tests.jar start HadoopPrepareWordcount bench hdfs -.cdh5./bin/hadoop --config /etc/hadoop/conf.cloudera.yarn fs -rm -r -skipTrash hdfs://node1:8020/HiBench/Wordcount/Input Deleted hdfs://node1:8020/HiBench/Wordcount/Input Submit MapReduce Job: /opt/cloudera/parcels/CDH--.cdh5./bin/hadoop --config /etc/hadoop/conf.cloudera.yarn jar /opt/cloudera/parcels/CDH--.cdh5./lib/hadoop/../../jars/hadoop-mapreduce-examples--cdh5. -D mapreduce.randomtextwriter.bytespermap= -D mapreduce.job.maps= -D mapreduce.job.reduces= hdfs://node1:8020/HiBench/Wordcount/Input The job took seconds. finish HadoopPrepareWordcount bench

执行脚本

bin/workloads/micro/wordcount/spark/run.sh

返回信息

[root@node1 spark]# ./run.sh patching args= Parsing conf: /home/cf/app/HiBench-master/conf/hadoop.conf Parsing conf: /home/cf/app/HiBench-master/conf/hibench.conf Parsing conf: /home/cf/app/HiBench-master/conf/spark.conf Parsing conf: /home/cf/app/HiBench-master/conf/workloads/micro/wordcount.conf probe -.cdh5./lib/hadoop/../../jars/hadoop-mapreduce-client-jobclient--cdh5.14.2-tests.jar start ScalaSparkWordcount bench hdfs -.cdh5./bin/hadoop --config /etc/hadoop/conf.cloudera.yarn fs -rm -r -skipTrash hdfs://node1:8020/HiBench/Wordcount/Output Deleted hdfs://node1:8020/HiBench/Wordcount/Output hdfs -.cdh5./bin/hadoop --config /etc/hadoop/conf.cloudera.yarn fs -du -s hdfs://node1:8020/HiBench/Wordcount/Input Export env: SPARKBENCH_PROPERTIES_FILES=/home/cf/app/HiBench-master/report/wordcount/spark/conf/sparkbench/sparkbench.conf Export env: HADOOP_CONF_DIR=/etc/hadoop/conf.cloudera.yarn Submit Spark job: /opt/cloudera/parcels/CDH--.cdh5./lib/spark/bin/spark-submit --properties- --executor-cores --executor-memory 4g /home/cf/app/HiBench-master/sparkbench/assembly/target/sparkbench-assembly-7.1-SNAPSHOT-dist.jar hdfs://node1:8020/HiBench/Wordcount/Input hdfs://node1:8020/HiBench/Wordcount/Output // :: INFO remote.RemoteActorRefProvider$RemotingTerminator: Shutting down remote daemon. finish ScalaSparkWordcount bench

查看report/hibench.report

Type Date Time Input_data_size Duration(s) Throughput(bytes/s) Throughput/node HadoopWordcount -- :: ScalaSparkWordcount -- ::

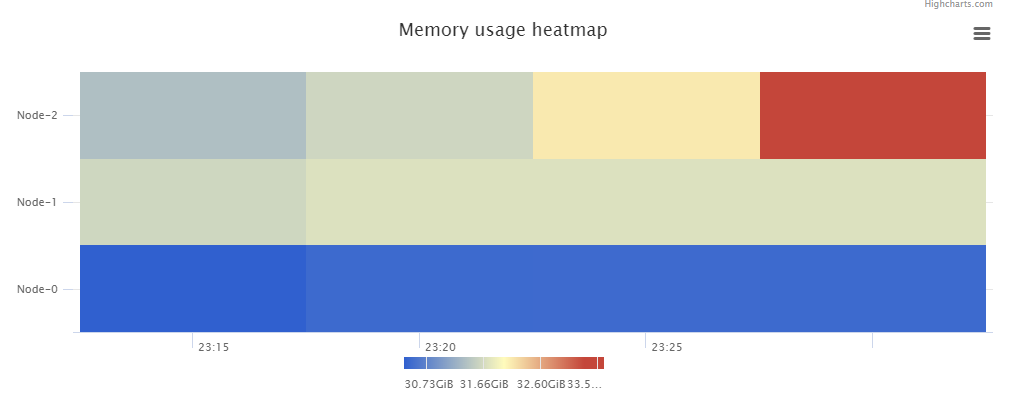

\report\wordcount\spark下有多个文件:monitor.log是原始日志,bench.log是scheduler.DAGScheduler和scheduler.TaskSetManager信息,monitor.html可视化了系统的性能信息,\conf\wordcount.conf、\conf\sparkbench\spark.conf和\conf\sparkbench\sparkbench.conf是本次任务的环境变量

monitor.html中包含了Memory usage heatmap等统计图:

根据官方文档 https://github.com/Intel-bigdata/HiBench/blob/master/docs/run-sparkbench.md ,还可以修改 hibench.scale.profile 调整测试的数据规模,修改 hibench.default.map.parallelism 和 hibench.default.shuffle.parallelism 调整并行化,修改hibench.yarn.executor.num、hibench.yarn.executor.cores、spark.executor.memory和spark.driver.memory控制Spark executor 的数量、核数、内存和driver的内存。

HiBench成长笔记——(3) HiBench测试Spark的更多相关文章

- HiBench成长笔记——(1) HiBench概述

测试分类 HiBench共计19个测试方向,可大致分为6个测试类别:分别是micro,ml(机器学习),sql,graph,websearch和streaming. 2.1 micro Benchma ...

- HiBench成长笔记——(4) HiBench测试Spark SQL

很多内容之前的博客已经提过,这里不再赘述,详细内容参照本系列前面的博客:https://www.cnblogs.com/ratels/p/10970905.html 和 https://www.cnb ...

- HiBench成长笔记——(6) HiBench测试结果分析

Scan Join Aggregation Scan Join Aggregation Scan Join Aggregation Scan Join Aggregation Scan Join Ag ...

- HiBench成长笔记——(5) HiBench-Spark-SQL-Scan源码分析

run.sh #!/bin/bash # Licensed to the Apache Software Foundation (ASF) under one or more # contributo ...

- HiBench成长笔记——(2) CentOS部署安装HiBench

安装Scala 使用spark-shell命令进入shell模式,查看spark版本和Scala版本: 下载Scala2.10.5 wget https://downloads.lightbend.c ...

- HiBench成长笔记——(7) 阅读《The HiBench Benchmark Suite: Characterization of the MapReduce-Based Data Analysis》

<The HiBench Benchmark Suite: Characterization of the MapReduce-Based Data Analysis>内容精选 We th ...

- HiBench成长笔记——(10) 分析源码execute_with_log.py

#!/usr/bin/env python2 # Licensed to the Apache Software Foundation (ASF) under one or more # contri ...

- HiBench成长笔记——(9) 分析源码monitor.py

monitor.py 是主监控程序,将监控数据写入日志,并统计监控数据生成HTML统计展示页面: #!/usr/bin/env python2 # Licensed to the Apache Sof ...

- HiBench成长笔记——(8) 分析源码workload_functions.sh

workload_functions.sh 是测试程序的入口,粘连了监控程序 monitor.py 和 主运行程序: #!/bin/bash # Licensed to the Apache Soft ...

随机推荐

- 15、python面对对象之类和对象

前言:本文主要介绍python面对对象中的类和对象,包括类和对象的概念.类的定义.类属性.实例属性及实例方法等. 一.类和对象的概念 问题:什么是类?什么是实例对象? 类:是一类事物的抽象概念,不是真 ...

- Vue.js项目的开发环境搭建与运行

写作背景:手上入一个用Vue框架写的微信公众号项目,根据公司安排,我负责项目源代码的验收工作(当然专业的工作检测会交给web开发人员,我只是想运行起来看一看). 1 开发环境安装步骤: (一)安装no ...

- SQL SERVER 语法汇总

一.基础 1.说明:创建数据库CREATE DATABASE database-name 2.说明:删除数据库drop database dbname3.说明:备份sql server--- 创建 备 ...

- NLP的比赛和数据集

整理了NLP领域的比赛.数据集.模型 比赛 网站 主办方(作者) decaNLP http://decanlp.com/ Salesforce CLUE https://github.com/CLUE ...

- 第一个Vue-cli创建项目

需要环境: Node.js:http://nodejs.cn/download/ 安装完成之后,使用cmd测试: 我现在的是最新的 安装Node.js加速器: 这个下载的稍微慢一些 npm insta ...

- 劫后余生--New Start

被搁置的计划 It was the best of times,it was the worst of times,it was the age of wisidom,it was the age o ...

- IDEA导入项目后,导入artifacts 方法 以及 Spring的配置文件找不到的解决方法

我们一般选择 open 项目,如果没有artifacts 的添加选项,我们就要选择 import 项目. 如果没有artifacts ,项目下面会有错误提示,点击错误提示Fix,设置里面导入artif ...

- redhat 7.6 网络配置

网卡配置目录 /etc/sysconfig/network-scripts/ 关闭网卡 $$ 打开网卡 ifdown ensp8 && ifup ensp8 重启网卡服务 servic ...

- mysql学习指令

mysql 用户管理和权限设置 参考文章:http://www.cnblogs.com/fslnet/p/3143344.html Mysql命令大全 参考文章: http://www.cnblogs ...

- LeetCode刷题--26.删除排序数组中的重复项(简单)

题目描述 给定一个排序数组,你需要在原地删除重复出现的元素,使得每个元素只出现一次,返回移除后数组的新长度.不要使用额外的数组空间,你必须在原地修改输入数组并在使用O(1)额外空间的条件下完成. 示例 ...